
Recently there was a bit of a furore surrounding UserBenchmark's "reviews" of AMD's latest and greatest Zen 3-based processors. I came a bit late to the party and, considering I've used the website many times in the past, I was interested in what, exactly, had gone down. Taking a closer look, I really fail to see what all the fuss was about. I've read Notebookcheck's article and I can't see how what they're saying lines up with what's actually on the website. I've looked at the complaints on Twitter and I don't entirely see what the issue is.

So let's get into it...

|

| Hmmm. "Aggressive and successful marketing campaign", you say? Isn't that usually called "good value for money"? |

The bad...

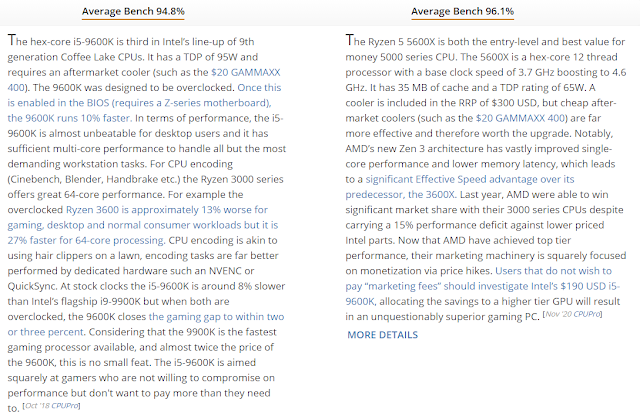

First things first, let's acknowledge and get out of the way the one point of common ground that everyone agrees on: whoever writes the concluding thoughts for each product (it's allegedly an owner of the site) has a MAJOR, almost incoherent Intel bias. We're not just talking a slight leaning here. This is full-blown "almost conspiracy theory" territory, where advances in market share are often chalked up to "marketing" and insinuated unsavoury behaviour.

But that's not the entirety of the site... so let's move on.

|

| Look, you clearly have an opinion. It might not be entirely correct, but I can't say it's entirely incorrect... |

The not-so-bad...

Aside from the fact that this person (or, more likely, persons) appears very biased and a little unhinged, what they are saying isn't technically untrue or inaccurate... and I'm surprised that the people complaining are deaf or blind to this, considering they are technical and scientific people themselves.

Let's look at this Ryzen 5 5600X "conclusion" (note: not a review) above.

- Specs are correct

- Price is correct

- Comment on cooling - a personal preference but not incorrect

- Zen 3 arch improvements - correct

- Comment on Zen 2 gaming performance versus processors with fewer cores that perform similarly in the majority of games - correct

- "Marketing machine price hikes" - nonsense

- Price increase per level of SKU - correct

- Advising gamers to put more money into a GPU to get more performance - correct

- Saying an i5-9600K will provide around the same level of gaming performance as a 5600X? - Apparently, correct!*

* I did a lot of searching around for reviews of the Zen 3 parts and didn't find any of this information. This will come up in the next section as a point in UserBenchmark's favour...

Now, I may be crazy here... but Intel bias aside, where's the problem? An i5-9600K is £189 and an R5 5600X is £299 (or would be, if it were in stock anywhere after it sold out due to high demand!). Looking at the comparison I was able to find* (again, not on any reputable review site), would an average gamer notice a difference of a few FPS on their (most likely) 60 Hz or 120 Hz 1080p monitor?

Would that average gamer be running XMP and optimised dual-channel memory configurations? Would they be better served buying an RTX 2060 (£307) instead of a GTX 1660 Super (£208)? I'd argue that they would... Admittedly, a decent Z390 motherboard costs £99 compared to £78 for the cheapest B550M motherboard, which trims the money available for a graphics card down to £297. For that you could still manage to grab an RTX 2060 KO for £286... or an RX 5600 XT for a similar price. Which, again, is a better investment of money for a gaming system.
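For anyone who wants to check the sums, here is a quick sketch in Python. It only uses the prices quoted in this post (which will have moved since); the variable names are just illustrative, and the RX 5600 XT is left out because no exact price is given for it.

```python
# Prices in GBP exactly as quoted in the post; they are a snapshot, not current.
intel_build = {"i5-9600K": 189, "Z390 motherboard": 99}
amd_build = {"R5 5600X": 299, "B550M motherboard": 78}
baseline_gpu_price = 208  # GTX 1660 Super

intel_total = sum(intel_build.values())
amd_total = sum(amd_build.values())

# Money freed up by choosing the cheaper CPU + motherboard pairing.
saving = amd_total - intel_total

# That saving goes on top of what would have been spent on the GPU anyway.
gpu_budget = baseline_gpu_price + saving

# Graphics cards with an exact price quoted in the post.
gpu_options = {"RTX 2060": 307, "RTX 2060 KO": 286}
affordable = [name for name, price in gpu_options.items() if price <= gpu_budget]

print(f"Intel CPU + board: £{intel_total}")
print(f"AMD CPU + board:   £{amd_total}")
print(f"Saving:            £{saving}")
print(f"GPU budget:        £{gpu_budget}")
print(f"Fits the budget:   {affordable}")
```

The point of laying it out like this is that the comparison is between whole platforms (CPU plus motherboard), not just the two processors on their own.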

* Just in case people don't pick up on it, review sites only compared against the 10600K, so I was just confirming the % difference between the 9600K and the 10600K with the second video. You can also find that comparison elsewhere... though Google doesn't help you because none of those video reviews can be indexed!

This argument also scales to the 5800X, 5900X and 5950X - which is also a point made by this person. Now, I might disagree with them on the basis of this being a dead-end platform with zero future-proofing but, given the statistics on how many people actually upgrade their CPU within the same motherboard (it was mentioned in a YouTube video - which I now cannot find! But *hint*: it's quite a low % of all buyers...), that doesn't really seem to matter. Any way you slice it, a 9600K gives *equivalent* performance to a 5600X for less money overall (including motherboard). The lack of PCIe gen 4 means nothing when playing at 1080p/1440p on a graphics card that can't even saturate a gen 3 x16 bus.

Sure, the delivery of this "conclusion" isn't ideal but the actual facts are there for all to see - most of all those tech personalities and fans that are having a fit over this...

Notebookcheck's article is especially incoherent in this regard:

'Looking solely at the results of the Ryzen 9 5950X, which has been described as offering "a new level of consumer grade performance across the board" by the respected review site Anandtech, and it is clear to see the Vermeer CPU has benchmarked well on UserBenchmark. Unsurprisingly, the 16-core part amasses a huge score in the 64-core test (results in screenshots below) but as is well known UserBenchmark doesn't place too much emphasis, or score, on multi-core benchmarks.'

This argument is disingenuous because, as all of these review sites know, more cores do not equal more gaming performance. Unfortunately, due to binning, product segmentation and silicon quality, the processors with more cores tend to have higher sustainable and base frequencies... so it is not actually the higher core count that is contributing to better gaming performance.

|

| Gaming performance is 2% difference - not overall performance... |

Despite this fact, Notebookcheck then go on to say there's no sense in the 5950X winning the per-core benchmarks but losing in the ranking to the 10900K... only, there is?! It's a benchmarking tool fed specifically by users, and the 5950X has an incredibly small sample size right now (342 at the time of writing). There's no way to tell if there is some sort of statistical weighting based on sample size. If UserBenchmark have any sort of statistical knowledge, then there should be! Small sample sizes tend to give unrepresentative results. I think it's entirely plausible that we'll see the positions switch as more results are entered into the database by users.

In fact, you can clearly see that the speed rank of the 5950X is adversely affected by the low number of samples and the relatively high quantity of them clocked at a slower frequency than they should be. That's on consumers - not UserBenchmark.
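To put a rough number on why sample size matters, here is a minimal Python sketch of the standard error of a mean. It assumes the user runs are independent, and the spread of 10 points between individual runs is a made-up figure purely for illustration - it is not a measured UserBenchmark value, and nothing here reflects how the site actually computes its rankings.

```python
import math


def standard_error(std_dev: float, n: int) -> float:
    """Standard error of a sample mean: std_dev / sqrt(n)."""
    if n <= 0:
        raise ValueError("sample size must be positive")
    return std_dev / math.sqrt(n)


# ASSUMPTION: illustrative spread between individual user runs, in score points.
ASSUMED_STD_DEV = 10.0

# 342 is the 5950X sample count mentioned in the post; the others are
# arbitrary larger sizes for comparison.
for n in (342, 5_000, 100_000):
    half_width = 1.96 * standard_error(ASSUMED_STD_DEV, n)  # ~95% interval
    print(f"n={n:>7}: average uncertain by roughly +/-{half_width:.2f} points")
```

Under that assumed spread, 342 samples leave the average uncertain by about a point either way, which is the same order of magnitude as the gap being argued over. With more submissions the uncertainty shrinks with the square root of the sample count.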

Even if the results don't change, are we really bothered by a 2% difference either way?! Okay, let's say we are. But then look at the way the performance is rated (above): it's specifically gaming performance, so the result is naturally skewed towards performance on fewer cores. They even give you a definition of their effective speed for this avg% right there in the table header, and it tells you it's a metric for general consumers - not workstation applications. Why are Notebookcheck calling foul at minimising 64-core performance in that regard?

|

| I can't even call favouritism on UserBenchmark's part - they're advocating cheaper and older CPUs for all Intel's 10th gen parts... including the 10900K |

The good...

However, a lot of the disquiet from commentators has been about the data and this is where I am completely lost in the argument. As far as I can see, UserBenchmark's data is "good" in that it is a benchmark with pre-defined objectives and is run on user-submitted systems.

Both Gamers' Nexus and Hardware Unboxed have been saying that the data is terrible or that the site itself is terrible and that other, 'more worthy' sites (such as Anandtech) should be getting the traffic UserBenchmark is. Well, I think they both have good intentions but they're coming at this from the wrong perspective, in my opinion.

In much the same way that any benchmark is biased and limited in the story it tells about a product, UserBenchmark also has its limitations. However, they are KNOWN limitations and it is KNOWN that the site prioritises single core and four core performance on CPUs (I'm not going to get into GPUs for this blogpost). It might be a very academic thing to say but, coming from a scientific background, as long as they do not lie about what they're doing (they don't and no one has accused them of that as far as I know) then it is up to the user to decide how to absorb the data they provide.
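As a rough illustration of why a known weighting matters, here is a small Python sketch. Everything in it is hypothetical: the weights are NOT UserBenchmark's actual formula and the two CPUs and their sub-scores are invented. It only shows that the same sub-scores can rank differently depending on which workloads the weighting prioritises.

```python
def effective_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of sub-scores; weights are normalised to sum to 1."""
    total_weight = sum(weights.values())
    return sum(scores[name] * w for name, w in weights.items()) / total_weight


# HYPOTHETICAL weightings, chosen only to contrast two priorities.
gaming_weights = {"single": 0.45, "quad": 0.50, "multi": 0.05}
workstation_weights = {"single": 0.10, "quad": 0.20, "multi": 0.70}

# HYPOTHETICAL sub-scores for two made-up processors.
fewer_cores_cpu = {"single": 100, "quad": 100, "multi": 60}
many_cores_cpu = {"single": 95, "quad": 96, "multi": 130}

for label, weights in (("gaming", gaming_weights), ("workstation", workstation_weights)):
    a = effective_score(fewer_cores_cpu, weights)
    b = effective_score(many_cores_cpu, weights)
    winner = "fewer-cores CPU" if a > b else "many-cores CPU"
    print(f"{label:>11}: fewer={a:.1f} many={b:.1f} -> {winner} ranks higher")
```

Neither ranking is "wrong"; each one answers a different question, which is why it matters that the weighting is stated openly.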

Secondly, this is not a "review" site. It is a comparison site that is focussed on real-world performance. You cannot compare this data to that of a controlled environment like that provided by Gamers' Nexus*, Hardware Unboxed or Anandtech (along with practically countless other tech outlets), which cover each product in a high level of detail on a standardised (as much as is possible) platform.

* I'm writing this with an apostrophe because that's correct English (as far as I can tell) but I think the site is technically without an apostrophe? That's kinda weird and I never noticed it until now.

So, yes, if you are talking about purely scientific testing then the data on UserBenchmark is kinda crap. However, NONE of these tech sites can test every single CPU out there against the latest and greatest and, in fact, most of them just test the same comparisons whenever a new piece of tech is released. I can watch ten different YouTube videos showing the same graphs in different colours and read five more articles covering the same information. It's duplication. Of course, duplication of data (otherwise known as independent confirmation of results) is valuable. But that's not the only data of value to consumers.

This is where sites like Geekbench and UserBenchmark come to the fore.

They're not the best quality of data out there but they are universal. You can compare virtually any part/product you can think of and get a decent overview out of that comparison - it's usable data. Better yet, it's not inaccurate. It just might not be 100% precise.

Sites like the venerated Anandtech are amazing but their datasets are very limited (even though Anandtech's "Bench" dataset is far more comprehensive than the majority of individual reviews) and they are also, quite frankly, not user friendly.

|

| Actually, broken up like this, this small section isn't too bad to read but imagine an almost infinite scrolling number of these benchmarks. Also, the faded-out left-hand side of the bar charts, small font size on the left and result numbers far out on the right is terrible for legibility... Plus, a user unfamiliar with the website would have trouble finding the tool in the first place!! It's not even highlighted or anything... |

Anandtech's Bench is practically hidden on the front page of the site, with complete focus given to the news/reviews sections of the enterprise (which is perfectly fine, but it makes the point that this is a minor side project of minor importance to the site). If, and this is a big IF, a user knows about the tool, knows what it is called and finds their way to it then they face yet more hurdles to accessing the useful, accurate and precise information that is locked therein:

- The list of CPUs is not searchable

- You cannot filter types of benchmarks when comparing specific CPUs

- At 25% scale in Chrome, it takes four complete bars to get to the bottom of the benchmark list

Now, I can't argue that this is not thorough and complete. What I can argue is that this is overwhelming and hard to parse for a large swathe of users. I actually know a thing or two about presentation of data (maybe you hate my blog and think this is a joke but I have won an award for this in a prior company I worked for) and this is, quite frankly, dire.

Further to this, YouTube videos are terrible at conveying information in a dense format. They are also terrible for finding specific information. You may get a search hit for what you're looking for or you may not and, if you do get a video, you have to watch 10-20 minutes of things you didn't want in order to know whether your information was there in the first place. Compared to a webpage, it's very inefficient.

So, the incredulity that UserBenchmark gets more traffic than more obscure, harder-to-use sites and YouTube channels with poorer SEO just baffles me.

|

| This is actually useful data... and when I run this benchmarking tool on my own rig, I can see where it sits in that set of data. |

Another thing I regularly use the site for is their "curves". When you benchmark a system, you can see where, on the spectrum of user-submitted results, your system lies and whether something is very wrong or perfectly right. That can help a user identify a potential issue with their build - something that no synthetic, controlled review could ever hope to accomplish. This data is also true and accurate, providing a picture of real consumers' systems.
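The idea behind reading those curves can be sketched in a few lines of Python. The scores below are invented for illustration, the function name is hypothetical, and the 20% cut-off is an arbitrary choice; this is not how UserBenchmark itself computes or draws its distributions.

```python
from bisect import bisect_right


def percentile_rank(population: list[float], score: float) -> float:
    """Percentage of submitted scores that are less than or equal to `score`."""
    if not population:
        raise ValueError("population must not be empty")
    ordered = sorted(population)
    return 100.0 * bisect_right(ordered, score) / len(ordered)


# MADE-UP scores for one CPU model, as if submitted by ten different users.
submitted = [71.0, 78.5, 80.2, 81.0, 82.4, 83.1, 83.9, 84.6, 85.0, 86.3]

my_score = 72.0  # the result from your own rig
rank = percentile_rank(submitted, my_score)

# ARBITRARY threshold: treat the bottom fifth as worth investigating.
if rank < 20.0:
    print(f"Percentile {rank:.0f}: well below typical, check cooling, RAM config, etc.")
else:
    print(f"Percentile {rank:.0f}: in line with other users' systems.")
```

A result sitting far down the distribution for identical hardware is a hint that something in the build (thermals, memory configuration, power settings) is holding it back.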

In conclusion...

The thing you have to realise is that the traffic UserBenchmark garners is not undeserved traffic and it certainly provides a valuable service to many people, worldwide, as much as review sites do.

It's just a different type of service.
