|

| Again, literally what happens... |

It may have escaped your notice that I picked up an RX 6800 last November. It's not been entirely smooth sailing, however: two inexplicably dead DisplayPorts later*, I think I've managed to get a handle on how to scale the RDNA 2 architecture.

*These failures were completely unrelated to any tinkering on my part: they happened when bringing the computer back from sleep, so I no longer keep my PC in sleep mode. I never had a problem with it on my RTX 3070!

Last year, I looked at the power scaling of the RTX 3070 because I was conscious about getting the most out of my technology, with a focus on efficiency (both power and performance). So, I'm applying that same focus to the new piece of kit in my arsenal. Come along for the ride...

Princes and paupers...

There's quite a difference between the process of optimising Radeon cards and their GeForce counterparts. Astonishingly, Nvidia's cards are not really locked down, whereas AMD's offerings are pretty limited in the options available to the consumer through first- or third-party apps... at least, at a surface level.

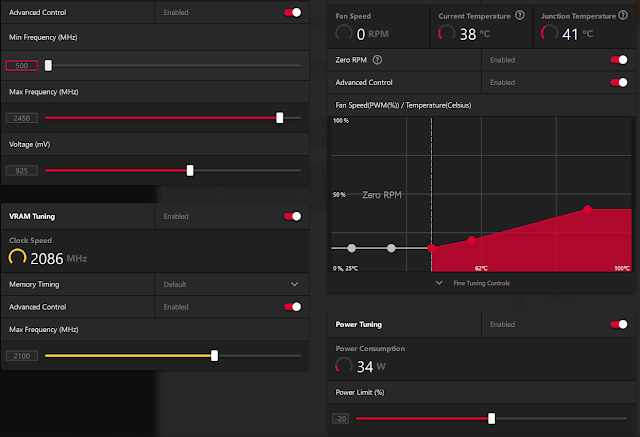

I was not generally aware of this until after I purchased the card and set out to optimise its performance per Watt. At stock, the user can only minimally adjust the power limit, though clock frequency and core voltage are relatively free to adjust. The memory frequency, on the other hand, can only be increased (to be fair, I would not want to decrease it... but it could be useful for scientific purposes).

|

| A lot of people say that you should raise the minimum core frequency along with the maximum target... but I found that it introduces unnecessary instability in certain scenarios. |

In order to actually do what I set out to do for this article, I had to rely on the community tool called MorePowerTool, hosted over at Igor's Lab. It's a very detailed programme that allows the user to really delve into the inner workings of their GPU and affect its performance in many ways.

Unfortunately, the documentation isn't that great and the example walk-through given at the download page explicitly tells potential users NOT to do the thing they must do in order to use it as I have done.

That is, you must press "Write SPPT" (Soft Power Play Table) to actually change the accessible range of the exposed settings on your GPU. It's a very simple step, but one that took me a lot of time to actually confirm...

At any rate, I'm not currently interested in playing around with anything beyond power limiting the card, which is all I need in order to perform my testing.

This is where the second difference between Nvidia cards and AMD cards rears its head:

An Nvidia card will effectively throttle the core clocks when power is limited on the card, without the user having to put a hard limit on the card's performance. Instead, the user needs to provide the card with enough voltage to achieve whatever frequency is defined in the voltage frequency curve. AMD cards, or at least the RX 6000 series, will attempt to boost to the maximum set core frequency, whether or not there is enough headroom under the power limit or enough voltage to do so. Even if there IS enough voltage to maintain the clock, an insufficient power limit will still result in a crash of some sort...

This difference in behaviour means that stability in one programme does not mean stability in all programmes (in a less predictable manner than for Nvidia cards). It may also indicate why AMD have chosen to lock down their graphics cards so heavily (even more so for the RX 7000 series*): to avoid support threads from people mucking around with the operation of their cards and causing driver and/or game crashes.

*Though this is looking more likely to be the result of AMD's relative lack of gen-on-gen performance increase, leading to them having to artificially limit the potential performance of the products in the RX 7000 series, so that the user cannot bump up their purchase to meet or exceed the product above...

That doesn't make the choice to do that any easier to swallow for their more experienced customers, though.

|

| Unigine Heaven just didn't scale until I was forced to drop the core frequency at the 55% power limit... |

Performance scaling...

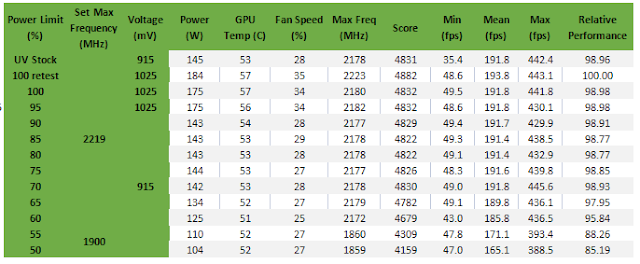

So, knowing what I just laid out above, you can understand why the scaling tests performed here are as they are. When overclocking, the stable boost clock requires enough board power to not crash; as the power limit is decreased, the same scenario is reached, just in an underclocked situation.

First up, to explore the scaling of the RX 6800, I went to the trusty Unigine Heaven benchmark. Attempting to repeat what I did with the RTX 3070, I set a modest overclock on both the core and memory, whilst undervolting, and attempted to scale back the power limit to a minimum of 50% in comparison with the stock settings.

Unfortunately, Heaven is just too old and too light a workload for the RX 6000 series and I essentially saw no real scaling down to the 65% power limit - performance was flat, almost identical to the stock result.

What this appears to show is that because Heaven is not demanding enough, the card is able to reach high clockspeeds, without needing more power to keep those speeds stable. Additionally, I was worried that portions of the die, or more accurately functions of the silicon design, that would require more power or voltage to work at high clocks are not activated and, thus, not stressed.

So, I needed to corroborate this result with another type of workload.

|

| Performance is flat... Heaven just isn't demanding enough. |

Picking out Metro Exodus Enhanced Edition as a demanding RT benchmark, I set out to see how the scaling would progress. I was rewarded with a similar scaling of performance with power (actually a little worse), and so I concluded that this could be normal behaviour for the RX 6000 series.

With this application, to avoid crashes and artefacts at lower power limits, I had to play with both core voltage and frequency - showing that, indeed, this type of workload was more stressful on the silicon than Heaven.

|

| Performance drops very quickly at 55% power limit.... |

But this is a very specific type of game engine... would I get a flatter profile, like we did in Heaven, at the same power in a more generalised stressful workload? Last time, I had used Unigine Superposition to test the power scaling of the RTX 3070 and found that performance started to properly drop around the 75% power mark (165 W).

Of course, total power draw is not accurately comparable between the two products because of the way that Nvidia and AMD report measured power to the available sensors. So, in theory, we always need to add some amount on top of the value reported in programmes like HWiNFO. I'm not going to do that here because I would just be guessing the amount.

However, the ratio between 100% and each step can be compared and in Metro Exodus we're seeing a drop at around the 65% mark, which would mean that, potentially, the RX 6800 can be much more efficient in its operation... but let's compare apples to apples.
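As a rough sketch of how the percentage steps map onto reported board power, the snippet below back-calculates the RTX 3070's 100% figure from the 75% = 165 W data point mentioned above. The function names are my own inventions, the list of steps is just illustrative, and no RX 6800 wattage is assumed. These are software-reported values, not true total draw.

```python
def full_power_from_point(watts: float, limit_percent: float) -> float:
    """Infer the 100% power figure from one known (watts, percent) point."""
    return watts / (limit_percent / 100.0)


def watts_at_limit(full_power_w: float, limit_percent: float) -> float:
    """Reported power budget at a given power-limit percentage."""
    return full_power_w * limit_percent / 100.0


# Data point from the RTX 3070 testing: 75% power limit = 165 W.
rtx3070_full_w = full_power_from_point(165.0, 75.0)

# Illustrative power-limit steps; adjust to whatever steps you actually test.
for step in (100, 85, 75, 65, 55, 50):
    print(f"{step:>3}% -> {watts_at_limit(rtx3070_full_w, step):.0f} W")
```

Comparing cards by percentage step rather than by these wattages sidesteps the sensor-reporting difference between the two vendors.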

|

| RT performance drops quite quickly with the reduced power limit... |

Knowing that the card was now stable in a demanding workload that tests all parts of the silicon and not throwing errors and artefacts with these clocks, voltages, and power limits, I was now able to apply these to the same benchmark I used when looking at the RTX 3070 - Unigine Superposition.

|

| Unigine Superposition scales nicely, but if we didn't have that substantial overclock the results would be much worse... |

Something that I observed during this testing, and I'm not sure if it's a 'me' thing or not, is that RDNA2 can get relatively large gains in performance from undervolting and overclocking compared to Ampere. Here, we're looking at 9% performance increase above stock at the same power draw. That's pretty impressive given that I was not seeing the same when testing the 3070.

Sure, I'm getting a 9% uplift with 231 extra MHz, but with Ampere I was getting a 1% uplift with 60 MHz... if it scaled the same, we'd expect roughly 2.3%.
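The comparison above is a simple linear extrapolation, which can be sketched as follows. It assumes performance scales linearly with added core clock (a simplification that real hardware won't follow exactly), and the function and variable names are hypothetical.

```python
def uplift_per_mhz(uplift_percent: float, extra_mhz: float) -> float:
    """Performance uplift (in percent) gained per extra MHz of core clock."""
    return uplift_percent / extra_mhz


# Figures quoted in the text above.
rdna2_rate = uplift_per_mhz(9.0, 231.0)   # RX 6800: 9% from +231 MHz
ampere_rate = uplift_per_mhz(1.0, 60.0)   # RTX 3070: 1% from +60 MHz

# What +60 MHz would be worth if Ampere scaled at the RDNA 2 rate.
ampere_if_scaled_like_rdna2 = rdna2_rate * 60.0

print(f"RDNA 2: {rdna2_rate:.4f} %/MHz")
print(f"Ampere: {ampere_rate:.4f} %/MHz")
print(f"Ampere at RDNA 2's rate: {ampere_if_scaled_like_rdna2:.2f} %")
```

Under that linear assumption the expected figure lands a little over 2%, against the 1% actually observed.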

So, Ampere doesn't scale or clock as well as RDNA 2 does. The big difference is that Ampere didn't need its hand held to determine what stable core frequency it could maintain at any given power/voltage limit.

|

| Scaling performance with power shows the efficiency of the architecture... |

If I had just jumped straight into Superposition, I wouldn't have arrived at these numbers: when I tried raising the clock during this testing, I found that the card could complete the benchmark in configurations that were provably not stable in Metro Exodus (and presumably other applications, too).

In a way, this makes RDNA 2 more dangerous for casual overclockers like myself because there is a higher chance that they could be running an unstable configuration on their card, thinking that any crashes they encounter are due to poorly optimised software.

Going back to stock scaling, without an overclock, the RX 6800 starts really losing performance at the 85% power limit and, from the above chart, we can see that it's because the core cannot maintain the specified frequency.

If that were the whole story, then the AMD card would be the loser, here. As it stands, it's really the winner because that drop happens from a 9% advantage - noting the caveats mentioned above.

So now that we've explored the scaling and found correct and stable configurations at each power limit, let's look at how much performance we're losing across the same applications as we did for the RTX 3070...

50% scaling...

Looking over the results, we get a geomean of around 0.85x - approximately the same as with the RTX 3070. The big difference is that Metro is the application dragging the average down here, in contrast to it buoying the results of the 3070.
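For anyone wanting to reproduce a figure like this from their own runs, here is a minimal sketch of the geomean calculation. The application names and fps values are placeholders, NOT the measurements behind the number quoted above, and the helper names are my own.

```python
import math


def relative_performance(limited_fps: float, stock_fps: float) -> float:
    """Performance at the reduced power limit as a fraction of stock."""
    return limited_fps / stock_fps


def geomean(ratios: list[float]) -> float:
    """Geometric mean of positive ratios (exp of the mean of the logs)."""
    if not ratios or any(r <= 0 for r in ratios):
        raise ValueError("geomean needs a non-empty list of positive ratios")
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# Placeholder (stock_fps, limited_fps) pairs - substitute your own results.
placeholder_results = {
    "app_a": (100.0, 90.0),
    "app_b": (100.0, 80.0),
}

ratios = [
    relative_performance(limited, stock)
    for stock, limited in placeholder_results.values()
]
print(f"geomean: {geomean(ratios):.2f}x")
```

The geometric mean is used rather than the arithmetic mean because the inputs are ratios, so one outlier application drags the result less.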

If there's one takeaway from that, it's that in future games that incorporate ray tracing, performance scaling will be worse for the AMD card... and that's not a surprising result.

|

| I find it impressive that minimum fps is not affected as much as the maximum... |

What I will note, though, is that due to the massively oversized cooler, temperature and associated fan noise were never really a concern during the entirety of this testing - in fact, I stopped listing the fan speed in later tables because of the very low rpm when in operation.

There are two big things to note, though. First, the fan curve out of the box for this XFX card was ABSOLUTELY HORRENDOUSLY LOUD... *ahem*. Seriously, it was obnoxious to the point of driving me insane. That was luckily fixed by tweaking the fan curve in Adrenalin. Which brings us to the second point...

The Radeon software is just more buggy than GeForce's. Even at stock settings, with just my fan curve applied, Adrenalin's tuning settings will crash (sometimes at system boot), returning everything to stock - without the user even being aware unless they load up the application to double check.

Adrenalin also had some small conflicts with MSI Afterburner being installed (the aforementioned fan curve could not be set, initially, until Afterburner was uninstalled).

|

| Scaling in various applications is pretty good... |

I also could not work out how to get the monitored parameters showing on the screen in-game without recording them, like you can with FrameView, Afterburner/RTSS, and CapFrameX. Maybe you can't even do that... which seems a huge oversight.

[EDIT 19/Feb/2023]

It seems that I had encountered a software bug in Adrenalin: the setting I needed to select was, for some reason, hidden until it was enabled. The only way to find it and select it was to search in the application search bar for the keyword "monitoring". Now, I have the full functionality available and the option shows up in the menu like it should.

I also dislike how much AMD is locking down their GPU hardware. I understand that they wouldn't want to let people dangerously overclock or overvolt, leading to hardware damage, but undervolting and downclocking can do no such thing... there's no reason to stop any enthusiast from trying to save as much energy as they wish.

Conclusion...

AMD's RDNA2 architecture has a good amount of overclocking potential hidden behind the curtain but that power needs to be thoroughly tested in multiple applications in order to actually prove what is stable, due to the way that performance is tied to the core and memory clock frequency. In contrast, Ampere appears to have less extra in the tank, so to speak, but is much more adjustable and friendly to manipulate by the user.

Unfortunately, for the user to really get the most out of their expensive graphics card, they need to go behind AMD's back and get help from the community. This shouldn't be the case and, sadly, it's worse for the current generation of GPUs.

Interestingly, once you've gotten around the hurdle that AMD have put in your path, both the RTX 3070 and the RX 6800 manage to achieve approximately 85% of their stock performance at 50% of their power limit, showing that both architectures are equally power efficient when they are set up to be.

Looking at the benchmark, Superposition, at stock the RTX 3070 leads in performance, but once overclocked, the RX 6800 blasts it out of the water. Moving to a 50% power limit, both cards deliver approximately the same performance for around the same power draw (approx. 110 W).

It has been said many times that Ampere was a very power-inefficient architecture, but my experience shows that it's basically just as efficient as RDNA2, with the caveat that it cannot clock as high due to the Samsung process node. Additionally, as we have known for a while now, Ampere's doubling of the FP32 units does not correspond to an actual doubling of use, due to the dual nature of the second set.

So, dividing the shader unit number (5,888) by an approximate 1.5x modifier gives us around 3,925 - very close to the number in RDNA2's RX 6800 (3,840), which explains their relatively close performance scaling (except at the high end).
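The arithmetic here can be checked in a few lines. This sketch assumes the 1.5x modifier is applied as a divisor to Ampere's advertised FP32 count; the modifier itself is a rule of thumb rather than a measured value, and the constant names are my own.

```python
# Shader counts quoted in the text above.
AMPERE_RTX3070_FP32_UNITS = 5888
RDNA2_RX6800_SHADERS = 3840

# Assumption: the second set of FP32 units is only partly usable,
# so the advertised count is divided by roughly 1.5.
ASSUMED_MODIFIER = 1.5

effective_ampere_units = AMPERE_RTX3070_FP32_UNITS / ASSUMED_MODIFIER
ratio_to_rdna2 = effective_ampere_units / RDNA2_RX6800_SHADERS

print(f"Effective Ampere units: {effective_ampere_units:.0f}")
print(f"Ratio to RX 6800 shaders: {ratio_to_rdna2:.3f}")
```

With that modifier, the two cards come out within a few percent of each other in effective shader count.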

With this knowledge obtained, it seems that, in hindsight, it was obvious that Nvidia's Ada GPUs would be massively more efficient than AMD's RDNA3. But that's it for now - hope you enjoyed the post!
