
One question I've often wondered about is how much performance varies between individual GPUs we buy as consumers. Sure, different models might have different performance/temperature profiles because of their power delivery or cooling design, but chip-to-chip variation within the same model has not really been tested - at least as far as I've been able to discover!

Since I have access to two GPUs of the same model, I thought I'd take a VERY limited look into the issue...

What's in a Chip...?

This question has been explored from the CPU side of the equation multiple times. The upshot of those tests was that there are small unit-to-unit differences in performance, on the order of 1-2%. All of those tests also had small sample sizes (the largest was 22 units of the same product) and, for a CPU, the system around it* can have a larger effect than the differences between individual pieces of silicon.

*Motherboard design, cooler/heatsink design, effective heatsink mounting, case airflow, ambient temperature, etc.

The same logic applies to GPUs, with many of those peripheral system effects still in play; the main difference is that a GPU comes as a complete assembly (chip, board, power delivery and cooler). So, in theory, inter-unit variation within the same product should be low.

Why hasn't this sort of testing been performed on GPUs? Well, unfortunately, they generally cost a lot more than CPUs* and getting hold of multiple units of the same SKU is difficult**. I'm a little lucky in that, back in 2020, I grabbed two RTX 3070s, one for myself and one for my dad, when a batch became available, as we both needed a GPU. Amazingly, this batch wasn't bought up by an army of bots within seconds of being listed, but I did pay over the odds for them, so... Yay!?

Anyway, I was able to actually play games (including Cyberpunk 2077), so that was a decent Christmas present to myself...

*You can buy a mid-range CPU for $200 - 300 but a mid-range GPU is typically $500 - 700, nowadays...

**Unless you happen to be a cryptocurrency miner...

The one area where there appears to be active community* research is in the supercomputer and large-scale data management arenas. This community relies heavily on consistent long-term performance and low short-term variability from the individual units in their arrays so, logically, they focus on these effects.

*As opposed to the manufacturer...

That said, their conclusions are geared more towards mitigating problems to maintain the uptime and output of these "big iron" systems than towards identifying individual unit variation, though one should be able to tease that information out of their underlying data.

Reading through the aforementioned papers, you can see the broad conclusions are:

- Cooling solutions are important for consistent temperature and frequency operation.

- Scheduled maintenance is important to keep the GPUs clean and the TIM (thermal interface material) refreshed.

- Power-off cycles, or low-frequency operation during lulls, are important to mitigate NBTI and PBTI (negative and positive bias temperature instability).

As such, there isn't much data available for us to look at inter-unit performance variability.

|

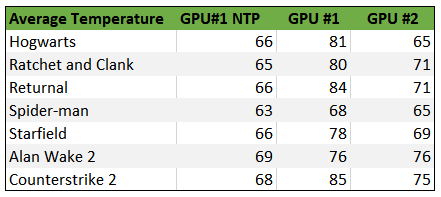

| Same system, same drivers, same day... except for the NTP results. |

Interesting results...

Maybe I'm burying the lede with that heading, but I found the results really interesting! Aside from the fact that all results are within 5% of each other (not surprising), having some tests perform better on one card whilst others performed worse was a surprise to me.

The test system was as follows:

- i5-12400

- Gigabyte B760 Gaming X AX

- 32 GB DDR5 6400

- Zotac Gaming RTX 3070 Twin Edge OC (x2)

- Windows 10 (latest version)

- GeForce driver 551.52*

*Yes, this testing was performed a while ago... Unfortunately, I'm a busy person!

You see, the thing is that these two cards are not exactly identical in their history: one has spent four years in a warm country and the other has spent that time in the cold northern parts of the UK. Granted, not all of that time has been spent powering through graphically intensive games, but it surely plays a part. I think this helps explain the results because, when I put the performance numbers alongside the GPU temperature data I also logged during testing, we see a slight difference...

GPU#1 is consistently hotter than GPU#2...

Yeah, that's not a very small difference! GPU#1 (my GPU) ran, on average, 10 °C hotter than GPU#2 in the same workloads, though the gap really depended on the workload. For instance, Alan Wake 2 showed zero difference (despite being a very GPU-heavy game), whereas Hogwarts Legacy showed a 16 °C delta between the two cards!

If we look at the relationship between the temperature delta and the performance delta, we get this nice little graph showing that, at higher temperatures, we tend to get those slight dips in gaming performance. Now, that's not an unknown phenomenon for silicon-based computing; on NVIDIA cards, for instance, GPU Boost steps clock speeds down as the core temperature rises. Hell, the logic applies to all types of computing, just not necessarily over the same operating range. What I decided to do was take a look at the thermal paste/pads on GPU#1 and see what might be causing the issue.

Unfortunately, in my excitement/nervousness about dismantling the card (which was incredibly easy compared to other cards I've taken apart), I forgot to take a picture. However, the machine-applied thermal paste had mostly migrated off the GPU core, leaving patchy, thin coverage.

So, I cleaned up the core, applied fresh thermal paste and retested; this is what I'm calling the "NTP" (New Thermal Paste) result.

Hotter bad, colder good! ...Who knew?!

We can see a substantial reduction in temperature in every test, with GPU#1 now running even slightly cooler than GPU#2, which suggests that GPU#2 has also lost some thermal transfer efficiency over the years, just not as much. With the NTP applied, the spread of temperatures shrinks by a large margin and the results cluster more closely around those of GPU#2, though they still show some variation.

It's clear to me that the thermal paste I applied is doing a better job than the cheap stuff the manufacturer used, because I immediately noticed that fan noise was significantly reduced during testing. Even when I first got the card back in 2020, it was quite loud; that was the only reason I wasn't very happy with the model, despite liking its form factor. Now it purrs like a kitten!

However, despite the obvious acoustic improvements, and aside from the odd game like Hogwarts Legacy being a bit slower (5% is only 3 fps in this example!), performance is effectively the same.

Even though GPU#1 was around 15 °C hotter before the repaste, its performance was basically unaffected. So, yes, my sample size is far too small to be statistically meaningful, but I'd say it's more likely that your GPU's cooling solution will have a stronger effect (though probably still a negligible one) on its gaming performance than any chip-to-chip variation in the silicon...
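As a quick sanity check on that aside, assuming the 5% and the 3 fps describe the same comparison, the two figures together imply a baseline of roughly 60 fps in that game:

```latex
\Delta f = 0.05 \times f_{\text{base}} \approx 3~\text{fps}
\quad\Rightarrow\quad
f_{\text{base}} \approx \frac{3~\text{fps}}{0.05} = 60~\text{fps}
```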

Looking at the results, despite the reduced temperatures, performance is much of a muchness...

And that's it and that's all... Nothing complicated today, just a little look at two GPUs and how they performed relative to each other. I can't observe any difference in silicon quality between these two parts and, even taking a relatively large temperature delta into account, performance is not negatively affected in any appreciable way.

1 comment:

It would have been more statistically interesting to have repasted BOTH 1 and 2 with the same paste/pads. That said, I'm willing to wager you'd find no perceivable difference in games between the two even by also repasting 2; with numbers in the 90s/100s, they're probably both equally GPU Boost-limited (assuming identical vBIOS on both cards).
