
| Radioactive... |

So, one of the reasons why I haven't really had a big technology post in a while (aside from being super busy at work) is that the rumours for RDNA3 have just been all over the place... It has meant that anything I analysed or tried to predict was just going to be out of date in a few days, once any given leaker decided to change their leak.

However, with the latest leak, I thought it would be interesting to see how that stacks up against the "3 times the performance of the RX 6900 XT" claim we keep seeing bandied about, as well as against my predictions made at the beginning of this year...

Shenanigans...

First off, let's get this out of the way: I was never on-board with the 3x performance of the RX 6900 XT claim. We have NEVER seen this level of a jump gen-on-gen in the history of dedicated graphics processing devices. Perhaps we can claim this level of advancement when comparing products from different companies or different graphics display paradigms*. And, despite being quite bullish on where technology is going in terms of rate of advancement over the next few years, I just could not envision a scenario where one company grants such a performance bump for their products when the demand and the market are not guaranteed to be there for them to soak up the profits.

And this is all just ignoring the fact that there is no indication that the architectural advancements required for such a performance jump exist. We're not jumping from products produced on a 100 nm process to a 7 nm process. We're not entirely rearchitecting** the core FP32 ALUs and whatnot in the design... so where would SUCH a jump come from?

*CPU processing to GPU processing, non-dedicated RT hardware to dedicated RT hardware - for example.

**There are no hints or leaks that this is on the table, just an evolution of the existing RDNA/RDNA2 architecture.


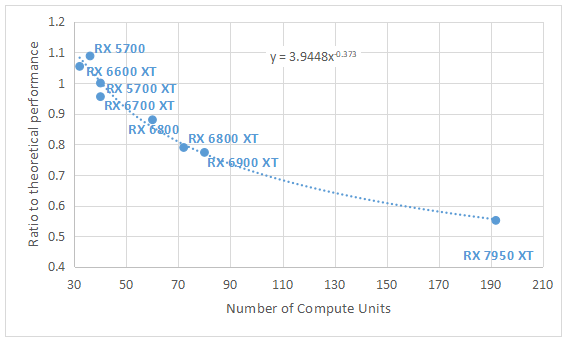

| The expected performance of 240 CUs (15360 cores) for 3x the RX 6900 XT just showed unrealistic efficiency based on how wide the architecture would be... |

Looking at the available leaks back in December 2020, I made predictions for the performance of a theoretical 15360 core, 240 CU behemoth that was leaked by Greymon55*. Later on, in late January, I got into a conversation with a Twitter user about the potential performance of Navi 33 and, through that discourse, we discovered that the leaks being put out by this person were inconsistent. They were stating an architecture with 64 cores per CU, as in RDNA 2, and then switched to the same total number of cores but with 128 cores per CU, therefore halving the CU count. Now, we're at the point where almost none of the above was accurate.

*In a now-deleted tweet, which - IMO - shows that they know they were wrong and do not stand by what they said... which is, again - IMO - as unforgivable as posting an April 1st "joke" that is as close to their actual 'leaks' as to be interpreted as truth... which they did and passed-off as being unrealistic...

Now, I don't know how AMD or Nvidia actually make their architectures from a design stage to production stage - so maybe I'm completely incorrect in my assumptions and conceptions. However: I'm sure there are multiple ideas but, if they're anything like my industry or my experience in academia, given that they are starting from a known position and not a blank slate, they will move forward from what they last had AND they will have specific design ideas and implementations in mind when doing so, based on the experiences and lessons learned from the prior architecture.

i.e. If AMD saw that their downstream silicon throughput wasn't being challenged by the data sent from the DCUs, then they might try doubling the amount of resources in the DCUs in order to fully utilise those downstream elements.

The point is, when AMD started work on RDNA 3, they knew what they would be trying. Given the information we appear to have to hand right now - they never intended to go SO wide. The leaks of 15360 cores appear to have been either extrapolations or inventions of Greymon55 and that, together with their other recent behaviour regarding the April 1st tweet, means that I find it hard to put any weight behind anything they leak going forward.

I am going to put this out there: in the same way that insider trading is "NOT OKAY", joking about your professional activity and/or viability is a sign of immaturity and of a lack of understanding about how your actions can affect businesses, investments and other people's trust in you.

I have the exact same response to LeBron James' April fools' tweet. You're injured for the rest of the season?! Stock drops, fans rail... not funny, and closer to possible truth than it should be. If he wanted to tweet that he broke his legs after trying for the skydiving height record or something ridiculous, that's fine... but posting mundane - totally within reason - "jokes" relative to your job/position is unprofessional and you won't be able to convince me otherwise...


| The whole point of April fools is to get people to believe the unbelievable... This is akin to the Gamestop CEO tweeting that they're going into receivership. (Psych!) [Source] |

A leak should be a leak - not the invention of the leaker. And if the leak turns out to be wrong - no biggie, it was information provided by another party. But the leaker themselves still has to shoulder the responsibility of explaining why they should continue to be trusted, and what led them to believe the person (or document etc.) that gave them the incorrect information in the first place. That's what I do (and did) when I am wrong.

But enough of this - back to my analysis of the actual potential hardware.

AMD Navi 33/32/31 (updated) chip data, based on rumors & assumptions

— 3DCenter.org (@3DCenter_org) May 9, 2022

As @kopite7kimi pointed out, old info from last Oct is outdated 😉

updated:

- 20% less WGP

- no more double GCD for N31/N32

- 6 MCD for N31 = 384 MB IF$

- 4 MCD for N32 = 256 MB IF$

https://t.co/rj2G2gi9CU pic.twitter.com/yDqeTTdSAT

Predictions...

I showed why I didn't think the behemoth of a 15360 core RDNA 3 would meet 3x the performance of an RX 6900 XT. However, let's look at the new "suggested" specs for a full die of 12288 cores with the new configuration of 256 cores per WGP, instead of 128 cores, at a higher frequency than was previously leaked.
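As a quick sanity check on the rumoured figures, here is a minimal Python sketch of the core-count arithmetic. It only uses numbers quoted in this post and in the tweet above; the helper name `exact_div` is my own invention for illustration, and none of this reflects confirmed AMD specifications.

```python
# Figures quoted in this post and in the embedded 3DCenter tweet (all rumours).
OLD_LEAK_CORES = 15360     # earlier rumoured full die
OLD_LEAK_CUS = 240         # earlier rumoured CU count
NEW_LEAK_CORES = 12288     # revised rumoured full die
NEW_CORES_PER_WGP = 256    # revised rumoured cores per WGP


def exact_div(total: int, divisor: int) -> int:
    """Hypothetical helper: integer division that rejects uneven splits."""
    quotient, remainder = divmod(total, divisor)
    if remainder:
        raise ValueError(f"{total} does not divide evenly by {divisor}")
    return quotient


# Old leak: how many cores per CU does 15360 cores over 240 CUs imply?
old_cores_per_cu = exact_div(OLD_LEAK_CORES, OLD_LEAK_CUS)

# New leak: how many WGPs does 12288 cores at 256 cores per WGP imply?
new_wgp_count = exact_div(NEW_LEAK_CORES, NEW_CORES_PER_WGP)

# Fractional drop in total cores between the two leaks.
core_reduction = 1 - NEW_LEAK_CORES / OLD_LEAK_CORES

print(f"old leak: {old_cores_per_cu} cores per CU")
print(f"new leak: {new_wgp_count} WGPs")
print(f"core count reduction: {core_reduction:.0%}")
```

The old leak works out to 64 cores per CU, the new one to 48 WGPs, and the drop in total cores is 20%, which lines up with the "20% less WGP" line in the tweet.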

Going from the leaks of 3x the performance of the RX 6900 XT we get the below extrapolations based on the real world performance of RDNA/RDNA2 cards in a composite sample of games running at a native 1440p with the numbers taken from the review data over at TechPowerUp.

To summarise the comparison between suggested RDNA 3 (Navi 31) and RDNA 2 (Navi 21): indications from the latest leaks are that each CU has double the number of FP32 cores. That is 48 WGPs with two compute units in each (to make a DCU complex) for a total of 96 CUs, at 256 cores per WGP or 128 cores per CU, compared with 64 cores per CU in RDNA/RDNA2.

Now, I'm not going to say that this is a 100% accurate comparison - it's not. It's more to give an idea. For one, I am assuming 100% utilisation, in terms of efficiency per CU/FP32 resource, in going from RDNA2 to this rumoured RDNA3 architecture.

I doubt that is true.

Ampere did something similar compared to Turing, doubling FP32 cores per SM, and its performance only increased by a factor of 1.4x. Sure, this is with optional integer operations on half of those cores in each SM, meaning that gaming performance would have been mixed, but it's unrealistic to think that utilisation will scale linearly with these resources when there is no historical precedent of that from RDNA to RDNA2.

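To put a rough number on that, here is a naive Python sketch that multiplies the core ratio, the clock ratio and a utilisation efficiency. This is not how the curves in this post were produced. The RX 6900 XT figures (5120 cores, 2.25 GHz boost) are spec-sheet values I am assuming rather than numbers from the text, the function name is made up for illustration, and borrowing Ampere's efficiency for RDNA 3 is purely illustrative.

```python
def estimated_uplift(core_ratio: float, clock_ratio: float, efficiency: float) -> float:
    """Naive model: uplift = core ratio x clock ratio x utilisation efficiency."""
    return core_ratio * clock_ratio * efficiency


# From the post: Ampere doubled FP32 cores per SM for roughly 1.4x performance.
ampere_efficiency = 1.4 / 2.0

# ASSUMPTION (not from the post): RX 6900 XT spec-sheet cores and boost clock.
RX6900XT_CORES = 5120
RX6900XT_CLOCK_GHZ = 2.25

# Rumoured Navi 31 figures quoted in the post.
NAVI31_CORES = 12288
NAVI31_CLOCK_GHZ = 3.0

core_ratio = NAVI31_CORES / RX6900XT_CORES
clock_ratio = NAVI31_CLOCK_GHZ / RX6900XT_CLOCK_GHZ

# Perfect (100%) utilisation versus an Ampere-like utilisation penalty.
perfect_scaling = estimated_uplift(core_ratio, clock_ratio, 1.0)
ampere_like = estimated_uplift(core_ratio, clock_ratio, ampere_efficiency)

print(f"perfect scaling:        {perfect_scaling:.2f}x")
print(f"Ampere-like efficiency: {ampere_like:.2f}x")
```

Under these assumptions, perfect scaling comes out at about 3.2x and the Ampere-like case at about 2.24x. Real sustained clocks, memory bandwidth and game-to-game variation are all ignored here, so treat both numbers as ballpark figures only.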

So, let's switch to what I had predicted at the beginning of the year.

Now, I'm basing the following numbers on my own expectation of an upper bound of 1.95x the RX 6900 XT in gaming performance at 1440p. I inserted the numbers for a chip performing at 1.7x and they looked too unrealistic in their underperformance so, given the new clock speed target of 3 GHz and the new GCD layout, I believe that 1.95x is more likely than it was for the prior rumours.

So, let's look at those curves!

The curves tell the story of a potential high-end product that is almost 2x the performance of the RX 6900 XT for a substantial increase in clock speed. That's very slightly better than the similar jump from the RX 5700 XT to the RX 6900 XT - which also incurred a significant increase in compute units and frequency to achieve (in this dataset) a real-world 1440p performance increase of 1.88x.

Just comparing the scaling per CU (corrected for sustainable workload frequency) we're talking of a ratio between the "5700 XT to 6900 XT" (0.0259) and "6900 XT to 7950 XT" (0.0211) of 81% which continues to show that you don't get linear scaling with resources.
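The 81% figure is just the ratio of the two per-CU scaling values quoted above. A minimal Python check, taking those two values as given (how they were derived from the review data is not reproduced here, and the variable names are my own):

```python
# Frequency-corrected per-CU scaling values quoted in the post.
SCALING_5700XT_TO_6900XT = 0.0259
SCALING_6900XT_TO_7950XT = 0.0211

# How much of the previous generation's per-CU scaling is retained.
relative_scaling = SCALING_6900XT_TO_7950XT / SCALING_5700XT_TO_6900XT

# The remainder is the shortfall versus repeating the previous jump's scaling.
shortfall = 1 - relative_scaling

print(f"relative per-CU scaling: {relative_scaling:.0%}")
print(f"shortfall: {shortfall:.0%}")
```

So each added CU in the predicted jump contributes roughly a fifth less than it did going from the RX 5700 XT to the RX 6900 XT.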

However, that's not the end of the story.

I also had said that I thought there was an outside chance of an absolute performance increase of up to 2.24x the RX 6900 XT and that gives us a more attractive scaling curve. Of course, as people are wont to say: beauty in mathematics does not necessarily reflect nature. i.e. The universe doesn't care about beauty and so natural concepts should not be predicted based on the beauty (or lack thereof) of the mathematics involved in their prediction.

With the older, assumed dual GCD die setup at 2.5 GHz, I think my prediction of 1.7x - 1.95x was safer. However, with the rumours revised to a single GCD and a 3 GHz clock speed, perhaps it's more likely we're in the range of 1.95x - 2.24x the performance of the RX 6900 XT. Yes, that's not as high as many are predicting but I believe that RDNA 3 will be pushed as close to its frequency limit as RDNA 2 was.

Ignoring concerns about the expense of PC gaming, availability and power consumption, I'm really looking forward to seeing how close I am in my predictions. Hopefully, some of you might find these ruminations interesting too...


| Better agreement with the scaling curve at 2.24x the performance of the RX 6900 XT... |
