
Released on PS5 a few years ago, Marvel's Spider-man: Remastered made its way to the PC sphere recently and, surprisingly, granted the opportunity to look at the hardware side of things more closely due to the way the engine works and how Nixxes chose to port that engine to the PC.

Looking into this is especially important, from my point of view, because the performance of this game on various hardware appears to be poorly understood by many... Additionally, it may provide an indication of where, in the PC hardware, future games may require more brute strength.

Spider-man is also the third game released on PC with both FSR and DLSS, allowing us to compare different implementations in different game engines and get a handle on how the two stack up in terms of performance increases, as well as power savings.

[Update]

I've now updated the article with the data from the 12400 system...

[Update 2]

Updated Resizeable BAR results for the 5600X...

Whatever a Spider can...

Spider-man does something a little unusual with regards to hardware utilisation: it uses everything you give it.

I've not played any game before that will simultaneously load up every core on the CPU, bring a GPU close to 100% utilisation, AND stock the system RAM with data galore, while also seeing a benefit from increased data bandwidth through the RAM and PCIe buses. The game is essentially quite heavy on almost all aspects of a PC, except for the storage, while still providing good performance on a range of hardware from old to new.

It is, as we like to say, a game that scales well with resources: a nirvana that, typically, gamers are crying out for. That has not been the reception that a vocal minority of gamers have been giving it, however.

Claims that it is an "unoptimised" title abound, because achieving higher than 100 fps at higher settings can be quite difficult on some hardware configurations, as do theories that disabling hyperthreading/simultaneous multi-threading grants more fps.

So, let's look at the performance of my systems in this game and see what we can learn about the thread scaling in the process.

Setup 1:

- Ryzen 5 5600X

- 32 GB DDR4 3200 (4x 8 GB dimms)

- RTX 3070 (undervolted and power limited)*

- WD Black SN750 1TB PCIe NVMe (Random Read 4k IOPS Q=32, T=8: 515k)

Setup 2:

- i5-12400

- 16GB DDR4 3200 (2x 8 GB dimms)

- RTX 3070 (undervolted and power limited)*

- WD Blue SN570 1TB NVMe (Random Read 4k IOPS Q=32, T=16: 460k)

*The same graphics card was used in both systems...

General Performance...


| Data from the table below plotted in a more readable format... (Tested on R5 5600X) |

Spider-man is a game about moving quickly between streets with lots of occlusion of the scene behind buildings and objects in the foreground, which may become disoccluded at any time. It is a game that has many AI driven agents - people, cars, dynamic and static enemy encounters - all interplaying and interacting with each other and the player.

These two aspects could really put a strain on the CPU resources, but which settings make the biggest difference in terms of fps gains?

For this testing, I've set the game to the highest settings possible (with the exception of using SSAO), without ray tracing, at a native 1080p and then varied a single setting in the options. The test itself is performed mid-way through the game, when the Dragons are spawning in dynamic instances, along a street that I've chosen because it has a decent amount of geometry, AI agents and even a metro train at its end. To capture the data, I used Nvidia's Frameview, and then selected a data period of around 28.5 seconds as I travelled down the street by web-slinging. I cut the first 1.5 seconds of data and any data past 30 seconds as these included sky (at the beginning of the run) and a static section at the end, both of which bias the results towards the higher end of the spectrum.
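As a rough sketch of the trimming step described above, the snippet below keeps only the frames between 1.5 and 30 seconds and computes an average FPS over that window. The `(timestamp, frametime)` layout, the function names and the sample values are all assumptions made for illustration; they are not the actual Frameview log format or the data from these runs.

```python
def trim_capture(frames, start_s=1.5, end_s=30.0):
    """Keep only frames whose timestamp (seconds) lies inside [start_s, end_s]."""
    return [(t, ms) for t, ms in frames if start_s <= t <= end_s]


def average_fps(frames):
    """Average FPS over the window: frames rendered divided by time spent rendering them."""
    total_ms = sum(ms for _, ms in frames)
    return 1000.0 * len(frames) / total_ms


# Hypothetical capture: (seconds since capture start, frametime in ms).
capture = [
    (0.5, 8.0),    # sky at the start of the run, trimmed
    (1.0, 8.0),    # still inside the first 1.5 s, trimmed
    (2.0, 10.0),
    (15.0, 12.5),
    (29.0, 10.0),
    (31.0, 5.0),   # static section after 30 s, trimmed
]

window = trim_capture(capture)
print(len(window), round(average_fps(window), 1))  # 3 92.3
```

Trimming on timestamps rather than on frame counts keeps the window the same length regardless of how many frames each configuration manages to render.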

While this isn't the toughest scene in the game to run, it is more reflective of the average experience a player will encounter - not everyone will be hanging around Times Square for the 8-12 hours of the main story...

One important thing to mention here is that changing the settings in-game applies the setting but appears to have a bug where computational overhead can sometimes be introduced, meaning that you get lower fps output than you would from a fresh boot of the game. It appears that many people commenting on the performance situation do not realise this and are taking single anomalous points of data as "the truth".

Unfortunately, I was also duped by this and other single points of data before I went deeper into the performance of the game.

| Here you can see the raw numbers obtained during the testing for the 5600X... |

You can see here that the options that increase performance the most are indeed related to the CPU - the traffic and pedestrian densities. Other settings have very minimal performance impacts (at least on my setup) that don't really affect the performance positively or negatively outside of margin of error. In fact, going from the highest to the lowest possible settings in the game (without touching the FOV slider, or ray tracing) only grants you a 25% performance boost to FPS, with 10% of that coming from reducing the AI agents.

This is where I think the game's origins as a console exclusive may be coming into play: the CPU in the PS4 is not particularly powerful by today's standards, especially not in terms of single threaded throughput, but then neither is the Zen 2 CPU in the PS5, VASTLY greater in overall performance than the PS4's though it is.

Thread Scaling...

In order to overcome these hardware shortcomings, the engine uses a lot of parallel worker threads to manage some aspects of the game world - and these appear to be able to be spawned across a large number of available resources. This results in a game where the smoothness of the presentation increases with increasing CPU threads when played with the same game settings.

However, both the PS4 and PS5 have a locked CPU frequency whereas desktop parts do not. Going over the data for my 5600X, I can see that when SMT is disabled, the clock frequencies are higher than when it is enabled, meaning that more things can be calculated and instructions issued by the CPU in the same amount of time.


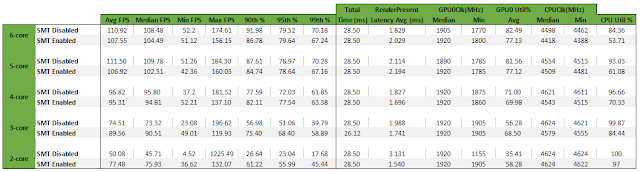

| Summary of the data collected for SMT on/off and number of cores disabled/enabled on my R5 5600X... |

In comparison, the 12400 features a very stable CPU clock speed, meaning that there's essentially zero difference between the median and minimum clocks reported when enabling or disabling HyperThreading. Additionally, we do see a slight performance bump of a few FPS and this is accompanied by reduced working GPU clock speeds, which indicates to me that the system as a whole is getting things done faster, allowing the GPU to dial back clock speed when not needed.

One negative for the 12400 is the latency reported for each frame call to start rendering on the GPU, which is a little longer and a little confusing. If CPU utilisation is up, most FPS metrics are higher and GPU utilisation is up by a good 8-10%, then why are we getting a little longer latency for the GPU to do its job? Is that purely a reflection of the lowered clock speed? It seems like it is - but I would expect to observe that in reduced maximum frame output, and indeed we do for over half of the tests.


| Summary of the data collected for SMT on/off and number of cores disabled/enabled on the i5-12400... |

Additionally, it appears that asset streaming, a partial issue on PS4 but a solved problem on PS5, is mostly mitigated by also using the available CPU cores to stream and decompress those assets primarily from the system RAM, leaving only a very light requirement of data streaming from the system storage.

In my testing, I've seen the game utilise around 12 GB of RAM, only streaming in a maximum of around 0.086 GB/s of data from the storage, with an average of 0.010 GB/s. For a game where you zip around and can also fast travel, that's really telling. Yes, it's true that loading appears a bit slower than on the PS5 but we're talking of a second or two... That difference is not something that I really care about.

Switching over to fast travel, we also do not see much data loaded into the game from the storage, meaning that the majority of the required data is already present in system memory.

| Spider-man appears to load up the majority of important data into the system memory instead of streaming it from storage... (World streaming on the left, fast travel on the right) |

So, it appears that this game does what I have always wanted and proposed that games do: load up the system RAM with world data in order to improve performance. I think it really makes sense and I don't know why we're still trying to break that 16 GB RAM requirement barrier for gaming here in 2022.

For a comparison with a title that streams a lot of data from the storage, moment to moment throughout gameplay, I found AC: Valhalla drew around 0.111 GB/s.

Enabling ray tracing will also add extra CPU overhead as there is an increased number of draw calls for items in the myriad reflections that the cityscape has to render. In my testing, the overhead between RT on and off is around 21% on my 5600X and 18% on the 12400.

This is why those claims of better performance when disabling hyperthreading or simultaneous multi-threading were difficult for me to believe: it shouldn't normally be the case, unless there is some other bottleneck in the particular system in question that the user isn't aware of.

For my test scenario, having HT/SMT disabled does indeed result in higher average framerates. But sometimes simple numbers do not tell the whole story...

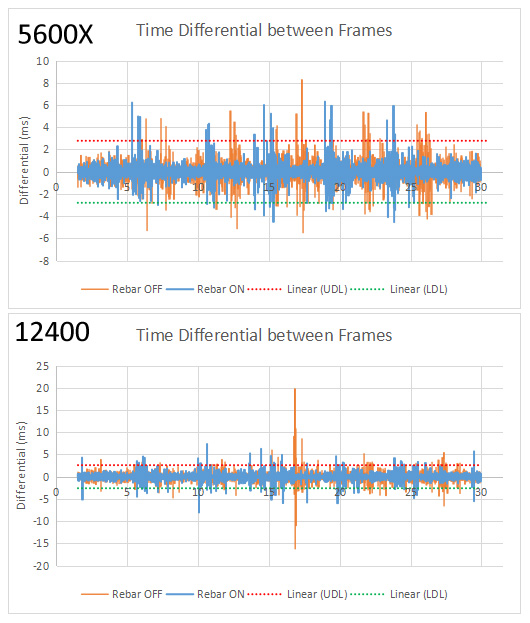

What is apparent from the above data is that, although the average framerates can be higher in some scenes with SMT/HT disabled, the differential frametime graph always shows more inconsistency, meaning that sequential frames are delivered in a less even manner. With SMT/HT enabled, we see that the graph is flatter and I personally think that this small loss of performance* is not really worth the greater inconsistency in the presentation - though I'm not particularly sensitive to frame rate changes in general.

*Of the order of 3-5 fps on average for 4 - 6 core systems.
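To illustrate how a run can post a higher average framerate and still pace its frames less evenly, here is a minimal sketch of a differential frametime calculation. The two frametime series are made-up values chosen to show the effect, not data from the test runs, and the helper names are hypothetical.

```python
def differential_frametimes(frametimes_ms):
    """Difference between each frame's time and the previous frame's time, in ms."""
    return [b - a for a, b in zip(frametimes_ms, frametimes_ms[1:])]


def mean_abs_delta(frametimes_ms):
    """Average size of the frame-to-frame change: lower means more even pacing."""
    deltas = differential_frametimes(frametimes_ms)
    return sum(abs(d) for d in deltas) / len(deltas)


def average_fps(frametimes_ms):
    """Average FPS: frames rendered divided by the total time taken."""
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


# Hypothetical frametimes in ms (NOT measured data).
smooth = [10.0, 10.5, 10.0, 10.5, 10.0]   # slightly slower, evenly paced
uneven = [6.0, 13.0, 7.0, 12.0, 7.0]      # faster on average, unevenly paced

for name, series in (("smooth", smooth), ("uneven", uneven)):
    print(name, round(average_fps(series), 1), mean_abs_delta(series))
# smooth 98.0 0.5
# uneven 111.1 5.75
```

In this toy case the uneven series wins on average FPS by roughly 13 fps, yet its frame-to-frame swings are more than ten times larger.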

I've also plotted on the graph the effective process control for the SMT graphs with an upper and lower defined limit (UDL/LDL) of the median FPS +/- 3 standard deviations. This provides a simple indication of how stable the presentation of the sequential images is for the testing run - we'll address this in a moment.

Additionally, it is apparent that when the CPU is more heavily loaded with the ray tracing tasks, disabling SMT/HT has no benefit because doing so on a 6 core chip will overload the cores, which are already at around 85+% utilisation with this graphical feature off.

| When RT is on, the story is the same as with it off for the 5600X... but just showing it here to confirm. |

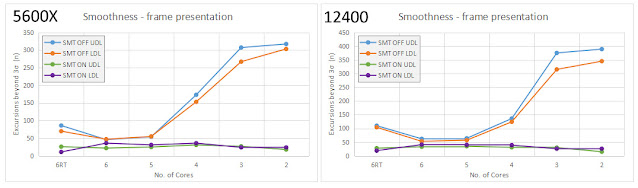

Getting back to the UDL and LDL values, we can see in the graph below that the number of times each of those limits is crossed/breached (however you wish to view it) increases with SMT off and, with it off, increases greatly with reduced numbers of cores. Quite simply, this means that there is a large deviation from the median time to present a sequential frame more frequently under these conditions than with higher core counts or with SMT enabled.


| This chart shows the number of times during each test that the UDL/LDL were breached. This is a direct representation of the "smoothness" of the presentation and "SMT/HT ON" wins throughout... |


| ...And the above graphical data in numeric form (Plus some data with Rebar disabled) |
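The breach count described above could be computed along the following lines: take the median of the series, add and subtract 3 standard deviations to get the UDL and LDL, then count the samples that fall outside. This is a sketch under assumptions: it uses the population standard deviation (the article does not say which form was used), the function names are hypothetical, and the sample series is invented.

```python
from statistics import median, pstdev


def control_limits(values, n_sigma=3.0):
    """Return (LDL, UDL) as the median -/+ n_sigma standard deviations."""
    centre = median(values)
    spread = pstdev(values)  # assumption: population standard deviation
    return centre - n_sigma * spread, centre + n_sigma * spread


def count_breaches(values, n_sigma=3.0):
    """Number of samples that fall below the LDL or above the UDL."""
    ldl, udl = control_limits(values, n_sigma)
    return sum(1 for v in values if v < ldl or v > udl)


# Hypothetical FPS samples (NOT measured data): a steady run, and the same
# run with a single large dip added.
steady = [99.0, 101.0] * 50
with_dip = steady + [40.0]

print(control_limits(steady))     # (97.0, 103.0)
print(count_breaches(steady))     # 0
print(count_breaches(with_dip))   # 1
```

The same functions work on frametimes instead of FPS; only the direction of a "bad" excursion flips.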

Comparing the 12400 and the 5600X, we see that the former has more excursions in frame presentation past the upper and lower defined limits of 3 standard deviations. The plain and simple reason for this is that the frame to frame variance is larger for the 12400, meaning that frames are presented in a very slightly more even manner by the 5600X.

I believe that this is related to the higher temperature observed for the 12400 (80+ C, compared to 70+ C for the 5600X) and therefore there are scenarios where the 12400 is throttling, causing small spiking bottlenecks during gameplay. Given the turbo frequency of 4.4 GHz for the 12400 and 4.6 GHz for the 5600X, the former achieves 3.9 GHz with 6 cores enabled and the latter achieves 4.5 GHz, indicating this is the most likely explanation.

However, even with these slight imperfections (and they really are slight) the 12400 has a small performance and energy efficiency advantage over the 5600X for this workload: Running in the 6c12t configuration, the 5600X pulled an average of 76 W, while the 12400 pulled only 50 W.
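Putting the clock and power figures quoted above into relative terms makes the comparison easier to see. The snippet only restates numbers already given in the text; the helper name is a hypothetical one for illustration.

```python
def fraction_of(value, reference):
    """Express value as a fraction of reference."""
    return value / reference


# Observed 6-core clock as a share of the rated turbo clock (GHz, from the text).
clock_12400 = fraction_of(3.9, 4.4)
clock_5600x = fraction_of(4.5, 4.6)

# Average package power in the 6c12t configuration (W, from the text).
power_saving = 1 - fraction_of(50, 76)

print(f"12400 clock: {clock_12400:.0%} of turbo")       # 12400 clock: 89% of turbo
print(f"5600X clock: {clock_5600x:.0%} of turbo")       # 5600X clock: 98% of turbo
print(f"12400 power: {power_saving:.0%} lower")         # 12400 power: 34% lower
```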

[UPDATE 2]

There is one more performance related issue that was requested by DavidB: the effect of Rebar. I tested this on both systems and the results actually surprised me a lot because I didn't see a perceptible difference when benchmarking.

I know that I said above that the game heavily utilises RAM and memory bandwidth instead of streaming from the storage but I wasn't of the opinion that it would really make much difference. However, while the majority of the numbers collected really don't show any difference for the 12400, the lowest observed FPS value was 50% worse than that observed with Rebar enabled. Now, given the overall differential time between frames being quite small, I did not observe this stutter during the actual gameplay but, as I mentioned above, I am not sensitive to these small transient stutters so it may not be the case for other players.

However, disabling this feature on the 5600X was different. It resulted in much lower average and median FPS results but no significant drop in the lowest FPS recorded - i.e. no stutter. Now, the only really big difference (aside from platform, of course) is the use of PCIe Gen 3 on the Intel system and PCIe Gen 4 on the AMD system. It's possible that extra bandwidth does play a role in those lowest of the low FPS numbers during gameplay.

The difference, even with the stutter, is still very small, though.

| Disabling Resizeable BAR has a significantly negative effect on the minimum FPS... on the 12400. This is a strange result! |

I think that the majority of players will not have a bad time playing with Rebar disabled so my initial assessment is mostly correct. In the same vein, I did not perceive a difference based on PCIe Gen 3 vs Gen 4 (the Intel system is using Gen 3 due to a dodgy riser cable) but I cannot rule out the possibility that there will be a situation in the game where having resizeable BAR disabled will cause a visible stutter.


| Tracking the frames that are presented outside of 3 standard deviations from the median shows that Resizeable BAR OFF gives worse frame presentation - i.e. smoothness... |

One final note is that the 5600X was, again, more consistent in its frame presentation but, overall, having the resizeable BAR off meant worse frame presentations (and thus smoothness) throughout the benchmark on both systems...

Optimisation...

I still think that the game is well-optimised but perhaps too much so. Although we talk about the number of active threads equally when speaking about hardware, to my understanding, hyperthreading and simultaneous multi-threading are not really the same as the complete physical core of a CPU.

I.e. these features are about partial parallelism within a single CPU core in order to mitigate specific bottlenecks, not about having a complete second core to work with.

This means that while a CPU of 6 cores with 6 threads is equivalent to 6 unique and complete threads, a CPU with 6 cores and 12 threads does not have double the number of threads: it's more like 1.X times 6 threads in total (i.e. if we had to put a number on it: 6.6 - 11.4).
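The 6.6 - 11.4 range above follows from treating SMT/HT as adding somewhere between 10% and 90% of an extra core's worth of throughput per physical core. A minimal sketch of that arithmetic, where the function name and the `smt_uplift` parameter are hypothetical labels for the "X" in "1.X":

```python
def effective_threads(cores, smt_uplift):
    """Rough 'complete thread' equivalent of a CPU with SMT/HT enabled.

    smt_uplift is the assumed fraction of an extra core's throughput that
    SMT adds per physical core: 0.0 = no gain, 1.0 = a full second core.
    """
    if not 0.0 <= smt_uplift <= 1.0:
        raise ValueError("smt_uplift must be between 0 and 1")
    return cores * (1.0 + smt_uplift)


# The two ends of the range quoted in the text for a 6 core, 12 thread CPU.
print(round(effective_threads(6, 0.1), 1))  # 6.6
print(round(effective_threads(6, 0.9), 1))  # 11.4
```

The real uplift depends heavily on the workload, so the value plugged in here is a guess rather than a property of either CPU.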

Additionally, CPUs tend to struggle to reach all-core frequencies as high as those they hit when fewer cores are taxed, meaning that optimising to use more threads can mean a trade-off in the frequency those threads operate at.

At the same time, I'm seeing that we're almost entirely CPU-bound in my testing environments: pushing up the power limit and clock speed of my GPU grants no increases in performance in an iso-setting comparison. Considering that the GPU can keep up with the vastly increased output of the CPU when it's able to make those render requests with lower settings and/or with SMT off, it seems all but certain that the CPU is the limiting factor (outside of ray tracing situations).

In summary, it isn't that the game isn't optimised: it's that it's optimised in one way over another. That isn't good or bad, it just is.

For a single player game to be able to achieve around 60-100 fps at high settings is perfectly fine, in my opinion. If this were an e-sports title, I'd be more concerned.

In Summary...

I think we've put to bed the idea that disabling SMT/HT will result in better performance in this game (and perhaps the logic can be applied to many other games that have been claimed to benefit from this, too). At the end of the day, gameplay performance is not a single moment in time: anyone can screenshot a higher average FPS number but it's only through longer-term data collection that the true effect on performance of any setting change or hardware change can be assessed.

Unfortunately, this sort of analysis is very time consuming and I hope you'll appreciate the extra effort that went into presenting this data in as digestible a manner as possible. I believe that use of statistical tools like process performance is beneficial to the general understanding of consumers about game performance and I'm surprised that the technical reviewing landscape essentially stopped at the huge lurch forward that was 1% and 0.1% lows over a decade ago.

I don't think we've really seen any further advancement in monitoring of performance since then and certainly not in terms of presentation of data to the consumer. This is something that I've been toying around with for a while now but not had the chance to put it together into a useful or timely article.

Good read.

I wonder if resizable BAR enabled versus disabled has any impact on these results?

I'm not sure it really has any effect but I'll try and have a look when I'm finishing the 12400 data. For all these tests, rebar was enabled.

From my testing though, I don't see any difference between PCIe 3 and 4 (I didn't mention it in the blogpost)

Exploring reBAR on versus off would be interesting as a data point.

TBH, not really surprised no difference PCIe 3 versus 4 (and I'd expect none with 5 either).

Updated the article. Seems to mostly potentially impact the lowest observed fps numbers, causing some small stutters.

SpecialK Threads optimisation or just its graph could help for an update on this.

https://wiki.special-k.info/