
I've seen increasing numbers of people commenting on YouTube videos and hardware/game performance reviews, complaining that the reviewer got it wrong and isn't even using the "best" RAM for the benchmarks. This is especially rampant now, during the cross-gen benchmarking period, when some systems use DDR4 and some use DDR5.

You might have seen this behaviour yourself, especially if you follow bigger techtubers like HardwareUnboxed or PCWorld - but I'm sure it happens on a wider scale; I just haven't seen every instance of it.

So, what is driving this slowly mounting pressure on the tech review sphere? Is it really pushing for better testing, or are there misconceptions about the importance of RAM speed and latency?

I figured I'd take a look. Please feel free to skip the first two sections where I ramble a lot and get to the actual data!

Sheep (aka RAMs)...

In the past, I have attempted to tune the RAM in my main gaming PC with literally zero success. Tuning RAM is hard. REALLY hard - but not for the reasons that might immediately spring to mind. It's difficult because memory is a poorly understood part of the computer for the majority of people, and it is filled with very subtle concepts: small changes can make a big difference, big changes can make a small difference... and sometimes changes that individually improve performance can, in concert, reduce it - which is rather counterintuitive. There are so many linked steps in getting a bit of data to or from the DRAM die on the memory module that there is no simple "slider" like there is for CPUs and GPUs.

The other thing that results from this complicated nature is that there are relatively few, easily digestible resources available to people wanting to start and understand this subject. Sure, there are people, like Buildzoid, who know their stuff... but the format of their presentation is fragmented, long-winded and unfocused. You can glean knowledge by watching a large number of videos but that is not necessarily the best or easiest manner in which to learn.

Similarly, the memtest github repository is like jumping into the deep end of the pool. There are relatively few handholds for newcomers to latch onto when terms are used but left undefined. I did use this resource to improve my understanding once I had gained a base level of knowledge but, when starting out, I found it quite daunting and confusing.

And that's basically it. All other resources are ad-hoc groups that form and dissipate in forums and discord servers, and the knowledge passed on there may not actually be accurate or beneficial. I've seen groups that say they all like to overclock their memory and tighten timings but then, in the same breath, say that they no longer like to help outsiders because there's just too much support required to help them achieve a good result - and I think that shows the average person just one reason why arguing that reviewers should tune the systems used in benchmarks is a non-starter.

So, as a whole, the importance and tunability of memory is communicated to the enthusiast population less so than other, easier to grasp and explain, aspects such as tuning or modifying the behaviour of the CPU, GPU, and storage. The other reason is that the benefits are not always apparent, achievable or able to be demonstrated easily.

In the same way that reviewers tend not to demonstrate benchmarks with overclocked CPUs and GPUs because they do not reflect the average reality or ability of any given system to remain stable and/or achieve the same results, tuning memory falls into a much deeper, darker labyrinthine pit, which may drive those who enter to madness and despair.

However, after the latest controversy over DDR4 vs DDR5 over on HUB's channel, I saw a challenge issued to Steve to use DDR4 4400 CL19 (and tune it) instead of DDR4 3200 CL14 in his performance reviews:

$146Aud Patriot Viper 4400MT/s B-Die https://t.co/ac6gxQ2Q5R

— linnaeus (@xLinnaeus) September 16, 2022

STEVE FOR THE LOVE OF GOD IT'S RIGHT THERE

And I snapped...

These people just spout the message given by those tech people that are showing gains from tuned memory without actually understanding the nuance. It's really frustrating and tiring. So, I clicked on the link and purchased the RAM kit.

Thus began my journey into RAM tuning...


| Is it, though? |

To B-die or not B-die...

Before I had even clicked on the link in the call-to-arms, I had already assessed the landscape during my original attempts to tune my DDR4 3200 kits (Samsung C-die, I believe). Those attempts had no success - every small change resulted in either an unbootable system or crashes due to instability. It was so difficult that I was unable to learn anything because I could not tell what I was doing wrong.

Coming from that bitter experience, I had determined that the advice for RAM tuning is generally as follows:

- Buy Samsung B-die

- Use 1usmus RAM tuning guide to start with...

- Tighten timings to get lower latency

- ????

- Profit!

The problem with this advice is that it doesn't actually solve the main problem with RAM tuning: knowledge.

First off, there is no guaranteed way to know that you will get Samsung B-die when you purchase a product. Much like RAM overclocking and tuning, information on what they're selling is a closely kept secret of the RAM manufacturers. It's basically a game of the community buying RAM kits and then having them tested against software that (might) be able to identify them*, or inferring from the CAS latency rating of the RAM which type of memory chip is used to be able to achieve it at the rated XMP profile. Of course, there is no guarantee that the RAM manufacturers will not change the DDR4 IC (Integrated Circuit - aka a die) in the SKU that has been previously determined to include it by users but, so far, I am unaware of that happening like it has with SSDs.

*This software isn't always able to do so!

Secondly, the problem (as I've found it) is that Samsung B-die is incredibly flexible in what primary and secondary timings it will accept without throwing a wobbly - aka, a crash. Seriously, Samsung B-die pretty much lets you abuse it and it will sit there booting happily (though not necessarily without errors) all day long. This is both a boon and a danger.

The benefit is that you, as the potential memory tuner, get the opportunity to learn and understand the craft better. You get to see which numbers go up and down with the numbers you are increasing or decreasing in the RAM settings in the BIOS of your motherboard.

The danger is that you sit there and tell people that tuning the RAM will bring big benefits because you may focus on one or two numbers above all others... or that you're sitting there with an unstable configuration but just don't know it yet - but of course, it's your fault because you didn't pay for a license to be able to run Memtest overnight... (I'm not angry!) After all, you can show you got your memory from 70-80 ns latency down to 50 ns and the bandwidth from 45,000 MB/s to 50,000 MB/s and maybe you also have a game where that increase really shows. Worse still, anyone not using B-die (or who didn't know to buy that specific memory, or was unlucky) will not be able to achieve the same level of tuning flexibility as is possible with the memory sporting Samsung DDR4 B-die.

So, why NOT test with tuned memory?


| An excellent analysis and review of the effect of hardware on the game... you should take a look! |

From my perspective, I'm not at all against the argument that a reviewer could choose to use tuned memory... It's a choice as much as the choice to run a benchmark at a given output resolution or quality setting is. A "benchmark" just means that a test was standardised between the equipment that was tested. Typically, though, reviewers prefer to test systems that represent the normal end-user experience. In such a scenario, overclocking, tuning, and undervolting/power limiting parts just don't enter the considerations of reviewers - and that's the correct stance to take.

Another argument against it, leaving aside that everyone's personal experience based on luck of the silicon lottery and the interactions between the various parts in their own individual personal system will differ, is that it's basically pointless - as I will show later...

Finally, I admit that this wasn't the specific charge levelled at Hardware Unboxed - they were accused of using memory that wasn't fast enough in their test systems. Over the next two entries, I will also attempt to verify whether this is an accurate accusation or not.

With all that said, let's get to it!

Ryzen 5000 (aka Zen 3)...

I'm not the first person to say this, but memory speed and tuning really don't appear to matter much for Zen 3. They were vitally important for Zen 1, Zen+ and Zen 2 CPUs but, with the unified cache on the CPU CCX for Zen 3, it appears that they are no longer important. Want the proof? Sure!

I've spent essentially all my spare time over the last month performing these benchmarks. I would have loved to have waited for the Intel 12th gen results but, unfortunately, real world work is kicking my ass and it will be another month before those are ready. So, in that spirit, I figured that now is as good a time as any to put "part 1" of this testing out there for everyone to enjoy!

My testing methodology uses the graphical settings I would use for the games in question - highest quality settings at 1080p resolution. My system is as per the below:

- Ryzen 5600X

- MSI B450-A Pro Max

- RTX 3070

- DDR4 Corsair 2x8/4x8 GB 3200 or Patriot 2x8 GB 4400 RAM

Yes, I'm using a mid-to-high end system (depending on your point of view!) so my results will be limited by that aspect... usually, hardware reviewers run these tests on top-of-the-line CPUs and GPUs, so they may see some differences that I, on my mid-range system, will not.

The full dataset is available here for anyone to peruse if they so desire but I've summarised everything in slightly easier-to-read graphical form below. The easy way to read these graphs is that I have stepped the operating frequency through DDR4 2133, 3200, 3600, 3800, 4000, 4200, and 4400, with some tweaks to the subtimings in order to optimise both memory/system latency and available bandwidth from the stock settings at each of those frequencies.

I've noted the primary CAS latency timing in the graphs but the subtimings are recorded in the google doc link above.
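For anyone who wants to put the headline numbers in context, here is a minimal sketch of the standard back-of-the-envelope conversions: CAS cycles to nanoseconds, and data rate to theoretical peak bandwidth. It assumes ordinary 64-bit DDR4 channels in a dual-channel setup, and the function names are made up for illustration. It was not used to produce the graphs, and it only covers the CAS portion of latency, which is a small slice of the total round-trip latency that tools such as Intel Memory Latency Checker report.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """First-word CAS latency in nanoseconds.

    DDR transfers data twice per clock, so the memory clock in MHz is
    data_rate / 2 and one clock cycle lasts 2000 / data_rate nanoseconds.
    """
    return cas_cycles * 2000.0 / data_rate_mts


def peak_bandwidth_mbs(data_rate_mts: float, channels: int = 2) -> float:
    """Theoretical peak bandwidth in MB/s, assuming 8-byte-wide channels."""
    return data_rate_mts * 8 * channels


if __name__ == "__main__":
    # Speed/CL pairs mentioned in this post (primary CAS timing only).
    for rate, cl in [(3200, 14), (3200, 16), (3800, 16), (4000, 16), (4400, 19)]:
        print(
            f"DDR4 {rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns CAS, "
            f"{peak_bandwidth_mbs(rate):,.0f} MB/s theoretical peak"
        )
```

On this arithmetic, DDR4 3200 CL14 and DDR4 4400 CL19 have almost the same CAS latency in nanoseconds; the higher-speed kit mostly buys theoretical bandwidth rather than a lower first-word latency.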

The final caveat I wish to make a note of here is that I am specifically looking at gaming performance and I make no claims about applications outside of that arena.


| Me make latency go down... |


| ...and bandwidth go up! (This time from Intel Memory Latency Checker) |

The very first principle espoused by many of the people who advocate memory overclocking and tuning is the notion that "lower latency = better" for gaming.

I've explored this notion in my RAM tuning adventure, as you can see from the above graphs. I didn't bother tuning at DDR4 2133, because any gains there are outweighed by how starved the system is at that speed (try not to game with RAM operating like this!), or at 4400, because after the initial round of benchmarking my system will no longer boot at the stock 4400 settings. I'm not sure if my memory controller has an issue or if the BIOS has an issue because the settings were changed frequently. I might try to explore this further in future but I most likely won't chase latency optimisations at that speed because we're no longer operating at a 1:1 ratio of infinity fabric to memory/controller frequency and we can see that at DDR4 4000 2:1, everything is worse than at 1:1.

And let me tell you, potentially uninformed reader - for Ryzen (and for Intel too), keeping the on-chip memory controller and interface running at the same frequency as the memory and system buses gives a huge performance boost in applications that require low latency. The real question is: how much does this performance depend on that lower latency?
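To make the 1:1 versus 2:1 distinction concrete, here is a deliberately simplified sketch. It assumes the memory clock is half the DDR data rate and that the memory controller clock is the memory clock divided by the ratio; the function name and dictionary keys are hypothetical, and the infinity fabric clock (which can be set separately) is ignored entirely.

```python
def memory_clocks(data_rate_mts: float, controller_divider: int = 1) -> dict:
    """Return memory and memory-controller clocks in MHz (simplified model).

    controller_divider=1 models a 1:1 ratio, controller_divider=2 models 2:1.
    """
    if controller_divider not in (1, 2):
        raise ValueError("controller_divider must be 1 or 2")
    memory_clock = data_rate_mts / 2  # DDR: two transfers per clock cycle
    controller_clock = memory_clock / controller_divider
    return {"memory_mhz": memory_clock, "controller_mhz": controller_clock}


if __name__ == "__main__":
    print("DDR4 3800 at 1:1 ->", memory_clocks(3800, 1))
    print("DDR4 4000 at 2:1 ->", memory_clocks(4000, 2))
```

Under these assumptions, DDR4 4000 at 2:1 leaves the controller running at roughly half the clock it would have at DDR4 3800 1:1, which is one plausible reading of why the 2:1 results look worse across the board.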


| Despite lower latency and higher bandwidth, overall score in Heaven suffers at DDR4 4000 and above, despite FPS numbers appearing equal... |


| Superposition has no such sensitivity, though... |

I fear that this is potentially where the misconception lies. We, as gamers and PC system builders, have had it drilled into us to remember to enable XMP profiles, and with early AMD Ryzen generations the importance of using faster, lower latency RAM kits dominated the informal tech talk surrounding those products. At least for Ryzen 5000, though, this does not appear to apply.

One unspoken issue is that every individual application is sensitive (or not) to these factors in different ways. Of the applications/games I tested, the two most sensitive were Spider-man: Remastered and AC: Valhalla. Most applications, like Unigine Heaven and Superposition, appear entirely latency insensitive, but Heaven exhibits some strange behaviour beyond DDR4 4000 whereby the FPS statistics appear equal to those of lower performing RAM but the overall score begins to decline. Having no clear understanding of how the "score" is calculated, I am left wondering if there is some sort of frame pacing issue which is noted by the application but which is not registered by static numbers, as I had previously noted in my Spider-man performance analysis.


| Like other applications I tested Arkham Knight has a few dips at standard RAM settings but nothing serious... |


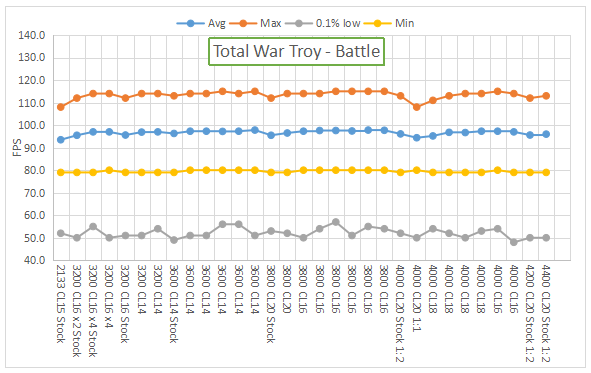

| TW: Troy also exhibits this, but without the slight max fps increases with highly optimised RAM secondary timings... |

What we generally see in other game applications, though, is that with optimised secondary timings for the RAM, the maximum fps will increase somewhat but the average, minimum and lows will not really budge. Meaning that if you're operating with an unlocked framerate you have greater frame-to-frame variance which, as I pointed out in the Spider-man Analyse This, results in an actually worse user experience. You WANT even frame presentation. You don't want a random spike to 200 fps from 100 fps and back again. That wreaks havoc with the GPU and CPU scheduling and can potentially present as a stutter which can make your experience worse, overall.
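To illustrate why a higher maximum fps on its own can hide a less even experience, here is a minimal sketch that summarises a list of frame times. The function name, the way the "1% low" is defined (fps of the average of the slowest 1% of frames) and the sample data are all assumptions made for illustration; this is not the tooling behind the graphs.

```python
import statistics


def frame_stats(frame_times_ms):
    """Summarise a capture of frame times given in milliseconds."""
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frame times")
    fps_per_frame = [1000.0 / t for t in frame_times_ms]
    slowest_first = sorted(frame_times_ms, reverse=True)
    low_count = max(1, len(slowest_first) // 100)  # slowest 1% of frames
    return {
        "avg_fps": 1000.0 * len(frame_times_ms) / sum(frame_times_ms),
        "min_fps": min(fps_per_frame),
        "max_fps": max(fps_per_frame),
        "one_percent_low_fps": 1000.0 / statistics.mean(slowest_first[:low_count]),
        "frame_time_stdev_ms": statistics.stdev(frame_times_ms),
    }


if __name__ == "__main__":
    # Synthetic placeholder captures, not measurements from this post.
    steady = [10.0] * 100          # a constant 100 fps
    spiky = [10.0] * 99 + [5.0]    # same, plus one frame at 200 fps
    for name, capture in (("steady", steady), ("spiky", spiky)):
        print(name, frame_stats(capture))
```

In the synthetic example, the spiky capture reports a much higher maximum fps while the minimum and 1% low are unchanged; the only other thing that moves is the frame-time standard deviation, which is the frame-to-frame variance described above.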


| The outliers per RAM frequency are picked out with a blue column... |


| With Ray Tracing enabled, the results are less differentiated due to the GPU bottleneck but there are a few outliers... |

Going back to Spider-man, we see that with ray tracing disabled, this game sees an overall uplift with subtiming optimisation. However, it is clear that this performance has no relation to RAM or system latency, as the higher results do not always correspond with the configurations with the lowest latency. There does appear to be a loose correlation with the total memory bandwidth available - which makes sense given the reliance we previously observed with the PCIe gen 3 vs gen 4 scaling in the prior blogpost.
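If you want to put a number on a "loose correlation" like this using the published dataset, one option is a plain Pearson correlation coefficient between a memory metric and an fps metric. The sketch below is only one way to do it and is not how the observation above was arrived at; the function name is an assumption and the example values are synthetic placeholders, not results.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two series of equal length, at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sum((x - mean_x) ** 2 for x in xs)
    spread_y = sum((y - mean_y) ** 2 for y in ys)
    if spread_x == 0 or spread_y == 0:
        raise ValueError("a constant series has no defined correlation")
    return covariance / math.sqrt(spread_x * spread_y)


if __name__ == "__main__":
    # Synthetic placeholder values purely to show the call.
    bandwidth = [1.0, 2.0, 3.0, 4.0]
    average_fps = [2.0, 4.0, 6.0, 8.0]
    print(f"r = {pearson_r(bandwidth, average_fps):.2f}")
```

Values near +1 or -1 indicate a strong linear relationship and values near 0 indicate little or none. With only a handful of configurations and noticeable run-to-run noise, any coefficient should be treated as a rough guide rather than proof.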

However, it is VERY important to note that the differences in those results are minimal. With the exception of one max fps being 20 fps higher than the average, the majority of the results were within a variation of +/- 5 fps. Another important thing to note is that the minimums experienced in this game are random. No amount of memory latency or bandwidth can alleviate this issue, and there appears to be a bottleneck elsewhere in this system that is unable to deal with a sudden spike in processing, compute or data transfer that I am unable to reliably overcome. As such, I would not put too much stock in the minimums for this title.

Moving on to the ray tracing performance of Spider-man with memory optimisations, we encounter, as expected, a more even experience because of the bottleneck caused by the GPU. Surprisingly, though, this is a situation where both memory latency and bandwidth do show a visible trend in performance.

It's not a big difference, but (ignoring the variable minimum fps results) we can see a slight increase for each memory speed with decreasing latency and increasing bandwidth. However, it is apparent that optimising the latency and bandwidth at one memory speed does not appreciably separate it from the best results at the other memory speeds, i.e. a highly optimised x2 3200 CL14 or x4 3200 CL16 set of RAM does not perform sufficiently differently from x2 3800 CL16 or x2 4000 CL16. This is important in terms of the cost to value conversation we typically have around RAM.


| I would say that there's a slight bow-shape in the max fps curve but the results are so scattershot that I don't think it really matters... |

AC: Valhalla has the opposite problem. It has VERY consistent minimum fps numbers but the maximum fps numbers are all over the place from benchmark run to run. Within that noise, there appears to be some slight effect of latency on the max fps of the title. Similarly, bandwidth is weakly correlated with the maximum fps values but not with any of the other metrics measured by this benchmark, which makes it a moot point if you're going to be limiting your fps output (as you should be).

All's well that ends well...

Despite many protestations that "reviewers are doing it wrong"... there appears to be little evidence of that on Ryzen 5000/Zen 3 systems. While it remains to be seen on Intel's 12th gen architecture, historically, Intel's ringbus design has typically been less sensitive to RAM speed/latency and, while it appears that DDR5 gives it an uplift (we're talking a few fps, here), this doesn't appear to be related to either bandwidth or latency - it might be something to do with the integrated memory controller or the way DDR5 memory works.

Really, as I pointed out above, I'm not the first person to come to these conclusions or to write about how memory speed, latency and bandwidth are really not very important for gaming on modern AMD and Intel systems... so these results are not a surprise. What IS a surprise is the number of people who keep pushing the narrative that memory speed makes a big difference and that reviewers should utilise or optimise their memory configurations for testing hardware and software.

Next time... we'll take a look at the results for my 12400 system. Stay tuned!
