
Starfield has been a much anticipated title for a number of years now but the hype, counter-hype and social media battles have been raging pretty strongly around this one since it was made an Xbox and PC exclusive.

Normally, though, those concerns melt away shortly after launch, when players can actually get their hands on the game and just play. In Starfield's case, this hasn't quite been the experience - many players are struggling to run the game because it can be quite demanding of PC hardware. There are raging debates as to whether the problem lies with the developers, the engine, or the hardware manufacturers...

Since I had my savegame destroyed in Baldur's Gate 3 and didn't feel like repeating thirty hours of gametime I instead decided to switch over to Starfield for a change in pace. As a happy coincidence, I have a lot of familiarity with prior Bethesda Game Studios titles and a penchant for testing on various hardware configurations... and it just so happens that I have a new testing rig (mostly) up and running so it has turned out that Starfield is a prime target for the shakedown of this new testing capability...


| OC3D have a good review of the performance limitations of the game, along with Hardware Unboxed and Gamers Nexus... |

Graphics Performance...

One thing I've been seeing is that people are complaining about how poorly the game runs on high-end hardware when using the highest settings, without upscaling, at (effectively) the highest resolution (aka 4K). I really do not see an issue with this - the game is technically quite challenging to run because of the way the engine/game is designed.

On the one hand, you have the heavily CPU-reliant physics and item tracking systems, along with the NPC AI demands when using crowds at high and ultra settings*, and on the other hand, you have low process RAM utilisation and a heavy reliance on data streaming from the storage**, which will also heavily tax the CPU in individual moments due to the way that those calls and asset decompression are handled.

*Honestly, this is a game where I can say that the world feels lived-in, in terms of numbers of occupants...

The game is designed to load data from the disk in relatively large batches, resulting in large frametime spikes* which cause dips in the measured average fps whenever this loading occurs. Unfortunately, as per my informal testing, it does not appear that this can be mitigated through faster storage, RAM or CPU (though I only have the two CPU SKUs to test on)...

In this sense, the person benchmarking this title needs to ensure that they are not crossing boundaries which result in streaming of data from the storage as this will negatively affect the benchmark result and, in effect, means that the benchmark is actually just testing the game engine's streaming solution rather than the CPU and GPU performance.

This is not something I've seen mentioned in any technical review of the game and it potentially calls into question some of the results we've seen thrown around the internet because these performance dips due to loading are not consistent between runs.

*I have made an example video to show this effect in action...(please note that there is a slight delay in the HWinfo monitoring of the read rate)
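To make the point about streaming-contaminated runs a little more concrete, here is a minimal sketch of how a run could be screened for loading spikes before its numbers are trusted. It assumes you have per-frame times in milliseconds exported from your capture tool of choice; the function name `summarise_run` and the `spike_factor` threshold of 3x the median frametime are my own illustrative choices, not something taken from any particular tool.

```python
from statistics import median


def summarise_run(frametimes_ms, spike_factor=3.0):
    """Summarise one benchmark run from per-frame times (milliseconds).

    A frame counts as a "spike" when it takes longer than
    spike_factor x the median frametime. The threshold is an
    arbitrary assumption and should be tuned per game and scene.
    """
    if not frametimes_ms:
        raise ValueError("need at least one frametime")

    total_seconds = sum(frametimes_ms) / 1000.0
    med = median(frametimes_ms)
    worst = max(frametimes_ms)
    spikes = [i for i, ft in enumerate(frametimes_ms) if ft > spike_factor * med]

    return {
        "avg_fps": len(frametimes_ms) / total_seconds,
        "min_fps": 1000.0 / worst,          # fps implied by the slowest frame
        "max_frametime_ms": worst,
        "spike_indices": spikes,
        "suspect_streaming": bool(spikes),  # True if any spike was found
    }


# Hypothetical data: 99 smooth frames and a single 120 ms hitch.
clean_run = [16.0] * 100
hitchy_run = [16.0] * 99 + [120.0]

print(summarise_run(clean_run)["suspect_streaming"])
print(summarise_run(hitchy_run)["spike_indices"])
```

A run flagged this way is not necessarily invalid, but it is worth repeating or re-routing before comparing it against a run that had no such hitch.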

Test Systems and Results...

Getting back to things, I've tested the game using the following systems:

- System 1
  - i5-12400
  - Gigabyte B660i Aorus Pro DDR4
  - 16 GB DDR4 3800 CL15
  - SN770 2 TB
- System 2
  - i5-12400f
  - Gigabyte B760 Gaming X AX
  - 32 GB DDR5 6400 CL32
  - P5 Plus 2 TB
- System 3
  - R5 5600X
  - MSI B450-A Pro Max
  - 32 GB DDR4 3200 CL18
  - SN750 1 TB
  - Crucial P1 1 TB (game install drive)

I have also tested using the following graphics cards:

- RTX 3070

- RTX 4070

- RX 6800

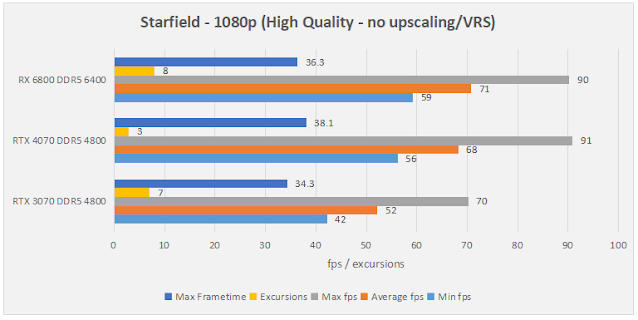

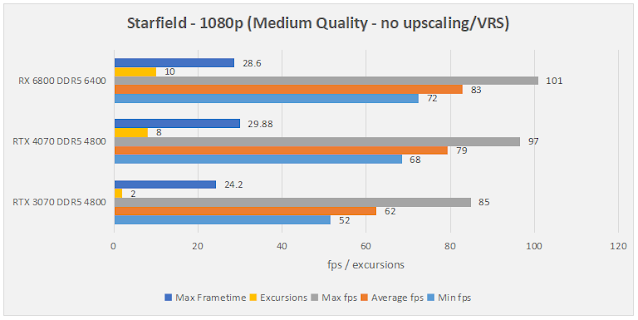

The following sets of performance benchmarks were obtained on System 2, in New Atlantis, in a section near the archives and Constellation's HQ where I was able to find a path which did not normally incur data loading from disk. This path includes the wooded area, since foliage is known to be challenging for GPUs to render.


As has been pointed out elsewhere, AMD cards generally perform better than Nvidia's. The game also does not fully utilise the VRAM available on the card - in fact, this potentially feels like a hold-over from the consoles. Both my 8 GB RTX 3070 and the 12 GB RTX 4070 would utilise around 4.5 GB VRAM with 8 GB system RAM, while the 16 GB RX 6800 would utilise around 6 GB VRAM with 9 GB system RAM.

This low utilisation of memory could explain some of the issues with framerate dips due to excess or unnecessary streaming of data from the storage.

One upside we can observe from this data is that lowering quality settings really does give a good performance uplift in this game and, at least at native resolution without VRS or upscaling/dynamic resolution, we do not really see much visual degradation from pushing these settings down a couple of notches.

Doing this takes the RTX 3070 from ~40 fps average at Ultra/High settings to ~60 fps average at all medium settings at 1080p and I feel like this is a very playable framerate. As a result, like many other outlets have done, I've come up with some quality settings of my own which I find do not negatively affect the performance from "all medium" quality settings but will enable a slightly better looking game.


| The optimised settings I determined to not have too much of an impact on performance... |


| No performance loss is noted for the 3070 and a very slight loss is noted for the other two - and is likely within run-to-run variation... |
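For anyone who prefers to think in frametimes rather than fps, the RTX 3070's move from ~40 fps to ~60 fps mentioned above can be worked through with a couple of one-line helpers. This is just arithmetic on the rounded averages quoted in the text; the function names are my own.

```python
def frametime_ms(fps):
    """Average time per frame, in milliseconds, for a given fps."""
    return 1000.0 / fps


def uplift_percent(before_fps, after_fps):
    """Relative fps gain when going from before_fps to after_fps."""
    return (after_fps - before_fps) / before_fps * 100.0


# Rounded averages from the text: Ultra/High vs all-medium at 1080p.
before, after = 40.0, 60.0

print(f"{frametime_ms(before):.1f} ms -> {frametime_ms(after):.1f} ms per frame")
print(f"{uplift_percent(before, after):.0f}% more frames per second")
```

So the same change reads as a 50% fps uplift, or as roughly 8 ms shaved off every frame (25.0 ms down to about 16.7 ms).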

So, with the broad strokes out of the way, let's get back to that memory speed difference...

What Affects Performance....?

Given that I've mentioned above that the game is highly reliant on streaming of data from the storage, and that there is minimal data retained in system and video memory, you would think that things like CPU speed/power, PCIe speed, and memory speed would all be heavily tied to the general performance of this game. So, let's take a look!


| Captured on Systems 1 and 2... |


| Captured on System 3... |

At high memory speeds, DDR4 3800 and DDR5 4800 and above, there is no noticeable difference in performance in the game. I haven't had the time to go through and test the situations where there is "bulk" loading of data from the storage (my time is severely limited compared to professional outlets, after all). Other outlets have shown a difference, but it is not clear whether that difference stems from the CPU or the memory subsystem - perhaps I'll explore this in a future blogpost!

Looking at DDR4, there is a difference, mostly in the maximum noted frametimes, with a slight increase in minimum and average fps.
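As a reference point for the memory kits in the three test systems, here is a rough sketch of the theoretical numbers behind them. It assumes a dual-channel configuration with a 64-bit bus per channel (my assumption; I haven't listed the DIMM layout above) and uses the usual first-word latency approximation of CL x 2000 / transfer rate. These are paper figures, not measurements, and say nothing about sub-timings.

```python
def peak_bandwidth_gbs(mt_per_s, channels=2, bus_bytes=8):
    """Theoretical peak bandwidth in GB/s.

    mt_per_s  : transfer rate in MT/s (e.g. 3800 for DDR4-3800)
    channels  : assumed number of 64-bit channels (dual channel = 2)
    bus_bytes : bytes moved per transfer per channel (64 bit = 8 bytes)
    """
    return mt_per_s * bus_bytes * channels / 1000.0


def cas_latency_ns(cl, mt_per_s):
    """Approximate first-word latency in nanoseconds.

    One clock cycle lasts 2000 / (MT/s) ns because DDR transfers
    twice per clock.
    """
    return cl * 2000.0 / mt_per_s


# Kits from the test systems: (label, MT/s, CAS latency)
kits = [
    ("System 1: DDR4 3800 CL15", 3800, 15),
    ("System 2: DDR5 6400 CL32", 6400, 32),
    ("System 3: DDR4 3200 CL18", 3200, 18),
]

for label, rate, cl in kits:
    print(f"{label}: {peak_bandwidth_gbs(rate):.1f} GB/s peak, "
          f"{cas_latency_ns(cl, rate):.2f} ns CAS")
```

On paper, the DDR5 kit has by far the most bandwidth while the tuned DDR4 3800 kit has the lowest CAS latency, which is one reason a simple "faster RAM = more fps" expectation does not necessarily hold.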


Moving onto the effect of the CPU, we can see a noticeable increase in performance between the 12400 and the 5600X, with the former providing a good 5 fps advantage in performance, along with a good reduction in maximum measured frametime. If I get around to that future blogpost I mentioned above, I'll likely explore this across all three test systems with the RTX 4070 (the RX 6800 will not fit in System 1!)...


| It seems I am not completely immune to data streaming issues! |

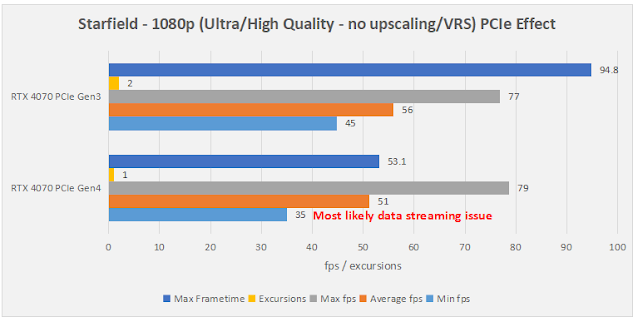

Looking at the effect of PCIe bandwidth, I reduced both the CPU and PCH PCIe gen from 4 to 3 using System 2 and saw no real difference in performance between the two, except potentially in the maximum frametime which might indicate a bottleneck - this would need more testing to ascertain.
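To put the gen 4 to gen 3 change in perspective, the theoretical link bandwidth can be worked out from the per-lane transfer rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0, both with 128b/130b encoding). The lane counts below, x16 for a graphics slot and x4 for an NVMe drive, are typical values that I'm assuming for illustration; the result is a per-direction ceiling, not what the game actually moves.

```python
# Per-lane raw transfer rate in GT/s for each PCIe generation.
TRANSFER_RATE_GT = {3: 8.0, 4: 16.0}

# PCIe 3.0 and 4.0 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128.0 / 130.0


def link_bandwidth_gbs(gen, lanes):
    """Theoretical one-direction bandwidth in GB/s for a PCIe link."""
    per_lane = TRANSFER_RATE_GT[gen] * ENCODING_EFFICIENCY / 8.0  # bits -> bytes
    return per_lane * lanes


# Assumed lane counts: x16 for a GPU slot, x4 for an NVMe SSD.
for name, lanes in (("GPU x16", 16), ("NVMe x4", 4)):
    g3 = link_bandwidth_gbs(3, lanes)
    g4 = link_bandwidth_gbs(4, lanes)
    print(f"{name}: gen 3 = {g3:.2f} GB/s, gen 4 = {g4:.2f} GB/s")
```

Dropping a generation halves the ceiling in both cases, so seeing no real change in average fps suggests that, on this test path at least, the game is not sitting anywhere near the link limit.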

Finally, we have the potential effect of driver revisions on the performance - sometimes users don't update their drivers (I'm one of them).


My testing shows essentially no difference between any tested driver revisions - the AMD results are within margin of error and the Nvidia results are remarkably consistent (aside from maximum recorded frametime). There really is no reason to update the GPU driver to play this game as long as you have one of the recent versions.
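Since "within margin of error" is doing a lot of work in comparisons like this, here is a rough sketch of one way to sanity-check it when you have several repeat runs per configuration. The rule used (difference in means no larger than the sum of the two sample standard deviations) is a crude heuristic of my own choosing rather than a proper statistical test, and the fps values are made up purely for illustration.

```python
from statistics import mean, stdev


def within_run_variation(runs_a, runs_b):
    """Return True if two configurations look indistinguishable.

    runs_a, runs_b : average fps from repeated runs of each configuration
                     (at least two runs each, needed for stdev).
    Heuristic only: compares the gap between the means against the
    combined run-to-run spread.
    """
    gap = abs(mean(runs_a) - mean(runs_b))
    spread = stdev(runs_a) + stdev(runs_b)
    return gap <= spread


# Hypothetical repeat runs for two driver versions (not my measured data).
driver_old = [60.1, 59.8, 60.4]
driver_new = [59.9, 60.2, 59.6]

print(within_run_variation(driver_old, driver_new))
```

With only two or three runs per configuration the spread estimate is itself shaky, so a check like this is best treated as a prompt to run more passes rather than as a verdict.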

Potential Bugs...

One thing I did want to test was Hardware Unboxed's claim* that Ultra shadow settings negatively impact performance on Nvidia's cards more so than on AMD's cards.

*I wasn't able to find it in their bazillion videos they have put out addressing Starfield so can't link it...


After my testing, I can confirm this observation - I saw the average fps increase by around 30% for both Nvidia cards when reducing this setting from Ultra to High whereas I only saw a 12% increase for the AMD card. Additionally, the minimum fps increased by 50 - 60% on the Nvidia cards but only around 15% on the AMD card.

To me, this points to a driver problem, as opposed to the game itself - i.e. the way the Nvidia driver handles this setting looks to be broken. As such, it is not worth keeping at Ultra when using Nvidia hardware - though I've already addressed this in my optimised settings.

Additionally, Alex over at Digital Foundry has seen that having too many CPU cores and/or hyperthreading enabled will actually lessen performance by around 10%!

Now, I have not had the chance to test this but it may help to explain some of my results compared to other outlets which are reporting worse numbers than I am at the same quality settings - though this could also be due to the aforementioned streaming dips and a more challenging GPU testing scenario (though, really, the differences are small!)...

Conclusion...

I've been enjoying my time in the game thus far and have only encountered two bugs, neither of which was game-breaking - one was a slight inconvenience and the other may have been purposeful but felt like a bug...

It's clear that Starfield requires decent hardware to get running well. However, the three systems I'm using are firmly in the mid-range territory of today's hardware generations. Are many gamers playing on older systems/hardware? Yes! However, I don't think that this should restrict developers from targeting "current" mid-range systems for their medium quality settings and a little tweaking of settings will yield pretty decent performance gains in this title, which is how it used to be in the past!

So, yes, Todd Howard may have been a little insensitive in the way he phrased his answer to that question posed to him in the introduction to this blogpost, but he also isn't wrong, either. A PC with an AMD R5 5500/R5 5600, 16 GB DDR4, a cheap 1 TB SSD and an ~RTX 3070-equivalent card (e.g. an RX 7600 or RX 6700) will let you play at 60 fps at 1080p or at a higher resolution with upscaling.

I don't think that's a huge ask for a new game in late 2023!

Bummer about the BG3 save. :(

Agreed, it's not unreasonable to expect someone trying to play this on PC to have a machine at LEAST as performant as a Series X (or realistically, with Windows overhead BS, somewhere around Series X + 25%).

I'm very interested in Alex's findings re HT (Intel) on/off versus SMT (AMD) on/off. As well as e-cores on/off.

Yeah, I would like to test this out. Though if his findings are accurate, I perhaps don't have the equipment to do so.

Previously, whenever I've tested this based on community observations, it's never panned out but perhaps that was mostly on older CPU architectures and I was really on new ones...