
Yeah, I know this is getting bothersome and tiring, but as we finally approach the release date of Forspoken - the first game to include DirectStorage as a way of managing data from the storage device on your PC - I've noticed a trend of people posting about the topic in a very uncritical manner.

I don't hate it, I just don't like it...

Look, by now you probably have the feeling that I have some sort of vendetta or family blood feud with DirectStorage (DS for short); I don't! I really don't. But I do think these development resources could have been better spent elsewhere. Developers could be demanding more system RAM and making use of more CPU cores to improve data loading, rather than requiring more expensive NVMe SSD technology and leaning on the already fully taxed GPU. The PC is not a console and shouldn't have console-focussed technologies applied to it, because their purposes and strengths are largely very different.

I also do not like how this technology has been presented... with very misleading comparisons and obfuscated improvements (as I detailed last time).

This is, once more, one of those times.

*Why all the fuss?*

Avoca-doh's...

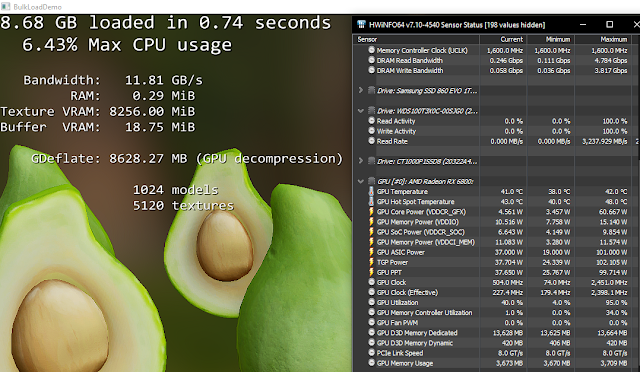

The thing that got me to write about this subject again was the recent resurgence of the Avocado bulk load demo (put out last November) among the tech media and the tech-adjacent people I have ended up in a circle with on Twitter. Oh, and another follow-up post from Tom's Hardware regarding Arc performance.

The trouble here is that I don't think these numbers mean what people think they mean.

The first, and most important, caveat is that the bulk load demo is not doing any bulk loading of different data. It's just a demo of how to implement the feature/API calls in a shipping piece of software. There is just a single model and (as far as I can see) two textures for that model, which are batch loaded multiple times in a (presumably) single request.

As a result there are several things that stand out to me:

- The whole programme, plus model and textures, clocks in at around 30-40 MB, at most.

- Many SSDs use a DRAM read cache. Some do not.

- System RAM is still used in this demo.

So, what is actually being measured here?

It's certainly not moving 8 GB of data into the VRAM (based on my 16 GB buffer). What it is doing is moving the repeatedly requested compressed files into RAM and then shoving them onto the VRAM/GPU for decompression.

*Seems my SN750 and RAM are working at the correct bandwidth... but what's with that VRAM value?*

It's easy to show huge bandwidth numbers when you're quoting the final, uncompressed amount of data. First off, the reported numbers are just theoretical: they are the amount of uncompressed data delivered divided by the time taken to move the compressed data and decompress it. To be more accurate, the values should be labelled "effective" bandwidth instead of being presented as an absolute number.

My own calculations put the actual amount of data transferred from the storage device at around 2.4 GB (taken as an average across three different drives). If you have less VRAM, the number of textures transferred is scaled down accordingly. My RTX 3070, for example, sees only 2560 textures transferred.
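To make the "effective" versus raw distinction concrete, here's a minimal Python sketch. It assumes the headline figure is the decompressed output divided by the elapsed time. It also treats roughly 8 GB as that decompressed total, set against the ~2.4 GB I measured coming off the drive. The function name `bandwidths` and the 2-second load time are hypothetical placeholders, not measured results or anything from the demo itself.

```python
def bandwidths(uncompressed_bytes: float, compressed_bytes: float, seconds: float) -> dict:
    """Compare 'effective' and raw throughput (GB/s) for a single load.

    Assumption: the demo's headline number divides the *decompressed* output
    by elapsed time, whereas 'raw' divides the bytes actually read from the
    drive by that same time.
    """
    gb = 1e9
    return {
        "effective_gbps": uncompressed_bytes / seconds / gb,  # headline-style figure
        "raw_gbps": compressed_bytes / seconds / gb,          # what the SSD really delivered
        "ratio": uncompressed_bytes / compressed_bytes,       # inflation factor from compression
    }

# ~8 GB decompressed vs ~2.4 GB read; the 2.0 s duration is a made-up placeholder.
r = bandwidths(8e9, 2.4e9, 2.0)
print(r)
```

Whatever the real elapsed time turns out to be, the headline number is inflated relative to what the drive actually delivered. The inflation factor is exactly the compression ratio, about 3.3x with these figures.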

The problem I have with people really loving these high numbers is four-fold:

- The SSD doesn't need to look up multiple addresses for myriad files spread across the storage media, so the latency and penalty of doing so is not accounted for here.

- Once read, the DRAM read cache on the SSD will likely be storing these files - they are no longer being read from the disk at this point.

- The data is still being written into system memory before being copied to VRAM and then wiped - an egregious waste of RAM. If the data were kept there, subsequent reads in the repeating tests could be MUCH faster than they are.

- For multiple copies of the same object with the same textures, there is no reason or need to even perform this (though, of course, I appreciate this is a concept demo, as I stated above).

What we are essentially seeing here is a scenario which will never play out in a real-world application.

*The Crucial P1 performs in line with its specs relative to the WD SN750 (above)...*

For points 1 and 2: there are many penalties when accessing large amounts of random data from a storage device. Inefficiencies such as hitting a DRAM or controller bottleneck are not accounted for or addressed, due to the nature of this demo. As a result, the numbers we're seeing are inflated "best case scenarios".
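A crude way to see why one batched request flatters the drive is to charge each request a fixed access latency on top of the streaming time. The sketch below is a simplified model of my own, not how NVMe actually schedules I/O. The 100 µs latency, 3 GB/s bandwidth and 10,000-request count are illustrative assumptions. Queue depth is approximated as requests being serviced in parallel "waves", and `load_time` is a hypothetical helper name.

```python
import math

def load_time(total_bytes: float, n_requests: int, latency_s: float,
              bandwidth_bps: float, queue_depth: int = 1) -> float:
    """Estimated seconds to load `total_bytes` split across `n_requests` reads.

    Simplified model (an assumption): requests are issued in waves of
    `queue_depth`, each wave pays one `latency_s`, and the payload then
    streams at `bandwidth_bps`.
    """
    waves = math.ceil(n_requests / queue_depth)
    return waves * latency_s + total_bytes / bandwidth_bps

TOTAL = 2.4e9   # bytes actually read, per the measurement above
BW = 3e9        # hypothetical sustained bandwidth, bytes/s
LAT = 100e-6    # hypothetical per-request access latency, seconds

batched = load_time(TOTAL, 1, LAT, BW)                           # the demo's situation
scattered = load_time(TOTAL, 10_000, LAT, BW)                    # many separate files, QD1
scattered_qd32 = load_time(TOTAL, 10_000, LAT, BW, queue_depth=32)

for name, t in [("batched", batched), ("scattered, QD1", scattered),
                ("scattered, QD32", scattered_qd32)]:
    print(f"{name:16s} {t:.4f} s -> {TOTAL / t / 1e9:.2f} GB/s")
```

Parallelism softens the blow, but the scattered case never matches the batched one. Real game assets also vary in size and physical location, which this model ignores entirely.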

The DRAM-less SN570 shows appreciably worse performance. Despite the numbers in HWInfo not making any sense* for the smaller amount of data transferred, we observe performance degrading with each successive test cycle until it bottoms out. That's still better than the Crucial P1 but, again, this is one of the huge pitfalls of a non-standardised hardware platform.

*I double-checked in CrystalDiskMark and can see that the drive is behaving normally, but the numbers do match up with Tom's Hardware's findings of a large data transfer. That potentially points towards a controller limitation and not just a cache issue.

Additionally, on the decompression side of things, virtually no assets in a released game will be this efficient. They will also not all be neatly decompressed in the same timeframe (due to size, complexity or whatever) across the GPU cores in the way these textures are. So, we are likely to see sizeable deviations from these idealised "effective bandwidth" numbers.

*But the DRAM-less SN570 has relatively terrible performance, in comparison, as the SLC cache fills up...*

Point 3 is the most egregious from my point of view: the data has already been copied to RAM, so there is no reason to discard it when it may be required again. It's easily provable that RAM has much faster read and write speeds than even the fastest PCIe gen 5 SSDs (and lower latency, to boot!)... in my book, it's a literal crime to focus on moving assets directly from storage to VRAM like this, ignoring one of the cheapest and most performant parts of the PC in the process.
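What I'm arguing for is essentially a cache in system RAM: read an asset from storage once, keep it around, and only evict it when a memory budget is exceeded. Below is a minimal Python sketch of a least-recently-used (LRU) cache built on that assumption. `RamAssetCache`, `fake_storage` and the texture file names are hypothetical stand-ins, not part of DirectStorage or the Avocado demo.

```python
from collections import OrderedDict
from typing import Callable

class RamAssetCache:
    """Hypothetical LRU cache that keeps loaded asset bytes in system RAM.

    `read_from_storage` stands in for whatever actually reads a file from
    the SSD; it is only called on a cache miss.
    """

    def __init__(self, read_from_storage: Callable[[str], bytes], budget_bytes: int):
        self._read = read_from_storage
        self._budget = budget_bytes
        self._used = 0
        self._entries: "OrderedDict[str, bytes]" = OrderedDict()

    def get(self, path: str) -> bytes:
        if path in self._entries:
            self._entries.move_to_end(path)  # hit: mark as most recently used
            return self._entries[path]
        data = self._read(path)              # miss: go to storage exactly once
        self._entries[path] = data
        self._used += len(data)
        # Evict least-recently-used entries until back under budget,
        # but never evict the asset we just loaded.
        while self._used > self._budget and len(self._entries) > 1:
            _, evicted = self._entries.popitem(last=False)
            self._used -= len(evicted)
        return data

reads = []  # records every trip to "storage"

def fake_storage(path: str) -> bytes:
    reads.append(path)
    return b"\0" * 1024  # pretend every texture is 1 KiB

cache = RamAssetCache(fake_storage, budget_bytes=4096)
for _ in range(100):  # request the same two textures over and over
    cache.get("avocado_basecolor.dds")
    cache.get("avocado_normal.dds")
print(len(reads))  # 2
```

A real engine would cache at a coarser granularity and would have to choose between keeping compressed or decompressed copies. The principle is the same either way: repeated requests stop touching the SSD at all.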

*Finally, the SATA-based 860 EVO did an admirable job... but it just isn't designed for batch requests - though at least its DRAM cache made sure its performance was consistent.*

...and your little dog, too!

My mind boggles at people gawping and gaping at the huge (non-real) numbers in a demo that is only meant to help developers implement this function in their software. A little critical thinking would render these test results a little less impressive...

However, even ignoring that, I am again brought back to what I wrote last time:

"The last thing to add to this equation of confusing decision-making is that SSD performance can vary wildly between drives and even for the same drive depending on how full it is! With drives filled to 80% capacity losing up to 30% random read performance for queue depths larger than 64 and 40% sequential read performance for queue depths of less than 4. Games are big, they take up space!"

And the first time:

"The PC gaming experience is floundering because of this hyper-optimised console crap and it's getting really frustating. Requiring an SSD to cover up your lack of using system memory? It's crazy! Once games have standardised 32 GB of RAM, it's there - for EVERY game. You can't guarantee the read throughput of an SN750 black (gen 3) or an MP600 (gen 4) or ANY SSD. In fact, the move to QLC NAND for higher densities completely destroys this concept.

Worse still, taking that degradation into account, you can't even fill up your SSDs! You need to leave them at around 70% (depending on NAND utilisation, DRAM presence, controller design, etc, etc...)."

We can add to those observations the inability of DRAM-less SSDs to maintain consistent performance under heavy load and, more worryingly, increased wear on the SSD due to the heavier access. That is something Phison wants to mitigate with "special" firmware on specific drives, which just screams to me "these will be more expensive for no reason"...
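To put the fill-level percentages from the first quote above into concrete terms, here's a trivial Python calculation. The baselines (3,000 MB/s sequential and 400,000 random-read IOPS) are hypothetical round numbers, not any specific drive's spec. The losses are the worst-case "up to" values quoted for an 80%-full drive.

```python
def degraded(baseline: float, loss_fraction: float) -> float:
    """Throughput remaining after losing `loss_fraction` of `baseline`."""
    return baseline * (1.0 - loss_fraction)

# Hypothetical baselines for an empty drive.
SEQ_MBPS = 3_000      # sequential read, MB/s (the queue depth < 4 case)
RAND_IOPS = 400_000   # random read, IOPS (the queue depth > 64 case)

seq_full = degraded(SEQ_MBPS, 0.40)    # up to 40% sequential loss
rand_full = degraded(RAND_IOPS, 0.30)  # up to 30% random loss
print(f"sequential: {SEQ_MBPS} -> {seq_full:.0f} MB/s")
print(f"random:     {RAND_IOPS} -> {rand_full:.0f} IOPS")
```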

*Last time I published this comparison, DDR5 wasn't even present. Now? There's even less reason to implement DirectStorage...*

Adding fuel to the fire: DDR5 is now in wide circulation and adopted by both CPU manufacturers. The extra bandwidth and, in theory, denser DIMMs*, coupled with the proliferation of CPU cores even at lower price points**, mean that the window for DirectStorage to be genuinely relevant (if it ever could be) should now be essentially closed. This is a technology developed with standardised, limited hardware in mind (like the consoles). A low-end PC will not have a super-duper expensive NVMe drive, and instead of pushing developers to require more RAM (a one-time, inexhaustible purchase!), Microsoft is trying to implement a technology that is backwards-looking.
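For a sense of scale on that extra bandwidth, here's a back-of-the-envelope Python comparison of theoretical peak figures. It assumes a typical dual-channel desktop setup, where each DDR5 DIMM has a 64-bit data path split into two 32-bit subchannels, and a PCIe x4 link using 128b/130b encoding. DDR5-5600 is just an example speed, the helper names are hypothetical, and real-world throughput on both sides will come in lower than these peaks.

```python
def ddr_peak_gbs(mega_transfers_per_s: int, channels: int = 2,
                 bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (64-bit = 8 bytes per channel per transfer)."""
    return mega_transfers_per_s * 1e6 * bytes_per_transfer * channels / 1e9

def pcie_peak_gbs(giga_transfers_per_s: float, lanes: int = 4) -> float:
    """Theoretical peak PCIe bandwidth in GB/s per direction.

    Accounts for 128b/130b line encoding (PCIe 3.0 and later) but ignores
    packet and protocol overhead.
    """
    return giga_transfers_per_s * 1e9 * (128 / 130) * lanes / 8 / 1e9

ram = ddr_peak_gbs(5600)   # dual-channel DDR5-5600
gen3 = pcie_peak_gbs(8)    # PCIe 3.0 x4, e.g. an SN750
gen4 = pcie_peak_gbs(16)   # PCIe 4.0 x4
gen5 = pcie_peak_gbs(32)   # PCIe 5.0 x4

for name, bw in [("DDR5-5600 x2", ram), ("PCIe 3.0 x4", gen3),
                 ("PCIe 4.0 x4", gen4), ("PCIe 5.0 x4", gen5)]:
    print(f"{name:13s} {bw:6.2f} GB/s")
```

Even on paper, dual-channel DDR5-5600 offers over five times the peak bandwidth of a PCIe 5.0 x4 link, before latency is even considered.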

Still, at the beginning of this year I made what I consider to be a bold prediction: that new games will begin recommending 32 GB of RAM as standard... let's see if it comes true.

Because, if it doesn't, we will be in a situation where we are pushed to buy much more expensive storage than is necessary, worry about SSD wear, and be made to juggle games around on various drives in order to get the best performance... and I just don't want to see that happen.

*Though this was a promise that has not come to pass, thus far...

**The i5-13400 is now a 6+4 core part!!
