Ever since I performed the comparative analysis of the 60 compute unit (CU) RX 7800 XT with the RX 6800, one of the background thoughts running around my head has been the question of whether Navi 33 - the monolithic version of RDNA 3 - does or doesn't have an uplift over the equivalent RDNA 2 design.

Since I purchased one each of the RX 6650 XT and RX 7600 XT for the PS5/Pro simulations, I now have the tools available to do the full analysis of RDNA 2 versus RDNA 3! So, let's jump in...

Reminiscing...

Last time, I mentioned that reviewers have seen essentially no performance uplift in their testing between the RX 6650 XT and RX 7600 (on the order of around 4% or approx. 3-5 fps). I spoke about my interpretation of the results I had obtained - trying to square them with the reporting performed by various technical outlets on the lacklustre RX 7600 performance relative to the RX 6650 XT - and came to the conclusion that the front-end clock scaling was giving a big boost on the Navi 31 and 32 parts, and that its absence was the cause of the lack of uplift on the Navi 33 parts...

"Additionally, the RX 7600 appears hamstrung by the lack of increased front-end clock - perhaps due to power considerations? - and it is the choice to decouple front-end and shader clocks that seems to me to be the biggest contributor of RDNA 3's architectural uplift as it is this aspect which appears to allow the other architectural improvements to low-level caches and FP32 throughput to really shine."

However, later on, in the comments, I received some challenging thoughts from user kkeubuni speaking about the testing from ComputerBase, who claimed: "RDNA 3 is simply not faster with the same number of execution units without a clock jump" and "Depending on the rounding, RDNA 3 on the Radeon RX 7600 is between 7 and 8 percentage points faster in the course than RDNA 2 on Navi 23".

I replied as follows:

From my perspective, the 6650 XT is an overclocked 6600 XT - with the primary difference being memory clock and TDP. Looking at the steady-state core clock frequencies of reviewed cards over on TechPowerUp, the 6600 XT was already around 2650 MHz using the partner cards. In comparison, the singular tested 6650 XT reached 2702 MHz - a 25 - 50 MHz increase.

We would not expect any performance increase in this scenario but we did, in fact, see a 16-20% performance increase between the 6600 XT and 6650 XT - a fact acknowledged by ComputerBase (page 2).

From what I can see, this performance increase was solely due to the increase in memory frequency and the increase in board power (160 W vs 176 W). We also observe this in my testing above with the 7800 XT where I increased the power by 15% and saw an associated 6% uplift in Metro Exodus.

The conclusion of this information (for me) is that the 6600 XT/6650 XT core is working at the peak of its frequency curve and will not be efficient as it is pushed further. The other conclusion is that the card is likely power-limited in both configurations.

This means that it should, in my opinion, be tested below this core clock frequency to ensure that power draw is not the limiting factor as we are aware that even if the core frequency is reporting the same value, power limitations will reduce performance.

So, given these data, I do not believe that the RX 7600 has an 8 - 9 % performance gap, clock for clock, over the RX 6650 XT. I think that testing, similar to what I have performed here, for the reasons outlined above, needs to be done to definitely show there is a substantial uplift...

So, that's why we're here today: to show, using my test methodology, how Navi 33 (RX 7600 XT) holds up against Navi 23 (RX 6650 XT) and to test my hypothesis that the better performance I observed in Navi 32 over Navi 22 can be attributed to the front-end clockspeed increase...

Jumping Straight In...

As with last time, I'll start by exploring the limits of the cards in power and memory scaling - to see what is dictating the performance. The test system remains the same as last time, running on the latest build of Windows 10, but the Adrenalin version has been updated to 24.9.1.

- Intel i5-12400

- Gigabyte B760 Gaming X AX

- Corsair Vengeance DDR5 2x16 GB 6400

- Sapphire Pulse RX 7800 XT

- XFX Speedster SWFT 319 RX 6800

- Sapphire Pulse RX 7600 XT

- Gigabyte RX 6650 XT

One thing I will note before starting is that the RX 7600 XT released after my prior blogpost and we have data showing a more substantial improvement* for that card over the RX 7600/6650 XT, but I have not seen anyone actually discuss the reasons why. This card increased the VRAM amount whilst keeping the memory bus the same width, and also increased the board power from 165 W to 190 W. This was essentially addressing the same point/problem that I noted above about the RX 6600 XT - the card was primarily power limited.

*ComputerBase reports 17% raster and 37% for ray tracing over the RX 6650 XT...

|

| The effect of raising the board power limit whilst keeping the core and memory at stock settings... |

As we can see above, simply raising the power limit in Adrenalin on both RDNA 3 cards saw a small but essentially linear increase in performance, though the RX 7600 XT had a shallower gradient than the RX 7800 XT, meaning that it was nearer the performance limit for board power than the Navi 32 card. Both cards saw around a 4% increase - corresponding to a measly 1.5 and 3 fps increase, respectively. Still, that's a ~4% increase on a card that was already 17% faster than the RX 6650 XT, pushing it to almost 18% in total...

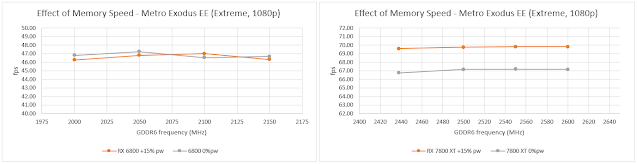

Looking at the scaling of memory frequency, we see a similar situation playing out - neither RDNA 2 card really performs better - in fact, the RX 6800 actually displays a degradation in performance. The 7800 XT also doesn't improve with increased memory speed but the 7600 XT gets another 1-2 fps (or +2%) on the stock frequencies.

|

| The RX 7600 XT is still power-limited, despite its base +15% increase over the RX 7600... |

|

| Neither the RX 6800 nor the 7800 XT saw any benefits from memory scaling but the latter was also power-limited at stock... |

It's clear that my method of comparing the architectures at a lower frequency is most likely going to be the correct way forward - given that any power limitation will be far above what is required in that testing regime...

|

| As a sanity check: there is no performance difference at 2050 MHz when increasing power and less than 1 fps with memory scaling (not that we'll be increasing bandwidth in the following tests)... |

Now that we've established that the RDNA 3 cards ideally should not be tested at stock for this comparison, let's move onto the actual games whilst controlling the core frequency of each card to approximately 2050 MHz - the same value I settled on last time - with the power limits increased for all cards.

Benchmarking...

The first titles in our test suite, Avatar and Returnal, show essentially identical performance on the Navi 33 and 23 cards; the RX 7600 XT shows a slight bump in average fps in Returnal with ray tracing enabled but this 3 fps increase is not reflecting the much larger 14% increase (10 fps) observed for the higher tier cards.

This result is particularly surprising because we might expect to see an uplift from both the increased VRAM and power limits on the 7600 XT when using the ultra setting in Avatar.

Returnal, on the other hand, uses settings which shouldn't trouble the VRAM on the 6650 XT...

Such a result gives credence to the hypothesis, though it's too early to lean into a conclusion!

| These two titles show no architectural uplift for Navi 33... |

Cyberpunk 2077 gives us a more nuanced result. At 1440p, with RT enabled, the RX 6650 XT struggles with its 8 GB of VRAM - as observed in the minimum fps value. Otherwise, the performance uplift observed for the 7600 XT is outdone by that of the RX 6800 to 7800 XT. There clearly is an effect but one of the problems, here, is the low absolute fps values cause issues with trying to understand the real level of performance uplift: i.e. small numbers mean a single fps has a large effect on percentage improvement.

At this point, for this title, I would say that there is an improvement in performance but it's less than for the Navi 32 die. An effect of the increased frontend clock frequency?
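The small-number effect mentioned above can be illustrated with a minimal sketch. The baselines used here are purely hypothetical values chosen for illustration, not measurements from this testing, and the function name is my own:

```python
def one_fps_as_percent(baseline_fps: float) -> float:
    """Percentage change that a 1 fps difference represents at a given baseline."""
    return 100.0 / baseline_fps


# Hypothetical baselines (illustration only, not measured data)
for fps in (20, 40, 100):
    print(f"{fps} fps baseline: 1 fps = {one_fps_as_percent(fps):.1f}%")
```

The lower the absolute frame rate, the more a single frame of run-to-run variance inflates or deflates the apparent percentage uplift.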

|

| The RX 6650 XT's 8 GB framebuffer displays its inadequacy in Cyberpunk at 1440p... |

Both Hogwarts and Spider-man tell a similar story: gains, but not as much. The one exception is for Spider-man at 1440p with RT enabled. The RX 7800 XT begins to run up against a CPU bottleneck, reducing the overall performance advantage it has over the 6800. However, in this title, the 7600 XT really outshines the 6650 XT in ray tracing, coming out with a clear win (+14%). Unfortunately, this is most likely due to the 6650 XT suffering from a VRAM limitation as the game registers around 7 - 8 GB VRAM usage (per process) on the 16 GB cards at 1440p resolution.

In fact, re-testing at 1080p with ray tracing enabled, we get 81 fps vs 67 fps average for the 7600 XT and 6650 XT, respectively. This increases the gen on gen increase from +14% at 1440p to +22% at 1080p which doesn't really make much sense when the raster performances are 114 fps vs 109 fps average, respectively, which correlates to a +5% gen on gen increase at 1080p compared to a +4% increase at 1440p - a very consistent number!
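For reference, the gen-on-gen percentages can be reproduced from the average frame rates with a short sketch. The inputs are the rounded 1080p averages quoted above; the function name is just an illustrative choice:

```python
def uplift_percent(new_fps: float, old_fps: float) -> float:
    """Gen-on-gen uplift of new_fps over old_fps, in percent."""
    return (new_fps / old_fps - 1.0) * 100.0


# Rounded 1080p averages quoted above (RX 7600 XT vs RX 6650 XT)
rt = uplift_percent(81, 67)        # ray tracing enabled
raster = uplift_percent(114, 109)  # ray tracing disabled

print(f"RT uplift:     {rt:+.1f}%")
print(f"Raster uplift: {raster:+.1f}%")
```

With the rounded averages this works out to roughly +21% for RT and +5% for raster. The small gap to the +22% quoted above presumably comes from rounding of the averages, though that is an assumption.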

|

| While the frametime graph (not shown) of Spider-man did not display an outsized number of frametime spikes due to VRAM issues, the game is likely using lower-quality stand-ins during this testing |

Delving deeper into the data monitoring, we can see that when disabling ray tracing, we immediately gain back around 1 GB of VRAM! This is then quickly re-filled by all the assets that the game actually wants in VRAM, though, given that we routinely see 7.3+ GB used on the 7600 XT for the process, with 10 GB total VRAM usage for the system, we know we're actually missing out on around 3 GB of data that's being shuffled back and forth to system memory through the PCIe bus when using the RX 6650 XT.

Given this additional data - we have to discount the large increase I've recorded above for the 7600 XT over the 6650 XT when using RT effects at both resolutions in Spider-man and instead focus on the raster performance.

|

| Switching off RT shows that the game is VRAM-starved, even at 1080p... |

Hogwarts is another graphically demanding title (it's also demanding on the processor, but we're not hitting a bottleneck in this test for either the CPU or VRAM). Due to the amount of performance loss from non-RT to RT settings for the 7800 XT and RX 6800, I decided not to bother to test the Navi 23 and 33 parts - the numbers would have just been too low...

|

| It's hard to square the Starfield performance at 1440p, maybe someone in the comments can... |

Performance in Starfield is very similar for both sets of GPU comparisons. At 1440p, both RDNA 3 cards show a much larger advantage over their RDNA 2 counterparts than they do at 1080p. The reason is not really clear to me. It could be the extra FP32 throughput in the improved Compute Unit design, though.

On the contrary, Alan Wake 2 tells a similar story to that of Spider-man - a VRAM limit and PCIe traffic when testing at 1080p, High settings creates the impression that the 7600 XT is relatively more performant than the 7800 XT, gen-on-gen. However, if we drop the quality down to the Low setting, we see the gap between N33 and N23 narrow to match the higher tier cards at around +18%.

|

| Low settings are "fairer" on the 8 GB RX 6650 XT... |

Last, but not least, Metro Exodus takes a break from helping to monitor power and memory frequency scaling to be used as an actual benchmark.

In this ray tracing-focussed title, we observe very strong scaling based both on number of CUs as well as architectural improvements. In fact, the 7600 XT's relative increase in performance over its predecessor exceeds that of the 7800 XT - though there is a very real effect from the small numbers involved, I think it's not enough to explain the actual relative results. N33 appears stronger vs N23 than N32 vs N22...

I have also checked the VRAM usage for the process as I did with Spider-man but Metro: Exodus is a relatively lightweight application and, even if we decided (for whatever reason) that the "Extreme" setting was running over the framebuffer limit for the 6650 XT, it most certainly isn't for the "Ultra" setting - which not only drops the process VRAM amount but also the total used for the card!

Since we see a similar uplift at the Ultra setting, this appears to be a title for which Navi 33 performs as well as, if not better than, Navi 32.

|

| Unfortunately the benchmark at high settings broke for the 7600 XT but the other results are valid... |

So wrapping up, in game applications we have some titles where there is no performance improvement for Navi 33, some where there is, but less than observed for Navi 32, and one title (Metro) where the improvement is larger than Navi 32.

Architectural Measurements...

Once again, I'll be taking advantage of the wonderful tests authored by Nemez to perform these microbenchmarks.

|

| These tests measure the competencies of the core architecture, so we'd expect similar results gen on gen... |

Similar to the 7800 XT, the 7600 XT also displays the same competencies over its generational predecessor. The 6650 XT wins out in FP16 Addition and Subtraction, just as the RX 6800 did. There really are no surprises, here, and we shouldn't expect any! These tests do not stress anything in the design of the chip, only assess the CU core's ability to perform work.

The RDNA2 parts consistently perform strongly in Division operations, with the only very slight difference coming out in the Inverse Square Root test - with the RX 6650 XT drawing equal with the RX 7600 XT, whereas the RX 6800 won-out against the RX 7800 XT in FP16...

|

| Finally! A difference! |

Cache and memory bandwidths have the same trends as for the higher tier parts. The larger L0 and L1 caches in the RDNA3 architecture show their hands in both the N32 and N33 parts, and this time both N23 and N33 have the same L2 and L3 cache sizes.

What's interesting, though, is that contrary to N32 vs N22, past 6 MiB sizes, up to 64 MiB, there is nowhere near as large of a split in performance between N23 and N33. The bandwidth available to the RX 6800 trails off at around 8 MiB, which corresponds to 2 MiB into the L3, even though that cache is nowhere near being saturated at this point in the test. Meanwhile, despite its smaller L3 cache, the greater bandwidth of the RX 7800 XT* chugs through until it suddenly hits a wall at 64 MiB - i.e. the L3 cache size - whereas the 128 MiB of L3 on the RX 6800 doesn't manage as well when even partially saturated. AMD really made a big improvement in their interconnect technology and the "fan-out links"...

*AMD likes to espouse the "Up to 2708 GB/s" of the improved Infinity cache...

Thus, the monolithic N33 suffers the same lack of bandwidth with higher data requirements as the prior generation.

|

| The larger L0 and L1 sizes and Infinity Fanout show their mettle in this chart... |

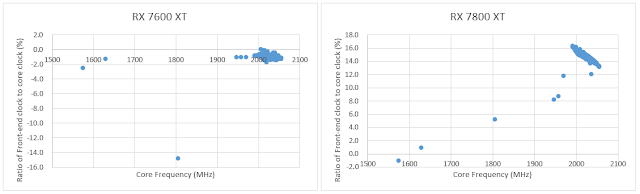

Moving onto the operating frequencies, we reconfirm that the monolithic N33 does not work in the same manner as N32 - the front-end clock frequency appears to still be decoupled from the core frequency* but it actually has a slight deficit! We're looking at a ~1% drop in front-end (2050 MHz) versus the core (2080 MHz)... This is in-line with the results obtained by Chips and Cheese in their testing of Navi 32 vs Navi 33.

*It is at least measured separately - whether this is a real difference between RDNA2 and 3 or not is another question!

With my limited knowledge of GPU design and dependencies, I do have to wonder whether the increased front-end clocks are actually tied to that Infinity Fanout interconnect? Is it just one of the supporting functions which needs to exist to pump the data back and forth?

|

| Core clocks for N23 vs Front-end/Core on N33... Taken in Metro Exodus Enhanced edition... |

|

| Core clocks for N22 vs Front-end/Core on N32... Taken in Metro Exodus Enhanced edition... |

|

| N33 has a slight deficit in front-end frequency compared to core frequency, whereas N32 has a big uplift in the front-end... |

Conclusion...

Taking stock of all these data points is a bit daunting. The added complication of working out precisely when and where the 6650 XT is being limited by its 8 GB VRAM is also a bit of a challenge - though I wasn't going to buy myself an 8 GB RX 7600 just to make the job easier. Screw that!

The microbenchmarks show that the main differences between N22/N32 vs N23/N33 are the improved L3 bandwidth and the front-end frequency in the N32 design. All other architectural differences are common between the two RDNA3 cards.

|

| Discarding tests was painful but had to be done once the limitations became clear... |

Looking at the overall comparison of improvement in gaming, Navi 32 has an overall higher uplift across the majority of titles (when the aforementioned 8 GB isn't getting in the way of testing!). This is typically on the order of around 3-5%, though we do see titles like Hogwarts which benefit to the tune of 10%. In contrast, Metro Exodus doesn't perform like any other title and it's possible that the increases in L0 and L1 cache size have an outsized effect in the N23/N33 comparison...

Going back to the reason for this blogpost, does Navi 33 have less of a performance improvement than Navi 32? I can safely say that the answer is "Yes, in the majority of cases".

Was I correct in thinking that Navi 33 didn't have a performance uplift over Navi 23? "No, but with some caveats".

I was correct in my prediction that the RX 7600 was power limited, like the RX 6600 XT was, and that ComputerBase was not testing in a correct environment. Additionally, in properly (or as close as is possible) controlled testing conditions, we cannot observe the performance uplift noted in their subsequent testing of the RX 7600 XT of around 17% in raster performance. Therefore, the uplifts observed are not architectural in nature!

If we take an average of the valid performance uplift numbers above, we get an average of 1.13x uplift on N32 and 1.08x uplift on N33 - a 5% difference between the two RDNA3 designs. It appears that there is a real difference in the performance, though less than I thought, and what is quite surprising (at least to me) is that N33 is also very power- and bandwidth-limited, despite being monolithic in design and already having the ceiling of power and memory bandwidth increased substantially for the RX 7600 XT variant of the chip!
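For anyone wanting to check the arithmetic, here is a minimal sketch comparing the two averaged uplift factors quoted above. The variable names are my own, and the per-title numbers behind the averages are not reproduced here:

```python
# Averaged uplift factors quoted above
n32_uplift = 1.13  # Navi 32 over Navi 22
n33_uplift = 1.08  # Navi 33 over Navi 23

# Gap expressed in percentage points of uplift
gap_points = (n32_uplift - n33_uplift) * 100.0

# Gap expressed as how much larger the N32 factor is than the N33 factor
relative_gap = (n32_uplift / n33_uplift - 1.0) * 100.0

print(f"Gap in percentage points: {gap_points:.1f}")
print(f"Relative gap:             {relative_gap:.1f}%")
```

The "5%" figure is the gap in percentage points; expressed as a ratio of the two uplift factors, the gap is slightly smaller, at around 4.6%.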

However, taking those considerations mostly out of the picture the performance uplift due to architecture is quite weak - though not as weak as for the RTX 40 series!

RDNA3 feels like a side-step in the architecture's design and I'm struggling to see where AMD are heading for RDNA4. What other levers do they have to pull to wring more performance from the dual compute unit setup? They're already seeing poor power scaling, poor clock scaling, and poor FP32 scaling in this architecture, what's left? Well, I guess there's a plan for that!

I also am a bit pessimistic for Nvidia's next architecture. It feels like we're left waiting for ancillary technologies to catch up to the GPU cores we already have available in order to advance some more. Packaging, memory, and dedicated silicon seem to be the future for consumer GPUs but AMD are dragging their feet on that last point, while the memory makers have been dropping the ball for some time, now, leaving both Nvidia and AMD struggling to outfit their lower tier GPUs with more memory and higher bandwidth. Finally, the advanced packaging that can bring about scaling efficiencies is too power hungry and too expensive to bring to a whole GPU product stack, meaning that - once more - low end and mid-range products are likely to be left out in the cold when it comes to advancements.

To wrap up: RDNA 3's not a bad architecture - there clearly were gains to be had but RDNA 2 was so good that there just wasn't much room for the new architecture to really shine. I, personally, really like both the RX 7600 XT and 7800 XT - though the former is not the best value for money (much like its competition in the same price range).

I was wrong in thinking that there was no performance uplift on the monolithic RDNA3, but there is a difference to be seen and it seems to be most likely linked to the higher front-end frequency and memory bandwidth of the chiplet design.

BUT!

That's it for this series. I'll bring it back for RDNA 4. See you then!

It's always felt like NAVI31/32 chiplets just didn't pan out. "First chiplets GPU" teething pains? I get a feeling as you've outlined is exactly WHY AMD isn't planning to compete "at the high end" with RDNA4+, they put all their "high end" chips into a chiplets basket and it didn't work out, and rather than putting out another underwhelming gen on gen "high end" perf increase for RDNA4 they've just punted and gone back to the drawing board. RDNA4 will probably be monolithic (N43, N44, etc.) only, with no chiplets N41/N42.

What I'm left wondering is *where* they will go with a monolithic design. Okay, let's say they separate the clock domains for the front end, they can't replicate the infinity fanout bandwidth without having a huge infinity cache on the chip. I don't see them doing that due to die area.

They probably can't fix things with RDNA4, I would expect modest gains - maybe 10% with same CU count. Save money and resources and put ensign into RDNA5/UDNA1....