Recently, I've become a bit numb with all the leaks and predictions, stats, and manoeuvrings of the companies in the industry, trying to one-up each other, provide counter-intelligence and increase mindshare. We're in the middle of a storm more intense than any I can remember since the end of the 90s or very early 2000s... and even then not in every gaming sector and not in various hardware "genres".

However, what has been slowly coalescing in my mind is what *I* think are the limitations we're going to run into over the next couple of years.

Raytracing...

Yes, RDNA 2.0 and Ampere are meant to be ray-tracing powerhouses but the majority of us are not living in that world. Assuming that both Nvidia and AMD bring RT to the whole product stack (and sources point to this perhaps NOT being the case - at least for AMD), the majority of the consumer base will be buying into the equivalent of the RTX 3060 and RX 6600 XT levels of performance.

If I'm honest, that's probably ray tracing performance on-par with an RTX 2070.

Current demos of games running at 1080p or lower when running with RT enabled show us that there is a seismic shift in terms of what games are demanding of the hardware they will be running on. Yes, playing with RT on or off will have a big impact on your system requirements but this means that it will be a hugely limiting factor, even with the enhancements both next gen graphics card architectures will bring to the table!

If I'm honest, I'm thinking that RT implementations will limit game development more than supporting the current consoles... just my gut feeling after seeing the effects on the demos of Cyberpunk and WD: Legion... If next gen RT isn't 4-5 times better then it's come too early. - Duoae (@Duoae), July 14, 2020

Going into this next console generation, I thought I/O would be the limiting factor. I thought that Microsoft's decision to push developers to support the Xbox One base platform would be the biggest limiting factor because it would encourage low draw distances, small level sizes and sparse level spaces... but I've slowly been realising that either there's a literal 4x performance increase in ray-tracing for both graphics card manufacturers or designing games around ray-tracing is still before its time.

At the end of the day, the "next gen" demos we're seeing are all running in very low resolutions compared to the relative power of the hardware they're running on. Contrast the rumoured 72 CUs included in the high-end RDNA 2.0 die of the next gen AMD cards and you'll see that SONY's 36 CUs and even Microsoft's 52 CUs are relatively low-to-mid range. That puts the Xbox Series X at around 1.08x as powerful as an RTX 2080 Ti in terms of raytracing, if they're all run at the same frequency and it puts the PS5 at around the performance of the RTX 2070 Super* (again, at the same frequency), despite the relatively huge disparity in CU count.

*My count puts it at around 443 whereas Userbenchmark has the Super at around 444 in lighting. This is, I have to say, a very simplistic view of things and not likely wholly representative of the potential experience on one architecture to another...

But what are we looking at here in terms of the effects of those limitations? IMO, we're looking at reduced level sizes, level geometry and lighting effects because ray-tracing takes SO MUCH out of the graphical performance compared to loading times for the same things.

I/O...

You know, so many people are predicting the death of the PC and the hard disk drive (that's an HDD or spinning rust to the cool kids) due to the SSDs in the consoles... But I just don't see it. One reason for this is that the raw SSD speeds of the consoles are not really that impressive! That's 2.4 GB/s max throughput for the SX and 5.5 GB/s for the PS5. However, let's take a look at the interface there - PCIe Gen 3 has a max transfer rate of around 1 GB/s (one way) per lane. Gen 4 has a one-way transfer rate of around double that (2 GB/s), so all NVMe drives operating on 4x PCIe lanes are limited to a maximum transfer rate of 4 GB/s (Gen 3) or 8 GB/s (Gen 4).
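To make the interface maths concrete, here's a minimal sketch in Python. It only uses the rounded per-lane figures quoted above (real links lose a little more to protocol overhead), and the function and dictionary names are purely illustrative.

```python
# Approximate one-way PCIe throughput per lane in GB/s, as quoted in the text.
# These are rounded figures, not exact specification values.
PER_LANE_GBPS = {"gen3": 1.0, "gen4": 2.0}

# Raw (uncompressed) SSD throughput quoted for each console, in GB/s.
CONSOLE_RAW_GBPS = {"Series X": 2.4, "PS5": 5.5}


def link_ceiling_gbps(generation: str, lanes: int = 4) -> float:
    """Upper bound on the one-way transfer rate of a PCIe link."""
    return PER_LANE_GBPS[generation] * lanes


for gen in ("gen3", "gen4"):
    ceiling = link_ceiling_gbps(gen)
    for name, raw in CONSOLE_RAW_GBPS.items():
        verdict = "fits within" if raw <= ceiling else "exceeds"
        print(f"{name} ({raw} GB/s) {verdict} a {gen} x4 link ({ceiling} GB/s)")
```

On those numbers, the SX's raw rate sits comfortably inside a Gen 3 x4 link, while the PS5's raw rate needs Gen 4.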

However, that's not the real limiting factor that I'm thinking of in terms of I/O.

I have not seen a single PCIe Gen 3 NVMe drive manage to reach read speeds of 4 GB/s. One reason for that is overheads in the protocol and synchronous access on the SSDs themselves. The other reason is that the speed of an SSD is primarily limited by its controller.

It doesn't matter whether the NAND chips are "fast", whether they are SLC/MLC/QLC or either of the latter with an SLC cache; whether they are using PCIe Gen 3 or Gen 4 connections to the CPU or chipset. If the integrated on-board controller does not support a given amount of access, everything else is a moot point.

|

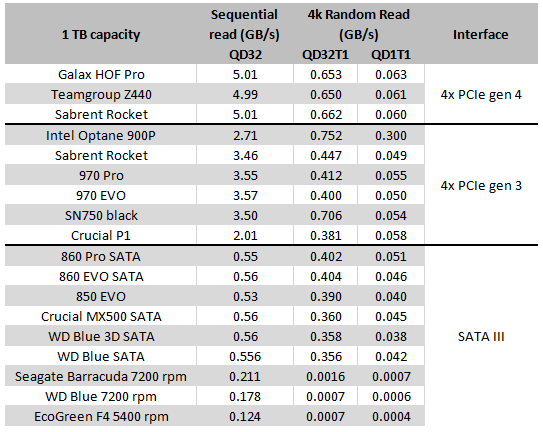

| Comparison of controller performance relative to drive performance... from Anandtech. |

Here you can see that an E12 controller, despite being on an older generation card and interface, has almost equivalent performance to the latest generation in the form of the E19T in pure throughput but vastly improved performance in terms of random IOPS - which, as I've previously mentioned, are a far better indicator of gaming performance.

Going back to the SSDs in the next gen consoles, there are still some things we don't know about them. For starters, Microsoft have directly compared the raw throughput of their SSD to that of the drive in the Xbox One and said it comes in at more than 40x the performance. Across the aisle, SONY have said their SSD is 100x the performance of the PS4. These numbers are pretty vague and there's also another layer to add to this discussion: the Xbox One used a SATA II interface, meaning that there was very little headroom for the internal drive that shipped with the console to be swapped out for something faster. The PS4, meanwhile, did use SATA III but its drive didn't really do any better than the one in the XBO, though swapping it out for a SATA SSD could improve its performance!

Looking at the performance of the 5400 rpm drives I listed in my summary table a few entries ago, 100x the performance puts us at around 0.04 GB/s for a Queue Depth of 1 with one thread active for 4k random reads whereas 40x puts us at 0.016 GB/s. This puts the random IOPS performance of the SSD in the PS5 somewhere around the level of a PCIe Gen 3 SSD, even though we know that the sequential read performance is more similar to the Galax HOF Pro. In terms of Microsoft's stated performance uplift, I really can't make head nor tail of it! 40x the performance of the sequential reads puts us right in the ballpark of their "compressed" data transfers but non-sequential reads don't match up to any SSD in the list below.
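As a quick check on what those multipliers imply, here's a small Python sketch that works backwards from the quoted figures. The HDD baselines are not measured values: they're simply what falls out of dividing the numbers in the text by 100 and 40, and the helper name is made up for illustration.

```python
# Figures quoted in the text, in GB/s.
PS5_4K_QD1_AT_100X = 0.04        # 100x the 5400 rpm drive: 4K random read, QD1, 1 thread
SX_COMPRESSED_SEQUENTIAL = 4.8   # Series X "compressed" sequential throughput


def implied_baseline(scaled_value: float, multiplier: float) -> float:
    """Work backwards from an 'N times faster' claim to the value being multiplied."""
    return scaled_value / multiplier


# Random reads: the 5400 rpm baseline implied by the 100x figure...
hdd_random = implied_baseline(PS5_4K_QD1_AT_100X, 100)
# ...and what a 40x uplift over that same baseline would give.
sx_random = hdd_random * 40

# Sequential reads: the HDD rate that 40x would have to start from
# in order to land on the compressed throughput figure.
hdd_sequential = implied_baseline(SX_COMPRESSED_SEQUENTIAL, 40)

print(f"Implied HDD 4K QD1 random read: {hdd_random * 1000:.1f} MB/s")
print(f"40x of that baseline: {sx_random:.3f} GB/s")
print(f"HDD sequential rate implied by 40x -> 4.8 GB/s: {hdd_sequential * 1000:.0f} MB/s")
```

The random-read side reproduces the 0.016 GB/s above, and the sequential side shows what sort of hard drive the 40x claim would need as its starting point.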

Since I got burned on Microsoft's poor copy editing last time, I'm going to go with my gut and assume that someone mashed together two separate metrics in adjoining sentences. "It has 2.4 GB/s raw throughput, with up to 4.8 GB/s compressed - more than 40x that of the Xbox One", has got to be the only logical explanation. So that doesn't really help us in terms of random read IOPS. However, given that Phison was linked to the next gen consoles (and we know that SONY went with a custom solution from a different manufacturer) then it seems likely that Microsoft is using one of Phison's controllers listed above, specifically one that has slightly less performance than the E19T but greater than the E13T.

That seems to make sense in terms of overall bandwidth... so perhaps the SX has random read IOPS of around 400k... which is around 1.5 GB/s in theory... but the Galax HOF Pro, which claims 750k random read IOPS, manages 16k 4K IOPS for a total of 66 MB/s transfer speeds when using the Phison E16 controller. So let's say that, taking that ratio of 400k/750k IOPS and actual real-world data, you'd expect around 40 MB/s 4 KB transfer rates for the SX SSD controller. That puts us squarely in the low end of the PCIe Gen 3 SSD performance space... which, given the disparity between the PS5 raw sequential data transfer rate and the SX's, is not really surprising. Microsoft went with stability and a "standard" instead of going with absolute cutting edge, putting their money into the larger GPU portion of the APU...
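Here's the same back-of-the-envelope estimate as a short Python sketch. It assumes 4 KiB (4096 byte) transfers and reads the Galax figures as 16k IOPS on 4K reads; the 400k IOPS value for the SX is my guess from above, not a published spec.

```python
def iops_to_mb_per_s(iops: float, block_bytes: int = 4096) -> float:
    """Convert an IOPS figure to throughput in MB/s (decimal megabytes)."""
    return iops * block_bytes / 1e6


SX_GUESSED_IOPS = 400_000      # assumption from the text, not a confirmed figure
GALAX_CLAIMED_IOPS = 750_000   # spec-sheet random read IOPS
GALAX_REAL_4K_IOPS = 16_000    # real-world 4K figure, as read from the text
GALAX_REAL_4K_MB_S = 66        # real-world 4K transfer rate quoted in the text

# Theoretical throughput if every one of the guessed IOPS moved a 4 KiB block.
sx_theoretical = iops_to_mb_per_s(SX_GUESSED_IOPS)

# Cross-check that 16k IOPS at 4 KiB is consistent with the quoted 66 MB/s.
galax_check = iops_to_mb_per_s(GALAX_REAL_4K_IOPS)

# Scale the Galax real-world figure by the ratio of spec-sheet IOPS.
sx_estimate = GALAX_REAL_4K_MB_S * SX_GUESSED_IOPS / GALAX_CLAIMED_IOPS

print(f"SX theoretical: {sx_theoretical / 1000:.2f} GB/s")
print(f"Galax 16k IOPS at 4 KiB: {galax_check:.1f} MB/s")
print(f"SX scaled real-world estimate: {sx_estimate:.1f} MB/s")
```

Run strictly, the ratio lands a little under the rounded 40 MB/s figure, but it's the same ballpark and the conclusion doesn't change.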

|

| These "real world" Crystaldiskmark values are as close to the expected performance as we can test for... given that they are real-world values. |

So, I've just established that the SSDs in the next gen consoles aren't that special compared to those gen 3 or gen 4 SSDs available for PC. However, what is special is the silicon baked into both next gen console APUs which deals with decompression and RAM optimisation.

At the end of the day, both next gen consoles are starved of system memory*. In order to get around that limitation, and to control the cost of the console, each manufacturer built specialised decompression units and memory handling silicon into their hardware, allowing for greater effective use of the memory they had available. If you add it up, it puts the SX at an effective amount of around 32 GB RAM and the PS5 around 26 GB - enough for each console to really be able to take advantage of their intended performance envelope.

What this means is that, as I've been saying, PC games won't start requiring SSDs because it's not the transfer speed which is the limitation, it's the lack of hardware required to process all that data. After all, the random IOPS of a SATA III SSD are very similar to those in a PCIe Gen 3 NVMe SSD!

On the memory side of things, I've already covered that I think that 16 - 32 GB RAM will be standard and that graphics memory will rise to a minimum of 8 GB. However, unless the design of CPUs drastically changes, games are going to start to require more cores for processing of data between the storage and the GPU. If you take a look at my prior analysis covering relative performance per year to the console hardware available, you'll see that the recommended "average CPU" for AAA 1080p gaming has been increasing, from approximately 2x to 3x the performance of the console CPU over the course of this generation, despite the relatively weak Jaguar cores in the current gen consoles.

This isn't to say that we'll need 16/32 C/T for gaming; no, it's likely we'll need 6/12 as a minimum and 8/16 as a recommended standard to handle the constant loading, given that PC gamers will most probably have a lot of uncompressed data to shift around.

There is some comfort in that Microsoft's SFS (Sampler Feedback Streaming) is also in DirectX 12 Ultimate but that only helps alleviate part of the problem. SFS still requires processing of data - it introduces latency in order for it to work and, ideally, it is designed to enable "memory poor" systems to work more efficiently, with the trade-off being increased latency. For the Series X, some of the processing power that enables SFS is in dedicated silicon on the APU; on a desktop PC, that function needs to be handled by the CPU... hence, more cores.

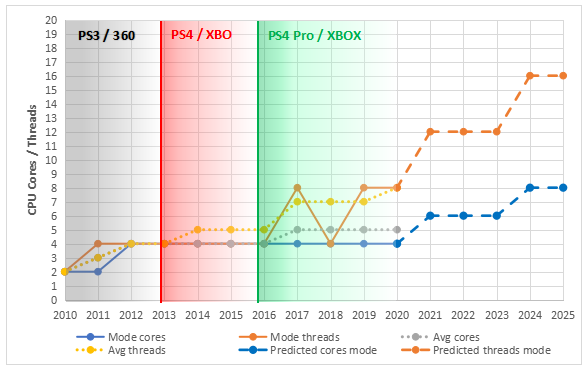

Looking at the number of cores and threads in the "recommended" specifications per year across the games I looked at in my study shows a general upward trend. So, not only is general CPU performance increasing year-on-year, the number of cores/threads has also been increasing - though at a slower rate than CPU performance for gaming, given that the single core performance increase in requirements has been linear or almost linear, with a steeper gradient.

If it's not obvious by now, my read on how things will go in the future is that a lot of the performance of the next gen consoles will be mitigated through compromises and brute force on the PC - as has been the way for decades, now. So, not really a big prediction! Importantly, though, the amount of performance on PC is relative to the amount of performance in the consoles: given that the next gen consoles are effectively equivalent to the high-end of current PC technology, combined with custom silicon that goes beyond current technology, there is no way that I can see that fewer than 8 cores, 16 threads will be recommended in the coming couple of years.

It's important to remember that my extrapolations are based on prior trends. The next gen consoles are ready to upend the current status quo...

*For good reason too - GDDR6 is the most expensive portion of the consoles' respective budgets per unit measured (i.e., per GB, per core, etc). Added to this is the fact that the consoles have a lot of direct memory access hardware: data doesn't need to be shifted between the CPU and GPU, just the memory address pointers. That cuts down on a lot of latency and memory bandwidth usage.

|

| We currently need around 3x the performance of a console CPU to run games at 1080p compared to the Xbox One X |

|

| These are the averaged and mode no. of cores and threads per year from the games I chose to use in my analysis... along with predicted mode cores & threads. |

Storage...

People are worried about how much space next gen games will take on the consoles... and even to some extent on the PC. However, this isn't going to be as much of an issue on console as it will be on PC. The consoles have really optimised compression engines.

What that means is that a typical Series X title will be about 50% of its size compared to being stored on PC and a typical PS5 title will be 60% the size it would be on PC. The second point of the hardware decompression engines (after helping to mitigate the lack of RAM in the next gen consoles) is to enable developers (and the system builders) to optimise install space on devices which are storage-limited.

|

| These are some of the games with the largest install sizes on PC... although when I made this comparison, Modern Warfare had not broken the 200 GB install size! |

PC doesn't have this issue - storage is plentiful and relatively cheap on PC.

What is likely to happen is that, for PC, games may be stored in a compressed state on the server and then decompressed upon installation (and this is the case, currently). From there, as discussed above, the extra cores on the CPU would be used to move data around. I estimate that this would be, on average,

*UPDATE: I erred when I wrote 2-3, it was a typo! I've adjusted the following values to match this correction...

This does mean that we should expect that install sizes will continue to balloon on PC - but conversely not on consoles! Consoles will be able to keep the majority of their data in a compressed state in order to optimise the relatively small SSD capacities. Again, as for the RAM, we're effectively getting a 2 TB drive for the SX and a 1.3 TB drive in the PS5.

[UPDATE] However, there is the possibility that developers transition over to this trend of streaming compressed data and, if this is the case, then more cores will mean considerably better performance.
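For anyone wanting to see where those "effective" figures come from, here's a rough Python sketch. It assumes the 50% and 60% compressed sizes mentioned above, 16 GB of RAM in each console, and nominal SSD capacities of 1 TB (SX) and 825 GB (PS5); the function name and the idea of applying one flat ratio to everything are simplifications of mine.

```python
def effective_capacity_gb(physical_gb: float, compressed_fraction: float) -> float:
    """Uncompressed data that fits in `physical_gb` when stored at `compressed_fraction` of its size."""
    return physical_gb / compressed_fraction


# Assumed figures: compressed size as a fraction of the PC install size,
# plus the nominal RAM and SSD capacities of each console.
CONSOLES = {
    "Series X": {"ram_gb": 16, "ssd_gb": 1000, "fraction": 0.5},
    "PS5": {"ram_gb": 16, "ssd_gb": 825, "fraction": 0.6},
}

results = {}
for name, spec in CONSOLES.items():
    results[name] = {
        "ram_gb": effective_capacity_gb(spec["ram_gb"], spec["fraction"]),
        "ssd_gb": effective_capacity_gb(spec["ssd_gb"], spec["fraction"]),
    }
    print(
        f"{name}: ~{results[name]['ram_gb']:.1f} GB effective RAM, "
        f"~{results[name]['ssd_gb'] / 1000:.2f} TB effective SSD"
    )
```

That lands on 32 GB and 2 TB for the SX and close to the 26 GB and 1.3 TB quoted for the PS5; the small differences are just down to rounding and the flat ratio.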

One of the big problems for cross-platform games has always been "which lowest common denominator do we target". Well, next gen games have two competing restrictions - compression (or not) and data streaming. It's one thing to design a game around a standard such as SATA II or SATA III with a certain amount of RAM. It's another to try and design a game space based on data transfer speed and number of available computing resources.

This *isn't* the same as just putting the settings of a game at a higher graphical output. Yes, you can lower graphical settings when the game starts to choke. You can't change the whole data transfer/streaming code in an engine for every different environment! It's not feasible...

One thing I'm not certain on - and which hasn't been talked about at all - is whether those decompression units have associated compression engines in the silicon as well. This would be really good for the consoles in that, in the case of the SX for example, the contents of the RAM could be offloaded to the SSD and stored in a relatively small footprint. For PS5, it's less clear whether the feature would be beneficial given that we don't have any information about the general user experience on SONY's console.

Conclusion...

I've been gaming since the mid-1980s but I don't think I've ever lived through a time where all areas of computer technology are advancing as quickly (or as competitively) as they are right now. I think there are some hurdles to clear over the coming years with regards to the issues I've talked about in this post and it really depends on individual developers how these things pan out... Saying that, though, I think we are likely on the edge of a jump in requirements to play games on PC. Many people are saying "don't buy now!" and I think that it is smart to listen to them...

At the end of the day, next gen games will be able to be played on PC and the PC platform will continue to evolve and adapt, but I think there will be a period of pain whereby the PCs we've built over the last two years will age horribly.
