
Xbox Experience...


| This is much clearer than the previous renders... |

Xbox Series X was exhibited at the Xperion e-arena in Saturn (Germany) pic.twitter.com/xiMvia1FnX

— Mr..Keema (@KeemaMr) August 17, 2020

One of the reasons this may be the case is that this video is also the first confirmation that gameplay recording is being carried over from the current generation to the SSD generation of consoles. This, though, is a fear come true for me.

Series X Architecture...

Constant recording will take up some of the precious bandwidth available to the SSD. Each SSD in the platform has a maximum rated sequential read bandwidth of 2.4 GB/s, but adding a constant write load onto the SSD's controller is going to hurt random read performance - the one thing that we know is actually really important for general gaming performance!

This means that random read IOPS for the XSX are going to be super important, more important than I initially anticipated. Sure, you can "quick suspend" a game from RAM to the SSD, but the reason it's so slow (as I previously dissected) might be that it has to fight the recording stream for bandwidth! That theoretical 2.4 GB/s of sequential access might never actually be achievable in a system that has a constant write load on the controller. This is something to watch for!

On top of that, this will result in a constant amount of extra heat emanating from the SSD... It also means that wear-levelling on the XSX SSD is going to be amazingly important... I actually can't conceive how they are going to do it, because part of the SSD is already reserved for the OS, and as the SSD fills up with games and apps, the usable portion of the NAND is going to be reduced dramatically.* Sure, thanks to BCPack and other compression technologies they can fit more than twice as much game data on the drive as was possible on the current generation of consoles, but we're looking at a concentrated amount of wear over a limited number of NAND cells. In my opinion, this is not a good thing.

*This is also a potential problem for the PS5 as well...
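To put rough numbers on an always-on recording stream, here is a back-of-the-envelope sketch. Only the 2.4 GB/s rating comes from Microsoft; the recording bitrate (30 Mbit/s) and the daily play time (4 hours) are purely hypothetical placeholders, not published Xbox figures, so swap in your own.

```python
# Back-of-the-envelope cost of an always-on gameplay recording stream.
# HYPOTHETICAL inputs: RECORD_MBIT_S and HOURS_PER_DAY are placeholders,
# not published Xbox figures. Only the 2.4 GB/s rating comes from Microsoft.
SSD_SEQ_READ_GB_S = 2.4   # rated raw sequential read of the Series X SSD
RECORD_MBIT_S = 30        # hypothetical recording bitrate (Mbit/s)
HOURS_PER_DAY = 4         # hypothetical hours recorded per day


def record_rate_mb_s(mbit_s: float) -> float:
    """Convert a bitrate in Mbit/s to MB/s (8 bits per byte)."""
    return mbit_s / 8


def share_of_seq_bandwidth(mbit_s: float, seq_gb_s: float) -> float:
    """Fraction of the rated sequential bandwidth the stream occupies."""
    return record_rate_mb_s(mbit_s) / (seq_gb_s * 1000)


def gb_written_per_day(mbit_s: float, hours: float) -> float:
    """GB written to the NAND per day by the recording stream alone."""
    return record_rate_mb_s(mbit_s) * hours * 3600 / 1000


if __name__ == "__main__":
    share = share_of_seq_bandwidth(RECORD_MBIT_S, SSD_SEQ_READ_GB_S)
    daily = gb_written_per_day(RECORD_MBIT_S, HOURS_PER_DAY)
    print(f"share of sequential bandwidth: {share:.3%}")
    print(f"written per day: {daily:.0f} GB, per year: {daily * 365 / 1000:.1f} TB")
```

With those placeholders the stream occupies about 0.16% of the rated sequential bandwidth but writes 54 GB a day (roughly 20 TB a year). The arithmetic says nothing about how mixed read/write traffic affects the controller's random read latency; that part of the concern can only be settled by measurement.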


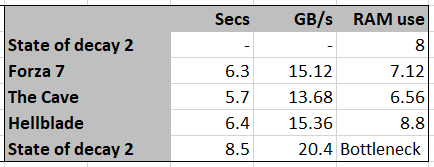

| At the time I collected this data from the press releases back at the beginning of this year, I thought that these times didn't make any sense... |

If I were a developer over at Microsoft, I'd be thinking about how to provision recording in the OS: can the user specify where the data is going? Could they specify an external SATA drive or the external (easily replaceable) SSD? Or can they even turn the feature off to save on drive wear? This is something I'm very interested in finding out!


| Note that this combination of hardware on the MSP saves the work of 2+ Zen 2 cores... I'll get back to this later. |

Aside from this, there are a number of other interesting tidbits gleaned from the presentation:

Microsoft's Hot Chips presentation has confirmed that only 2 PCIe Gen 4 lanes will be provisioned to each SSD slot, but this isn't a problem for the design of the console as that provides roughly 4 GB/s of bandwidth to each. However, MS stated that there are 8 PCIe Gen 4 lanes running from the chipset. That's the 2x SSDs + Southbridge and...? It seems like we're missing some lanes here.

The Southbridge has 3x USB 3(x??) ports, the SATA III interface for the Blu-ray drive as well as some internal interconnects. Assuming USB 3.2 Gen 2x1, that's 3x 1.25 GB/s + 0.6 GB/s, plus various sundries that need to run through the APU to the SSDs: about 5 GB/s required at a maximum, which could be covered by 2-3 PCIe Gen 4 lanes. I mean, it's possible that MS have included USB 3.2 Gen 2x2 instead, pushing the requirement up to 8 GB/s of combined bandwidth, which would require 4 PCIe Gen 4 lanes, but that seems rather unnecessary and unlikely given that it's hard to conceive of a user running 3 external drives that each need that much bandwidth at the same time.
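The lane arithmetic can be sketched as follows. It assumes PCIe 4.0's 16 GT/s per lane with 128b/130b encoding (about 1.97 GB/s per lane, per direction), reuses the per-port figures above, and assumes the two SSDs' lanes come out of the 8 chipset lanes. The "various sundries" aren't quantified in the text, so they are left out.

```python
import math

# PCIe 4.0 runs at 16 GT/s per lane with 128b/130b encoding,
# giving roughly 1.97 GB/s per lane in each direction.
PCIE4_LANE_GB_S = 16 * (128 / 130) / 8

# Per-port figures used in the text, in GB/s.
USB_32_GEN2X1_GB_S = 1.25   # 10 Gbit/s
USB_32_GEN2X2_GB_S = 2.5    # 20 Gbit/s
SATA3_GB_S = 0.6            # 6 Gbit/s, ~600 MB/s after 8b/10b encoding


def southbridge_demand(usb_port_gb_s: float, usb_ports: int = 3) -> float:
    """Peak demand if every USB port and the SATA link are busy at once.
    The unquantified 'sundries' are deliberately left out."""
    return usb_ports * usb_port_gb_s + SATA3_GB_S


def lanes_needed(demand_gb_s: float) -> int:
    """Smallest whole number of PCIe 4.0 lanes that covers the demand."""
    return math.ceil(demand_gb_s / PCIE4_LANE_GB_S)


if __name__ == "__main__":
    # ASSUMPTION: the SSDs' 2 x 2 lanes are part of the 8 chipset lanes.
    spare_lanes = 8 - 2 * 2
    print(f"spare after SSDs: {spare_lanes} lanes = {spare_lanes * PCIE4_LANE_GB_S:.2f} GB/s")
    for name, port in [("Gen 2x1", USB_32_GEN2X1_GB_S), ("Gen 2x2", USB_32_GEN2X2_GB_S)]:
        demand = southbridge_demand(port)
        print(f"USB 3.2 {name}: {demand:.2f} GB/s -> {lanes_needed(demand)} lanes")
```

Strictly, the Gen 2x2 case (8.1 GB/s) slightly exceeds four lanes' ~7.9 GB/s, so it rounds up to five lanes rather than four, but that only matters if every port is saturated simultaneously.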

Of course, if Microsoft were to release a VR headset or partner with one of the existing vendors specialising in that area, then they'd potentially require the bandwidth of a single USB 3.2 Gen 2x2 connection (enough to carry DisplayPort 1.2's 17.28 Gbps data rate) as well as a normal USB 3.0 (500 MB/s) connection, which combined might add up to 3 GB/s... still leaving another 1 GB/s on the table from a 2 PCIe lane allocation.

Honestly, I'm stumped on this one. Any way I currently count it, the Southbridge doesn't really require 8 GB/s of access to the SoC. Though with a next gen* VR setup and an external drive, we're looking at perhaps 5.7 GB/s of simultaneous data transfer.

*Something akin to a doubling of visual throughput...
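The VR link budget above can be checked with a short sketch. It uses the text's DisplayPort 1.2 (17.28 Gbps) and USB 3.0 (500 MB/s) figures against a 2-lane PCIe 4.0 allocation; the `display_scale=2.0` case models the footnote's hypothetical doubling of visual throughput and is an assumption, not a known headset spec. The external drive is left out because its interface isn't specified.

```python
# Rough VR headset link budget against a 2-lane PCIe 4.0 allocation.
PCIE4_LANE_GB_S = 16 * (128 / 130) / 8   # ~1.97 GB/s per lane, per direction

DP12_GBIT_S = 17.28   # DisplayPort 1.2 (HBR2, 4 lanes) effective video data rate
USB30_GB_S = 0.5      # the USB 3.0 figure used in the text


def vr_demand_gb_s(display_scale: float = 1.0) -> float:
    """Video link (optionally scaled) plus the normal USB 3.0 link from the text.
    display_scale=2.0 is a HYPOTHETICAL 'next gen' doubling of visual throughput."""
    return DP12_GBIT_S / 8 * display_scale + USB30_GB_S


if __name__ == "__main__":
    two_lanes = 2 * PCIE4_LANE_GB_S
    today = vr_demand_gb_s()
    print(f"current VR: {today:.2f} GB/s, headroom on 2 lanes: {two_lanes - today:.2f} GB/s")
    print(f"doubled display: {vr_demand_gb_s(2.0):.2f} GB/s")
```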


| This block diagram is almost comprehensive... |

Honestly, one of the biggest things to come out of Digital Foundry's coverage of the Series X reveal was the information that there was 76 MB of SRAM on the APU. Originally, I had assumed that the Renoir CPU cores would be utilised for the consoles but after that exposé I did some simple mathematics to add up the amount of SRAM on a desktop 3700X and an RX 5700, adjusted for the extra CUs in the Series X:

"Going through the numbers, that actually sounds like quite a large cache supply... I re-checked my calculations from the comments section last time and found I had over-estimated the graphics cache sizes by an order of magnitude, so let's lay it all out. For comparison, the 3700X has a total of 37 MB cache (512 KB L1, 4 MB L2 & 32 MB L3); the 4800H has a total of 12 MB cache (512 KB L1, 4 MB L2 & 8 MB L3). An RX 5700 has a total of 5.2 MB cache (576 KB L0, 512 KB L1 & 4 MB L2).

Adding those up gives us 42 MB of cache for a PS5 and 44 MB of cache for an Xbox Series X, if we just combine a 3700X with an RX 5700 for the PS5 and increase the GPU cache size for the SX by a simple ratio of 56/40 CUs (7.3 MB total). That's actually much lower than I expected and a little surprising given Digital Foundry's supposition that the L3 cache would be cut down like the mobile Zen 2 parts are."

Here, Microsoft have confirmed that a Renoir-style CPU design was used, with only 4 MB of L3 cache per CCX... The L2 on the GPU has been increased to 5 MB, meaning that my calculation now comes to around 21 MB of cache in total across the CPU and GPU... (44 - 32 + 8 + 1), assuming that the L0 and L1 GPU counts stay the same. Either way, even if the amount of SRAM in each CU increases by a small margin, we're not finding a stray 55 MB of SRAM there... So where IS it?!
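Itemising each cache level separately, rather than adjusting the earlier 44 MB total, gives a slightly lower figure but the same conclusion. This sketch assumes the GPU's L0 and L1 scale with the same 56/40 CU ratio used earlier, takes the 5 MB GPU L2 and 2 x 4 MB L3 from the presentation, and treats 1 KB as 1/1024 MB.

```python
# Itemised SRAM tally for the Series X APU, in MB (1 KB = 1/1024 MB).
KB = 1 / 1024

# CPU: 8 Zen 2 cores with the Renoir-style L3 confirmed at Hot Chips.
CPU_L1 = 512 * KB            # 8 x (32 KB instruction + 32 KB data)
CPU_L2 = 4.0                 # 8 x 512 KB
CPU_L3 = 2 * 4.0             # 2 CCX x 4 MB

# GPU: RX 5700 L0 + L1 scaled by the 56/40 CU ratio (ASSUMPTION),
# plus the 5 MB L2 from the presentation.
GPU_L0_L1 = (576 + 512) * KB * (56 / 40)
GPU_L2 = 5.0

SRAM_REPORTED = 76.0         # total on-die SRAM per Digital Foundry

accounted = CPU_L1 + CPU_L2 + CPU_L3 + GPU_L0_L1 + GPU_L2
unaccounted = SRAM_REPORTED - accounted

if __name__ == "__main__":
    print(f"accounted for: {accounted:.1f} MB, unaccounted: {unaccounted:.1f} MB")
```

This lands at roughly 19 MB accounted for, leaving around 57 MB unexplained before the audio block and MSP are considered.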

Yes, some of it will be in the audio block and the MSP for decoding/decrypting, etc. but I'm at a loss as to where the remainder is. Has MS held another surprise back from us?


| Ahh, the good old controversial memory diagram I made... with various modes of access described. |


| A diagram depicting a Raven Ridge APU data flow - but the same principles also apply to Zen 2 APUs - Source. |


| The decoding hardware will allow compressed data to be stored on the SSD instead of uncompressed data, allowing for smaller install sizes... |

As I mentioned in that blogpost, it'll take 1-2 CPU cores for a PC to enable the same sorts of compression savings if the developer chooses to go down this route (see the "SOP Specs" slide high above in this blogpost). That led me to state that I believe 8 core CPUs are a desirable minimum for "next gen" gaming on PC. However, the alternative is that developers choose to forego this space-saving mechanism and instead just allow ballooning install sizes - something I also talked about. Now, certain other people are beginning to worry about this. However, I predict that everything will be okay and users will continue to be able to use HDDs in all but the most extreme cases ;).

One thing that I had also mentioned is the slight increase in latency that this decompression adds to the system. This shouldn't be a problem in practice, but it means that developers need to leave enough time between requesting an asset from the SSD and displaying it on screen for the latency added by decryption and decompression to be absorbed.
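As a rough illustration of that budgeting, the sketch below works out how many frames ahead an asset must be requested so that the whole read, decrypt and decompress chain completes in time. The per-stage latencies are hypothetical placeholders (no figures for the Series X pipeline are given here), and the function name is illustrative; a real engine would also have to account for queueing and frame pacing.

```python
def frames_of_lead(stage_latencies_us: list[int], fps: int) -> int:
    """Frames before display that an asset must be requested so that
    read + decrypt + decompress finishes in time (rounded up).
    Integer ceiling division avoids floating-point rounding surprises."""
    total_us = sum(stage_latencies_us)
    return -(-total_us * fps // 1_000_000)


# HYPOTHETICAL per-stage latencies for one streaming batch, in microseconds.
BATCH = [8_000, 2_000, 10_000]   # SSD read, decryption, decompression

if __name__ == "__main__":
    for fps in (30, 60, 120):
        print(f"{fps} fps: request {frames_of_lead(BATCH, fps)} frame(s) ahead")
```

The same 20 ms of pipeline latency costs one frame of lead time at 30 fps but three at 120 fps, which is why higher frame-rate targets make this planning tighter.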

From speaking with other people over on Twitter, this new information on the composition of RDNA 2.0-style DCUs appears to back up claims from anonymous sources that, despite the "big" difference in GPU resources between the two consoles, overall performance when using RT will be similar. The reason is that the PS5's higher clock may allow it to perform the ray calculations and then move on to rendering the scene, whereas the SX may be able to perform both in a more parallel fashion but, of course, with fewer CUs available for rendering the scene.


| Dr. Ian Cutress states that the CUs are 25% better perf/clock than the previous gen (I guess One X), which matches up nicely with analysis performed by Digital Foundry over at Eurogamer... |

Series S rumoured hardware...

The Series S has been confirmed through the leak of a next gen controller that was (probably) erroneously sold early. This does make me wonder whether the next gen consoles have been delayed from their planned release dates, as we're now seeing supporting documentation begin to leak which would have had preset release dates, and it's possible that not every vendor got the memo (or some mistakes were made in communication within a specific organisation). I had predicted around September for the release of the Series X, but current leaks place its launch in November.

But that's really beside the point.

The point is that the leaked documents obtained by The Verge confirm some of what I had predicted: that the S has the same CPU and clockspeed as the X and 7.5 GB of game-usable RAM (10 GB in total), but the GPU is where my prediction and theirs deviate. The Verge was pretty confident that there are 20 CUs in the APU, cut down from 22, leading to approximately 4 TFLOPS of compute performance. It confirmed that the console is primarily a 1080p machine with a possible output of 1440p*.

*It must be said that the 1440p resolution referenced here is likely the same as "8K" for Series X... it's possible... but not going to be utilised by many, if any, games. It's purely linked to HDMI throughput...

Now we have further information from the author of The Verge's previous stories, Tom Warren, who stated that those 20 CUs would be clocked at 1.550 GHz. This gives us a figure of 3.968 TFLOPS. So let's take a look at the potential performance of this thing.
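The 3.968 TFLOPS figure follows from the usual peak-FP32 formula for AMD GPUs: 64 shaders per CU, two FLOPs per clock (a fused multiply-add), times the clock. The Series S inputs are the rumoured ones; the Series X line uses Microsoft's published 52 CUs at 1.825 GHz, and the RX 5500 XT line its 1.717 GHz game clock, for comparison.

```python
def fp32_tflops(cus: int, clock_mhz: int, shaders_per_cu: int = 64) -> float:
    """Peak FP32 throughput: CUs x 64 shaders x 2 FLOPs per clock (a fused
    multiply-add counts as two) x clock. MHz x FLOPs / 1e6 gives TFLOPS."""
    return cus * shaders_per_cu * 2 * clock_mhz / 1_000_000


if __name__ == "__main__":
    print(f"Series S (rumoured, 20 CUs @ 1550 MHz): {fp32_tflops(20, 1550):.3f} TFLOPS")
    print(f"Series X (52 CUs @ 1825 MHz):           {fp32_tflops(52, 1825):.3f} TFLOPS")
    print(f"RX 5500 XT (22 CUs @ 1717 MHz game):    {fp32_tflops(22, 1717):.3f} TFLOPS")
```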

First off, I had disagreed with this sort of power envelope in the comments section last time for several reasons, the first of which is that we're not comparing like with like between the SX and the SS. But before I get into that, let me lay out the raw performance of this device.

An RX 5500 XT is a decent performer at high/ultra quality settings in games at 1080p, outputting between 20 and 60 fps across a range of games. That's quite a spread and, for context, the 22 compute units of the 5500 XT are running at a reasonably high 1.717 GHz game clock. Just to lay it out in cold, hard numbers: the RX 5500 XT gets a Passmark score of 8904. We know that RDNA 2 doesn't have more performance per clock than RDNA 1 because the uplift Digital Foundry measured between Polaris and RDNA 1 matches the one Microsoft quoted between RDNA 2 and the Xbox One X: about 25%*. This means we can do a direct translation of performance per CU, per clock.

*Whenever performance of RDNA 2.0 compared to 1.0 has been spoken about, it's always been in the context of power efficiency.

Theoretical Series S GPU Passmark score = 8904 / 22 * 20 / 1717 * 1550 = 7307

Let me put that into perspective: a GTX 1650 (GDDR5) is able to get a score of 7852, and comparing the two cards on Userbenchmark shows a 28% performance uplift for the RX 5500 XT over the GTX 1650. This theoretical GPU is weaker than a card that is only just able to reach 60-ish fps in current gen games. I can see it managing 30 fps with no problem, though... To put it another way, the RX 480/580 is generally considered the best value 1080p card. The RX 580 is a 2017 refresh of the 2016 RX 480, yet it scores 8741 in Passmark, above the Series S estimate. I don't know about you, but that does not impress me with regards to the XSS...
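The linear scaling above can be written out as a small function, along with the two comparisons. It bakes in the text's assumption that RDNA 2 and RDNA 1 deliver the same performance per CU per clock and ignores memory bandwidth and everything else a synthetic benchmark measures, so treat the result as a rough ratio rather than a prediction.

```python
# Linear scaling of the RX 5500 XT's Passmark score to the rumoured Series S GPU.
# ASSUMPTION (from the text): equal performance per CU per clock for RDNA 1 and 2.
REF_SCORE = 8904        # RX 5500 XT Passmark score
REF_CUS = 22
REF_CLOCK_MHZ = 1717    # RX 5500 XT game clock


def scaled_score(cus: int, clock_mhz: int) -> float:
    """Scale the reference score linearly by CU count and clock."""
    return REF_SCORE * (cus / REF_CUS) * (clock_mhz / REF_CLOCK_MHZ)


if __name__ == "__main__":
    series_s = scaled_score(20, 1550)
    print(f"Series S estimate: {series_s:.0f}")
    for name, score in [("GTX 1650 (GDDR5)", 7852), ("RX 480/580", 8741)]:
        print(f"  {series_s / score:.0%} of the {name} ({score})")
```

On these inputs the estimate is about 93% of the GTX 1650's score and 84% of the RX 480/580 figure quoted above.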

At 60 fps, I find it hard to believe that the Series S will be outputting next gen games at native 1080p using high quality presets. If anything, VRS (variable rate shading) will likely be used to get a decent frame rate at good quality settings.


| Er... I thought Ray Tracing was the future?! |

Moving on to ray tracing, though, gives me pause... Since the BVH calculations are intrinsically linked to the amount of hardware resources available to perform them, and since all current real-time RT uses de-noising technology, in my opinion a linear reduction in dual compute units (DCUs) does not mean a linear decrease in RT game performance.

The reasons for this are two-fold:

- The RT calculations utilise the same resources as the rendering pipeline - they cannot entirely occur at the same time

- RT calculations are independent of output resolution

I've seen quite a few people state that RT calculations (and, thus, performance) are linked to the native resolution of the rendered scene but, and perhaps this is where my limited understanding of the mathematics comes into play, I cannot see how this can be accurate. As far as I understand things, reducing the native output resolution coming from the graphics card (both Nvidia and RDNA 2) results in more resources being available for ray tracing because the scene is less graphically intense (the non-RT work: rasterisation, triangle drawing, texture effects, alpha, lighting etc.).

However, the ray tracing calculations themselves are not reduced in complexity - these are geometric intersections of a volume. No matter the resolution, the angles and ratios of a triangle's sides* do not alter, i.e. this doesn't change based on whether you're natively outputting 540p or 4K...

*You can insert any geometric shape or line here.
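For a concrete picture of one of these "geometric intersections of a volume", here is a minimal ray versus axis-aligned bounding box test using the standard slab method, the kind of box test a BVH traversal performs at each node. Nothing in the test refers to output resolution; how many such tests a frame issues depends on how many rays the engine casts. This is a generic textbook sketch with an illustrative function name, not how AMD's or Nvidia's hardware implements it.

```python
import math


def ray_hits_box(origin, direction, box_min, box_max) -> bool:
    """Slab test: does the ray origin + t * direction (t >= 0) hit the box?"""
    t_near, t_far = -math.inf, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of planes: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))   # latest entry across the slabs
        t_far = min(t_far, max(t1, t2))     # earliest exit across the slabs
    return t_far >= max(t_near, 0.0)


if __name__ == "__main__":
    box = ((-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
    print(ray_hits_box((0.0, 0.0, -5.0), (0.0, 0.0, 1.0), *box))    # towards the box
    print(ray_hits_box((0.0, 0.0, -5.0), (0.0, 0.0, -1.0), *box))   # away from it
```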

So, coming back to this proposed Series S GPU... I am wondering if the XSS will drop ray tracing support for all but the least graphically demanding games or whether they will perform it but utilise AMD's DLSS-a-like... the one feature that was not mentioned in the presentation*.

This is a potentially worrying omission.

*[UPDATE] I forgot to mention the other possible solution here. I've said elsewhere (not on this blog, I think) that the LocalRay tech from the Israeli company Adshir could be used for the Series S. This has been hinted at in RedGamingTech's interview as well as the interview with VentureBeat.


| This seems more like it... but not great performance... |

Microsoft have previously listed DirectML as a way to enhance game AI and improve visual quality (I presume this refers to AI upscaling) but, looking at the numbers, they are terribly weak: 24 TFLOPS on the GPU and 0.97 TOPS on the CPU. That sounds impressive until you compare it to the RTX 2060, the least of the RTX cards: 51.6 TFLOPS.

At this point, I think it's clear that AMD do not have a direct competitor to Nvidia's DLSS technology. Yes, they may be able to do something similar, but we're talking about less than half the performance of Nvidia's weakest last gen RTX card. It isn't likely to happen.
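The "less than half" comparison can be reproduced from Microsoft's published Series X configuration (52 CUs at 1.825 GHz). The sketch assumes the quoted 24 TFLOPS is the double-rate packed FP16 figure, and compares it with the 51.6 TFLOPS RTX 2060 number given above. It is a peak-versus-peak comparison only and says nothing about achieved throughput.

```python
def fp32_tflops(cus: int, clock_mhz: int) -> float:
    """Peak FP32: CUs x 64 shaders x 2 FLOPs per clock x clock (MHz -> TFLOPS)."""
    return cus * 64 * 2 * clock_mhz / 1_000_000


# ASSUMPTION: the 24 TFLOPS figure is packed FP16, i.e. twice the FP32 rate.
XSX_FP16 = 2 * fp32_tflops(52, 1825)
RTX_2060_FP16_TENSOR = 51.6            # figure quoted in the text

if __name__ == "__main__":
    print(f"Series X FP16: {XSX_FP16:.1f} TFLOPS")
    print(f"ratio to RTX 2060: {XSX_FP16 / RTX_2060_FP16_TENSOR:.0%}")
```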

Without machine learning upsampling, neither of the next gen Xbox consoles will perform as well on screen as it possibly could. You could also say this about Sony's console, but there has been speculation about such a feature for that platform based on a patent, though this speculation appears to be misplaced*. As far as I'm aware, there are no such rumours for Microsoft.

*Listening to RedGamingTech's analysis and reading through a bit of the patent, I can see that this appears to be technology that is applicable to providing randomisation of assets "for free" instead of relating to resolution upscaling and reconstruction or inference of visual information where there is less or none available. If you would like me to elaborate, leave a comment.

The final rumour we've heard about the XSS is that it will have no disc drive.

This *IS* a distinct possibility, but I'm actually quite skeptical. I think that Microsoft learned a few lessons from the Xbox One S All-Digital Edition. First, the markets for cheap and for all-digital do not overlap: on the one hand, you want someone who cares intensely about value for money, but these people will balk at the higher prices of digital offerings on the Xbox store.

Secondly, these theoretical people are also unlikely to have access to high-speed broadband for repeated game installs after they have had to uninstall games from their small storage space.

Honestly, I don't know what's wrong with Microsoft and Sony.


| It's funny how everyone expects the digital edition to be cheaper (including Sony!)... economics be damned! |

Yes, the disc drive results in a higher BOM (Bill Of Materials)... but you know what? The inverse is actually true when it comes to market share! The people with money don't care about discs and internet bandwidth/caps. In reality, despite what seems obvious, having no disc drive and a large SSD/secondary storage in the console is more appropriate for the high-end model. If the design were up to me, I'd give the high-end SX model no disc drive and 2 TB of storage... and the promise of pre-loading game data to be able to play either on day 1 or 24-48 hours before. You'd be paying for exclusivity.

The low-end model would have the smaller 0.5 TB storage and a disc drive, with the added bonus of easy data-shifting to an external USB 3.0 drive for games you weren't using, similar to Steam's change directory feature. (This feature would also be available on the high-end model.)

Conclusion...

I think we've seen enough of the Xbox Series X to know what it's capable of. In my opinion, given the extended release time frame of the graphics card vendors over the last 6 years, what many people (myself included) initially thought, to our surprise, were very high-end systems are actually relatively modest in the graphical sense. We have the equivalent of 2-year-old high-end graphics technology in the XSX and 2-year-old mid-range* in the PS5, both about to be eclipsed by hardware that's up to 30-50% more performant per tier. We also have a 1.5-year-old CPU (well, less than that if you take it specifically from the APU range: 4800H) that's about to be eclipsed by Zen 3 architectures, with an expected 15-20% core-to-core uplift in performance.

That puts both next gen consoles on a par with the low-to-mid-range offerings in Nvidia's 30 series of PC cards. That's not great! Especially when you consider that DLSS 3.0 is on its way and will trump anything before it! Nvidia have the money, they have the influence with developers, they still have the market share and they have the foresight to see that "the pixel is dead".

*From my prior calculations, the PS5's overall performance is around a 2070 Super when ray tracing. Of course, my definition of "mid-range" literally means middle of the range of products (so not including GTX models)...

Moving on to the Series S, I'm a little worried that it's going to be underpowered for its intended purpose if the rumoured specs are true. I do not believe 20 RDNA 2 CUs are adequate to render next gen games at native 1080p with RT enabled. That wouldn't be a problem if AMD had a DLSS-a-like, but none is currently rumoured or announced. We'll have to wait and see.

If the Series S is limited to traditional lighting implementations without ray tracing then, in my opinion, the case for its existence is considerably weaker. Yes, they had to drop the One X due to its cost and I think that the Series S can be cheaper per unit... but it would still be a disappointment.

If I'm honest, this is the way I would lean, in a logical sense: I could see that the reason Microsoft haven't officially announced the Series S is that it lacks RT. This would save die space (and thus cost) and would play into Microsoft's downplaying of RT in their presentation at Hot Chips. It would also preclude cut-down SX dies being used in the system as a cost-saving measure, which I thought could be a possibility based on the MS product hierarchy (server/XSX/XSS/Surface), but let's see about that...

I hope it's not the case because a lack of RT would push me away from purchasing a Series S (something I would seriously be considering), instead focusing on low-to-mid range RT on PC...
