|

I've been eagerly anticipating the release of the first DirectStorage title, mostly to see whether my predictions and understanding of the tech were correct. However, it seems that this particular DirectStorage implementation, much like Forspoken itself, is a bit of a disappointment...

In the end, I did not splash out on the main game, given the very mixed reception and absolutely jam-packed release schedule for the first quarter of the year - I chose to spend my limited money on other titles that I actually might want to play/test, instead.

Luckily for me, Square Enix released a PC demo and, while I am not entirely sure that everything is exactly the same between it and the main release, it is what I am able to test in this scenario. Perhaps the conclusions I draw will be limited because of it, but I doubt that the main release contains large codebase or engine optimisations that are absent from the demo... But, let's see.

What this is, and what it isn't...

The specific DirectStorage implementation included in Forspoken on PC is version 1.1 compatible... but the feature set actually used by the game appears to be limited to version 1.0 of the API - i.e. there is no GPU decompression of assets, only CPU. So, the reported "version" of the .dll is not relevant here.

I am testing the loading of a save game which is not the latest autosave point. This is important because the game pre-loads the last autosave, meaning that when a user presses "continue" on the title screen, they are put into the gameworld instantaneously. Timing that path would not measure a real load, so I have avoided it throughout my testing.

Additionally, I am testing the performance of a run through the world, across an area that requires streaming of new world data. Both tests are taken as an average of three repeats.
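As a rough sketch of how the repeats can be reduced to a single figure, the snippet below averages a set of timings and also reports the spread between runs. The function name and the example values are placeholders of my own for illustration, not measurements from this testing.

```python
from statistics import mean, stdev

def summarise_repeats(times_s):
    """Return (mean, sample standard deviation) for repeated timings in seconds."""
    if len(times_s) < 2:
        raise ValueError("need at least two repeats to estimate spread")
    return mean(times_s), stdev(times_s)

# Placeholder values for illustration only, not measured results.
example_runs = [4.0, 5.0, 6.0]
avg, spread = summarise_repeats(example_runs)
print(f"mean = {avg:.2f} s, spread = {spread:.2f} s")
```

Keeping the spread alongside the mean makes it easier to judge whether a small difference between two configurations is larger than the run-to-run variation.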

The testing has been performed across two systems:

- Ryzen 5 5600X, RX 6800 (undervolted, overclocked, power limited), 32 GB 3200 Mbps DDR4

  - Coupled with a Western Digital SN750 1 TB; Crucial P1 1 TB; Samsung 860 SATA 1 TB

- Intel i5-12400, RTX 3070 (undervolted, overclocked, power limited), 16 GB 3800 Mbps DDR4

  - Coupled with a Western Digital SN570 1 TB

Why are my graphics cards undervolted and power limited? Because I like saving money and power, and I can see that there is a decent amount of potential headroom in modern GPUs when heat is not a problem*. The point is that neither card performs worse than it does at stock, out-of-the-box settings.

*The article covering my foray into optimising the AMD RX 6800 is still coming...

As pointed out in the introduction, I am testing the demo, not the main release. I do not expect huge differences in code or optimisations between the two this close to release, but I cannot rule it out. So, some of the comparisons with numbers reported by other commentators may be suspect. However, the numbers I am presenting myself will be internally comparable.

|

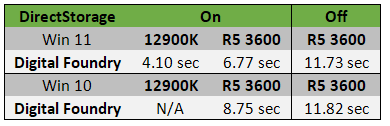

| Loading comparison provided by Digital Foundry... |

Loading times...

I had previously noted that Forspoken did not appear to be a good choice for showcasing the benefits of DirectStorage (DS). It appears that the game is already very heavily optimised in terms of I/O, which leaves the technology with minimal impact. I have also pointed out that DirectStorage is very dependent on the quality of the storage medium being utilised by the player - SSDs are not created equal - with DRAM, controller, and NAND all having an impact on the performance of the device.

In the case of a DS implementation utilising the CPU to decompress data, the performance level of that CPU should also have an effect on the loading performance of the software in question... and, in the case where a GPU is utilised to decompress the data, I expect the performance level of that GPU will have an effect as well - though this is yet to be shown in a real-world example.
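To make that reasoning concrete, here is a deliberately simplified toy model. It assumes that reading and decompression overlap perfectly, so whichever stage is slower sets the loading time. The function, its parameters, and the numbers are all hypothetical; this is not how the game or the DirectStorage runtime actually schedules work.

```python
def estimated_load_time(compressed_gb, ratio, drive_gbps, decompress_gbps):
    """Toy pipeline model: reads and decompression overlap, so the slower stage dominates.

    compressed_gb   -- compressed data read from the drive (GB)
    ratio           -- uncompressed size divided by compressed size
    drive_gbps      -- sustained drive read throughput (GB/s)
    decompress_gbps -- decompressor output throughput (GB/s of uncompressed data)
    """
    read_s = compressed_gb / drive_gbps
    decompress_s = (compressed_gb * ratio) / decompress_gbps
    return max(read_s, decompress_s)

# Hypothetical numbers, chosen only to show the two regimes.
slow_drive = estimated_load_time(2.0, 2.0, drive_gbps=0.5, decompress_gbps=4.0)
slow_cpu = estimated_load_time(2.0, 2.0, drive_gbps=4.0, decompress_gbps=2.0)
print(slow_drive, slow_cpu)  # drive-bound case, then decompression-bound case
```

Under this model a faster CPU (or GPU) only helps once the drive is no longer the slower stage, which is one way to picture why both components can matter.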

|

| PCWorld performed the above tests on the game... tested with a 13900KF + RTX 4090 + 32 GB DDR5 5200 Mbps. |

|

| Techtesters performed the above tests on the game... tested with a 13900K + RTX 4090 + 32 GB DDR5, at max settings, 4K resolution. |

The problem I have had, post-launch, is that the various sources of commentary/analysis have not produced very consistent results, mostly because the storage devices they are testing are completely different!

I would LOVE for someone with access to spare hardware (i.e. drives that do not hold their personal data) to test the scaling of a specific drive across multiple CPUs and, when a GPU decompression implementation is ready, across multiple GPUs.

However, having said all of that... I do believe that we can join some dots here... Looking at the available (and VERY sparse) data, we can draw the following conclusions:

|

| Taken from the above-linked video from Digital Foundry... |

We can see that, on otherwise identical hardware, loading times with the DirectStorage 1.0 implementation (utilising CPU decompression) do indeed scale with the CPU: less powerful CPUs take longer to load.

However, my own testing shows that the effect of the storage device is of much greater importance than the relative power of the CPU. A 12400 is considered to be more performant than the 5600X. In fact, my own results corroborated this when I tested Spider-Man. However, I have to report some strange results in this regard:

|

| My testing, performed on a R5 5600X + RX 6800... and i5-12400 + RTX 3070 - both on Win 10. |

Yes, the slower drives perform worse, in general, but (and this was repeatable across multiple tests) the DRAM-less WD SN570 performed worse with DS enabled.

Ignoring this one aspect, it seems that the performance of the drive carries more weight in the loading performance than the performance of the CPU - though the CPU does also have a role to play in this tale.

Secondly, I did not see any real improvement with DirectStorage turned on compared with turned off. Even with the SATA drive, DS only made a difference of around 0.5 seconds. I would hypothesise that the DirectStorage flag simply doesn't work in the demo - considering that Digital Foundry showed a tangible difference between Win 10 and Win 11 environments in the released game - but I can see a slight, repeatable difference between the flag turned on and off... meaning that it is doing something.

This is a point I am unsure of, and one that I really need someone with better access to hardware to test.
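One simple way to judge whether a difference this small is "repeatable" is to check that the two sets of runs do not overlap at all. The sketch below does exactly that; the function name and the example timings are hypothetical placeholders, not my measured data.

```python
from statistics import mean

def toggle_effect(on_times_s, off_times_s):
    """Compare mean load times with a setting on and off.

    Returns (difference in seconds, whether the two sets of runs do not overlap).
    A positive difference means the 'off' runs were slower.
    """
    diff = mean(off_times_s) - mean(on_times_s)
    separated = (max(on_times_s) < min(off_times_s)
                 or max(off_times_s) < min(on_times_s))
    return diff, separated

# Placeholder timings for illustration only.
diff, separated = toggle_effect([5.0, 5.25, 5.5], [5.75, 6.0, 6.25])
print(f"difference = {diff:.2f} s, runs separated: {separated}")
```

With only three repeats per setting this is a crude check rather than a statistical test, but it is enough to tell a consistent offset from noise.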

|

| From the Techtesters video linked above... |

Gameplay Performance...

This is where things get weird. While I had always predicted that shifting a load such as this onto the GPU would reduce graphics performance, I never thought that we would get a reduced performance from such a scenario on the CPU - after all, modern CPUs have a lot of parallelised scheduling that is hardly utilised by the majority of games (Forspoken included) so, there should be no equivalent reduction in game performance.

However, setting aside the erroneous claim that enabling DS reduced performance by around 10% on high-end hardware... Techtesters have also published that they observed a very (and I mean incredibly) slight reduction in average framerate and 1% low framerate during their manual in-game benchmarking. But, like my own strange, repeatable results, these must be reported as-is, without any real understanding of why they are observed.

From my side, in Windows 10 and using the Demo, I see no reduction in average performance when using slower storage devices, though there is an increase in the maximum frametime spikes (which correspond to minimum fps) as we proceed to the worse devices:

|

However, I would not ascribe this to DirectStorage, but rather to the fact that the engine/game is designed to expect data to be present for processing at any given point... and the slower devices are less likely to be able to provide that data in a timely manner. Alternatively, as seen in the table below, bringing the RX 6800 back to stock settings results in worse 10%, 5%, 1%, and minimum framerate values, which would indicate a GPU bottleneck. That seems strange, so I would presume that this is actually some CPU bottleneck or an inefficiency in the transfer of data to the VRAM (despite the larger quantity on the 6800 compared with the 3070).
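For readers unfamiliar with these metrics, the sketch below shows one common way to derive average, "low", and minimum framerates from a frametime capture. Definitions of percentile lows differ between capture tools, so treat this as an assumption on my part rather than the exact method behind the figures here; the function name and the capture are made up for illustration.

```python
def frametime_summary(frametimes_ms, low_fraction=0.01):
    """Summarise a frametime capture (milliseconds per frame).

    Returns average fps, the 'low' fps (average over the slowest
    low_fraction of frames), and the minimum fps implied by the
    single longest frame.
    """
    if not frametimes_ms:
        raise ValueError("empty capture")
    avg_fps = 1000.0 * len(frametimes_ms) / sum(frametimes_ms)
    slowest = sorted(frametimes_ms, reverse=True)
    n_low = max(1, int(len(slowest) * low_fraction))
    low_fps = 1000.0 * n_low / sum(slowest[:n_low])
    min_fps = 1000.0 / slowest[0]
    return avg_fps, low_fps, min_fps

# Made-up capture: 99 smooth frames and one 50 ms spike.
capture = [10.0] * 99 + [50.0]
avg_fps, low_fps, min_fps = frametime_summary(capture)
print(f"avg {avg_fps:.1f} fps, 1% low {low_fps:.1f} fps, min {min_fps:.1f} fps")
```

The single spike barely moves the average but dominates the 1% low and minimum values, which is why those metrics react to slow storage while the average framerate does not.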

In fact, I find the allocation and use of VRAM to be quite strange in this title - with 11+ GB utilised by the game when running on the RX 6800 but only 6+ GB on the RTX 3070... I encountered no places in my short testing where stutters occurred due to running out of VRAM, so I wonder if it is possible that the 6800 might run into issues with respect to data management between RAM and VRAM at inopportune times... which can be alleviated by increasing the memory frequency.

Additionally, I see that even the system with the SN570 (which gave very strange results) performed just as well as the AMD system - though it appears that the i5-12400 grants a slightly better maximum frametime when the system is CPU-constrained.

|

Conclusion...

All-in-all, the impression I have is that this title is mostly CPU-bound and that adding the additional overhead of DirectStorage decompression and batch loading is actually not helping it at all. In the meantime, I am looking forward to future implementations of DS, in order to properly analyse them and how they affect performance scaling on various system components. Of course, storage will always be a bottleneck... which is why I am primarily against the use of this technology on PC in the first place...
