Previously, I've looked at the performance uplift of RDNA 3 over RDNA 2 - both mid-range and low-end variants of the architecture - by testing at iso-clock frequencies. I love testing these things, so I've picked up the RX 9060 XT to pair against the prior-gen RX 7600 XT. Considering that RDNA 4 has been shown to be a pretty big upgrade over RDNA 3, I'm expecting some interesting results.

Setting up...

As in previous instalments of this series, let's get started by setting out the premise and background of this testing. For starters, I'm using the following system for testing:

- OS Windows 10

- Intel i5-14600KF

- Gigabyte B760 Gaming A AX

- Patriot Viper DDR5 7200 MT/s

- Sapphire Pulse RX 7600 XT

- Sapphire Pulse RX 7800 XT

- Sapphire Pulse RX 9060 XT

Now, you may notice a trend in the manufacturer of the GPUs but that's purely a price-conscious thing. These cards have always been at (or closest to) the MSRP in my region and, because I'm spending my own money, these are the cards I've picked up. I've also found that Sapphire cards are generally well-built and designed.

In the previous instalments, I noted that you shouldn't be testing GPUs near their power limits or at stock if you really want to see the generational improvement: you need to be in a non-power-limited region of the voltage/frequency curve on each architecture. However, the RX 9060 XT presents a bit of an issue in this regard. As ComputerBase mentioned in their testing, you can't downclock the RDNA 4 cards low enough, nor overclock the RDNA 3 cards high enough, to reach an overlapping frequency range.

Actually, this isn't entirely true. RDNA 4 effectively has no controls. Seriously, this is the worst software and most locked-down GPU generation I've ever experienced. It's very poor that a consumer can't tweak the settings on their GPU, and we've seen a regression in this aspect with each AMD generation since RDNA 1. RDNA 4 culminates in the almost complete loss of control over the product you've purchased. Want to set a negative core frequency offset? The RDNA 4 card will ignore it. Or, sometimes it won't... Oh, and what frequency is it offsetting from? Who knows!

Want to increase the core frequency offset? The card will crash. Hard. So, AMD, what exactly is your card doing? It will ignore user settings when it's convenient for you and then apply them unstably when it's not convenient for the user?

This is just poor design and very poor user control options. Give me granularity. Hell, I'd even settle for RDNA 3-level of control...

| What even is this..? Let me set a specific core frequency, please, AMD! |

*Ahem*

Anyway, as I was saying, the claim that you can't set the same core and memory frequencies is not entirely true. It can be done - I'm guessing that my RX 7600 XT just happens to have better silicon quality than the card ComputerBase was using. So, for most (not all) of the testing, the difference in operating frequency is a few megahertz either side of 2880 MHz on the core, which is negligible.

There are some titles (CounterStrike 2 and Spider-man: Remastered) for which the RX 9060 XT just ignores the user-set controls and boosts up to the mid-2900s. There's nothing I can do about it and, as we will see, setting a negative power limit is a bad idea on RDNA 4.

This does introduce a caveat to the testing. I know that the RX 7600 XT, even with its maximum +15% power limit, is likely power-limited in some or all of the testing scenarios we're about to see. So, keep this in mind when looking at the results.

Similarly, the RX 7800 XT is struggling here. It's a MASSIVELY power-limited product and even with the +15% power limit enabled, and appropriate frequency controls set, it just cannot boost to the required 2880 MHz target that I've set. In most titles, it's hovering around 2450 - 2600 MHz but it does come close in those CS2 and Spider-man benchmarks I noted above.

Moving on to the memory frequency: my RX 7600 XT can easily manage 2500 MHz, very close to the minimum 2518 MHz we have on the RX 9060 XT. The RX 7800 XT can also go much higher (due to its superior-quality GDDR6 modules), but I kept it at 2518 MHz to match the 9060 XT.

| Obviously, the main comparison is going to be between the N33 and N44 parts, but the N32 (RX 7800 XT) can be an interesting partner for this testing... |

In terms of the hardware, the RX 9060 XT is the successor to the RX 7600 XT, and this will be our main comparison. The two big differences we have to assess are the architectural improvements within the compute unit (CU), which are mainly focussed on ray tracing and I/O management, and the larger L2 cache.

RT has been vastly improved, and we're essentially looking at a doubling of base performance (ignoring other optimisations that have been made). For I/O, things are more complicated: the L1 cache has been turned into a buffer and, if I understand the leaks (along with the actual reporting) correctly, this, combined with the out-of-order data request management in the CU itself, allows for simpler instructions and better parallelisation of workloads on the dual FP32 units per CU that RDNA 3 failed to fully utilise in practice.*

*I'm not an expert, so don't take my word for it!

In practice, this appears to have required a larger L2 cache (256 KiB per WGP - Work Group Processor [or Dual Compute Unit/Compute Unit pair]*), which would make sense, as more data now needs to be replicated from the L3 into the L2 for each WGP to work on without the added latency of going straight out to L3. Again, if my understanding is correct, this actually brings RDNA's memory hierarchy management closer to Nvidia's, where primary cache flushes at the WGP/SM level happen automatically instead of through an explicit instruction.

Chips and Cheese refer to it as a coalescing buffer. This optimisation potentially saves a lot** of energy and may improve latency and system management overhead (as I alluded to above).

*And you thought that Nvidia's pronunciation of Ti was an issue within the company! AMD said, "Hold my beer..."

**Relatively speaking!
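To sanity-check the per-WGP L2 figure, here's a small Python sketch that divides each card's L2 capacity by its WGP count. The inputs are my assumptions: 32 CUs (16 WGPs) for both the RX 7600 XT and RX 9060 XT, 60 CUs (30 WGPs) for the RX 7800 XT, and L2 sizes of 2 MiB, 4 MiB and 4 MiB respectively, as discussed in this post.

```python
# Rough L2-capacity-per-WGP arithmetic (a sketch, not a measurement).
# Assumed specs: CU counts and L2 sizes as discussed in this post.
CARDS = {
    # name: (compute units, L2 size in KiB)
    "RX 7600 XT": (32, 2 * 1024),
    "RX 9060 XT": (32, 4 * 1024),
    "RX 7800 XT": (60, 4 * 1024),
}


def l2_per_wgp_kib(compute_units: int, l2_kib: int) -> float:
    """L2 capacity per Work Group Processor, where one WGP is a pair of CUs."""
    wgps = compute_units // 2
    return l2_kib / wgps


if __name__ == "__main__":
    for name, (cus, l2) in CARDS.items():
        print(f"{name}: {l2_per_wgp_kib(cus, l2):.1f} KiB of L2 per WGP")
```

Under these assumptions, the 9060 XT has 256 KiB per WGP. That is double the 7600 XT's 128 KiB and a bit more than the 7800 XT's ~136.5 KiB, since the bigger chip spreads the same 4 MiB across 30 WGPs.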

And that covers the large changes. There's also the process node improvement (TSMC N6 to N4P), which increases energy efficiency and maximum clockspeed - and we'll see this difference on display later on in this blogpost. But, for now, let's move on to testing the RDNA 4 part to see what it can (or can't) do...

Winding down...

As I pointed out before, in order to accurately assess the architectural uplifts we shouldn't be testing GPUs at or near their power limit. However, RDNA 4's lack of controls has forced us into doing exactly that. Previously, I showed that some RDNA 2 and 3 products were operating in that region, but we need to determine what is happening for the RX 9060 XT at stock settings.

| The RX 9060 XT is, much like its RDNA 2 and 3 counterparts, power and memory bandwidth-limited... |

First off, I tried to use my stalwart testing platform, Metro Exodus: Enhanced Edition, to see the power and memory scaling of the hardware. Unfortunately, this game is pretty broken on RDNA 4, so I had to drop it and instead turn to a more modern, but still complete, hardware workout: Avatar: Frontiers of Pandora. This title also includes a standardised built-in benchmark and provides a full workout for all the hardware on the chip.

Using this new standard, we can see that there is some headroom available to the RX 9060 XT, but not a lot. So, as with previous testing, we should opt to reduce the target core frequency to leave some power headroom. This would also reduce memory bandwidth requirements, limiting the impact of any memory bottleneck that could occur - however small.

| Further tweaking through undervolting and increasing the available power resulted in around a 2% uplift - but these settings were not reliably stable... |

One thing that I have now added to this pre-testing assessment is a workload with low hardware block utilisation but high throughput. For that, I could have chosen any e-sports title, but I'm most familiar with CounterStrike 2.

For this type of workload, there is essentially no power limitation (due to low utilisation of the hardware resources!) but we do see a 4% uplift from memory scaling. With a -50 mV adjustment on the core, the 2900 MHz memory test was unstable and actually resulted in a large regression to 259 fps, below the stock performance - so, user beware!

What I like about this test is that it shows us the performance of the part at high refresh rates - a completely different paradigm to that experienced in Avatar.

| A 4% uplift from memory scaling in CS2 is not bad... |

The main problem, from my testing, is that the RX 9060 XT cannot overclock the core frequency - or at least mine cannot*. Even a +100 or +200 MHz frequency limit (note: it's a boost limit, not a hard setting!) results in instability and random graphics driver crashes. I feel like this is unintended behaviour: the user is not forcing the graphics card to boost higher, and it should be boosting based on the available thermal, voltage and power headroom. So, this seems like a bug at the time of testing. I'll have to see whether it gets fixed at some point in the future...

So, the main take-away from all of this is that, unfortunately, none of the cards I'm testing will be in the optimal configuration for this analysis. We would expect higher numbers for the RX 7800 XT, slightly higher numbers for the RX 7600 XT and, finally, only very slightly higher numbers for the RX 9060 XT (despite the uncontrolled downclock), due to its memory bandwidth bottleneck...

*I'm sure people will tell you that you can - but how thoroughly have they tested? On what types of games and engines?

Iso-Clock Benchmarking...

I guess we have to start somewhere, and it seems I've gone through my tests in alphabetical order this time, so hang onto your hats for this wild ride of iso-clock* testing!

*As much as I was able!

What's important to note about the test graphs is that I'm charting the "average moving fps", the "differential frametime" (aka dF) - which is an indicator of relative smoothness of experience, and the "maximum frametime".

Here's how to interpret these numbers. The average fps tells you about the user experience across the entire test, and it is not just a straight average of the frametimes in the test (which would be wrong). The maximum frametime corresponds to the worst "stutter" in the test period (i.e. higher is worse!). Finally, the differential frametime tells you the number of times that the card's performance deviated from the average by more than a certain limit. This limit is currently set at 3 standard deviations from the mean, except where I was using an in-game benchmark rather than capturing the results directly (i.e. Avatar and Returnal). In other words, the dF shows how many times there was a positive sequential frametime deviation big enough that the user might notice and feel it.

The only confounding factor here is that a higher framerate will generally result in a higher dF number, even if the same "process performance" is achieved as on a lower-framerate GPU. So, if you see a higher fps and a higher dF, it doesn't mean that the result is worse. It just means that it's a little less stable, but not terribly so!
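Here's a minimal Python sketch of those three metrics. It reflects my reading of the definitions above, not necessarily the exact method behind the charts. Average fps is taken as frames delivered divided by total capture time, the max frametime is the single worst frame, and dF counts frame-to-frame frametime increases larger than the mean delta plus three standard deviations. The function name `summarise_capture` and the exact threshold formula are hypothetical.

```python
import statistics


def summarise_capture(frametimes_ms, n_sigma=3.0):
    """Return (average fps, max frametime in ms, dF count) for one capture.

    A hypothetical interpretation of the metrics described in the text.
    """
    if len(frametimes_ms) < 2:
        raise ValueError("need at least two frames")
    # Frames delivered per second of capture time (not a mean of per-frame fps).
    avg_fps = len(frametimes_ms) / (sum(frametimes_ms) / 1000.0)
    max_frametime = max(frametimes_ms)
    # Sequential frametime changes: positive means this frame took longer than the last.
    deltas = [cur - prev for prev, cur in zip(frametimes_ms, frametimes_ms[1:])]
    limit = statistics.mean(deltas) + n_sigma * statistics.pstdev(deltas)
    # Only count positive jumps that exceed the limit (the ones a player would feel).
    d_f = sum(1 for d in deltas if d > 0 and d > limit)
    return avg_fps, max_frametime, d_f
```

For example, a 100-frame capture at a steady 10 ms with one 30 ms spike in the middle returns about 98.0 fps, a 30 ms max frametime and a dF of 1. The -20 ms recovery after the spike isn't counted. Because a higher-fps capture contains more frame-to-frame deltas, it also has more chances to trip the limit, which is the confounding factor described above.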

Give me feedback in the comments!

One last thing. I've performed the majority of these tests at 1080p and that's mostly due to the quality settings I'm testing against (I really want to challenge the RT performance of the 9060 XT) and also due to the general capability of the GPUs on show. While the 7800 XT is clearly a 1440p card, the other two are struggling even at 1080p in many cases...

| *RX 7800 XT only achieved 2450 MHz in AW2... |

First off, let's look at Alan Wake 2. We can see here that the 9060 XT is performing well above its weight class at around 31 fps, compared to 22 fps for the 7600 XT at essentially the same core and memory frequency. That's a pretty decent uplift, gen-on-gen. What that uplift doesn't do is beat the RX 7800 XT, even though that card is running roughly 400 MHz lower on the core. It clocks in at 37 fps, showing that the wider compute really helps here. Additionally, I'm pretty sure that the N32 part (7800 XT) has greater bandwidth to the L3 cache as well as to the GDDR6 - almost double, in fact!
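For the VRAM side of that bandwidth claim, here's a quick Python sketch of peak GDDR6 bandwidth at the matched 2518 MHz memory clock. It assumes a 128-bit bus on the RX 7600 XT and RX 9060 XT and a 256-bit bus on the RX 7800 XT. It also assumes that the clock shown in Adrenalin maps to the per-pin data rate by a factor of 8 (so ~2500 MHz corresponds to ~20 Gbps). That multiplier is an assumption on my part, and Infinity Cache (L3) bandwidth isn't modelled at all.

```python
def gddr6_bandwidth_gbs(bus_width_bits: int, reported_clock_mhz: float,
                        clock_to_data_rate: float = 8.0) -> float:
    """Peak VRAM bandwidth in GB/s.

    Assumes the reported memory clock times `clock_to_data_rate` gives the
    per-pin data rate in MT/s (an assumption, not a documented formula).
    """
    data_rate_gbps = reported_clock_mhz * clock_to_data_rate / 1000.0  # Gbps per pin
    return bus_width_bits * data_rate_gbps / 8.0  # bits -> bytes


for name, bus in [("RX 7600 XT", 128), ("RX 9060 XT", 128), ("RX 7800 XT", 256)]:
    print(f"{name}: {gddr6_bandwidth_gbs(bus, 2518):.1f} GB/s at 2518 MHz")
```

On these assumptions, the two 128-bit cards land at about 322 GB/s and the 256-bit 7800 XT at about 645 GB/s. At matched memory clocks, the VRAM difference comes purely from bus width, so that part of the gap is exactly 2x.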

Avatar told a similar but even more impressive story. At Ultra settings, the gap between the 7600 XT and the 9060 XT narrowed somewhat, while the gap between the 9060 XT and the 7800 XT grew. Meanwhile, at Low settings, the gap between the 7800 XT and 9060 XT widened to 33%, while the 9060 XT's lead over the 7600 XT shrank to just 18%. I feel pretty confident that this behaviour is down to the available memory bandwidth, as the processing demand drops off at lower settings.

| *RX 7800 XT achieved 2855 MHz in CS2 and 2456 MHz in Hogwarts... |

CounterStrike 2 is the first, and only, game where the tables are turned. Not only does the 7600 XT beat the 9060 XT in average fps, but it also has a lower max frametime and dF value. This indicates that, when tested at iso-frequency, the RDNA 3 part provides a better and smoother experience!

Obviously, the 7800 XT blows the other two GPUs out of the water. It doesn't even need to be said. The very large dF value might give you pause, but the MUCH lower max frametime tells you that these deviations are smaller, if more frequent, than on the other two GPUs. In short, the RX 7800 XT is a beast in e-sports titles... and in this test, it is able to reach and maintain 2855 MHz throughout.

This is really the first sign of weakness for the 9060 XT and, in my opinion, points to another weakness in that card - the lack of dedicated (or perhaps more accurately, managed) L1 cache.

I believe that this performance deficit can only be explained by the added latency of having to travel out to L2 or L3, whereas on the 7600 XT the game can keep working closer to the CU, in L1...

What's interesting is that no one else I've seen reporting on the RDNA4 architecture has covered anything similar to this*...

*Of course, last night, eTeknix did so just to spite me! (though not iso-clock testing)

We'll come back to this later.

Hogwarts shows an impressive result for the RX 9060 XT: at 47.8 fps, it leads the 7600 XT and just inches past the 7800 XT at 46.9 fps. However, it's not a happy win for the new contender. The smoothness of the result is pretty poor compared to the RX 7800 XT, with more sequential frametime deviations despite a slightly lower max frametime...

| *RX 7800 XT achieved 2567 MHz in Indy and 2696 MHz in R&C... |

Indiana Jones brings us back to the status quo, with the 7600 XT really struggling to play the game smoothly, even if the average is north of 60 fps. As with Hogwarts, the ray tracing chops of the 9060 XT are gnawing at the workload, but the experience is a little uneven compared to the 7800 XT. This may be a driver thing, but it may also be a memory bandwidth issue.

If we look at the frametime plots for each configuration on the 9060 XT and 7800 XT, we can see spikes throughout the test run. The spikes actually reduce on the 7800 XT when its memory is running at stock instead of 2518 MHz. This may indicate that the overclocked memory configuration is unstable in this title: the card pushes out a frame faster but then instantly falls behind.

This game probably needs an fps cap to run more smoothly - at least on this selection of cards.

| Frame-pacing is quite bad in this title and on these cards. On a VRR display, I mostly don't feel it, but some of the big spikes above 16.6 ms are felt even with that... |

Ratchet and Clank, meanwhile, is another upset - this time in favour of the 9060 XT. It almost matches the 7800 XT, if not quite in terms of smoothness... but it's very close. Insomniac's engine really likes RDNA architectures, but the improvements on display here suggest that the way RT and data structures are managed on the consoles has carried over well to RDNA 4 (and probably RDNA 5). The result is performance beyond that of the other titles in this analysis.

These titles are therefore statistical outliers and should be treated as such...

| *RX 7800 XT achieved 2813 MHz in Spider-man... |

Returnal brings us back to normality.

The 9060 XT is better than the 7600 XT but not the 7800 XT. What's interesting is that the maximum frametime is better on the 9060 XT than on the other GPUs.

For Spider-man: Remastered, we see the same Insomniac engine pattern: the 9060 XT beats the 7800 XT in average fps and maximum frametime, while matching it in smoothness.

So, overall, the RX 9060 XT is around 10% better than the RX 7600 XT in an iso-clock configuration if we ignore the outliers of the Insomniac engine games. If we take those biased games into account, it's a 16% gen-on-gen architectural increase.

In comparison, the RX 7800 XT shows a 36% increase (ignoring the Insomniac outliers) and a 30% increase with the outliers included.

This is bad...
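I haven't spelled out exactly how the averages above are computed, so treat this as a hedged sketch of one common approach rather than my exact method. It takes a geometric mean of per-game fps ratios and uses an `exclude` parameter to drop outliers such as the Insomniac titles. Geometric means are a usual choice for averaging ratios because a 2x win and a 0.5x loss cancel out exactly. The helper names are hypothetical.

```python
import math


def geomean(values):
    """Geometric mean, a common way to average per-game performance ratios."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


def average_uplift(new_fps, old_fps, exclude=()):
    """Average per-game ratio new/old, optionally leaving out outlier titles.

    `new_fps` and `old_fps` map game name -> average fps for the same settings.
    """
    ratios = [new_fps[g] / old_fps[g] for g in new_fps if g not in exclude]
    return geomean(ratios)
```

As a usage example with the only pair of figures quoted in the text, `average_uplift({"Alan Wake 2": 31.0}, {"Alan Wake 2": 22.0})` gives about 1.41x. Averaging the full game list would need the numbers from the charts.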

Architectural Measurements...

Once again, I'll be taking advantage of the wonderful tests authored by Nemez to perform these microbenchmarks. Maybe one day I will have the time to actually be able to build the evolutionary descendant version of these tests from Chips and Cheese...

| These tests measure the competencies of the core architecture, so we'd expect similar results gen on gen per resource unit... |

In an iso-frequency test, the RX 9060 XT has a slight architectural improvement over the RX 7600 XT in all tests. Of course, the RX 7800 XT, having 1.875x the compute resources, is around 1.8x the RX 7600 XT and 1.7x the RX 9060 XT. That is completely in line with expectations, because these things do not scale linearly... (and we also know that the RX 7800 XT is power-limited and may not even have been able to maintain the required core frequency during these tests).
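To put a number on "not linear", here's a tiny Python sketch comparing the measured performance ratios with the resource ratio. The 1.875x comes from 60 CUs versus 32 CUs. The "efficiency" is simply performance per CU relative to the smaller card, where 1.0 would be perfectly linear scaling.

```python
def scaling_efficiency(perf_ratio: float, resource_ratio: float) -> float:
    """Performance per unit of resource relative to the smaller card (1.0 = linear scaling)."""
    return perf_ratio / resource_ratio


cu_ratio = 60 / 32  # RX 7800 XT vs the 32-CU cards = 1.875
print(f"vs RX 7600 XT: {scaling_efficiency(1.8, cu_ratio):.2f}")
print(f"vs RX 9060 XT: {scaling_efficiency(1.7, cu_ratio):.2f}")
```

With the ~1.8x and ~1.7x figures above, that works out to roughly 0.96 and 0.91. In other words, the 7800 XT delivers about 96% and 91% of perfectly linear per-CU scaling against each card, before accounting for its power limit.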

Moving on to more complicated calculations: we had previously observed a big difference between RDNA 2 and RDNA 3 when comparing the RX 7600 XT to the RX 6650 XT. Here, though, the architectures are pretty much of a muchness! We do see the 7600 XT eke out a victory over the 9060 XT in the FP16 inverse square root result... which I'm sure is really useful.

Now, after the pretty boring calculation results, the cache and memory bandwidth results tell quite an interesting story!

| The larger L2 cache in the 9060 XT makes a big difference... |

The 7800 XT still massively wins in small data transfers, but the 9060 XT matches and beats it in two regions of the graph: around 80 - 128 KiB and 1 - 8 MiB. For the former, I'm wondering if the previously covered buffer between the compute unit and L2, paired with that wider and faster L2 cache, is the reason. The subsequent drop-off after 128 KiB makes sense given that (I believe) the buffer is still 128 KiB in size (as was the L1 cache in RDNA 3). What really confuses me is the plateau between 1 - 8 MiB.

I don't really have a good mental model of what's going on there. This result really blasts away both the prior-gen equivalent 7600 XT and the much stronger 7800 XT. I could understand if this plateau only extended to 4 MiB (the size of the L2 cache), but continuing up to 8 MiB is a little weird. Or perhaps it's not... The 7600 XT also has a plateau from 2 MiB (its L2 cache size) to 4 MiB (the 7800 XT has no such plateau until double its L2 cache size).

What is quite shocking is the abrupt drop-off after that plateau.

The other RDNA cards I've tested show a more gradual decline in bandwidth, but once the 9060 XT is done, it's done! And this may explain the CounterStrike 2 performance we're coming to next...
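To make the plateau question concrete, here's a deliberately naive Python model. It reports which cache level *should* hold a given working set on each card, based on capacity alone. It ignores associativity, replacement policy, how the test walks through memory, and how the caches are shared, so it's a hypothetical sanity check, not an explanation. The L2 sizes are the ones discussed above, and the L3 (Infinity Cache) sizes of 32 MiB, 32 MiB and 64 MiB are my assumptions.

```python
# Naive capacity-only model: which level would a working set fit in?
KiB, MiB = 1024, 1024 * 1024

# (L2 size, L3 / Infinity Cache size) in bytes - assumed capacities.
CACHES = {
    "RX 7600 XT": (2 * MiB, 32 * MiB),
    "RX 9060 XT": (4 * MiB, 32 * MiB),
    "RX 7800 XT": (4 * MiB, 64 * MiB),
}


def nominal_level(working_set: int, l2: int, l3: int) -> str:
    """Smallest cache level whose capacity alone could hold the working set."""
    if working_set <= l2:
        return "L2 (or closer)"
    if working_set <= l3:
        return "L3"
    return "VRAM"


for size in (1 * MiB, 2 * MiB, 4 * MiB, 8 * MiB, 16 * MiB):
    row = ", ".join(f"{n}: {nominal_level(size, *c)}" for n, c in CACHES.items())
    print(f"{size // MiB:>2} MiB -> {row}")
```

On this model, an 8 MiB working set should already be spilling into L3 on the 9060 XT. A bandwidth plateau that holds out to 8 MiB therefore isn't explained by L2 capacity alone, which is consistent with my confusion above rather than a resolution of it.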

Looking at the vector and scalar cache latencies, we see that the RDNA 4 architecture suffers a latency hit WAY earlier than RDNA 3 does. What's happening here is that, once the caches on the compute unit are exceeded, the (former L1) buffer does nothing for latency. Instead, everything is predicated on the performance of the L2, which is why the latency stays flat until the L2 is exceeded. Latency then takes another relatively large hit (compared to RDNA 3) when heading out to the L3 cache, and once again when fetching from VRAM.

This also ties in with the poor performance we will discuss for CounterStrike 2...
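A standard way to reason about this is average memory access time (AMAT): each level's latency is paid only by the fraction of accesses that miss every level above it. Below is the generic form for the hierarchy discussed here, where t is a level's hit latency and m its miss rate. No measured values are implied. The second line treats RDNA 4's former L1 as a pass-through buffer with its own small cost t_buf (my simplifying assumption), so every miss in the CU's caches pays the L2 latency.

```latex
\mathrm{AMAT} = t_{\mathrm{CU}} + m_{\mathrm{CU}}\Big[t_{L1} + m_{L1}\big(t_{L2} + m_{L2}(t_{L3} + m_{L3}\,t_{\mathrm{VRAM}})\big)\Big]

\text{with } m_{L1} = 1,\ t_{L1} = t_{\mathrm{buf}}:\quad
\mathrm{AMAT} = t_{\mathrm{CU}} + m_{\mathrm{CU}}\Big[t_{\mathrm{buf}} + t_{L2} + m_{L2}(t_{L3} + m_{L3}\,t_{\mathrm{VRAM}})\Big]
```

When a working set fits in the old L1, RDNA 3 pays roughly t_L1 on those misses. On RDNA 4, the same accesses pay at least t_L2 under this simplification, which matches the earlier latency step seen in the chart.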

| Yes, this is a little broken for the RDNA4 part past 16 MiB... Ignore it. |

E-sports failure...

Let's circle back and take a look at that CounterStrike 2 result where the RX 7600 XT beats the RX 9060 XT under iso-clock conditions...

| Now back to stock settings, we investigate the scaling in CS2... |

Unfortunately, as I mentioned previously, you can't reliably push the upper clock limit higher in the Radeon Adrenalin software - it just crashes the driver. I did manage to capture a couple of minor increments (between crashes!) and you can see the results of those in the above blue chart. What we can observe is that we get a 1% (5 fps) increase in average fps for a 200 MHz core increase. Additionally, we see a regression in performance if we switch the VRAM to the "tighter" timings in the Adrenalin software*.

*I recommend not using this on RDNA 4; based on my anecdotal testing, the looser timings appear to be better for the memory system in general...

I already showed memory scaling earlier in this post. A 4% gain is nothing to write home about for 400 MHz of extra memory speed, so there's most likely a bottleneck elsewhere in the architecture, one more related to data management than to core speeds and memory timings.
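One way to quantify "nothing to write home about" is a clock elasticity: the percentage performance gained per percentage of clock added. Here's a minimal Python sketch, assuming the extra 400 MHz is on top of the 2518 MHz stock memory clock. The function name is hypothetical.

```python
def clock_elasticity(perf_gain_pct: float, base_clock_mhz: float, clock_delta_mhz: float) -> float:
    """Percent performance gained per percent of clock added (1.0 = perfect scaling)."""
    clock_gain_pct = 100.0 * clock_delta_mhz / base_clock_mhz
    return perf_gain_pct / clock_gain_pct


# Memory: ~4% more fps for +400 MHz, assuming a 2518 MHz starting point.
print(f"{clock_elasticity(4.0, 2518, 400):.2f}")
```

That comes out at about 0.25, so only around a quarter of the extra memory clock turns into frames. The same function works for the core-clock result if you plug in the stock boost clock you actually observe as the base.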

What is interesting is the scaling between settings on the three cards:

| Performance ratio and GPU utilisation... (Stock hardware) |

At low settings, we can assume that we're at a CPU limit, as the performance of all three cards is near-identical. Moving up to medium settings, the 9060 XT is crushing it - no effect from scaling! The other two cards scale, with the 7600 XT, with its smaller cache and compute, suffering a bit more.

However, when we move up to high settings, the 9060 XT just crashes and burns. Now, I'd like to explain this with the %GPU utilisation, since both the 7600 XT and 9060 XT have reached their limit. But the 7800 XT is very close in utilisation as well, yet does not suffer this drop-off in performance.

I've said before that GPU utilisation isn't a number that you can blindly trust* and, yes, this is another of those occasions. I know for a fact that different workloads will present as 100% utilisation despite not "utilising" the entire GPU. That's why I test different types of games in my initial scaling tests when investigating an architecture and deciding "where" I should be testing in terms of frequency, power and memory limits.

*In fact, I'm at the point where we can't just point to any simplistic metric as a real and trusted measure ...

You see, the 7800 XT climbs 15% in utilisation from medium to high, whereas the 9060 XT climbs 10% and the 7600 XT climbs just 4%. This indicates to me that the cards with the smaller L2/L3 caches are spending more of their "utilised" time on data management. It's the same VRAM capacity conversation that everyone's having, only applied to the memory subsystems inside the GPU. The 9060 XT's larger 4 MB L2 saves it, until it doesn't: once the bandwidth to L3 becomes saturated.

| CounterStrike 2 settings comparison... |

Looking at the differences in quality settings, we can see that moving from medium to high makes a large quality difference. If we track down the reason for the drop by manually changing each individual setting, we can see that the biggest offenders are the move from 2x to 4x MSAA and turning off upscaling.

Now, I'm not the most knowledgeable person when it comes to rendering technologies. Upscaling I understand: we're talking about rendering at a lower resolution and scaling up to the native resolution (typically*) of the monitor you're outputting to. MSAA (MultiSample Anti-Aliasing) I'm less confident on. If I understand correctly, it tests triangle coverage (and depth) at several sub-pixel sample locations within each pixel, while running the pixel shader only once per triangle per pixel. This smooths geometry edges without shading every sample. From what the wiki article says, it's a very intensive process in terms of bandwidth and fillrate.

*We could render to a higher resolution and then allow a downscale to the native resolution. I do sometimes do this - for example, on 1080p screens...

We know the 9060 XT has higher latency for memory operations and, if that 8 MiB limit (seen above) is breached, bandwidth falls off a cliff. In my understanding, that's what's happening here. The higher resolution requires more data and more pixels to be processed, and 4x MSAA essentially doubles the per-pixel sample work of the lower setting. Individually, each of these pushes up against those data and bandwidth limits, but combined they destroy the RDNA 4 part.
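For a feel of the data volumes involved, here's a rough Python sketch of the uncompressed footprint of a single multisampled render target at 1080p. It assumes 4 bytes of colour (RGBA8) plus 4 bytes of depth/stencil per sample. CS2's actual formats, extra buffers and the GPU's surface compression are unknown to me, so treat this as an order-of-magnitude check only.

```python
def render_target_mib(width: int, height: int, samples: int,
                      bytes_per_sample: int = 8) -> float:
    """Uncompressed colour+depth footprint of one multisampled render target, in MiB.

    Default of 8 bytes per sample = 4 bytes RGBA8 colour + 4 bytes depth/stencil
    (an assumption; real games use other formats and GPUs compress these surfaces).
    """
    return width * height * samples * bytes_per_sample / (1024 * 1024)


for samples in (2, 4):
    print(f"1080p, {samples}x MSAA: {render_target_mib(1920, 1080, samples):.1f} MiB")
```

This prints about 31.6 MiB for 2x and 63.3 MiB for 4x. Going from 2x to 4x doubles the footprint, and both are far beyond the L2 sizes discussed above. Turning off upscaling raises the internal pixel count, and with it the footprint, even further.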

| Trying to see what the cause of the precipitous performance penalty is... (Stock hardware) |

So, here's what all this data is pointing towards: RDNA 4 doesn't have a massive Compute Unit architectural uplift over RDNA 3, with the huge caveat that the ray tracing improvements are quite large. We can see from the microbenchmarks that the 9060 XT is only minimally faster than the 7600 XT. The big change comes in the cache hierarchy, and it seems that this is both a positive and a negative, depending on the situation.

What partially rescues the RDNA 4 part is that, like RDNA 2, it gets another clockspeed bump - we're typically looking at a 400 - 600 MHz higher core frequency compared to the 7600 XT...

Stock Benchmarking...

Taking that into consideration, let's revisit those iso-clock tests, now with the stock hardware configurations.

In Alan Wake 2, both RDNA 3 cards regress slightly due to their lower clocks, but the RDNA 4 card doesn't improve much, as its core frequency only rises by around 250 MHz. This game is pretty hard on RDNA cards in general!

Avatar is pretty much the same story. The RDNA 4 part just doesn't perform better. In both of these tests, I think we're bumping up against a bandwidth or power limitation - though I have tested these configurations with the power raised as high as it will go and the RDNA4 part does not perform better.

CounterStrike 2 sees a small ~25 fps uplift for around a 470 MHz core clock increase. Hogwarts does see a slight increase for both the 9060 XT and 7800 XT, with a small regression for the 7600 XT. It's possible that the 7800 XT was a little unstable with the increased memory speed, which could explain its worse result in the "iso-clock" configuration.

Indiana Jones has a slight improvement for the 9060 XT and an associated decrease for the 7600 XT - due to the changes in core clock speed on both cards: +340 MHz and -155 MHz respectively.

Ratchet and Clank has a pathetic 5 fps increase for 400 MHz core increase on the 9060 XT, while the other two cards drop 1-2 fps for their -180 MHz and -80 MHz core clock regressions.

Returnal doesn't see much change at all: a 4 fps boost for the 9060 XT and effectively the same performance for the other two cards.

And, finally, Spider-man showed some very strange results - which I can only assume to be a driver issue on the latest Adrenalin drivers because both the 9060 XT and the 7600 XT performed worse than when I was manually setting clock limits. So, let's just leave that title aside, for the time being...

Conclusion...

It's very clear that RDNA 4 has a heavy focus on ray tracing (finally?) but isn't that impressive in other aspects compared to the prior generation. When a workload overwhelms the memory and cache subsystem, there isn't enough bandwidth to overcome those limitations.

When I first started testing the RX 9060 XT, it felt weird. There was something off about it. Performance is not bad, but also not good. Performing slightly worse than the 54 Compute Unit RX 7700 XT is impressive in its own way, but terrible when you consider that we didn't really get much of a generational uplift from 2020 to 2023 (from both Nvidia and AMD!). The fact that the RX 7800 XT either matches the 9060 XT or blows it out of the water is an indictment of the over-segmentation of GPU tiers within each generation. AMD need to be offering more CUs per price point, not fewer.

Sure, the RX 9070 XT seems like a more impressive part (unfortunately, no similar comparison can be made there!), but it's not priced like a mid-range product. It's priced like a high-end part.

I don't dislike the 9060 XT, but I also think it's priced too high. I bought mine for essentially MSRP, at €375*. It's merely okay at that price, because the RX 7700 XT was available at €400 at points over the last year and a half. Sure, there's a decent uplift compared to the RX 7600 XT, but I bought that card for €320. When you factor in the price increase, you're only getting ~25% more performance in RT workloads and +10% more performance in rasterised workloads for your money.

That's not great.

*You can now get them for around €360 - 370...
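As a quick value check, here's a small Python sketch using the two prices I paid (€320 and €375). It shows how much faster the new card has to be just to keep the same performance per euro. You can plug in any measured uplift to see whether value for money improved. The function name is hypothetical.

```python
def perf_per_euro_change(perf_ratio: float, old_price: float, new_price: float) -> float:
    """Relative change in performance per euro (1.0 = same value for money)."""
    return perf_ratio / (new_price / old_price)


price_ratio = 375 / 320
print(f"Price ratio: {price_ratio:.3f}")
print(f"Break-even uplift: {(price_ratio - 1) * 100:.1f}%")
```

At those prices, the 9060 XT costs about 17.2% more, so any uplift below that means less performance per euro than the 7600 XT offered.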

Here are my final thoughts: If you want to play the latest RT triple-A games at higher settings, you should be buying a more expensive GPU, because the RX 9060 XT just doesn't have the grunt to pull that off. If you want to play rasterised games, then the 9060 XT is the cheapest 16 GB graphics card you can buy and, in that sense, it's worth it.

However, if you want to play high fps e-sports titles, don't upgrade from any GPU within the last generation or two. You won't see a real benefit from RDNA 4 unless you're looking to spend above €600...

[Update 29/08/2025]

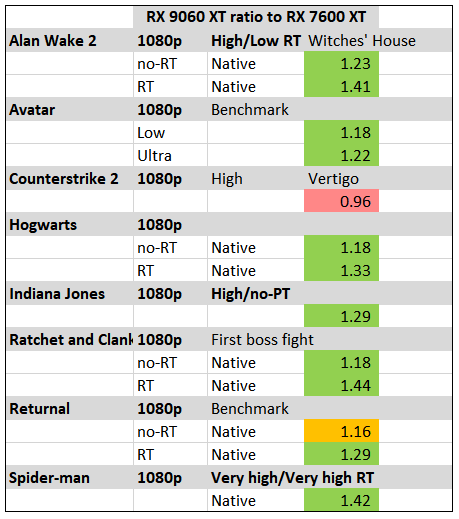

I realised that I forgot to add the uplift summary, as I have done previously. So, here it is!

| Compared to the uplift of RDNA3, RDNA4 fixes a lot of things that were holding the architecture back... (Performed at iso-clock settings) |

I actually performed some extra non-RT tests which I've included above and, as you can see, the doubling of the RT throughput in each DCU is where the majority of the gen-on-gen performance uplift comes from. The averaged non-RT performance uplift comes to 1.15x, whereas the averaged RT performance uplift comes to 1.34x. Looking at the micro benchmark results, we see an average of 1.05x uplift over RDNA3.

All of these combined results imply that the majority of the remaining performance uplift lies with the improvements to the data management in the cache hierarchy.
