| Literally what happens... |

Summer is here and temperatures are on the way up. I live in a hot climate, meaning that the average daily temperature from May to September is in the mid-thirties degrees Celsius, if not higher. I don't have AC in the computer room and, even if I did, it wouldn't make much sense to purposely heat up the room only to spend more money on cooling that extra heat back down to a comfortable level.

This situation is why I care so much about airflow in cases: in a hot environment the intake air is already warm, so the temperature difference between that air and the components is smaller than it would be in a cooler climate, and you must pass more air through the case to remove the same amount of heat.

But there is something else you can do to improve component temperatures that will help in not only this situation, but also as a general principle. Let's do a quick dive into whether undervolting, underclocking, and power limiting your components (specifically the graphics card) is worth trading performance for...

Heat, huh, what is it good for...?

Let's first define what we want to avoid, so we can quantify the benefits versus the drawbacks. The two items on today's list are:

- Heat

- Noise

Let me start with noise and get back to heat. Noise is annoying and distracting - especially a constant high-pitched whine or a pitch that keeps changing. Fans on components emit exactly these noises when they operate at high speeds, and it gets worse when they change speed (i.e. the fans ramp up or down in revolutions per minute - rpm).

Even though I play games wearing headphones, I don't have them so loud that I can't hear high-pitched fan sounds or fan speed ramps when the components get hot. So, ideally, I (and I'm pretty sure most people - even if they're not wearing headphones) would like to minimise these disturbing sounds during gaming/general PC use.

Heating up...

Getting back to heat: Electrical components are designed to work hot. Yes, they can be pushed further if they are kept cool but many semiconductor computer components, like SSDs, actually need to be slightly warm in order to operate efficiently... but too warm and they stop operating properly.

| Curve showing the general trend of resistivity vs. temperature for semiconductors... |

As per my understanding of semiconductor theory, CPUs and GPUs work under these same constraints:

At low temperatures (we're talking sub-zero Celsius here), the resistance in the circuits will increase to such an extent that more power* is required to operate the circuit. Conversely, their operation also gets less efficient as temperatures increase past ambient which, ironically, means that more current/voltage needs to be applied in order to keep working at the same frequency because the thermal noise level rises with temperature, causing errors during calculations due to false/masked signals.

This situation can also result in thermal runaway*** because pumping more voltage into a chip increases the power used, which results in more heat to dissipate: the transistors generate heat through leakage and through draining every time they switch**.

*I.e. you need more current to force enough electrons across the band gap into the conduction band so that a signal can be made. Since V = IR, voltage must also be increased if either current or resistance is increased... and obviously it increases if both are increased. Since P = IV, the amount of power consumed also increases.

**Which is why liquid nitrogen overclockers have to push up their voltage so high. Even though they're operating under conditions where the resistance of the various semiconductor parts of the chip is higher, this value pales in comparison to the required heat dissipation created by increasing clock speed which, in turn, results in much more waste heat through leakage and draining of the transistors.

***Modern processors have a lot of in-built protections... but you could literally burn your chip to a non-functioning crisp through thermal runaway in the early days. I suppose it's still technically possible but I believe the probability of meeting those conditions is very low... What happens nowadays is that the chips will dynamically lower frequency until the thermal load is considered "acceptable" by some internal hardware or software logic.

Of course, this then comes back to what enthusiasts like to call "the silicon lottery" because the quality of the silicon (i.e. how many contaminants and defects it has - which will affect leakage, resistance and other aspects) has an effect on how much clock frequency can be applied at any given voltage. And THIS is where the variability of the advice in this blogpost will come into play.

One more thing to note before we get down to business: overclocking, over-volting and allowing your components to run at high temperatures can reduce the life expectancy of those components. This occurs through silicon aging and it is why I do not, and will never, suggest or promote overclocking in this day and age*.

*The primary reason being that the manufacturers (AMD, Intel, Nvidia) are binning their chips so aggressively these days that there is practically no headroom in the particular designs you buy compared to the early days of computing when you could easily overclock silicon that was manufactured on a mature node. Seriously, the silicon fabs (TSMC, Intel, Global Foundries, etc.) have gotten so good at their processes and working with their clients to identify the sweet spot and upper limits of chip capabilities that the end-user does not need to perform overclocking. Your chip is most likely working near its upper limit in terms of performance per watt. In some cases, beyond what is desirable. That's why I am making this article... the only way is down.

Backing up a bit to summarise: heat reduces efficiency, reduces performance, and also shortens the lifespan of the technology you are using. Unfortunately, not everyone will be able to achieve the same noise/heat/performance benefits that I have, but I don't believe that this should stop you from trying your hand at this: you will mostly benefit unless you are running a 144+ Hz monitor and require super high framerates for competitive gaming... But then, that sort of person wouldn't even BE here in the first place for this sort of content (at least I don't think they would be!?)...

System Setup...

One of the first things I do when building a system is to go into the BIOS and work out a nice quiet fan curve for the case fans and CPU fan, and I tie this to the CPU temperature - NOT the case temperature, which is usually the default for case fans*. The reason for this is that it doesn't actually matter what the temperature in the case is; the temperature of the components (VRMs, RAM, CPU, GPU and storage) is what we really care about. If the temperature in the case is 40 C but our CPU or GPU is 100 C then we're not really helping the situation.

Either the cooling solution or fan curve on the component in question is insufficient for the job, or the fan curve on the case fans is not ramping up enough because the system temperature does not really reflect the component hot zone temperature - which is the reality in most situations.

*This may not be the most optimal solution, but it works for me and I think it works for 99% of use cases.

However, you don't need to ramp the fans up to 100 % and keep them there; that's just asking for trouble... You'll get statistically fewer fan failures when running at lower speeds and also, most importantly, less noise to disrupt your gaming sessions.
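To make the fan curve idea concrete, here is a minimal sketch of how a temperature-driven curve can be evaluated: a handful of (temperature, duty) points with linear interpolation between them. The curve points and the `fan_duty` name are hypothetical and chosen purely for illustration; they are not the settings used in this build, and a real BIOS may interpolate or step differently.

```python
from bisect import bisect_right

# Hypothetical curve points: (CPU temperature in deg C, fan duty in %).
# Illustrative values only, not the BIOS settings used in this post.
FAN_CURVE = [(40, 30), (60, 45), (75, 70), (85, 90)]


def fan_duty(temp_c, curve=FAN_CURVE):
    """Return the fan duty (%) for a CPU temperature via linear interpolation."""
    temps = [t for t, _ in curve]
    # Below the first point or above the last one, hold the end values.
    if temp_c <= temps[0]:
        return float(curve[0][1])
    if temp_c >= temps[-1]:
        return float(curve[-1][1])
    # Find the segment that contains temp_c and interpolate along it.
    i = bisect_right(temps, temp_c)
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


for temp in (35, 50, 80, 95):
    print(f"{temp} C -> {fan_duty(temp):.1f} % duty")
```

Note that this example curve tops out at 90 % rather than 100 %, in keeping with the point about not pinning the fans at full speed.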

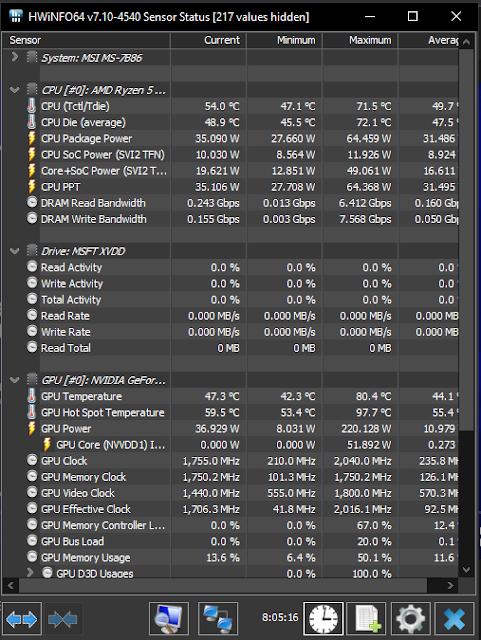

Beyond that, I install MSI Afterburner, RivaTuner Statistics Server (which should come bundled with Afterburner) and HWiNFO for my system monitoring needs. If I were going with an AMD graphics card I could also use AMD's Adrenalin and Ryzen Master to do things within the Microsoft Windows environment... but since I have an R5 5600X, I don't feel I really need to do any optimising on the CPU side of things.

| There's a decent amount of setup required with these programmes to cut out all the chaff but once you do, you have an easy-to-digest readout of your system sensors... |

There are many tutorials regarding undervolting both CPU and GPU. The ones in the links are two of the better ones I've encountered... but I advise you to read around the subject before proceeding to mess around with the power/voltage/frequency settings on your hardware! Especially if you wish to do some of these things in the BIOS as opposed to a Windows compatible programme.

The whole process isn't difficult, but understanding the many nuances is not a simple thing, and you can make a mistake that, while it won't hurt your hardware, might leave your computer in a state you'd rather it wasn't in until you work out which variable you misunderstood.

Anyway, on with the show!

All's fair in love and 'ware...

I'm going to tell you up front that I'm very happy with my experience of undervolting and this is why I would suggest everyone do it to some extent, especially if you're playing singleplayer games on a monitor with a refresh rate of ≤ 120 Hz. Personally, I think that technology has advanced to the point that, at stock settings, longevity is generally no longer an issue. However, as I said above, both noise and heat are concerns that need to be addressed on a case-by-case basis...

My System:

My CPU is pretty efficient and the rest of my system is drawing minimal amounts of power so I worked on undervolting and power limiting my GPU.

Methodology...

I checked several applications* at 1080p native resolution with the maximum available settings in all cases. I could have gone to 1440p since I tend to use super resolution (rendering above native resolution and downscaling - DSR in Nvidia's case) for many titles to achieve better anti-aliased visuals. This isn't a technical benchmark that measures what each component is capable of in terms of absolute performance; it is a benchmark that compares relative performance within given user constraints.

While I appreciate and understand reviewers' penchant for testing at 720p and lower in CPU tests, and various flavours of GPU stress testing, these situations are purely theoretical and have no real impact on an everyday user's experience. At the end of the day, technical reviews (if performed correctly) are mostly scientific and as I said, although I enjoy them, they shouldn't really hold too much weight in the end-user's decision-making process during purchasing because their results will never reflect the reality experienced by users.

*The following applications were used as standardised benchmarks: Unigine Heaven, Unigine Superposition, Metro Exodus: Enhanced Edition, Total War: Troy, Arkham Knight, Assassin's Creed Valhalla. I don't really own any racing games so I didn't benchmark one of those but they don't tend to be reflective of hard-to-run engines or titles...

I will compare the stock results with the results from a 50% power limit combined with an undervolt, performed as described here. I managed to bring the voltage down from 975 mV to a stable 875 mV at a frequency of 1920 MHz, which gave zero stability issues for more than a month.
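For a rough feel of what a 100 mV drop is worth on its own, the usual first-order approximation for switching power is P ≈ C·V²·f. The sketch below applies that approximation to the two voltages quoted above. It is an estimate under that assumption only: it ignores leakage, memory and board power, and the effect of the power limit, and the function name is made up for illustration.

```python
def relative_dynamic_power(v_new_mv, v_old_mv, f_new_mhz=1.0, f_old_mhz=1.0):
    """Ratio of switching power under the approximation P ~ C * V^2 * f.

    Capacitance C cancels out; frequency defaults to 'unchanged'.
    """
    return (v_new_mv / v_old_mv) ** 2 * (f_new_mhz / f_old_mhz)


# 975 mV -> 875 mV at the same 1920 MHz, as quoted in the text.
ratio = relative_dynamic_power(875, 975)
print(f"Estimated switching power vs. stock voltage: {ratio:.2f}x")  # about 0.81x
```

In other words, the undervolt alone would be expected to trim something like a fifth of the core's switching power at the same clock, before the power limit does anything.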

I don't really have much of an idea whether this is a small undervolt or a large one (my gut points me towards the former) but I will say that going down to 850 mV gave me some issues.

| The settings of my power limit, temp limit and undervolt. The faint curve is the factory stock voltage/frequency curve... |

Results of the tests...

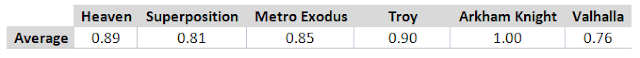

Looking over the results, I achieve a geometric mean of 0.87x compared to stock for a 50% reduction in power usage, 15 C lower temp, 33% lower fan rpm, and a maximum frequency at around 0.82x on my particular RTX 3070. This doesn't appear to be anything particularly special so I do not believe I have a "golden sample" on my hands. However, the big takeaway here is that I'm saving a lot of energy and using the fans a lot less than at stock...
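For anyone wanting to repeat this with their own numbers, here is a small sketch of how a geometric mean of relative results and the resulting performance per watt can be computed. The per-benchmark ratios in `example_ratios` are hypothetical placeholders, not the measured data from these tests; only the 0.87x performance and 0.50x power figures come from the text.

```python
from statistics import geometric_mean

# Hypothetical per-benchmark ratios (tuned fps / stock fps).
# Placeholder values for illustration, NOT the measured results.
example_ratios = [0.90, 0.85, 0.88, 0.84, 0.89, 0.86]
relative_perf = geometric_mean(example_ratios)
print(f"Geometric mean of example ratios: {relative_perf:.2f}x")

# Figures quoted in the text: 0.87x performance at 0.50x power.
quoted_perf = 0.87
quoted_power = 0.50
perf_per_watt_gain = quoted_perf / quoted_power
print(f"Performance per watt vs. stock: {perf_per_watt_gain:.2f}x")  # 1.74x
```

The geometric mean is the natural choice here because the inputs are ratios: a single outlier title can't dominate the way it would with an arithmetic mean of raw fps.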

| Relative performance of 50% power limit and with an undervolt of 875 mV compared to stock settings... on the average of the min, max and mean fps results |

| All individual relative results from the testing... |

| Comparison between the parameters of the card between the two tested settings... |

Power matters...

Additionally, I performed more testing using the Unigine Superposition benchmark, with the undervolt active but power limits scaling to see how the GA104 die scales with power, temperature and voltage.

It's clear to me that, at stock, my RTX 3070 is throttling due to temperature. I wanted to double check the results so I retested the stock settings to see if I was getting good data and the data showed that at around 80 degrees C and above, the GPU begins throttling the core frequency. It doesn't have a huge effect on performance compared to the undervolt (we're talking about 49.7 fps versus 51.0 fps) but the effect is there.
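If you log temperature and core clock during a run (for example, from a sensor logging tool), finding the throttle point can be done with a few lines. This is a minimal sketch under stated assumptions: the samples below are hypothetical, the 2 % drop threshold is an arbitrary choice, and `throttle_onset` is an illustrative helper, not part of any monitoring tool.

```python
def throttle_onset(samples, drop_fraction=0.02):
    """Return the lowest temperature at which the core clock sits more than
    drop_fraction below the peak observed clock, or None if it never does.

    samples: iterable of (temperature_c, core_clock_mhz) pairs.
    """
    samples = list(samples)
    peak = max(clock for _, clock in samples)
    threshold = peak * (1 - drop_fraction)
    throttled = [temp for temp, clock in samples if clock < threshold]
    return min(throttled) if throttled else None


# Hypothetical (temperature in deg C, core clock in MHz) samples, not measured data.
log = [(70, 1935), (75, 1935), (78, 1920), (80, 1890), (82, 1860), (83, 1845)]
print(throttle_onset(log))
```

A small clock dip (like the 1920 MHz sample here) is ignored by the threshold, so ordinary boost jitter doesn't get mistaken for thermal throttling.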

Additionally, at fan speeds above 82%, the fan noise became distractingly problematic. That's at a power limit of 85%, where the card is drawing around 187 W.

| Performance scaling of the GPU core with power under a -100 mV offset, compared to stock settings... |

Now, I didn't keep track of the CPU or system temperatures under this testing but this benchmark does not stress the CPU in any meaningful manner so I doubt the information would be useful.

However, we do observe that at 75% of the power limit (165 W), we're effectively getting the same performance as we do at stock settings (220 W), which is just mind-blowing to me. People love to rail against Ampere and how poor it is at energy efficiency but as I've said from the start, RDNA2 is no angel when it comes to efficiency.

If I compare this specific benchmark to the theoretical performance of the RX 6700 XT (as provided by TechPowerUp), we are talking about the RTX 3070 matching the performance of the RX 6700 XT at around a 55% power limit - which corresponds to 121 W. If you undervolt your GPU a little and power limit it, as I have done, you get the approximate performance of AMD's equivalent.
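The wattages quoted alongside each power limit follow directly from the 220 W stock figure. A tiny sketch of that conversion, assuming the limit is a simple percentage of stock board power (the helper name is made up for illustration):

```python
STOCK_POWER_W = 220  # stock power figure quoted in the text for this RTX 3070


def limit_to_watts(limit_pct, stock_w=STOCK_POWER_W):
    """Convert a power limit in percent of stock into watts."""
    return stock_w * limit_pct / 100


for pct in (50, 55, 75, 85):
    print(f"{pct}% power limit -> {limit_to_watts(pct):.0f} W")
```

This reproduces the 121 W, 165 W and 187 W figures mentioned in this section, and puts the 50% limit used for the main tests at 110 W.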

I think that's really impressive and just goes to show that neither RDNA2 nor Ampere is inherently more or less efficient than the other, because we're talking about and comparing specific products. You can take an RTX 3080 and downclock, undervolt and power limit it until it matches the performance of the RX 6500 XT but if it's sold at €1000 then, obviously, the RX 6500 XT is the better buy...

This is why those discussions about the efficiency of architectures are all nonsense: it depends on the particular SKU and price point target. Any graphics card AIB can push their particular variant well past its efficiency curve and essentially "ruin" the card's performance per watt. Hell, as I said above, the original manufacturers/designers are doing just that... So you, as the consumer, really have very little idea about how efficient or inefficient any particular architecture really is unless you manage to abstract the testing to a level where it no longer reflects reality.

| Stock voltage settings increase power consumption and reduce performance... |

There are a couple more points we can take from the above information. First off, we can see clearly linear power scaling between 50 - 90 % of the power limit of the card. It's only once we get past that point that we see adverse temperature and frequency effects with the applied undervolt.

Of course, I know from my initial testing that lower than 875 mV was not stable at 1920 MHz and so I doubt I would be able to scale further than this by increasing the undervolt in tandem with decreasing the power limit. Just for a final test to set my mind at ease, I set the graphics card to stock settings and reduced the power limit to 90%.

Working with these settings, I achieved the same performance as I could at an 80% power limit with the undervolt... for 21 W more power consumption. Which, funnily enough, shows that performance in this application for Ampere does scale with clock frequency, contrary to my prior findings. It just doesn't scale with GPU resources along with clock frequency, like RDNA2 does.

| At stock settings, with a 90% power limit, we get similar performance to an 80% power limit with a -100 mV undervolt... with 12% more power draw. |
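As a quick sanity check, the 21 W and 12% figures for this comparison line up if the undervolted card draws roughly its full 80% limit of the 220 W stock power. That is an assumption made for this sketch; the measured draw at that setting isn't quoted in the text.

```python
STOCK_POWER_W = 220        # stock power figure quoted in the text
undervolt_limit_pct = 80   # power limit used with the -100 mV undervolt
extra_draw_w = 21          # difference between the two settings quoted in the text

# Assumption: the undervolted card draws its full power limit under load.
undervolt_draw_w = STOCK_POWER_W * undervolt_limit_pct / 100
extra_fraction = extra_draw_w / undervolt_draw_w

print(f"{undervolt_draw_w:.0f} W baseline, {extra_fraction:.0%} extra")  # 176 W baseline, 12% extra
```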

Conclusion...

AMD have pushed their RDNA2 architectures to their limit and, quite frankly, Nvidia is doing the same - and that's okay! That indicates that there is competition in the marketplace from a performance perspective.

This isn't fantastic from an end-user's point of view because the two companies are also pushing the power limits of their particular architectures when they don't actually need to do so in order to achieve increased gen-on-gen performance.

The issue we now have is that neither AMD nor Nvidia wants to give you their top-of-the-line products at lower price points (despite them being perfectly respectable in terms of making a profit per unit). So, in order to keep realising these profits, AMD and Nvidia are in a death spiral of getting their smallest possible silicon to clock as high as possible and push as much power as possible in order to make the most profit possible.

Many analysts and commentators are saying that AMD are doing better in the performance per Watt and die-size areas... but when I look at the actual numbers, I don't see how that's the case. The RTX 3070 is 392 mm^2, consumes 220 W and has approx. 10-15% more performance than the RX 6700 XT at 335 mm^2 and 220 W. And yet Nvidia has 2.28x the FP32 shaders in their architecture, along with silicon that sits "dead" in workloads without ray tracing or upsampling, in the shape of RT and Tensor cores.

That's ~17% extra die area for vastly more resources than RDNA2 has and it was on a less dense process node as well...

Unfortunately, this is all a bit moot because Nvidia's cards have a price premium over AMD's and so the comparison falls a bit flat. However, going back to the reason for this whole post in the first place, I believe I have achieved all of my goals:

- My GPU is very quiet and does not distract me when playing games or using the PC.

- The power consumption of my GPU is significantly reduced and performance in games is only minimally affected for my use case.

| Relative performance vs. Power limit on an undervolted RTX 3070... |

I think, if I really wanted to, or needed to, I could increase the power limit to 60 - 65 % of the stock, keeping this current undervolt. That would get me a nice performance boost of around 10 % whilst still keeping my power use, temps and fan speed all relatively low. Given that I game at 75 Hz at 1080p - 1440p at high to maximum settings, in general, I do not currently foresee any issue with the amount of lost performance from the undervolting and power limiting.

As I said above, I am happy with the results of this exercise and feel confident in encouraging others to do similar things for their own gaming needs.
