So the reviews for the RX 6700 XT are finally online and it's time for me to take a quick look back at my predictions and see how they panned out...

Eh... I was relatively close...

Let's see what I wrote previously:

"Now AMD find themselves in the same situation that Nvidia did and simultaneously in a worse situation that they have put themselves into - they don't have a lot of extra performance they can put into this SKU (around 15-20% at 2.3 GHz and who knows the yields at that frequency!), and they already charged $400 for that card over one year ago and they are facing a $400 RTX 3060 Ti that is more powerful than an RTX 2080 Super.

AMD charged too much for the RX 5700 XT* and as a result, despite adding lackluster ray tracing performance**, they have to go lower. They can't afford not to because the RTX 3060 and 3060 Ti are waiting to kneecap this SKU and the probable RX 6700. Don't get me wrong, around RTX 2080 raster performance for $300 would be great but you're not really getting a very RT capable card for that price - for which an RTX 3060 will most likely be had."

And...

"It's clear that these comparisons to the RTX 2080 Super and the RTX 3070 are not on equal ground - AMD have clearly implemented SAM on the RX 6700 XT to get these numbers and that would be fine, except for the fact that they do not disclose it! [...]

Taking those into account and we're now looking at ~70 fps in AC:Valhalla, ~80 fps in Borderlands 3, 61 fps in Dirt 5, ~80 fps in Gears 5, ~110 fps in Hitman 3 and ~58 fps in WDL... and that's assuming that the only undisclosed performance feature is SAM."

It was later revealed by third parties that the benchmark numbers from the reveal presentation were obtained with SAM enabled, but that should have been stated front and centre, right next to the comparisons. Anyway, how did my estimated numbers hold up?

AC Valhalla: 67.2 fps - Overestimated

Dirt 5: 91 - 96 fps - Doesn't correlate with the results presented by AMD... the results don't make sense

Gears 5: 107.7 fps - Doesn't correlate with the results presented by AMD

Hitman 3: 100.4 fps - Underestimated

On balance, I overestimated the amount of fps lost through SAM, and I couldn't find Dirt 5 or Gears 5 benchmarks in a comparable performance envelope. But this proves my point: benchmarking with SAM gives unpredictable results that vary with resolution, game engine, and so on. Therefore, this feature should not be used for comparisons unless every card in the comparison supports it.

I've seen some reviewers and many commentators saying that cards should be reviewed in a "best case scenario", but that doesn't work: you cannot compare products fairly in that situation because this new feature is not a standard. Just as no one was calling for DLSS to be enabled as standard when comparing against Radeon cards, no one should be calling for SAM to be enabled either.
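The rule above (only enable a feature in a comparison if every card in that comparison supports it) is easy to state mechanically. Here is a minimal Python sketch; the card names and feature sets are simplified labels following this post's framing (SAM on the Radeon side, DLSS on the GeForce side), not a complete list of what each card supports.

```python
def comparable_features(cards: dict[str, set[str]]) -> set[str]:
    """Return the features that every card in the comparison supports.

    Only these should be enabled when benchmarking the cards against
    each other; vendor-specific extras are excluded.
    """
    if not cards:
        return set()
    return set.intersection(*cards.values())


# Simplified feature sets, following this post's framing
cards = {
    "RX 6700 XT": {"SAM", "ray tracing"},
    "RTX 3070": {"DLSS", "ray tracing"},
}
print(sorted(comparable_features(cards)))  # ['ray tracing']
```

Adding a third card only ever shrinks the shared set, which is exactly the point: the more vendors in a comparison, the fewer proprietary features belong in it.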

[Figure: These numbers assume 100% scaling efficiency which, as you will see, is not the case...]

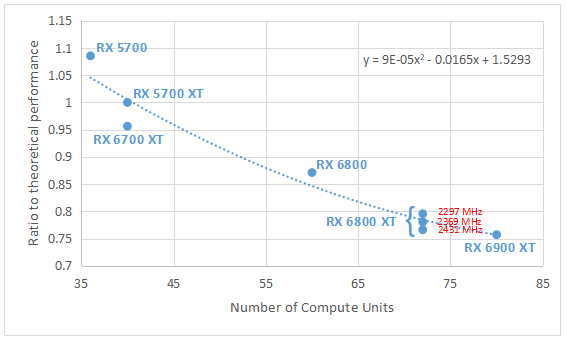

Going back to my calculations, I predicted that 15-20% more performance than an RX 5700 XT at 2.3 GHz would match an RTX 2080. However, two factors confound these results. First, the released RX 6700 XT cards average a 2.55 GHz core clock, which is a bit faster than I was calculating for. Second, there appears to have been a discrepancy in the way I calculated the relative performance of the Turing and RDNA architectures when fewer compute units are in the mix. My calculations were pretty accurate for the RX 6900 XT, 6800 XT and 6800, but the comparison seems to break down between the RX 6700 XT and the RTX 2080.

The comparison with the RX 5700 XT, on the other hand, is spot on for these new releases, and I'll get to that right now.

Actual numbers...

Now that we have actual benchmark results to look at, I can extend my prior analysis, and it turns out that AMD were able to push this card much harder than I thought they could or would... but it's not all good news.

[Figure: The efficiency of the RX 6700 XT is pretty good at the clock speed AMD have pushed the card out with...]

AMD have managed to retain 96% of the RX 5700 XT's per-CU, per-clock efficiency, which is very impressive. However, given the performance-per-Watt improvements reportedly made to the RDNA 2 architecture, getting 1.3x the performance of last generation's card has come at the cost of power draw.

You see, theoretically, at 2500 MHz this part should be getting 1.42x the performance of the 5700 XT, and, as I had predicted, at 2300 MHz it should be getting *this* performance: the performance we currently observe. As the chart above showing the RX 6800 XT variants illustrates, once you start pushing RDNA 2 above 2.3 GHz you may gain a few extra fps, but you've hit the efficiency wall. Power draw begins to climb steeply and thermal output begins to limit the performance of the silicon, which then feeds back into power draw as the efficiency of moving electrons through the silicon decreases, further increasing thermal output.
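The 1.42x and 1.31x figures follow from simple linear scaling: with the same 40 CUs, ideal performance scales with clock speed. Here is a small Python sketch of that model. The RX 5700 XT baseline clock of 1755 MHz (its reference game clock) is my assumption about the baseline, chosen because it reproduces both figures; real cards only approach this ideal.

```python
# Ideal (100% efficient) scaling relative to the RX 5700 XT.
BASELINE_CUS = 40          # RX 5700 XT compute units
BASELINE_CLOCK_MHZ = 1755  # assumption: RX 5700 XT reference game clock


def linear_scaling(cus: int, clock_mhz: float) -> float:
    """Relative performance if throughput scaled perfectly with CUs x clock."""
    return (cus * clock_mhz) / (BASELINE_CUS * BASELINE_CLOCK_MHZ)


for clock in (2300, 2500):
    print(f"40 CU @ {clock} MHz: {linear_scaling(40, clock):.2f}x")
```

This prints 1.31x for 2300 MHz and 1.42x for 2500 MHz. Anything a real card delivers below these numbers is lost to imperfect scaling.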

[Figure: Performance (averaged fps over the tested games) relative to the RX 5700 XT against the power draw required to achieve it, with the RTX 30 series cards included for reference. (Raw numbers from TechPowerUp)]

We can see that for a 2-8% increase in power requirements, AMD are getting 1.3x the performance, which is quite a far cry from the 1.5x performance-per-Watt improvement they've been talking about. Of course, AMD did not state at which frequency this improvement was achieved or whether compute resources were identical. It is interesting to note that the RX 6800 roughly meets this criterion, with 1.55x the performance for a 6% increase in power draw... that really is the standout card in AMD's lineup, IMO.
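Performance per Watt is just the performance ratio divided by the power ratio, so the numbers in the paragraph above can be checked directly. A quick Python sketch using only the ratios quoted there (all relative to the RX 5700 XT):

```python
def perf_per_watt_gain(perf_ratio: float, power_ratio: float) -> float:
    """Change in performance per Watt relative to the baseline card."""
    return perf_ratio / power_ratio


# (performance ratio, power ratio) vs the RX 5700 XT, as quoted in the text
cases = {
    "RX 6700 XT (+2% power)": (1.30, 1.02),
    "RX 6700 XT (+8% power)": (1.30, 1.08),
    "RX 6800 (+6% power)": (1.55, 1.06),
}
for name, (perf, power) in cases.items():
    print(f"{name}: {perf_per_watt_gain(perf, power):.2f}x perf/W")
```

That puts the RX 6700 XT at roughly 1.20-1.27x performance per Watt, while the RX 6800 lands at about 1.46x, close to the 1.5x figure.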

Scott Herkelman mentioned that they're expecting partner cards with a game clock of almost 3.0 GHz, but I can't imagine the power draw on those cards, or the price of the cooling solution required to keep the temperature in check!
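To get a feel for why near-3.0 GHz cards worry me, a common first-order approximation is that dynamic power scales with C·V²·f. If we additionally assume (a big simplification) that the required voltage rises in proportion to the clock, power grows roughly with the cube of frequency. This Python sketch applies that assumption to the 2.55 GHz average seen in reviews; it ignores leakage, which rises with temperature, so real figures would likely be worse.

```python
def relative_dynamic_power(f_new_ghz: float, f_old_ghz: float) -> float:
    """Dynamic power ratio under P ~ C * V^2 * f.

    Assumption: capacitance is unchanged and voltage scales linearly
    with frequency, so power scales with the cube of the clock ratio.
    Leakage (static) power is ignored.
    """
    ratio = f_new_ghz / f_old_ghz
    voltage_ratio = ratio  # assumed: V proportional to f
    return voltage_ratio ** 2 * ratio


print(f"{relative_dynamic_power(3.0, 2.55):.2f}x")  # 1.63x
```

Under these assumptions, roughly 18% more clock speed costs over 60% more dynamic power, before the cooling needed to remove that heat is even considered.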

What I have actually seen is a bunch of partner cards with practically zero factory overclock (maybe 40 MHz at most?), which, understandably, doesn't result in any meaningful performance improvement (1-2 fps, which is probably within the margin of error for most game benchmarks).

[Figure: Extrapolating the clock speed to 2.9 - 3.0 GHz shows that we should expect efficiency to drop to at least 0.86 - 0.88 of that observed... giving around RX 6800-level performance and efficiency.]

What is slightly concerning, though perhaps more of a theoretical curiosity than anything else, are the power spikes that TechPowerUp observed during the non-gaming FurMark stress test. I don't know whether any gaming scenario could momentarily put these sorts of stresses on the cards, but it's clear that there is the potential for power spikes much higher than one might normally expect for a card in this position in the stack. We all heard the tales of RTX 3080 and RTX 3090 cards spiking super high and causing crashes, and I think these cards could have the potential to do the same, given that they're more likely to be paired with lower-end power supplies...

Conclusion...

It's at least edifying to see other outlets coming to the same conclusions that I reached months ago: RDNA 2 has no IPC improvement over RDNA 1, and AMD's only option was to go with higher clock speeds and manage the thermals better. What's disappointing is that people still aren't making the connection that AMD could have had the performance crown last generation (at the cost of power) if they had released a 72 CU version of RDNA 1.

They're also not seeing the writing on the wall for this implementation of RDNA - AMD can't usefully push the clocks any further on this process node, and they can't usefully reduce power consumption any more than they already have with the Infinity Cache and narrower bus widths. There is also no real power/performance benefit in scaling out to ever larger numbers of CUs on a monolithic design (the performance penalty and the difficulty of feeding them with data will nullify a significant portion of any theoretical gains).

However, AMD do have a couple of options on the table here:

- Multi-die (chiplet) SKUs via their xGMI/Infinity Fabric link architecture

- Drop their SKUs down a level or two in their stack

The first option has been mooted many times over the last year as a core feature of RDNA 3 - double up on compute units by doubling the dies on the GPU - essentially a new form of CrossFire. However, this isn't without its issues, especially in terms of scheduling and driver support.

The second option is something they can easily do, given how profitable their small monolithic dies are to produce and how high their yields are. AMD have been stuck at 36/40 CUs in the mid-range for a long time, so maybe it's time for Radeon's Zen moment: bring more compute resources to each product in the stack.

[Figure: RDNA 1 had 5 DCUs per Shader Array; RDNA 2 switched that up to 10 but reduced the backend/frontend resources that supported them...]

RDNA 2 improved upon the architectural efficiency of RDNA 1 by reducing the supporting infrastructure relative to a larger number of Workgroup Processors (WGPs), which saved die area that could then be spent on the Infinity Cache (which takes up a decent chunk of the die). RDNA 3 could increase the number of WGPs per Shader Array once more, bringing more compute units to each tier of graphics card in AMD's stack. An RX 7700 XT with 60 CUs (or a cut-down 54-56 CU part for yield purposes...) would effectively let AMD shift performance up by 25 - 30% within the same tier without having to increase clock speeds or re-engineer their entire architecture (or their drivers, for an MCM design).
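The tiering idea can be made concrete by counting CUs from the shader-array layout, since each RDNA WGP groups two CUs. The Python sketch below is hypothetical: the 2-array layouts and the 15-WGP configuration are illustrative guesses, not announced RDNA 3 specifications, and the uplift assumes perfectly linear scaling with CU count at the same clock.

```python
CUS_PER_WGP = 2  # an RDNA workgroup processor (WGP) pairs two compute units


def cu_count(shader_arrays: int, wgps_per_array: int) -> int:
    """Total compute units for a given (hypothetical) layout."""
    return shader_arrays * wgps_per_array * CUS_PER_WGP


def linear_uplift(new_cus: int, old_cus: int) -> float:
    """Ideal same-clock speedup if performance scaled 1:1 with CU count."""
    return new_cus / old_cus


current = cu_count(shader_arrays=2, wgps_per_array=10)   # 40 CUs
proposed = cu_count(shader_arrays=2, wgps_per_array=15)  # 60 CUs (hypothetical)
for cus in (54, 56, proposed):
    print(f"{cus} CU vs {current} CU: {linear_uplift(cus, current):.2f}x (ideal)")
```

The 25 - 30% estimate sits below these linear figures, which is consistent with the earlier point about how hard it is to keep more CUs fed with data.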

Meanwhile, it's impressive how performant the RTX 30 series is considering the inferior process node it is built on. The RTX 3070 and 3060 Ti (both using the same die, with the lower SKU cut down) show impressive performance per Watt compared to the RX 6700 XT: the 3070 gets an extra 8% performance over the 6700 XT in the same power envelope (with no hint of the 100 W spike potential), and it can easily be undervolted/power-limited while boosting the memory speed to further improve the card's efficiency.*

*For the record, I believe that the 6700 XT will also be more efficient with a slight underclock/power limit, but I'm not certain how boosting the memory frequency will affect performance given the presence of the Infinity Cache... I'm looking forward to reviews that explore that aspect!

All in all, this has been a successful launch from AMD (IMO), though somewhat marred by the price and by the misrepresentation of which Nvidia card it is supposed to be competing against. I've seen multiple outlets stating that it's a 3070 competitor when, averaging out the games, it sits firmly between the 3070 and 3060 Ti with SAM enabled, while still losing in ray tracing and DLSS tasks... Without SAM enabled, it draws closer to the 3060 Ti in more games than not.
