
AMD have recently released the much-awaited 3D-stacked processor, the Ryzen 7 5800X3D, and the opinions from various commentators and pundits have been flying left and right: "It beats Intel on efficiency and price/performance."; "Is it worth it? It's hard to recommend."; "No overclocking is bad!"; "It's the best!"; "It's not the best"; "It's a dead-end platform..."

Everything that has been said makes for a useful point of discussion, except for that last one. And I'm here to tell you why.

The end of the road...

The concept of buying into a "dead-end platform" didn't really exist in the middle ages* of PC gaming (i.e. 2010 to 2019). Prior to that, the previous year's products were dead-end platforms in many cases, with games often failing to run at playable framerates on them because available computing power was advancing so rapidly.

This was especially applicable to GPUs. From my own experience, I had four PCs in the period of 2000 to 2009 but purchased at least five GPUs for those PCs in the same time frame.

Many people have been mentioning that the stagnation over the 2010-2020 period was not typical, and it certainly wasn't. I had a single PC and two graphics cards last me the entire period and really only felt the lack of resources in 2017-2019, when games* using newer instruction sets were being released with increasing regularity.

*That I wanted to play...

So, in the context framed by those observations, it's strange to see that the discussion about dead-end platforms is really a conversation about being unable to upgrade components or lacking newer features, as opposed to the classical meaning: getting a system that was essentially out of date and lacking in performance or compatibility.

The future is now...

Given that summary (that architectures and systems are remaining relevant for longer), I thought that the whole discussion about "dead-end" platforms would be a moot point entirely. Buying a Ryzen 5000 CPU with a B550/X570 motherboard isn't a risk or a negative - the platform is relevant now and will continue to be for the medium term.

What sort of argument can be made that an average consumer who is buying "workload appropriate"* hardware is making a wrong decision?

*i.e. Appropriate for their use case (don't buy an R9 for gaming, etc)

Yes, we can get into the nitty-gritty about whether the 12100/12400 and associated platforms are better buys than the 5500/5600, but they're essentially equivalent in terms of what they offer to a consumer who is looking to spend at these specific price points. Higher-end system choices are less clear - perhaps more motivated by application-specific performance.

That is, any consumer looking at the low end isn't likely to be pairing high-end components with such a platform and thus will not see any benefit or penalty from sub-optimal pairings.

Worse still, we're not really expecting huge increases in gaming performance from either the Intel 13th gen or AMD 6000/7000 series releases. A Ryzen 7 5800X3D is likely to remain competitive for gaming over the next 4 years, as is a 12600K, for example, and anyone gaming at any resolution with higher graphical settings and/or at 120 fps or lower will not see a material benefit from faster chips, even on a 12400/5600 platform.

Once again, we find ourselves in a period where CPUs are not taxed compared to GPU capability and, really, that's also a discussion that needs to be addressed...

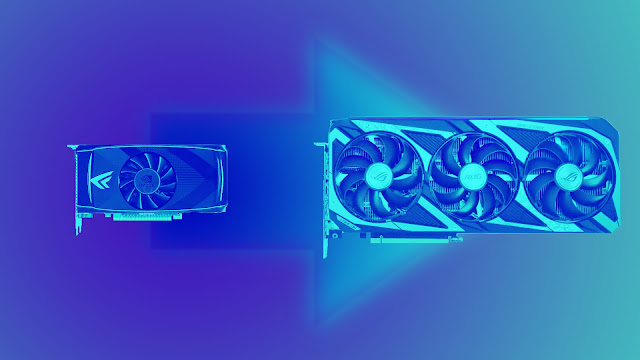

We've come a long way in the last decade but advancements are slowing down as prices are increasing...

GPUs used vs. new...

Even now, there are many advocates suggesting buying graphics cards from 2016 or older in an effort to avoid paying more for modern GPUs (which, I agree, are overpriced). The problem here is that many consumers do not have the skill or knowledge to assess and/or clean/refurbish cards that have been running for the better part of 6 years.

At no point in computing history would we have been sitting down and advocating getting such old hardware, with the exception of buying retro hardware for a retro PC build. And that's the thing, people buying a retro build know what they are getting into (or at least should do).

With all the cryptomining going on over the last half a decade, GPUs have had more chance to be run hot, for long hours and in suboptimal conditions, on top of the heavy task of gaming itself.

Who is going to disassemble each GPU and check if the thermal paste/pads are good or not? Or if the temps are okay? Who is going to notice if the GPU that was purchased has the correct die or has a mining BIOS attached? Who is going to provide support for these now ancient cards when they might begin to die over the next year or two?

Surely, we should now expect a lot of these cards to start dying through natural attrition due to normal wear and tear over the coming months and years. This is not like tech from the mid-2000s that ran for a few years and then was replaced by a newer card - the majority of these cards have been running constantly for the entirety of their lifespan in gaming systems, if not, at least in part, in mining rigs.

Quite frankly, I wouldn't recommend that anyone newish to the hobby spend up to a couple of hundred euros on six-year-old tech... nor would I recommend it to people who can't afford a mistake. Yes, sometimes marketplaces like eBay will help you out - but that's not a given, and it's not a given that such a site has a presence in your country or that a failure will happen within a return period.

Yes, people who are more veteran in the PC space will be fine, but they don't need advice from the likes of me or any techtuber...

Really and truly, buying such old hardware is only being considered for today's mainstream users because Nvidia and AMD have stretched out their release cadence at the lower tiers AND reduced the performance gains per generation. This means that, at the lower end of the spectrum, you're not really getting much more performance than a GTX 1060 or GTX 1070 six years later for similar pricing.

What you ARE getting is newer hardware that's potentially under warranty and has been used far less, or has been treated badly for a shorter period... and is statistically more likely to work for more years into the future in any case, regardless of those prior points.

We've seen the compression of card performance per gen over the period 2010 to 2022: Slower releases, higher-end performance per tier...

The end result...

I know this post is coming a little late - it's existed in some form for more than two months and I just haven't had the time or energy to get to it.

It's clear to me that discussing today's platform choices in terms of whether they are dead-ends or not is neither productive nor reflective of the reality of platform choice at this time - especially at the low to mid ranges. At the high end, it might have an impact, though I'm struggling to imagine how...

If you have a very demanding application, you are most likely to just go the whole hog and upgrade the system in a couple of years. So, worries about PCIe gen 4/5 and DDR5 compatibility are very unlikely to have a real-world impact on a gaming system that isn't running at an unrealistically low resolution in order to test a CPU architecture or system bottleneck.

This logic can be extended to GPUs as well... minimum performance requirements of games have really stagnated (though recommended requirements are still steadily increasing each year). However, this metric pertains to performance numbers, not to the age of the GPU in question. If I had the choice, I'd buy newer, which means don't consider the GTX 10 series or RX 400 series: get the GTX 16 / RTX 20 / 30 series, or the RX 5000 / 6000 series at a similar performance point. Keep price in mind, of course, and try not to overpay, but buying "old" now, when options are opening up for newer cards and prices are falling every month? I cannot get behind that message...

In summary, every "platform choice" is good... very little on the CPU/mobo/RAM side of the equation will really mean anything to you or the general gaming consumer. The GPU still remains an issue but, hopefully, with the mining crash, oversupply, and next gen parts all coming together, that issue might abate a little further...
