Last year, I asked Scott Herkelman over on PC World's The Full Nerd show if it even made sense to scale RDNA 2 down to the bottom of the stack.

On Wednesday, AMD released the RX 6500 XT to almost universal criticism...

I asked the question because I had done the work, looking at the scaling of the RDNA and RDNA 2 architectures (and continue to do so!). It was obvious that they scale very linearly with clock frequency and the number of compute units (CUs), which raised the question in my mind of whether it even made sense to spend the silicon and engineering effort on creating dies for lower-tier products.
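To make the "scales linearly" idea concrete, here is a minimal sketch of that first-order model, where throughput is simply proportional to CU count multiplied by clock frequency. The function names and the two example configurations are illustrative assumptions, not measured data or official specifications, and the model deliberately ignores memory bandwidth, cache size and power limits.

```python
def relative_throughput(cus: int, clock_mhz: float) -> float:
    """First-order estimate: throughput is proportional to CU count x clock."""
    return cus * clock_mhz


def scaling_ratio(cus_a: int, clock_a: float, cus_b: int, clock_b: float) -> float:
    """Estimated throughput of card A as a fraction of card B."""
    return relative_throughput(cus_a, clock_a) / relative_throughput(cus_b, clock_b)


# Hypothetical configurations, chosen only to illustrate the arithmetic.
small_card = (16, 2600.0)  # (CUs, clock in MHz)
large_card = (32, 2400.0)

ratio = scaling_ratio(*small_card, *large_card)
print(f"Small card is estimated at {ratio:.2f}x the large card")
```

Under this model, halving the CU count can only be partly clawed back with clock speed: even with a 200 MHz advantage, the hypothetical small card lands at roughly half the throughput of the larger one.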

Instead, I figured that it would have been better from a business and supply standpoint to continue producing cards like the RX 570/580 as the low-end option, coming from a different, cheaper, older production node - reserving the expensive silicon produced on the more advanced nodes for the more modern and expensive products.

Duoae:

"Does it make sense to scale RDNA 2 down past 6700 level? In the past AMD said it would go across the whole product stack eventually but for the low-end cards, does it make sense?"

Scott:

"Can't comment on unannounced products. You can expect more products in this RDNA 2 family set, soon. Our goal is to bring it from the top, all the way down, to as low as we can."

So, effectively, I perhaps didn't ask the question as clearly as possible (we are limited by the number of characters in YouTube comments and SuperChats, after all) but Scott definitely hedged his response... and I don't blame him. Nobody wants to answer that type of question! So, maybe I was a little naive for even asking in the first place. (I'm not a journalist, after all!)

The frequency of the RX 6500 XT is pushed so hard, its efficiency is very low. It's operating way outside of the optimal range for the RDNA 2 architecture...

However, I think my concerns have been validated with the majority response from the tech press to the release of the RX 6500 XT - a card that has been almost universally panned.

While much has been made of the lack of encoding hardware, I think that the real issue is the performance to price ratio. We're looking at a card that is priced like an RX 5600 XT while performing somewhere between an RX 470 and an RX 480.

But we'll get onto pricing later.

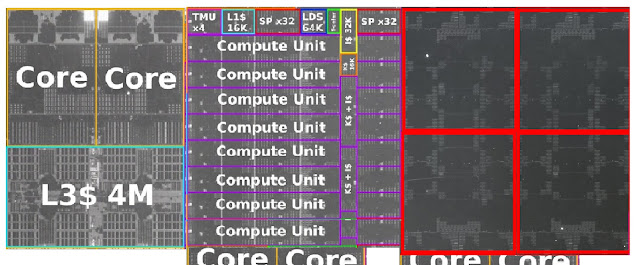

Looking back at performance, yes, an RDNA CU* has around 1.5x (Digital Foundry) the performance of a GCN CU, which means that AMD can use fewer of them to obtain the same performance as their older products. Of course, this increase in performance isn't free - it comes at the cost of die space, as two GCN CUs take up less space than a single RDNA 2 dual compute unit (DCU).**

At high quantities of compute units, this is fine - RDNA/2 really push ahead. At lower numbers of compute units, though... the maths becomes a bit murky, especially because of the RDNA architecture's reliance on pairing up those compute units; you don't get odd numbers of compute units... which means that you need to design a product with a minimum of 2 extra CUs to disable for good yields. Vega/GCN have no such limitation.
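The pairing penalty is easy to put numbers on. The sketch below assumes, purely for illustration, that a design reserves exactly one spare block of CUs for yield, where a block is 2 CUs when they are paired and 1 CU when they are not. The function names and that one-spare-block policy are my assumptions, not AMD's actual design rules.

```python
def physical_cus_needed(target_cus: int, granularity: int, spare_blocks: int = 1) -> int:
    """CUs that must physically exist on the die to ship `target_cus` enabled
    while keeping `spare_blocks` blocks of `granularity` CUs free to disable."""
    if target_cus % granularity != 0:
        raise ValueError("target CU count must be a multiple of the granularity")
    return target_cus + spare_blocks * granularity


def spare_overhead(target_cus: int, granularity: int, spare_blocks: int = 1) -> float:
    """Spare CUs as a fraction of the enabled CUs."""
    physical = physical_cus_needed(target_cus, granularity, spare_blocks)
    return (physical - target_cus) / target_cus


for target in (8, 32):
    paired = spare_overhead(target, granularity=2)    # CUs come in pairs
    unpaired = spare_overhead(target, granularity=1)  # CUs can be cut one at a time
    print(f"{target} CUs: paired overhead {paired:.1%}, unpaired overhead {unpaired:.1%}")
```

With these assumptions the spare silicon is a small fraction of a 32 CU part but a quarter of an 8 CU part when CUs can only be disabled in pairs, which is why the maths gets murkier at the bottom of the stack.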

So, if we're talking about die area, AMD's not much better off than with their older architecture when using lower numbers of CUs in a product. That's why I've not been that bullish on the inclusion of RDNA 2 in any APU formats: you can fit fewer graphics cores in the same die area, which means that, yes, despite running at higher clock frequencies, the performance uplift for the product is not that great in comparison to prior products.

*And given the lack of increase in IPC (instructions per clock) between RDNA and RDNA 2, we're seeing the same for RDNA 2, minus decreases in efficiency for working above the ideal frequency of the architecture or across "wider" cards with increased CU counts.

**Although never replicated on the same production node, GCN has a lot of die shape/size similarities to Vega, which was produced on the 7 nm process node at TSMC.

As per my calculations working from the Vega APUs using a Zen 2 core complex as a reference, 9 Vega CUs occupy 91% the area of 8 RDNA/2 CUs...

This can be seen (or will be seen) with the RDNA 2 APUs that will release soonish, so I look forward to seeing those comparisons, especially between an 11 CU Vega part and a 6-8 CU RDNA 2 part.
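Here is a rough worked version of that area argument, using only the two figures quoted in this post: the ~1.5x per-CU performance of RDNA over GCN, and my estimate that 9 Vega CUs occupy 91% of the area of 8 RDNA/2 CUs. The variable and function names are mine, and the comparison assumes equal clocks and treats a Vega CU as a GCN CU, so take it as a sketch rather than a prediction.

```python
RDNA_PER_CU_SPEEDUP = 1.5  # RDNA CU vs GCN CU, per the Digital Foundry figure above
VEGA_CUS = 9
RDNA_CUS = 8
AREA_FRACTION = 0.91       # area of 9 Vega CUs relative to 8 RDNA/2 CUs (my estimate)

# Area of one Vega CU, expressed in units of one RDNA CU.
vega_cu_area = AREA_FRACTION * RDNA_CUS / VEGA_CUS

# Performance per unit of die area for RDNA, relative to Vega (Vega = 1.0).
rdna_perf_per_area = RDNA_PER_CU_SPEEDUP * vega_cu_area


def vega_equivalent_cus(rdna_cus: int) -> float:
    """Number of Vega CUs that would match `rdna_cus` RDNA CUs at equal clocks."""
    return rdna_cus * RDNA_PER_CU_SPEEDUP


print(f"One Vega CU is about {vega_cu_area:.2f}x the area of one RDNA CU")
print(f"RDNA performance per area is about {rdna_perf_per_area:.2f}x that of Vega")
for cus in (6, 8):
    print(f"{cus} RDNA 2 CUs ~ {vega_equivalent_cus(cus):.1f} Vega CUs (vs an 11 CU Vega part)")
```

On these numbers, the per-area advantage of RDNA shrinks to roughly 1.2x, and a 6-8 CU RDNA 2 part sits at roughly 9-12 Vega-equivalent CUs, so any real uplift over an 11 CU Vega part would have to come mostly from clock speed.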

What RDNA 2 does have in abundance over GCN is power savings and workable clock speed range.

You'll notice that the RX 6500 XT has one of the highest guaranteed clock frequencies of any GPU on the market. You'll also note the relative lack of power savings compared to its larger siblings*. Well, that ~200 MHz increase is likely down to the move from the 7 nm process node to the 6 nm node, which much reduces the extra power needed to reach that frequency... but it still places the card in the range needing additional power plugs and... it also spikes to a whopping 167 W!!

I have brought this up before, but it seems the wider press haven't latched onto it like they did with the GeForce RTX 30 series cards' power spiking behaviour: higher clocked RDNA 2 cards can spike REALLY high.

AMD had to prioritise the frequency over power savings in order to make this card have any sort of performance that was worth having that wouldn't be laughed at... and yet that still happened!

I do feel for the position AMD are in but, much like the tarot card, The Hanged Man, they are in a position they have put themselves in.

The company would have known the relative paucity of performance at lower numbers of CU and, in fact, the evidence appears to be that they never intended to release this chip as a standalone GPU product but saw the opportunity to corner a segment of the market that was not being addressed with a product that would still net them a profit.

Here's where I add my own speculation onto things - Why repurpose a product destined for use in a thin and light laptop as a standalone desktop GPU?

I'm left wondering if some plans AMD had for their combined CPU/GPU laptop products being sold to laptop makers just weren't picked up. I.e. AMD are and have been trying to break Intel and Nvidia's stranglehold on the laptop market for the last couple of years but they're really not appearing to make much of a dent in it.

What if AMD had designed this die for that purpose but laptop makers just weren't interested in such a product because prior products either weren't available in enough quantity or didn't sell through to consumers..?

Anyway, enough speculation.

Getting back to the cost of the product. Moore's Law Is Dead (MLID) had a very interesting breakdown of the costs that he thinks go into this product. However, I really do wonder about the information that MLID has been given...

First off, shipping is not 6x the cost of pre-pandemic levels. I work in an industry that ships items from India and China; we're talking tons of product which, yes, is more dense than packaged graphics cards but you'd think we'd have an inkling as to what is happening RE: shipping costs. From my interactions with our supply chain, we're looking at large increases if we need to do expedited shipping but normal shipping isn't anywhere near 6x the pre-pandemic cost. Yes, we have a lot of delays and holdups... but those are not costing us more in terms of shipping cost or material cost.

The guys over on the Techonomics podcast came to the same conclusion that I did. Shipping costs are up but there are ways you can ameliorate that! Reduce the size of the packaging, for instance. Though, the fact that AIBs are creating RX 6500 XTs with a triple fan, dual slot cooler, when the product certainly doesn't require one, means that they won't be reaping the rewards of reduced packaging size... but wait, wouldn't that be fiscally irresponsible on the part of AIBs? Wouldn't they be losing a TONNE of money by doing that with these 6x shipping cost increases?

The obvious, logical answer is "no", since shipping costs are nowhere near 6x.

That AIBs can afford to push out a $300-ish product with a triple fan, dual slot cooler means that they're just raking in profit, not giving some sort of unselfish bonus to gamers...

Look, I'm sympathetic to the argument surrounding inflation, increased shipping costs and component costs. I've made those same arguments in the past! Those are all factors that should be accounted for to some extent. However, the percentage increases we're seeing for graphics cards do not reflect the rest of the market.

No other component in a PC has increased in price by more than 10%.

That means, motherboards, CPUs, RAM (excluding DDR5 because of rarity), power supplies, SSDs/HDDs and cases do not cost 40+% more than their list price. In fact, many are cheaper. That points to supply and demand being the primary cause of increased pricing (as we're seeing with DDR5).

And I should note that those components cover all the arguments that we can apply to graphics cards. Increased component costs? Memory, storage and motherboards use those same components and PCBs. Increased wafer costs? CPUs use more and larger (total) die area than cards like the 6500 XT and also rely on more complicated substrates to produce. PC cases have a veritable lack of density per product (worse than GPUs, I'd argue) and they need to be shipped in the same manner... but aren't increasing in price in any appreciable manner.

That means the three arguments that actually account for the observed increase in price are:

- A lack of supply compared to demand

- The ability to use the product to create money (Cryptocurrency)

- Price gouging from the manufacturers and AIBs

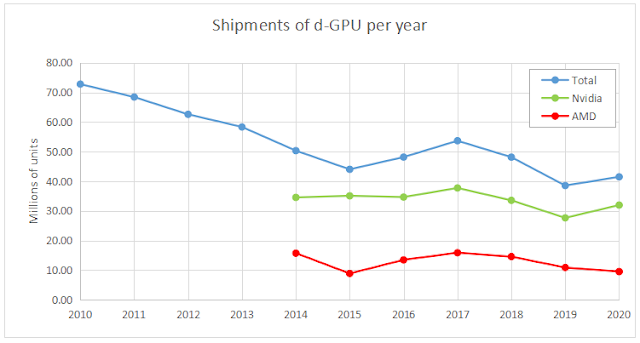

I've covered the lack of supply before... and I also gave a date by when I expect that supply will catch up with demand (2023), leading to a land of plenty, if not affordable, GPUs...

The world of cryptocurrency is out of my remit. I have no real financial savvy, unfortunately, as I would otherwise turn my prognosticating power towards evil, erhm, I mean, my own financial gain. But, joking aside, I have no real experience with trying to predict monetary market fluctuations and/or trends. I have delved into it a little but I've discovered that I am preposterously underprepared and lacking in experience! So I won't gurgitate any of my stupidity on this blog...

I've also covered what we can do to fix what I consider to be the scourge of the AIBs on consumers - pairing over-engineered coolers with lower-tier cards, etc.... AIBs really are the worst enemy of both consumers and the manufacturers... but it seems like I'm one of the few people actually calling this behaviour out.

Intel's Arc seems to be the best, last hope for good pricing and availability for consumers in 2022...

Conclusion...

Ultimately, AMD made the decision years ago to drop manufacturing of the GCN architecture cards at cheaper, less advanced process nodes in favour of having an all-in position on advanced nodes. To be fair, thus far we have not seen this sort of segmentation based on process node within the graphics card industry. However, it is my speculation that this is precisely what we'll see going forward.

With spiralling costs due to increased material, production and shipping values, GPU manufacturers will likely have to split their generational improvements between different architectures and/or manufacturing nodes in order for them to make sense, both financially and in terms of performance.

This generation's RX 6500 XT makes no sense on either front but next generation's RX 7500 XT could be based on a higher-CU count die, with lower production costs on an older node (e.g. 6 or 7 nm) compared with the state of the art 5 nm production node RX 7900 XT.

What is interesting is that we've seen this behaviour before whereby AMD and/or Nvidia have basically kept the same GPUs and rebadged them as next generation parts with slight optimisations. However, I believe that we'll mostly be seeing increased per SKU capability (i.e. increased CU) as opposed to increased frequency as we've already observed that RDNA architectures are not benefitting from hugely increased frequencies - despite being able to achieve them through overclocking.

As for the RX 6500 XT, it is a card born of desperation to maximise profits and regain market share as much as it is a vehicle to satiate desperation for gaming hardware...
