Last time, I looked at the potential performance of the RX 6000 series cards before we knew any firm numbers. Then I went on to briefly look at the confirmation of those suppositions after we got actual benchmark numbers and found the RX 6000 series to be almost linearly scaling at a set frequency with additional DCUs - which is really impressive! This time, I want to look at the place the RX 6700 and 6700 XT have in the market and how that lines up with what people were expecting from them coming into this new generation.

This post was inspired by Tom over at Moore's Law is Dead and his video covering the potential performance and price of the RX 6700 XT compared to the RTX 3060 Ti.

Performance uplifts...

Back in my original article, I claimed that there really isn't any performance-per-clock uplift per compute unit between RDNA 1 and RDNA 2, reasoning that the expected improvement in achievable frequencies (from various leaks over the last year) would account for most of the performance uplift we expected. Looking back, I was pretty close to performance at release, with an estimated average operating frequency for the RX 6900 XT of around 1975 MHz versus an official game clock of 2015 MHz.

I made this claim based, in part, on the information released in the Xbox Series X Hot Chips presentation slides, which showed that the compute unit layout of the GPU pipeline was not significantly changed from that featured in RDNA 1. Further to this, I took a look at the Xbox Series X die shot and compared the size, shape and layout of the dual compute units (DCUs) to those included in Navi 10 (RX 5700 XT) from a helpfully annotated die shot.

You can see the same structural design for the CUs, with a different design for the raster/primitive units which appear to be merged more with those green-highlighted CUs...

From this information, it seems to me that there are not significant differences to the compute units between the two architectures and, unless there's some other sub-surface structure to the RT portion of the compute units, it actually confuses me that there was no RT functionality enabled on RDNA 1. I know the rumours are that RT wasn't ready for RDNA 1 and that the architecture was a half-way solution but I wonder if that was nothing to do with the compute units themselves and more to do with the supporting hardware such as the changes to the primitive units and the infinity cache system which allows for faster data transfer to/from the CUs - which appears to be important for RT performance.

The other piece of information that backed up my supposition, post release, is that benchmark performance in rasterised gaming (i.e. not ray tracing) shows an almost linear relationship with CU increase (when clock frequency is normalised) when I look at the percentage ratio between the various cards, with the RX 5700 XT as the reference performance.

It's clear that past the 2.2 GHz clock speed, real world performance increases are hard to come by, though I'm sure you could shift those synthetic benchmark results up by a few hundred points...

Please take note that the performance of the RX 5700 had to be extrapolated from multiple sources as most reviewers are not bothering to update their RX 5700 benchmark results. It'd be really nice if someone with access to a card could do a comparison between the 5700 and 6800 or 6800 XT...

What we can see from this data is that the RX 5700 and RX 6800 both perform better than theory. This is probably because each card differs from its bigger sibling mainly in the number of disabled compute units and not in the rest of the supporting infrastructure. The 5700 still has the same L2 cache and memory bandwidth as the 5700 XT, and a higher ROP/CU ratio (1.78), whilst the 6800 has the same L2 and L3 caches and memory bandwidth as the 6800 XT, and a slightly lower ROP/CU ratio (1.6).
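The ROP/CU ratios quoted above can be reproduced with a few lines of arithmetic. This is only a minimal sketch: the CU and ROP counts are the publicly listed specifications for each card, and the dictionary layout and function name are my own.

```python
# Publicly listed compute unit (CU) and ROP counts for the cards discussed.
CARDS = {
    "RX 5700": {"cus": 36, "rops": 64},
    "RX 5700 XT": {"cus": 40, "rops": 64},
    "RX 6800": {"cus": 60, "rops": 96},
    "RX 6800 XT": {"cus": 72, "rops": 128},
}


def rop_per_cu(card: str) -> float:
    """Return the number of ROPs available per compute unit."""
    spec = CARDS[card]
    return spec["rops"] / spec["cus"]


for name in CARDS:
    print(f"{name}: {rop_per_cu(name):.2f} ROPs per CU")
```

The cut-down RX 5700 keeps all 64 ROPs for fewer CUs, which is where the 1.78 figure comes from, whereas the RX 6800 loses ROPs along with its CUs and lands on 1.6.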

At any rate, comparing the performance of the RX 5700, RX 6800 and RX 6800 XT with the RX 5700 XT shows us that we have an almost linear performance with increasing CU counts - which is really impressive!

The scaling of performance per CU for normalised frequency and CU perf to RX 5700 XT levels is pretty much linear...
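To make "theoretical" performance concrete, here is a rough sketch of the scaling model I have in mind: performance proportional to CU count multiplied by clock frequency, expressed relative to the RX 5700 XT. The function name is hypothetical, and using the RX 5700 XT's official 1755 MHz game clock as the reference clock is an assumption for illustration, not necessarily the clock behind the chart.

```python
REF_CUS = 40           # RX 5700 XT compute units
REF_CLOCK_MHZ = 1755   # RX 5700 XT official game clock (assumed reference)


def theoretical_scaling(cus, clock_mhz,
                        ref_cus=REF_CUS, ref_clock_mhz=REF_CLOCK_MHZ):
    """Expected performance relative to the reference card, assuming
    performance scales linearly with both CU count and clock."""
    return (cus * clock_mhz) / (ref_cus * ref_clock_mhz)


# With the clock normalised to the reference, only the CU count matters.
for name, cus in [("RX 5700", 36), ("RX 6800", 60), ("RX 6800 XT", 72)]:
    scale = theoretical_scaling(cus, REF_CLOCK_MHZ)
    print(f"{name}: {scale:.2f}x the RX 5700 XT at the same clock")
```

If the measured results, once normalised for clock, sit close to these multipliers then the architecture is scaling almost linearly with CU count.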

The real question I have is why are AMD not pushing their cards harder?

It's clear from various overclocking videos online and reviews that the RX 6800 and RX 6800 XT are both able to reach and surpass their guaranteed game clock, with some reports that the cards consistently hold at around or above their boost frequency of 2250 MHz. Partner cards are even better. However, there appears to be a huge drop-off in terms of performance per MHz gained past the rated 2250 MHz frequency, with a difference of just a few frames per second if you look over the performance across a slew of games in those TechPowerUp reviews. We're talking around 2 fps for 100 MHz and 5-8 fps for 200 MHz, depending on the resolution you're testing at.

In fact, it was mentioned by Mark Cerny that past a certain frequency ceiling, error rates were hurting performance and so Sony settled on around 2.23 GHz for their GPU frequency in the PS5. We are also observing this for desktop RDNA 2 chips sent to reviewers, though I do wonder if the reviewers are getting "golden" samples which are able to clock WAY above the rated frequencies with some reliability in order to drive demand.

At any rate, the power efficiency/performance curve probably looks pretty horrible and there's not much benefit in application performance increases to hitting 2.4 to 3.0 GHz from the stock frequencies (aside from being able to output a large amount of heat). GamersNexus were seeing a 2-4 fps difference for 2400-2500 MHz with memory overclocking, further validating the above table's conclusions.

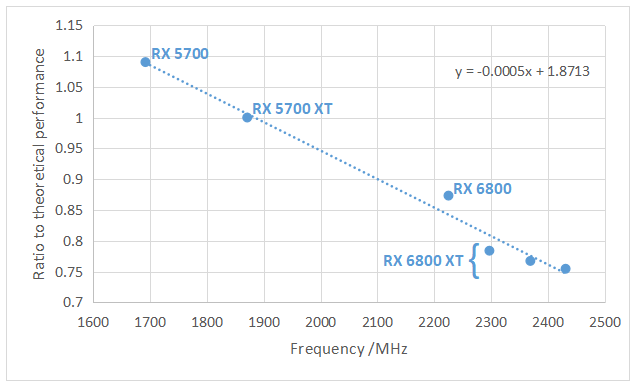

When I plotted the ratio of the real world performance to the theoretical performance (normalised to the performance of an RX 5700 XT) against frequency*, this drop-off showed up quite clearly. The three RX 6800 XT cards at various frequencies show how the efficiency curve at a given number of compute units in the architecture affects the performance near that upper frequency boundary around 2.3 GHz.

Obviously, you normally wouldn't compare across architectures but I think the difference between RDNA 1 and RDNA 2 is small enough that we can do this as long as we are aware that these are not "gospel" truth but approximations.

*Yes, that was as confusing to write as it was to read...

The RX 5700 data is least reliable as I could not find a single source for comparison and had to extrapolate performance relative to the Eurogamer results...
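For anyone wanting to reproduce the plotted ratio with their own benchmark numbers, the calculation can be sketched like this. The function name is hypothetical, the 1755 MHz reference clock for the RX 5700 XT is an assumption, and the three input points are made-up placeholders chosen only to show the shape of the drop-off; they are not measurements.

```python
def scaling_efficiency(measured_relative, cus, clock_mhz,
                       ref_cus=40, ref_clock_mhz=1755):
    """Measured performance (RX 5700 XT = 1.0) divided by the
    CU x clock estimate relative to the same reference card."""
    theoretical = (cus * clock_mhz) / (ref_cus * ref_clock_mhz)
    return measured_relative / theoretical


# PLACEHOLDER inputs, not benchmark results: a 72 CU card whose measured
# performance barely moves while its clock keeps rising.
hypothetical_runs = [(2250, 2.00), (2350, 2.02), (2450, 2.04)]

efficiencies = []
for clock_mhz, measured in hypothetical_runs:
    eff = scaling_efficiency(measured, cus=72, clock_mhz=clock_mhz)
    efficiencies.append(eff)
    print(f"{clock_mhz} MHz: real/theoretical = {eff:.3f}")
```

A ratio that falls as the clock rises is exactly the pattern described above: the extra MHz are being paid for in power without being returned as frames.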

So that's really the simple answer and it was given to us back in March 2020 - RDNA 2 doesn't scale past 2.3 GHz. But what does this mean for Navi 22? Aka, the RX 6700 XT...

Damned if you do and damned if you don't...

Nvidia got a lot of flak for providing no increase in rasterisation performance in their RTX 20 series cards over the prior 10 series generation. Instead they opted to focus on RT and tensor core performance and software. Now, with the RTX 30 series, they've pushed forward with performance in all arenas, giving people a reason to upgrade. However, people have been missing something from the RX 6000 series launch, at least from what I've seen: as I laid out above, AMD just pulled the same move as Nvidia did for the RTX 20 series.

Yes, nobody seems to have noticed it because, last generation, AMD didn't make a die large enough to be equivalent to the RX 6900 XT, but let's look at the facts: the RX 5700 XT was as fast as an RTX 2070. If AMD had made an 80 CU die like they've done this generation, they would have won last generation's performance crown, because their 72 CU RX 6800 XT, with no better performance per clock, is beating an RTX 2080 Ti/3070 by around 30%...

Taken from TechPowerUp, you can see that a theoretical 80 CU RDNA 1 card would have demolished the RTX 2080 Ti...

Yes, that theoretical card would have been power hungry and it would have been expensive to produce and it would have been inefficient but it would have won. But it wouldn't have had ray tracing and it wouldn't have brought in the money for AMD, who needed to focus their energies elsewhere at the time (i.e. Zen 2 and the two upcoming next gen consoles). So they scrapped it.

Though, here's the problem AMD now face with the RX 6700 XT - it isn't any better than the RX 5700 XT, clock for clock, and AMD have a hard limit of around 2.3 GHz on how far their RDNA 2 architecture can usefully be clocked.

Now AMD find themselves in the same situation that Nvidia did and, simultaneously, in a worse situation of their own making. They don't have a lot of extra performance they can put into this SKU (around 15-20% at 2.3 GHz, and who knows the yields at that frequency!), they already charged $400 for that card over one year ago, and they are facing a $400 RTX 3060 Ti that is more powerful than an RTX 2080 Super.
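With no per-clock gains and the same 40 CUs, the only headroom left is frequency, so the uplift is just the ratio of clocks. The sketch below is a hedged illustration: the function names are hypothetical, the 2.3 GHz ceiling is the figure argued for above, and which RX 5700 XT clock to use as the baseline is an assumption (the official game and boost clocks are shown; real sustained clocks vary by card and game).

```python
CEILING_MHZ = 2300  # the useful RDNA 2 frequency limit argued for above


def clock_uplift_percent(baseline_mhz, ceiling_mhz=CEILING_MHZ):
    """Percentage gain from clock alone, assuming equal CU count
    and equal performance per clock."""
    return (ceiling_mhz / baseline_mhz - 1) * 100


def implied_baseline_mhz(uplift_percent, ceiling_mhz=CEILING_MHZ):
    """Baseline clock that would produce the given uplift at the ceiling."""
    return ceiling_mhz / (1 + uplift_percent / 100)


# Official RX 5700 XT clocks: 1755 MHz game, 1905 MHz boost.
for label, mhz in [("game clock", 1755), ("boost clock", 1905)]:
    print(f"From {label} ({mhz} MHz): +{clock_uplift_percent(mhz):.1f}%")

# Sustained clocks that correspond to a 15-20% uplift.
for pct in (15, 20):
    print(f"+{pct}% implies a baseline of ~{implied_baseline_mhz(pct):.0f} MHz")
```

Under these assumptions, a 15-20% gain corresponds to an RX 5700 XT that already sustains roughly 1.9 to 2.0 GHz in games, which is why the ceiling leaves so little room.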

AMD charged too much for the RX 5700 XT* and as a result, despite adding lackluster ray tracing performance**, they have to go lower. They can't afford not to because the RTX 3060 and 3060 Ti are waiting to kneecap this SKU and the probable RX 6700. Don't get me wrong, around RTX 2080 raster performance for $300 would be great, but you're not really getting a very RT-capable card for that price, and an RTX 3060 will most likely be available for the same money.

*In fact, they had to lower the price before release because the RTX 2070 was more powerful at a similar price point with ray tracing features...

**With 40 CUs, the RT performance will be quite low considering RT performance on the RX 6800 with 60 CUs...

So how can AMD compete? They can't... and so they can only do one thing: don't release RDNA 2 in the lower SKUs.

The rumours have been going on about an RX 5000 series refresh for a long time now. Is it possible that Navi 22 is just the Navi 10 refresh? If the core optimisations of RDNA 2 are provided to the RDNA 1 architecture, minus RT, then you get an improvement in energy efficiency (perf per Watt), improved clock frequencies and a smaller die size, since the infinity cache is no longer needed once the bandwidth and data structures required to support RT are gone. That could account for the leaked, very minor improvement from a TGP of 225 W to 186-211 W, as those figures fall well short of the 2x performance per Watt we are expecting from RDNA 2...
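As a rough check on how minor that TGP change is, the perf-per-Watt improvement can be estimated directly. This sketch assumes the leaked part delivers the same performance as the 225 W card (`perf_ratio=1.0`), which is my assumption rather than anything in the leaks; the function names are hypothetical.

```python
def perf_per_watt_gain(old_tgp_w, new_tgp_w, perf_ratio=1.0):
    """Perf/W of the new part relative to the old one.
    perf_ratio is new performance / old performance (assumed 1.0)."""
    return perf_ratio * old_tgp_w / new_tgp_w


def perf_ratio_needed(target_gain, old_tgp_w, new_tgp_w):
    """Performance ratio required to hit a target perf/W gain."""
    return target_gain * new_tgp_w / old_tgp_w


OLD_TGP_W = 225
for new_tgp_w in (211, 186):
    gain = perf_per_watt_gain(OLD_TGP_W, new_tgp_w)
    needed = perf_ratio_needed(2.0, OLD_TGP_W, new_tgp_w)
    print(f"{new_tgp_w} W: {gain:.2f}x perf/W at equal performance; "
          f"2x perf/W would need {needed:.2f}x the performance")
```

At equal performance that works out to only around 1.07x to 1.21x, so reaching 2x would require a very large performance jump at the same time.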

That way AMD can drop prices for a chip that provides around RTX 2080 performance for about $250-300 when Nvidia are struggling to get the RTX 3060 out at $300 and fewer people mind the lack of RT features at that price point. However, it's not the first time that we've seen non-existent SKUs being tested and/or leaked and RDNA 1 was meant to have a bigger die released.

This does fly in the face of numerous leaks and benchmarks, which RedGamingTech has been privy to, that put the RX 6700 XT with 96 or 64 MB of infinity cache. It also flies in the face of the fact that AMD themselves have stated that RDNA 2 will exist across their whole lineup, from top to bottom... though they did place a huge caveat on that statement: "over time". Which, basically, can mean anything! Further to this, WCCFTech have not linked any amount of infinity cache to the SKU, potentially backing up the idea that this is a card lineup lacking RT.

However, there is one more problem of AMD's own making - they have a massive hole in their hierarchy! Their RDNA 2 cards currently stop at 60 CU and $680; their RX 6700 XT cards would go for less than half that price if they're at $300. They have no mid-range and there's no product that they appear to have to fill it with!

This is where I'm at a complete loss with regards to AMD's plan.

She cannae take moar power, cap'n...

If it wasn't for that little girl and that damned dog...

So, Nvidia had a generation where they didn't improve raster performance, instead opting for RT and AI features, following that with the performance uplift in all three. AMD did the inverse and provided the raster performance in the RX 5000 series that Nvidia has provided this generation and are now focussing on the RT/AI implementations in the RX 6000 series.

The problem I'm seeing is that AMD's current architecture is at a dead-end. It's at the end-point - it won't scale any further. For RDNA 3 they need to re-architect around RT and AI. They can't just scale outwards, as we can see that for increasing CUs you don't get linear performance uplifts, and programmers find it more difficult to utilise that many resources instead of faster resources anyway. There've been rumours that RDNA 3 will be chiplet-based and that makes sense to me, as you'd have one chiplet focussed on RT calc, one on AI and one on raster/texture/shader.

Nvidia are also facing a similar but less severe problem - they'll likely have to go chiplet-based as well (or at least 3D stacking) but their RT and AI cores are not removing resources from their CUDA cores, they're just stopping Nvidia from implementing more CUDA within the same die area. Nvidia can also make parallel gains in their CUDA, RT and Tensor cores independently, whilst also improving the supporting infrastructure without any one of them affecting the others. AMD's architecture has at least their compute and RT utilising the same silicon, meaning that if you make adjustments to one, you intrinsically affect the other - you may even end up removing performance from one when trying to improve the other...

The way I'm reading things at this point in time is that Nvidia have won this generation because AMD haven't provided (and can't provide) the number of cards that are required for the market and they do not have efficient die/SKU-scaling/product segmentation like Nvidia have managed.

I have to admit, I'm also part of the problem. Instead of waiting for an RX 6800, I caved in and bought an RTX 3070. What could I do? I wanted the upgrade, I needed the upgrade, and I couldn't wait for the upgrade. AMD dropped the ball on its way to my hands...
