
| This year, I'm on time... |

Snow is falling, winds are blowing, and it's that time to look back over the year and trend the night away! 2023 was a bit of a disappointment but I've got a good feeling about this year*...

*Okay, let's be honest, I've already seen the data!

In Brief...

I'm not going to rehash the ins and outs of how and why I do the trending; you can visit any of the prior years' assessments for that. What's important to note is that this isn't a definitive measurement: there are caveats and limitations on the data gathered.

As usual, all data is available, here. And with that, on with the show!

Safety Dance...

If last year felt like a regression or a disappointment, 2024 is a welcome return to form. Way back in 2020, when I began this series, the latest generation of consoles hit and in retrospect, it's now possible to see that in years where console hardware increments itself, there are spec bumps across the board.

Previously, I had operated under the assumption that required specifications for games worked along a sort of "long-tail" approach, whereby hardware is released and developers predict the performance of future hardware from the point at which they start development. However, I'm rethinking this assumption. Sure, it still occurs - it's only natural to predict - but it is becoming apparent in this trending data that developers "react" to hardware launches in real-time, too*.

*Though this didn't appear to be a trend when the PS4 and Xbox One released...

We'll come back to that point later but I wanted to pre-emptively frame these analyses with that thought in mind!


Last year, I was predicting around the 10600K-level of performance for both CPU single and multi-core performance in game requirements in 2025. What we've seen from this year is a large uptick in those predictions, with the single core performance exceeding a 10600K - which is around that of an i9-9900K - and multi-core performance matching that of a Ryzen 7 3700X.

The big takeaway from this is that games are getting more cognisant of multi-threading and taking advantage of those threads, even if it feels slow from a consumer perspective. UE5 is a particular driver of this trend and as games that have adopted that technology are released, we will see the multi-core performance requirements increase further.


What we can see is that there is not as large of a correlation between multi-core performance and hardware releases as there is with the single-core performance. That's pretty interesting but, I think, speaks more to the dominance of single-thread throughput in computing applications, in general. Yes, more threads help, but they're not the driving force in the need to upgrade your PC hardware. Something that Hardware Unboxed has been relaying in excruciating directness for a long time... and something that I've also delved into.

Saying that, we can trend the requirements for cores/threads on the CPU and I was obviously predicting way too hard back in 2020, where I thought the rise of the multicore CPU would push things further than it has in reality. In this reality, we are still able to purchase six core CPUs at the low end of the spectrum and we haven't yet migrated to eight cores as the sort of minimum generational product like we did when we jettisoned four cores and adopted six...

Both my predictions for the mode of cores and threads are beyond what developers are requiring, giving further evidence against that argument mentioned above - overall CPU performance matters. The big development we see in 2024 is that the average cores and threads have once again risen above the modes of those respective metrics. This indicates that there is an upward trend; it's just not large enough to affect the value in a discrete sense. Again, that's a large jump for the average thread increase.
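To illustrate why an average sitting above the mode hints at an upward drift, here is a minimal sketch. The core counts are made-up illustrative values, not the tracked dataset, and `summarise` is a hypothetical helper name.

```python
from statistics import mean, multimode

# Hypothetical recommended core counts for one year's tracked games
# (illustrative values only, not the article's dataset).
core_counts = [4, 6, 6, 6, 6, 6, 8, 8, 8, 12]

def summarise(counts):
    """Return (mode, mean) for a list of required core counts."""
    # multimode returns every most-common value; take the smallest on a tie.
    mode = min(multimode(counts))
    return mode, mean(counts)

mode, average = summarise(core_counts)
print(f"mode = {mode}, mean = {average:.1f}")
if average > mode:
    # A handful of higher-core requirements pull the mean up before
    # they are common enough to shift the most frequent value.
    print("Mean above mode: requirements are drifting upward")
```

The mode only moves in discrete steps (six cores to eight, say), whereas the mean responds to every game that asks for more, which is why it acts as the earlier signal.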


Looking at this data, I think we might be able to predict that the time of the six core CPU is coming to a close within the next 2 years. We may even see AMD's next generation not even have a six core part. Intel has already made this jump as of the Arrow Lake (Core Ultra) generation of products, though it seems less necessary on AMD's part since they still have a performance advantage in gaming...

Getting back to that point about developers responding to generational console hardware increments, there is a clear lull in the year before the release of new hardware and then a jump in the performance the year of release; 2016, 2020, and 2024. Prior to this, at least in my trending data, there is no correlation. We can even observe a "double hump" in 2016 - 2017 to cover the staggered release of both the Pro and One X.

I'm left wondering whether the scalability of modern game engines has resulted in a situation where developers are able to cram in more things towards the end of development when the new console hardware is shown (or disclosed) to them or whether this is a huge coincidence?

All, hail to the king...


| The 'needle' has barely moved between 2023 and 2024... |

GPU performance requirements show no such correlation. That may be because, for various reasons, GPU performance itself has still not stagnated, and because the requirements for GPU performance are not stagnating either. This is an important distinction to make!

GPU performance (or perhaps more accurately, perceptual GPU performance) has been steadily advancing through a combination of more powerful architectural designs, smaller process nodes, and the increasing use of upscaling and machine learning techniques.

On the other hand, the requirements of GPU performance are also not stagnating. This is through a combination of ever-expanding graphical feature sets (e.g. ray tracing, DX12), GPU compute, and, lastly (and finally) a resolution shift.

Back in 2021, I looked at the relative value of GPUs throughout the years. I compared value within generations, between generations, and within the same class. However, one of the salient points I made was this:

"Graphics cards and their performance are really relics of their time: without games to push them and without the popular resolutions of the day being present, their performance and price ratios are meaningless."

I noted that we had a pretty static resolution target for around ten years of 1024x768 from around 2000 to 2011, which was followed by 1920x1080 in around 2010-2011*. 1080p has pretty much been the main focus of both reviewers and consumers until this year, 2024. Yes, many benchmarking sites and reviewers also include 1440p and 4K data in their summaries but all GPUs released have their baseline testing set at 1080p to this day.

*I'm speaking about the benchmarking scene, here. Not what's in the most adventurous and well-heeled consumers' hands!

However, in 2023 the VRAM question really took off in consumers' minds and was answered by reviewers in increasing frequency. Yes, these questions existed before. But, let's say it wasn't as big of a deal as consistently as it became (which is fair enough!).

The reason this is important is because, aside from increased pressure by improved graphical features and texture detail, it is now becoming apparent that we are in the midst of the real shift to a resolution of 2560x1440 being the baseline target for consumers. Yes, monitors of decent quality have finally become available at a low-enough price that 1080p monitors are no longer even really a consideration except for the most ultra-budget cases. This is going to put a large amount of increased pressure on GPU manufacturers for increased VRAM capacity.


This is interesting for two reasons: 1) VRAM manufacturers are still dragging their feet on both faster and higher capacity GDDR modules; and 2) the rumoured low and mid-range RTX 50 series cards are still supposedly shipping with 8 and 12 GB of VRAM, respectively, next year. Though, I am hesitant to buy in to that RTX 5060 rumour - which is mostly based on leaks relating to the laptop configuration. I'm hoping that the desktop RTX 5060 will use 24 Gb modules which would grant a total of 12 GB VRAM - matching that of the rumoured RTX 5070, though with a narrower memory bus.
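The 24 Gb arithmetic can be sketched quickly. This assumes a 128-bit memory bus for the low-end card and a 192-bit bus for the card above it (both are my assumptions for illustration, not confirmed specifications), and relies on each GDDR6/GDDR7 chip having a 32-bit interface. `vram_capacity_gb` is a hypothetical helper.

```python
# Each GDDR6/GDDR7 memory chip exposes a 32-bit interface, so the bus width
# fixes how many chips a card carries (ignoring clamshell layouts).
BITS_PER_CHIP = 32

def vram_capacity_gb(bus_width_bits, chip_density_gbit):
    """Total VRAM in GB for a given bus width and per-chip density in gigabits."""
    chips = bus_width_bits // BITS_PER_CHIP
    return chips * chip_density_gbit / 8  # 8 bits per byte

# ASSUMPTION: bus widths below are illustrative, not confirmed specs.
print(vram_capacity_gb(128, 16))  # 128-bit bus, 16 Gb (2 GB) chips
print(vram_capacity_gb(128, 24))  # 128-bit bus, 24 Gb (3 GB) chips
print(vram_capacity_gb(192, 16))  # 192-bit bus, 16 Gb (2 GB) chips
```

Under those assumptions, swapping 16 Gb chips for 24 Gb chips lifts a four-chip card from 8 GB to 12 GB without touching the bus, which is why the capacity could match a wider card while the bandwidth would not.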

However, we are already seeing that at 1440p, 12 GB equipped GPUs are the recommended configuration for modern, demanding titles and this is only likely to accelerate as we go forward. But I'm getting ahead of myself - we'll address VRAM trending later in this article.

Getting back to actual performance numbers, the main problem with moving to a new resolution standard/target is that the GPUs are really not ready to handle it - let alone at logical price points! We had this same pain back in the transition to 1080p, so, it's not unexpected but it is a little disappointing. Both Nvidia and AMD have known for a long time that 1440p was "coming" but neither have provisioned for it in their low-end cards. Intel's newly released B580 is the first card in that segment to actually do so...

So, we're probably looking forward to these low-end cards from Nvidia (if not AMD, as well) becoming sub-60 fps cards at native resolution* in 1440p in demanding titles.

*Line up and make your arguments in the comments below...


| This data is generalised and shows approximate performance for a demanding game at point of release for each card... |

Returning from my pontificating, GPU performance is right on track with where it was last year and only slightly behind the predictions from 2020: I had marked 2024 as the year requiring RTX 2080 Super levels of performance, and we're now expecting that in 2025.

There's not much to analyse, here, other than to note that GPU requirements inexorably increase year after year in a rather nice curve...
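For anyone wanting to play with this sort of curve themselves, here is a rough sketch of one way to extrapolate a yearly requirement. It assumes exponential growth and uses a plain least-squares fit on the logarithm of the score; that is my assumption for illustration, not necessarily how the predictions in this series are produced, and the index values are invented.

```python
import math

def fit_exponential(years, scores):
    """Fit score = a * growth**(year - years[0]) via least squares on log(score)."""
    xs = [y - years[0] for y in years]
    ys = [math.log(s) for s in scores]
    x_bar = sum(xs) / len(xs)
    y_bar = sum(ys) / len(ys)
    # Ordinary least-squares slope and intercept in log space.
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sum(
        (x - x_bar) ** 2 for x in xs
    )
    intercept = y_bar - slope * x_bar
    a, growth = math.exp(intercept), math.exp(slope)
    return lambda year: a * growth ** (year - years[0])

# Hypothetical GPU performance index per year (illustrative values only).
years = [2020, 2021, 2022, 2023, 2024]
index = [100, 112, 125, 141, 158]
predict = fit_exponential(years, index)
print(f"2025 estimate: {predict(2025):.0f}")
```

Any fit like this is only as good as the assumption that the curve keeps its shape, so treat the output as a rough guide rather than a forecast.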

Rock around the Christmas Tree...


| Like Mt. Everest pushing through the clouds... |

This is where things get a little exciting for me... sad as that may seem.

Last year, I lamented the stagnation we were seeing in the RAM requirements of games and, if you look at that chart above, you'll see that we've been fannying around with 8 and 16 GB of system memory since 2016, with 12 GB giving a good show for all its uneven glory! But, wait, what's this? Doth mine eyes deceiveth me?!

Okay, I made a choice in this presentation of data for third place for two reasons: 1) you can't buy a sensible configuration of 12 GB of RAM! and 2) if we're looking to increase from 16 GB, why would we be looking back at a lower quantity of RAM?

The fact of the matter is that we have suddenly, jarringly, observed a rather large uptick in system memory requirements in 2024. It's something that I've been calling for, for years and now it's finally coming to pass, I feel a tear welling from the corner of my eye. Almost 11 % of the games I tracked this year called for 32 GB of RAM - that's a bigger jump than when we first saw 16 GB requirements back in 2014/2014 of around 3 - 4 %.
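The percentages quoted here are simply each RAM amount's share of the games tracked in a year. A minimal sketch of that calculation, using an invented sample rather than the real data (`requirement_shares` is a hypothetical helper):

```python
from collections import Counter

def requirement_shares(ram_gb_per_game):
    """Map each RAM amount to the percentage of games recommending it."""
    counts = Counter(ram_gb_per_game)
    total = len(ram_gb_per_game)
    # most_common() orders the result from most to least requested.
    return {gb: 100 * n / total for gb, n in counts.most_common()}

# Hypothetical sample of recommended RAM (GB), one entry per game.
sample = [16] * 12 + [8] * 5 + [32] * 2 + [12] * 1
shares = requirement_shares(sample)
for gb, share in shares.items():
    print(f"{gb} GB: {share:.1f} %")
```

Ranking by share like this is what produces the first, second and third place amounts discussed above.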

I'm pretty happy it's finally happening...


However, the story surrounding VRAM isn't as pretty and, as I noted previously, developers are stuck between a rock and a hard place because of the lacklustre provisions from GPU manufacturers. The 8 GB requirement sits at a whopping 86 % of the tracked games and this is the highest proportion that any VRAM requirement has risen to. Previously, the highest requested was a 1 GB framebuffer back in 2013 with 57 %...

However, seeing a small jump in 3rd place to 12 GB shows that the tide is turning and both AMD and Nvidia need to get onboard with providing more VRAM to consumers at the low-end in their product stacks.


| Yes, yes! I could have chosen 12 or 24 GB of RAM in the graphs above... So, sue me! |

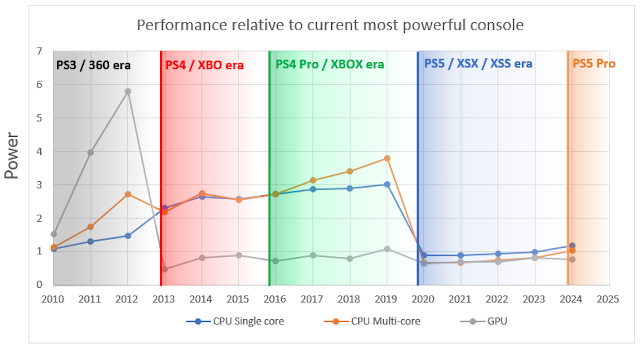

Console Comparisons...

We can also take a look at the relative performance increase of the required PC specification for CPU in the year of each console hardware launch.

We're seeing the single- and multi-core performance nearing a similar delta to that observed during the transition to the PS4 Pro and Xbox One X. We're not quite there yet, but we're likely to close the gap in the next year.

In terms of trending, there is something that I'm not sure I've pointed out before: we were getting a new piece of console hardware every time the requirements reached 1.5x the performance of the year of the last hardware release. Since we have no real CPU increase in the current gen, that pattern doesn't appear to be holding for the release of the PS5 Pro...
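The 1.5x observation boils down to dividing each year's requirement by the value in the console launch year and seeing when the ratio crosses a threshold. A small sketch under that reading, with made-up benchmark scores and hypothetical function names:

```python
def relative_to_launch(perf_by_year, launch_year):
    """Express each year's requirement as a multiple of the launch-year value."""
    base = perf_by_year[launch_year]
    return {
        year: perf / base
        for year, perf in perf_by_year.items()
        if year >= launch_year
    }

def first_year_reaching(perf_by_year, launch_year, threshold=1.5):
    """First year at or above `threshold` times the launch-year value, else None."""
    ratios = relative_to_launch(perf_by_year, launch_year)
    for year, ratio in sorted(ratios.items()):
        if ratio >= threshold:
            return year
    return None

# Hypothetical CPU benchmark scores for the average requirement each year.
cpu_scores = {2013: 1000, 2014: 1100, 2015: 1250, 2016: 1520, 2017: 1700}
print(first_year_reaching(cpu_scores, 2013))
```

The threshold is a parameter here because 1.5x is an observed pattern rather than a rule, so it is worth trying other values against real data.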


| CPU performance, relative to the current most performant console (not including current year of release)... |

On the other hand, the same comparison between the performance increase relative to that of the average GPU requirement in the year of each console hardware release shows a continued downward trend as the GPU performance gained per generation at the low-end becomes smaller and smaller...

Believe it or not, this data flies in the face of gamers claiming that system requirements are "getting out of hand" or complaining about "unoptimised games!". The fact is that developers are requiring less and less from gamers in terms of GPU performance as time goes on... (Not that they can actually demand more since gamers can't buy more performance - but that's another discussion!)


| You're getting less and less from GPU manufacturers over the years, so developers are requiring less and less from you... |

Another interesting angle is the comparison with the current most powerful console. In 2024, that's the PS5 Pro... What's interesting here is that the PS5 Pro hasn't provided any real performance uplift over the base PS5 in the CPU department, meaning that the delta in CPU performance is going to keep increasing until the next gen consoles release. At the moment, that delta is very small and it makes sense given that the CPU in the current generation of consoles is more equivalent to a desktop part than any before it since the mid-2000s.

The comparison with the GPU requirements relative to the PS5 Pro obviously takes a dive, but I'm anticipating a similarly quick recovery to the one we saw after the introduction of the PS4 Pro - games are getting more graphically demanding and, despite the consoles being held back by both the Xbox Series S and "lower than desktop low" settings, recommended requirements on the PC are still increasing.


| This chart isn't surprising but it does show how 'good' and 'balanced' the current generation of consoles really was in a technological sense... |

Wrapping Up...

Well, that's it - once again! I hope you found this installment of the yearly trending interesting and perhaps even enlightening!

One thing I want to add over the coming weeks and months is new predictions for the next few years. Yes, we still haven't reached the cut-off period but I think it'll be interesting to derive the future afresh, given all the data we currently have amassed.

While I didn't show it above, the yearly RAM requirements prediction was that 3rd place would be 32 GB and 2nd place would be 24 GB. Those predictions are reversed, which actually makes sense given RAM configurations and prices as we currently know them. Meanwhile, my VRAM predictions are now a little off: in 2024, I was predicting 10 GB would be 2nd most required while it remains at 6 GB... So, there's some tweaking to perform!

2 comments:

Great writeup, and can't argue with any of your main points.

And to ME, the key takeaway for 2025 is this: "Both Nvidia and AMD have known for a long time that 1440p was "coming" but neither have provisioned for it in their low-end cards." 1440p panels are so cheap now that there's very little value to be had sticking to 1080p; the price delta is so small. GPUs need to keep up. With the B580 being the trend-setter here, I'll be very interested to see how it fares if NV/AMD price-comparable 2025 cards are still stuck on 8GB.

Yeah, the problem is going to be volume and cost to Intel: as far as I understand it, they're losing money (or not making money) on Battlemage and it doesn't appear to be a high volume product so they're really not holding the other two manufacturers' feet to the fire...
