
It's time for the yearly round-up of game recommended system specifications trending data!

First up, I want to pay tribute to the person who helped inspire me to begin this yearly endeavour. It was his cataloguing of the games released over a ten-year period on Steam that jump-started this whole thing. He will be missed...

However, games keep being released and keep requiring more demanding hardware... so my Sisyphean task remains.

Covering the basics...

I have to quickly go over some things before relating the data - which can be found here. I have refreshed the data from the current Geekbench and Passmark databases as there has been a significant amount of drift over the last year as these benchmarking programmes have evolved. Similarly, Userbenchmark (whose actual data I never had a problem with, and found lined up quite nicely with other benchmarking services) has also evolved in how they apply their biases/assumptions, etc. However, updating all the numbers for just two benchmarks was enough work. So, if I get around to it, I will update the Userbenchmark data at a later point.

The games that are included in the assessment are hand-picked by me, so there is an inherent bias in this data. However, I am picking games that I feel are popular for whatever reason (competitive, cultural, etc.), require some amount of hardware to run (i.e. not a game that can run in a browser), or are representative of the latest challenges placed on PC gaming rigs.

Since a LOT of high-profile games were delayed from a 2022 release to, well, just about now, I think the relative increase this year has been quite low compared to what it should or could have been. Looking at the first few games released in 2023, if they indicate any sort of trend, we are looking at a generational leap in system requirements as the inter-generational period finally ends...

Buckle up! 2023 is shaping up to be a wild ride...

Boy, don't hurt your brain...


| Last year, we saw a drop in the trend; this drop is observed once again... (Grey line was the fit from 2021) |

As noted above, the delay of some of 2022's most anticipated titles into 2023 has severely reduced the single core requirements for games released this year. We do see an increase in requirements, at least - unlike last year, where we observed a slight regression. However, we are still looking at single core performance of greater than an Intel 10600K/10700K.


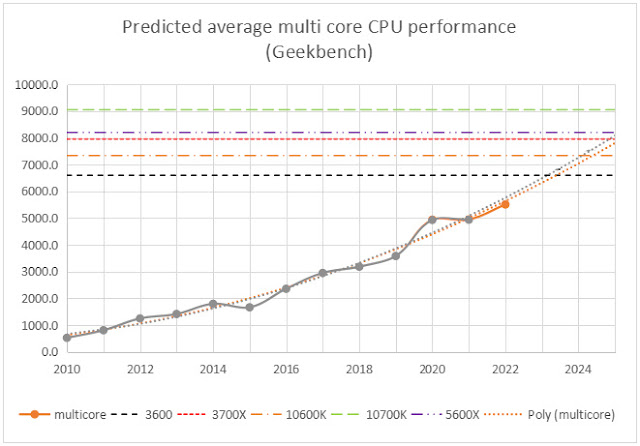

| We also see an associated drop in multicore predictions, but the jump in actual requirements this year was much larger here... (Grey line was the fit from 2021) |

Multicore sees a smaller decrease in predicted average recommended requirements in 2025 but we're still looking at around the performance of a Ryzen 3700X.
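For anyone wanting to reproduce this kind of projection, here is a minimal sketch of fitting a trend to yearly averages and extrapolating it to 2025. It assumes an exponential trend (a straight line through the logarithm of the score), which may not be the fit used for the graphs above, and the yearly figures in it are hypothetical placeholders rather than values from my data set. The function names are made up for illustration.

```python
import math


def fit_exponential_trend(years, scores):
    """Least-squares fit of ln(score) = a + b * year. Returns (a, b)."""
    logs = [math.log(s) for s in scores]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    b = sxy / sxx            # growth rate per year, in log space
    a = mean_y - b * mean_x  # intercept
    return a, b


def predict_score(a, b, year):
    """Evaluate the fitted trend at a given year."""
    return math.exp(a + b * year)


# HYPOTHETICAL yearly average benchmark scores, for illustration only.
hypothetical_averages = {2018: 1000.0, 2019: 1090.0, 2020: 1210.0,
                         2021: 1330.0, 2022: 1400.0}

a, b = fit_exponential_trend(list(hypothetical_averages),
                             list(hypothetical_averages.values()))
print(f"Fitted yearly growth: {100 * (math.exp(b) - 1):.1f}%")
print(f"Projected 2025 average score: {predict_score(a, b, 2025):.0f}")
```

A lower-than-expected year, like the one described above, pulls the fitted growth rate down, which is why the projected 2025 figure drops relative to the previous year's fit.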

I haven't included any performance thresholds for the latest generations of CPUs but the short of it is that they pretty much all blow the included CPUs out of the water. Anything Intel 12th gen or newer will absolutely smoke these parts.

The one caveat here is the Ryzen 7 5800X3D. On paper, it has worse single core performance than the 5600X and worse multicore score than the 10700K.

Due to the way the cache is configured on this chip, there are no standardised synthetic benchmarks that I'm aware of that will accurately capture its real-world performance in games. Going forward, parts with vastly inflated cache sizes like this one will have non-standard scaling in comparison with other chips in the same stack or even between generations.

The only data point I can find that reflects the real-world performance, and that is available in a large standardised database, is the memory latency figure provided by Userbenchmark. The performance difference, in those games that can take advantage of the 3D cache, is approximately equal to the difference in the memory latency test.

It's something that I need to look into, especially as more parts are released (from both AMD and Intel) that incorporate more on-die cache. There is a possibility that, somewhere down the road (in 2025 or so...), I will need to apply a scaling factor based on this metric...
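As a rough idea of what such a scaling factor might look like, here is a sketch that multiplies a synthetic score by the ratio of a reference chip's memory latency to the cache-heavy chip's latency. This is purely an assumption about how the adjustment could work, not something I have validated, and the scores and latencies in the example are hypothetical placeholders, not Userbenchmark figures.

```python
def cache_adjusted_score(score, chip_latency_ns, reference_latency_ns):
    """Scale a synthetic score by the latency advantage over a reference chip.

    Assumption: gaming performance differs roughly in proportion to the
    difference in measured memory latency (lower latency is better).
    """
    if chip_latency_ns <= 0 or reference_latency_ns <= 0:
        raise ValueError("latencies must be positive")
    return score * (reference_latency_ns / chip_latency_ns)


# HYPOTHETICAL numbers, for illustration only.
synthetic_score = 1000.0
adjusted = cache_adjusted_score(synthetic_score,
                                chip_latency_ns=50.0,
                                reference_latency_ns=60.0)
print(f"Adjusted score: {adjusted:.0f}")
```

The adjustment would only make sense for games that actually benefit from the extra cache, so it could not be applied blindly across the whole data set.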

Brawn for the beast...


| Predicted GPU requirements also decreased, somewhat... |

So, the good news is that CPUs are cheap, performant, and plentiful. Even the lower-end of both current product stacks is far beyond what is expected to be required for the average recommended system requirements in 2025. The problem, here, is the GPU situation...

GPUs are expensive, lacking in performance*, and still somehow not that plentiful in supply. Sure, we had a glut of cheaper sales in September and October, but those appear to have dried up. Yes! Graphics settings scale decently well on most games... but it's not an absolute truth. We're talking about the recommended system requirements for games at 1080p! Not some outlandish scenario!

*Except at the high-end, where we're still making huge strides each generation...

Added to the absolute cost of getting a certain level of graphics performance in your computer, we have issues further down the stack with VRAM quantities - but I'll get to that next.

And the memories bring back you...


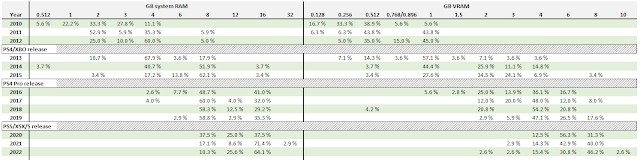

| The required amount of system memory is increasing... |

Given the vastly divergent memory design paradigms of the current gen consoles and the PC, we haven't observed any unexpected movement in system memory requirements over the last three years. Yes, we were in the inter-generational period, where games were still catering for the last gen consoles, so it's not surprising that requirements haven't shot up (though I have been advocating for it).

But, there is a small, interesting movement in the second most required specs - from 8 to 12 GB of RAM. That's interesting on two levels - one, because there is basically no recommended way to build a PC with 12 GB of RAM... and two, because it gives me a message that developers want to push things further but are trying to boil the frog, so to speak.

Yes, system requirements will have to go up over time as games get more demanding... but any system that has 12 GB of RAM will actually, most likely, have 16 GB. But, somehow, a quarter of the games I polled are requesting only 12 GB.

I can see this from the perspective of developers, as well: gamers often push back against system requirements that appear too high... the problem being that gamers have gotten used to games not advancing in requirements due to the lacklustre PS4/Xbox One and PS3/Xbox 360 console generations*. They do not remember the times when you upgraded your GPU every year or two... So, maybe, there's a logic to slowly increasing specs so that they can be pointed back towards, later, when more demanding games come out.

*In terms of hardware performance...

The other side of things is what worries me slightly. Are developers stating system requirements as an absolute requirement for the game to run or are they factoring in system overhead, too? If it's the latter - are there developers out there that state 16 GB of RAM as a requirement, not including OS and other background tasks overhead? I sincerely doubt it, but I had to explore the thought...


| ... and video memory is increasing at a faster rate. |

VRAM requirements are a bit more shocking - mostly because the advancement in VRAM quantities on low-end graphics cards has been incredibly slow since 2016. Not only has the recommended amount jumped to 8 GB but this is the first year where a game's recommended system requirements listed GPUs with a minimum of 10 GB VRAM*.

*Often two GPUs are listed in requirements and I will take the VRAM quantity from the card with the lower amount... in this case, only the RTX 3080 was listed, so maybe it's not that representative.

Going back to the GPU trending analysis, we're in a bit of a strange transition period: graphics card performance requirements are increasing at a steady pace but actual, on-card VRAM quantities are stagnating. Memory chips have to be paired with a certain bus width, and GPU designers limit the bus width on lower-end cards to avoid larger costs, because the memory controllers/interfaces take up large portions of the GPU silicon die.

Taking into account that APIs like DirectStorage could increase VRAM requirements, along with general increases due to higher graphical fidelity and implementations of ray tracing, there appears to be a movement towards requiring higher VRAM quantities, as standard. However, up until the current generation of cards, GDDR6 module capacities are limited to 8 Gb (1 GB) and 16 Gb (2 GB). This means that virtually all low-end and mid-range cards (ignoring the anachronistic RTX 3060 12 GB) are not suitable for higher graphical settings at 1080p, going forward, due to their typical 4-8 GB framebuffers.
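The link between bus width, module capacity and framebuffer size can be sketched with some simple arithmetic. The snippet below assumes each GDDR6 module occupies a 32-bit slice of the memory bus, and that a "clamshell" layout puts two modules on each slice. The function name and parameters are made up for illustration.

```python
GDDR6_MODULE_INTERFACE_BITS = 32  # assumed interface width per GDDR6 module


def vram_capacity_gb(bus_width_bits, module_density_gbit, clamshell=False):
    """Framebuffer size in GB for a given bus width and module density."""
    if bus_width_bits % GDDR6_MODULE_INTERFACE_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    modules = bus_width_bits // GDDR6_MODULE_INTERFACE_BITS
    if clamshell:
        modules *= 2  # two modules share each 32-bit slice
    return modules * module_density_gbit / 8  # 8 Gb = 1 GB


for bus in (64, 128, 192, 256):
    for density in (8, 16):
        print(f"{bus:3d}-bit bus, {density:2d} Gb modules: "
              f"{vram_capacity_gb(bus, density):.0f} GB")
```

Under these assumptions, a 128-bit card tops out at 8 GB with 16 Gb modules unless the layout is doubled up, while a 192-bit bus reaches 12 GB. That is why the narrow buses on cheaper cards go hand in hand with 4-8 GB framebuffers.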

Thankfully, AMD's mid-range RX 6700, and XT, jumped to 10 and 12 GB respectively, meaning that these provide enough performance to meet the expected requirements in just a couple of years for newer, triple A releases. Owners of even the similarly powerful RTX 3070 will likely have to limit some of their graphical settings in order to maintain performance - as I had to do for Deathloop.


| Percentage data for each RAM configuration per year saw a first for the VRAM requirements... |

Now, while this is a potential issue for owners of current and prior generation cards, there is a potential solution on the horizon: GDDR6W. This memory technology allows for modules of up to 32 Gb (4 GB) capacity, meaning that larger capacity modules could be paired with more anaemic memory setups - as are found on lower-end cards.

The issue with this is that it is not certain which memory technology will be applied for the next generation graphics chips. The high-end dies (used for the most expensive graphics cards) will mostly benefit from higher bandwidths - meaning that GDDR7 seems like the more obvious choice for those future releases since it will increase speed up to 36 Gbps from GDDR6's current 16-18 Gbps. (Though Samsung also announced 24 Gbps GDDR6 late last year.) Neither GDDR7 nor this faster GDDR6 is confirmed to come in higher capacity modules, meaning that if either technology is chosen, the VRAM drought on lower-end cards might not change. The exception would be if cards such as the RTX 4050 increased their memory bus to 192 bit but, given that the RTX 4070 Ti is already at a 192 bit bus width, this seems unlikely.

All in all, I think that memory technology and interfaces look like they will be the most interesting facet of PC gaming over the next few years...

Prediction, prediction foretold...


| I was quite close for RAM requirements, this year... |

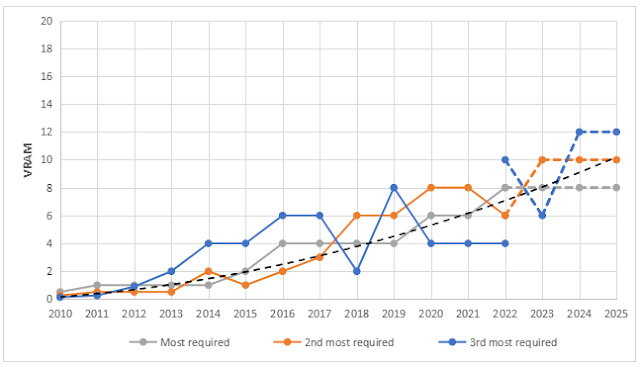

Just like the CPU and GPU prediction graphs, I also draw up RAM and VRAM capacity prediction graphs too. The difference, here, is that I am the one predicting the curve and most required, secondmost required and thirdmost required quantities because, unlike CPU and GPU performance - these are discrete quantities and not a scalable number. You either have 8 or 16 GB - you can't have 14 GB.
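For discrete quantities like these, the "most required", "secondmost required" and "thirdmost required" values simply come from counting how many games list each capacity. A minimal sketch of that tally is below. The list of requirements is a hypothetical placeholder, not my polled data, and the function name is made up for illustration.

```python
from collections import Counter


def rank_requirements(quantities_gb):
    """Return (capacity, game count, share in %) sorted from most to least common."""
    counts = Counter(quantities_gb)
    total = sum(counts.values())
    return [(gb, n, 100 * n / total) for gb, n in counts.most_common()]


# HYPOTHETICAL recommended RAM figures (in GB) for one year's games.
hypothetical_ram = [16] * 5 + [12] * 3 + [8] * 2

for rank, (gb, n, share) in enumerate(rank_requirements(hypothetical_ram), start=1):
    print(f"#{rank}: {gb} GB ({n} games, {share:.0f}%)")
```

Because the ranking is based on counts rather than an average, a capacity such as 12 GB can climb the ranking even though almost nobody builds a PC with that amount.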

These predictions are, essentially, everything I believed would come to pass, way back in 2020...

So, how am I doing? Well, the most required 16 GB is correct but, to my surprise, 12 GB has overtaken 8 GB RAM as the secondmost required by games in 2022. Things look like they're advancing faster than I thought.

Looking forward, though, I think I was likely over-ambitious to expect 24 GB quantities being secondmost required next year, despite 24 GB DDR5 modules being announced.


| I was more bullish for VRAM predictions... |

While I was mostly accurate for the first two required VRAM quantities, I was over-optimistic for the thirdmost required quantity. 10 GB VRAM may become commonly stated as a recommended requirement one day, but when only the most expensive graphics cards have framebuffers of sufficient size to accommodate that, this seems like an improbable outcome - even for next year's secondmost required prediction.


| From the graph, I am FAR away from reality... |

I also took a swing at predicting the number of cores most required by games, too. The graph, above, looks like I got things pretty incorrect. However, looking at the raw data, we're almost at my predictions: 12 games had 4 cores and 11 games had 6 cores, while 10 games had 8 threads and 9 games had 12 threads required.

What we are seeing, though, is an increase in the average cores and threads required to run games. Sure, these don't actually correspond to an actual processor you can buy (at least I'm not aware of any 6 core 10 thread SKUs!) but they do indicate a trend of increasing CPU resources being required.
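To show why an average can land on a core or thread count that no real processor has, here is a small sketch of a weighted mean over a tally of games. It uses only the two largest buckets quoted above for cores and threads. The full data set contains other buckets, so the results here are illustrative and will not match the averages in the graph.

```python
def weighted_mean(tally):
    """Mean of the keys in `tally`, weighted by the number of games per key."""
    total_games = sum(tally.values())
    return sum(value * games for value, games in tally.items()) / total_games


# Only the two largest buckets quoted in the text; other buckets are omitted.
core_tally = {4: 12, 6: 11}     # cores -> number of games
thread_tally = {8: 10, 12: 9}   # threads -> number of games

print(f"Average cores (partial data): {weighted_mean(core_tally):.1f}")
print(f"Average threads (partial data): {weighted_mean(thread_tally):.1f}")
```

The mode (the single most common value) and the mean answer different questions: the mode is what the prediction graph tracks, while the mean shows the overall drift towards more CPU resources.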

So, next year, I am expecting to be correct on this one.

Wrapping up...


| I think that this graphic shows how underpowered the prior generations of console CPUs were... |

We're now moving into the third year of this current generation of consoles and we're only now, in 2023, really beginning to get ports of the games that are exclusive to this generation of hardware.

I really don't have an issue with the expense and performance of either processors or system memory - as gamers, we appear to be in good stead. Graphics cards are a different story and, despite their hugely increasing expense, I am left wondering if we are really getting enough value for our money.

In the here and now, we're doing okay. An RX 6600 or an RTX 3060 8 GB will play 1080p games at the recommended settings now but, in just two short years, will they be able to?

Even mid-to-high end cards like the RTX 3070 and 3070 Ti lie on that cusp of being functionally hindered by their VRAM capacity, whereas the AMD equivalents all have sufficient memory to last - just as we saw back in 2016 with the RX 400 series releases.

In the past, I've advocated for spending a lot (and keeping your GPU for years) or spending as little as possible (and upgrading more often).

I think that advice still stands.

Next time, I want to update the cost of buying a computer that meets the current averaged recommended system requirements for gaming, covering 2020 to 2022. I'm also working on a blogpost covering the power scaling of the RX 6800 as I would like to see how the efficient chip of RDNA 2 compares to the efficient chip of Ampere - the RTX 3070.
