
Most outlets at this time of year are looking back at their favourite games of 2020 or the best specific pieces of gaming hardware (e.g. monitors or cases). I haven't played enough games (and more specifically, new games) this year to make the endeavour worth it. I don't own enough different pieces of hardware that I can review any releases within the last ten years, let alone this year!

What I can do, though, is follow up on the hardware trending articles I put out during this year in an attempt to plot where we've come from in terms of recommended requirements in gaming and where we're heading.

Just to briefly remind everyone once more: these are based on the official "recommended requirements" for each "major" game released within a year*. This list is not exhaustive and is heavily biased towards the "big" games that have a lot of money spent on them and large numbers of people working on them. In my opinion, this bias in the data is important because these are the games that reach large swathes of the audience, and so the requirements to play them have a comparatively big impact on the industry as a whole.

*Just going through the list, I've noticed that several E-sports titles (and Fortnite) are not included. That's something I can remedy in the future but the requirements of those titles will not overly change the data discussed below.

That is not to say that indie and smaller games do not have impacts on the gaming industry, just that they tend to not have much of an impact on hardware requirements or the need to purchase new hardware in order to continue to enjoy gaming.

- Recommended Requirements are those chosen by the developers and usually correspond to a performance target of 30 frames per second on medium/normal graphical settings at a resolution of 1920x1080.

- A major game is one defined as AA or AAA (aka, big budget, graphically intensive games) and/or hugely popular games. These are a bit subjective and are chosen by myself.

At any rate, you can take a look at the raw numbers yourself, over here.

CPU Performance...

[Figure: Comparison between mid-year results (grey) and end-of-year for 2020...]

It's telling that, based on only six months of additional game releases, both the single- and multi-core requirements have increased, with both performance trends switching from a linear to a polynomial fit in the process. I could fit the performance to a linear curve as well but I do not believe that it will scale in this manner over the next five years - but let's see.
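As a rough sketch of what switching from a linear to a polynomial fit does to a five-year projection, the snippet below fits both to a small series and extrapolates. The data points are synthetic placeholders generated from a known curve, not the values in my spreadsheet, and the helper names `fit_polynomial` and `predict` are purely illustrative.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares polynomial fit via the normal equations.

    Returns coefficients ordered from the constant term upwards.
    """
    n = degree + 1
    # Normal equations: A[i][j] = sum(x^(i+j)), b[i] = sum(y * x^i)
    A = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = A[row][col] / A[col][col]
            for k in range(col, n):
                A[row][k] -= factor * A[col][k]
            b[row] -= factor * b[col]
    # Back substitution
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        rest = sum(A[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (b[i] - rest) / A[i][i]
    return coeffs


def predict(coeffs, x):
    """Evaluate the fitted polynomial at x."""
    return sum(c * x ** i for i, c in enumerate(coeffs))


# SYNTHETIC placeholder data: years since 2010 and a made-up benchmark score
# that follows an exact quadratic, so the behaviour of each fit is easy to check.
years_since_2010 = list(range(11))  # 2010..2020
score = [100 + 2 * t + 1.5 * t ** 2 for t in years_since_2010]

linear = fit_polynomial(years_since_2010, score, 1)
quadratic = fit_polynomial(years_since_2010, score, 2)

# Extrapolate both fits to 2025 (t = 15)
linear_2025 = predict(linear, 15)
quadratic_2025 = predict(quadratic, 15)
print(f"linear: {linear_2025:.1f}, quadratic: {quadratic_2025:.1f}")
```

When the underlying trend is accelerating, the linear fit undershoots the extrapolated value, which is the behaviour that made me doubt it for the next five years.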

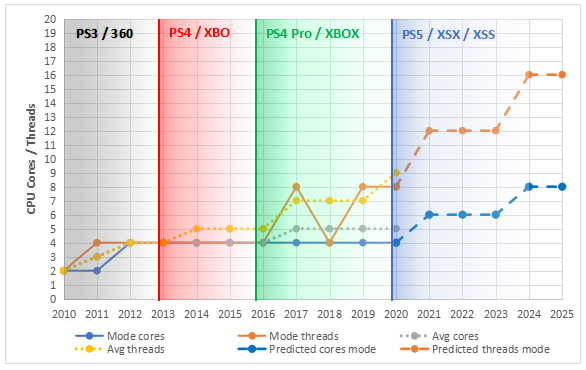

I am of the opinion that the uptick in multi-core performance we can observe this year is an artefact of both AMD and Intel shifting their product stack downward (i.e. you get more cores and threads at all price levels compared to back in pre-2016 times) in combination with the release of the new consoles which have much stronger single- and multi-threaded performance. It's much more reasonable for developers to request that the user have a 6 or 8 core CPU than it was just four years ago.

[Figure: Mid-year trending for predicting "required" performance in 2025...]

[Figure: End-of-year trending for predicting "required" performance in 2025...]

Looking at the predicted performance, though, we see that it's not so unreasonable to make the change to a polynomial fit: single-core and multi-core performance is only on par with a Ryzen 5 5600X - a part that is considered mid-range this year and for the coming six months. I think that for developers to expect a five-year-old CPU in 2025 is not out of the ordinary!

It does, however, throw into stark relief how much the CPU landscape had stagnated and how quickly the performance uplift is now occurring, across the board. I'm expecting the mid-range to increase in performance by around 53% and 70% in single- and multi-core respectively over the next five years. I think that isn't unreasonable given that the inverse of that takes us back to around 2014-2015.
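To put those five-year figures into yearly terms, here is a small sketch that converts a total uplift into a compound annual rate. It assumes smooth compounding growth, which is a simplification on my part, and `annualised_rate` is just an illustrative helper name.

```python
def annualised_rate(total_increase, years):
    """Compound annual growth rate implied by a total fractional increase."""
    return (1 + total_increase) ** (1 / years) - 1


# Five-year uplifts quoted above: +53% single-core, +70% multi-core
single_core = annualised_rate(0.53, 5)
multi_core = annualised_rate(0.70, 5)

print(f"single-core: {single_core:.1%} per year")
print(f"multi-core:  {multi_core:.1%} per year")
```

Under that assumption the prediction works out to roughly 9% per year for single-core and roughly 11% per year for multi-core.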

There is one extra consideration to take into account, though...

[Figure: I predict that 6-cores/12-threads will be the average suggested requirement from next year until 2023, with 8-cores/16-threads overtaking it from 2024 onwards...]

I had this discussion last time. I'm sure there are many people who will disagree with me on this but I intend to address this issue a little more in my end of year predictions post (which should be up over the coming days). However, yes, sure my predictions of performance for single- and multi-core are around a 5600X... but that part doesn't match up with this prediction that games will be asking for 8-cores/16-threads in 2025.

There's more to performance than a simple multi-threaded benchmark. I believe that we are on the long-awaited "systemic" precipice - I think that with the available processing power in the new consoles, we are going to see a renewed focus on simulation. World (social) simulations, physical attribute simulations, dependency simulations, visual simulations. These all require CPU power but, uniquely, they can all be running on separate processes from each other in the background, away from the main game process.

This means that more cores = more performance when these simulation elements are utilised by a game.
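A toy model of that idea, under heavy assumptions: the main game thread occupies one core and each background simulation is an independent job that can be placed on any other core. The per-system costs below are invented purely for illustration, and `frame_time_ms` is a hypothetical helper rather than anything measured from a real engine.

```python
def frame_time_ms(main_ms, sim_costs_ms, cores):
    """Estimate frame time when simulations run alongside the main thread.

    The main thread takes one core; simulations are spread over the
    remaining cores, longest job first onto the least-loaded core.
    """
    if cores == 1:
        # Everything has to run back to back on the single core
        return main_ms + sum(sim_costs_ms)
    loads = [0.0] * (cores - 1)
    for cost in sorted(sim_costs_ms, reverse=True):
        least_loaded = loads.index(min(loads))
        loads[least_loaded] += cost
    # The frame is done when both the main thread and the slowest core finish
    return max(main_ms, max(loads))


# INVENTED per-frame costs in milliseconds for six background simulations
simulations = [9, 8, 8, 7, 6, 6]
main_thread = 10

for cores in (4, 6, 8):
    print(cores, "cores:", frame_time_ms(main_thread, simulations, cores), "ms")
```

With these made-up numbers the frame time keeps falling as cores are added until the main thread itself becomes the limit, which is the situation I'm describing.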

So, as I said previously, I think that the best CPU to buy right now is an 8+ core chip and I stand by that assertion. This would ideally be a Ryzen 7 3800X/XT, a Ryzen 7 5800X or an Intel i7-10700/K/F/KF. And given that these parts are essentially equal in price to a Ryzen 5 5600X at around £/€/$300, don't get sucked into the "new" thing.

RAM considerations...

[Figure: I don't really have any change in RAM predictions... I believe that they tend to match the limitations inherent in the consoles...]

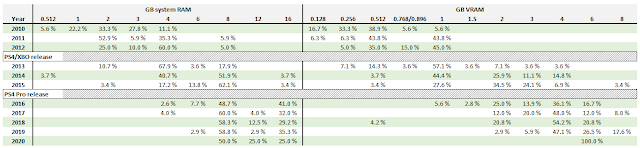

System RAM is on the same trajectory it has always been. The discrete nature of the quantity of RAM means that jumping from 4 GB to 8 GB was a given in much the same way that jumping from 16 GB to 32 GB will be a given at some point in time. However, I don't predict that games will routinely be asking for more than 16 GB of RAM in 2025. It just seems like overkill when consoles have only 16 GB of GDDR6 between the OS and the currently running application.

I think that 32 GB is nice to have but really not necessary.

[Figure: Mid-2020 requirements...]

[Figure: End-of-year results...]

What is interesting is that, over the course of the year, RAM requirements have slowly shifted towards higher quantities. Mid-way through 2020, system RAM was firmly at 8 GB whereas here at the end of the year, we have an even split between 8 and 16 GB. VRAM has similarly increased, from 100% of games recommending 6 GB to almost a third of games recommending 8 GB.
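For anyone wanting to reproduce this kind of split from the raw numbers, a minimal sketch of the tally is below. The two lists are short placeholders shaped to match the proportions described above, not the actual list of games, and `recommendation_shares` is an illustrative name.

```python
from collections import Counter


def recommendation_shares(recommended_gb):
    """Fraction of games recommending each memory quantity (in GB)."""
    counts = Counter(recommended_gb)
    total = len(recommended_gb)
    return {gb: counts[gb] / total for gb in sorted(counts)}


# PLACEHOLDER lists, one entry per game, chosen to mirror the split in the text
system_ram = [8, 8, 8, 16, 16, 16]
vram = [6, 6, 8]

ram_shares = recommendation_shares(system_ram)
vram_shares = recommendation_shares(vram)
print(ram_shares)
print(vram_shares)
```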

There was a lot of commentary surrounding the launch of the 3070 and 3080 with their relatively small quantities of VRAM compared to the RX 6000 series cards and I do believe that those commentators are correct in the here and now. Pure VRAM requirements will continue to increase and will soon be above what Nvidia's current offerings are providing.

[Figure: My VRAM predictions are staying the same too...]

Saying that, there is more to VRAM usage than just straight numbers. Both GPU companies utilise compression techniques to improve their effective RAM utilisation and bandwidth throughput - both on-die and in the VRAM itself. It's very difficult to say that the Nvidia GPUs will suffer in the near future whereas the AMD GPUs will not because Nvidia can potentially implement better (or smarter) compression techniques by utilising their tensor cores, neutralising the pure number advantage that AMD has on their cards. Conversely, AMD doesn't need to use compression because of their on-card VRAM quantity and that suits the architecture because they do not have a tensor core equivalent in their compute unit design.

My personal bet is that VRAM is a wash between both current GPU architectures. 8-10 GB VRAM for the 3070-3080 is equivalent to the 16 GB on the 6800/6800XT. However, it will take increasing amounts of on-chip calculations to decompress that data for the Nvidia GPUs, resulting in a situation that people tend to frame as the "Radeon fine wine" effect. The result of which means that Nvidia's GPUs appear to age worse than AMD's, though the opposite is actually happening - despite more limited resources, the Nvidia GPU ages better than it should. AMD's GPUs have over-provisioned resources and so have less (or no) degradation in performance over time.

Both approaches work well so I don't really believe you'll have much of an issue with either architecture going forward over the next few years, with respect to VRAM.

GPU Performance...

[Figure: Comparison between mid-year results (grey) and end-of-year for 2020...]

We have ended up in a similar situation to the CPU trending above: over the last six months, GPU requirements have increased, resulting in higher predictions than before. I will note that I had an incorrect value for a GPU in 2015 and this is why you can observe the shift in the average recommended requirement for that year, though it has no effect on the current or prior predicted trajectories...

It's a relatively simple picture, compared to CPU and RAM, but essentially, we're looking at RX 6800 XT and RTX 3080 levels of performance in 2025. Considering a GTX 1080 Ti came out in 2017 and had slightly higher performance than the RTX 2060 in rasterisation, that seems right on par with my prediction that 2022 will be when the GTX 1080 Ti becomes a 1080p, 30 fps card. If we look at the Cyberpunk 2077 Ultra CPU benchmarks, we can see it's already at 49 fps 1% low with an average of 60 fps (when using an i9-10900K). It wouldn't surprise me to see that reduced to approximately 30 fps in games running two years from now.

I mentioned in Part III that it seems to me that the best decision when purchasing a GPU is to go with either the high end or the ultra low end, and we can see that playing out here: a 1080 Ti, 6800 XT or 3080 will last you five years. Conversely, the RTX 2060 will last around two and a half to three years. Considering you're paying $300-400 for the cheaper part and $700-800 for the more expensive parts (as long as you're not being price gouged), the mid-range option doesn't really make financial sense (as long as you can afford the higher-end one).

I believe that when you take into account the launch cadence between architectures (and the higher/lower end parts), you're always treading water if you go for the middle of the range. If you want to go low and save money, you can upgrade and spend less, overall, than buying a mid-range GPU. If you want to go high, with a lower resolution, then you're getting all the benefits of the high-end card for those first two years without any penalties but you spend as much as buying two mid-range cards in that same four-year time period where otherwise you'd have had to compromise the whole time.

So, you end up essentially paying the same amount of money but you never really get the same performance... If I'm able to afford it, I know which I will choose going forward.
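The arithmetic behind "essentially the same amount of money" can be sketched as below. I'm assuming the midpoints of the price ranges quoted above ($350 and $750), a 2.75-year useful life for the mid-range card and five years for the high-end one, and that you replace a card as soon as it falls below the recommended requirement. `total_spend` is an illustrative helper name.

```python
import math


def total_spend(price, lifespan_years, horizon_years):
    """Total cost of keeping a usable card for the whole horizon."""
    purchases = math.ceil(horizon_years / lifespan_years)
    return purchases * price


HORIZON = 5  # years

# ASSUMED midpoints of the ranges quoted in the text
mid_range = total_spend(price=350, lifespan_years=2.75, horizon_years=HORIZON)
high_end = total_spend(price=750, lifespan_years=5, horizon_years=HORIZON)

print(f"mid-range: ${mid_range}, high-end: ${high_end}")
```

Under those assumptions the mid-range route needs two purchases and the high-end route one, so the totals land within about $50 of each other.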

Thanks for all the fish...

I really find this sort of navel gazing and trending interesting (if you hadn't already noticed) and, since I couldn't find this information anywhere else, I had to step up and put something together myself. I really hope this helps people to understand what's going on in the PC hardware landscape and it might even help influence some purchasing behaviour. It is my intention to perform a recap of this data at the end of each year - so, see you at the end of 2021!

Have a happy Christmas and I hope you are all looking forward to a happy and successful new year!

2 comments:

Wish you a happy new year, and thanks even biased for this valuable information.

You're welcome and happy new year to you as well!
