
Last year, I looked at the possibility of a mid-generation refresh for Sony or Microsoft's current console line-ups and didn't really see any point in one. I posited some easy improvements and reasons why other possible improvements didn't really make any sense.

Now, after a series of rumours from various sources, Moore's Law Is Dead has presented a pretty concrete leak, which is confirmed to originate from Sony's developer portal, confirming the console's existence and also some key performance metrics.

Chasing the Dragon...

To start with, let's summarise the information from the leak via MLID:

- The system uses faster clocked memory.

- Rendering is about 45% faster than the standard PS5.

- Ray tracing improvement is 2 - 3x over the standard PS5, with extreme cases of 4x

- 67 TFLOPS of 16-bit FP.

- Custom architecture for machine learning to support PSSR (like DLSS).

- Currently takes ~ 2ms to upscale from 1080p to 2160p.

And that is basically it. The whole thing is a little vague and, as a result, many commentators have weighed in with outlandish or incorrect claims - something that Tom at MLID has also stumbled over a little, specifically with regard to the raytracing performance of the PS5 Pro based on the above.

What is NOT said or clarified:

- Memory speed is not mentioned.

- RDNA architecture is not mentioned or addressed.

While writing this blogpost, a new leak from Tom Henderson from Insider Gaming was put out. Additional info is below:

- System memory bandwidth increases from 448 GB/s to 576 GB/s.

- CPU is identical but can operate with a boost up to 3.85 GHz

- 30 WGP running BVH8 (PS5 base has 18 WGP running BVH4)


Addressing the Claims...

576 GB/s memory bandwidth corresponds to 18 Gbps GDDR6 RAM - already clarified by MLID and also predicted by myself in my last article. This was a no-brainer because this is probably the most readily available and cost effective GDDR at this moment in time. Sony would have likely still been buying the same modules but then down-clocking them...
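As a quick sanity check on that figure, peak GDDR6 bandwidth is just the per-pin data rate multiplied by the bus width. The sketch below assumes a 256-bit memory bus (that of the base PS5, and my assumption for the Pro since the leaks don't state it); the function name is purely illustrative.

```python
def gddr6_bandwidth_gb_s(pin_speed_gbps: float, bus_width_bits: int = 256) -> float:
    """Peak bandwidth in GB/s = per-pin data rate (Gbit/s) * bus width (bits) / 8.

    The 256-bit default is an assumption: it matches the base PS5 and is
    assumed, not confirmed, to carry over to the Pro.
    """
    return pin_speed_gbps * bus_width_bits / 8


base_ps5 = gddr6_bandwidth_gb_s(14.0)  # 14 Gbps modules, as on the base PS5
pro_leak = gddr6_bandwidth_gb_s(18.0)  # 18 Gbps modules, as discussed above

print(f"Base PS5: {base_ps5:.0f} GB/s")
print(f"PS5 Pro:  {pro_leak:.0f} GB/s ({pro_leak / base_ps5:.2f}x)")
```

Under that assumption, 14 Gbps and 18 Gbps land on the leaked 448 GB/s and 576 GB/s respectively, so no change in bus width is needed to explain the numbers.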

Let's move onto the performance claims:

For RDNA3's release, AMD claimed a 50% RT performance increase per CU over RDNA 2 between an RX 7900 XTX and an RX 6900 XT when using indirect draw calls. They also made the claim of up to 1.8x when comparing a Ryzen 7900X + RX 7900 XTX system with a Ryzen 5900X + RX 6950 XT system (at 2.5 GHz core clocks). The problem here, as I alluded to above, is that the two claims are not linked. The raytracing performance of RDNA 3 over RDNA 2 is not 1.5 - 1.8x:

- It is 1.5x per CU (unknown if this is iso-clock frequency or at what render resolution this test was performed) in a specific scenario.

- It is up to 1.8x at iso-clock frequency between two specific GPUs with unknown factors relating to platform performance uplifts (CPU and system memory DO have an effect on framerate and RT performance, depending on the implementation) across a selection of games which include some Nvidia sponsored (read: optimised) titles.

Let's take a look at the first point, there.

From what I've been able to learn from a few articles, indirect draw calls are quite common command structures for raytracing scenarios (and GPU rendering in general) as there will be some sort of volume (or BVH) that must be traversed by the rays which the game engine wishes to cast to be able to provide the information for lighting, shadows, reflections, sounds etc. Basically, AMD are saying here that this is an optimal, but typical situation - there's likely no further improvement to be had.

Looking back at review data from TechPowerUp, the RX 6900 XT used a steady-state clock frequency of 2.29 GHz and the RX 7900 XTX was around 2.44 GHz - a 150 MHz or 1.066x difference. The 6900 XT has 80 CU and the 7900 XTX has 96 CU - a 1.2x increase. If we take the claim at face value, we must assume that AMD took into account these differences in the analysis. Otherwise, the actual performance improvement would be much lower.

In fact, in real-world scenarios, we're seeing around 1.43x @1080p, 1.35x @1440p, and 1.63x @2160p. Averaging those out, we come to 1.47x but for PS5 Pro discussion, we're most likely talking about 1080p and 1440p internal rendering situations so somewhere between 1.35 - 1.43x in ideal scenarios.
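To make the "per CU" part of this explicit, here is a minimal sketch that divides the measured uplifts by the extra hardware resources. Treating performance as scaling linearly with CU count and clock frequency is my simplifying assumption, and the variable names are illustrative only.

```python
from statistics import mean

# Measured RX 7900 XTX vs RX 6900 XT raytracing uplifts quoted above
measured = {"1080p": 1.43, "1440p": 1.35, "2160p": 1.63}

cu_ratio = 96 / 80         # compute unit count, 1.2x
clock_ratio = 2.44 / 2.29  # steady-state clocks from TechPowerUp, ~1.066x
resource_ratio = cu_ratio * clock_ratio


def per_unit_uplift(uplift: float, resources: float = resource_ratio) -> float:
    """Uplift left over after dividing out CU and clock scaling (assumed linear)."""
    return uplift / resources


average_uplift = mean(list(measured.values()))
print(f"Average measured uplift: {average_uplift:.2f}x")
print(f"Resource increase (CU x clock): {resource_ratio:.3f}x")
for resolution, uplift in measured.items():
    print(f"{resolution}: {per_unit_uplift(uplift):.2f}x per CU, per clock")
```

With these inputs, the 1080p and 1440p results normalise to somewhere between roughly 1.06x and 1.12x per CU at the same clock, well short of the headline 1.5x.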

Moving onto the second point:

This specifically was tested at iso-frequency and the resolution is listed as 2160p for the applications tested. So, this is a GPU-bound situation. However, I am constantly repeating myself that small numbers make for poor percentage comparisons. TechPowerUp's numbers in Cyberpunk (one of AMD's comparison applications) show the difference is 19.9 fps to 11.8 fps - 1.69x but just a measly 8 fps... Not particularly impressive. If going from 1 fps to 2 fps is classed as a doubling of performance (it is) that doubling of performance is not impressive in an absolute sense, and the same applies here.
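The point about small numbers is easy to show by printing the ratio next to the absolute difference. This is only a tiny illustration using the Cyberpunk figures above; the helper name is made up for the example.

```python
def compare_fps(new_fps: float, old_fps: float) -> tuple[float, float]:
    """Return (relative uplift, absolute gain in fps)."""
    return new_fps / old_fps, new_fps - old_fps


ratio, delta = compare_fps(19.9, 11.8)
print(f"{ratio:.2f}x faster, but only {delta:.1f} fps more")

# The same ratio applied to a playable baseline is a very different experience
baseline = 60.0
print(f"At {baseline:.0f} fps, {ratio:.2f}x would mean +{baseline * (ratio - 1):.0f} fps")
```

The ratio is identical in both cases, but only one of them takes you from unplayable to playable.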

Taking the same test, the two cards achieve 1.58x @1440p and 1.52x @1080p for a 1.2x resource increase - and I can guarantee you that those are not CPU-limited situations...


| When taken as a whole, AMD claims the CU offers something more akin to the 10-20% uplift I found... |

What this all means is that for each compute unit, we're looking at around a 10 - 20 % performance increase from RDNA 2 to RDNA 3, like I showed in my prior article. So, Sony's claims are specifically speaking to the total chip configuration, not per hardware unit.

With the newly released information, the configuration of the PS5 Pro is as below. We can therefore estimate the theoretical performance uplift in relation to hardware units and architectural uplift, assuming a largely unchanged operating frequency:

- 56 CU (two DCUs disabled) vs 36 CU for a potential 1.56 x 1.17 = 1.82x performance gain (RDNA3) with 2.7x RT performance.

With Insider Gaming's new leak, things appear more certain... I've removed the unlikely scenarios I had previously listed.

Why is this important? Because this isn't what Sony are claiming... Sony are claiming a 1.45x performance gain. What this tells us is that the chip is limited in some other manner.
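To put a number on that gap, the sketch below repeats the arithmetic: CU scaling multiplied by the per-CU architectural uplift, then compared against Sony's claim. The 1.17x per-CU figure is the one used in the estimate above; an unchanged clock frequency is assumed, as stated there.

```python
PS5_CU = 36
PRO_CU = 56          # 60 CU with two DCUs disabled
ARCH_UPLIFT = 1.17   # per-CU RDNA 2 -> RDNA 3 uplift used in this post
SONY_CLAIM = 1.45    # "about 45% faster" rendering from the leak

cu_scaling = PRO_CU / PS5_CU            # ~1.56x from extra CUs alone
theoretical = cu_scaling * ARCH_UPLIFT  # ~1.82x at an unchanged clock
realised = SONY_CLAIM / theoretical     # share of the estimate Sony is claiming

print(f"CU scaling alone: {cu_scaling:.2f}x")
print(f"Theoretical uplift: {theoretical:.2f}x")
print(f"Sony's claim covers {realised:.0%} of that estimate")
```

In other words, the claimed figure sits below even the gain expected from the extra CUs alone, before any architectural improvement is counted.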

The CPU on the PS5 APU is fairly similar to the Ryzen 7 4700G. Now, while the CPU can be a bottleneck, a Ryzen 7 4700G isn't going to stop a game from reaching 60 fps in most scenarios. Bear in mind, though, that the 4700G operates at 4.4 GHz whereas the rumoured PS5 Pro "CPU" will be working at 3.5 GHz, with a situational boost to 3.85 GHz.

However, you will typically be GPU-bound in most graphically intensive games, especially if you have a render target of 1080p - 1800p and are pushing raytracing. So, if the GPU is increasing in resources by so much, why is the performance gain so little?

Well, let's look at the desktop equivalent parts:


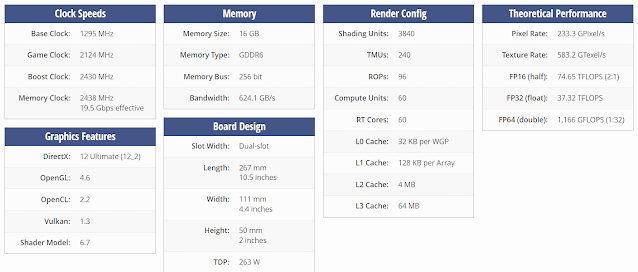

| The RX 7800 XT requires up to 263 W to operate... (TPU) |


| The RX 7700 XT requires 245 W to operate... (TPU) |

Those 54 CU and 60 CU configurations of RDNA 3 are not that power efficient (though they are using chiplets which push the operating power a little higher than would be for a monolithic design) and require 245 W and 263 W respectively in certain scenarios when the entire chip is being used at full tilt - which is around 2.5 GHz.

The base PS5 uses up to 200 W for the entire system...

And what happens when we power limit any CPU or graphics architecture? The operating frequency doesn't really drop that much but the performance of the chip drops - but less than the power consumption of the chip. Looking back at that power scaling exercise for the RX 6800, we can see that by halving the power (from ~200 W to ~100 W) we only lose 260 MHz* and 15 % performance.

*It's possible that I didn't need to drop that much frequency but I didn't spend hours optimising for each and every point like a hardware engineer designing a console might do...
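Another way to read that result is in terms of performance per watt. A minimal sketch, using only the rounded figures quoted above (half the power, 15 % less performance); the function name is illustrative.

```python
def relative_efficiency(power_fraction: float, performance_fraction: float) -> float:
    """Performance-per-watt relative to stock (1.0 means unchanged)."""
    return performance_fraction / power_fraction


# RX 6800 power scaling: ~200 W -> ~100 W for a 15 % performance loss
gain = relative_efficiency(power_fraction=0.5, performance_fraction=0.85)
print(f"Performance per watt: {gain:.2f}x stock")
```

That works out to roughly 1.7x the performance per watt of the stock card, which is why a console designer has so much room to trade a little performance for a lot of power budget.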

Now, that was for RDNA2. Unfortunately, we don't have the same freedom with RDNA3 because we cannot power limit the card, only reduce the core frequency - which is not a representative scenario. But let's pretend that we can achieve the same scaling with RDNA3 that we did with RDNA2: what would we potentially get?


| Power scaling for Metro Exodus: Enhanced Edition on the RX 6800. We see the performance drop while the frequency stays similar to stock (2219 vs 2000 MHz)... |

Let's imagine that Sony don't really want to lose all that much performance by saving on power budget. They spent the money on the die size and so, by god, they're going to use it! Looking up at that table, we can see that the optimum power performance is around 70 % where we're losing around 1% of the typical performance of the GPU but we're saving 58 W. (Note, we're also keeping the core frequency approximately identical.)

Applying this same logic to the base PS5, we reduce the similar* RX 6700 (non-XT) from around 175 W to 125 W - giving comfortable headroom for a 30 - 35 W CPU, 18 W GDDR6, 6 W SSD (x2) and around 20 W left over for sundry items on the board.

If we then transfer this logic over to RDNA3, we need to correct for the fact that we're paying a relatively large cost for running those memory chiplets. If we just assume the same component power usage of RDNA2 (7nm) parts, then we're probably more in the ballpark of the equivalent power usage on the PS5 Pro APU. My calculations put this at a theoretical maximum of 147 W for the RX 7800 XT and 138 W for the RX 7700 XT at stock boost frequencies. The theoretical 56 CU part would be equivalent to around 136 W @2337 MHz (this is purely the GPU core!!) IF it's produced on a 5 nm process.

I wouldn't be surprised if Sony has opted for a 6nm process for cost reasons. Similarly, there are two reasons I'm primarily talking about a 56 CU part: 1) a cut-down GPU will lead to more usable dies for Sony, causing the overall per-unit cost to decrease, and 2) a 56 CU part is hardware backwards compatible with the 36 CU base PS5*.

*3 shader engines, two with 18 CU and one with 20 CU - you just need to disable the 20 and you are left with an equivalent, symmetrical base PS5 configuration...


| Best guess scenario puts the PS5 Pro total power around 5 - 10 W more than the base model... |

Anyway, getting back to the power: if the APU is on the 7 nm process, we're probably looking at ~189 W GPU core. However, if we apply the same power saving technique that I did in my GPU scaling tests to both of these theoretical GPUs, we're looking at circa 97 W for the 5nm part and back to 135 W for the 7 nm part - with somewhere in between for a 6nm part (leaning more towards the 7nm value).

Taking into account the increased CPU clock speeds, we may only be looking at a 5 - 10 W increase over the base PS5 in the worst case scenario or equivalent power to the base PS5 in the middle... or 25 W lower in the absolute best case scenario!

My initial feelings were that any PS5 Pro would need to increase the power budget but going over the old power scaling numbers again, I can see how much potential power savings are left on the table! If we wanted a hard limit on power to remain identical between the base PS5 and Pro, we could drop it down by another 10 W and only lose around 5-10% performance.


| A comparison of reported power usage in Metro Exodus: EE, between the RX 6800 and RX 7800 XT... |

However, even though we are still assuming that the CPU isn't going to stop the console from performing normally (ignoring 120 fps gameplay and instead focussing on 30/60 fps), the GPU doesn't appear to be much limited by power if these numbers and the estimates for the system power are accurate.

This, then, brings us back to the question: how do we get to only a 45 % performance gain when I'm calculating, at worst, around a 1.77x increase in performance?

Surely, it's due to power and thermal limits? Normally, I'd say there's maybe some sort of clockspeed regression but logic (based on the leaked specs and PlayStation back compat) would suggest otherwise. Could it really be a CPU bottleneck? It doesn't seem likely... but maybe I'm wrong.

Or maybe it's more simple than that? Maybe I'm overthinking it?


| Et tu, Brutus? |

At the end of the day, the RDNA3 architecture was not that impressive over the RDNA2 architecture in many ways - at least that was the presentation and absorbed perception of the general populace. Even I, dedicated proponent of ground-truth, fell to this perceptual deception... until I tested it (or at least the non-N33 parts).

But, the truth is that a console is not a PC. Consoles are inflexible (or are perceived to be). And thus we come to the conundrum:

As Matías Goldberg points out, Changing architectures is risky. What if a game is doing something it shouldn't be doing? Changing an architecture might break that game in very wrong ways.

In my naivete I replied with garbled language, "Maybe this is the case, or maybe that..."

But Matías is right! So, what happens when you ignore architectural improvements as a default defensive mechanism? After all, RDNA3 is RDNA2 with some new boots and a swanky cape. It's still the same person underneath...


| So who would live in a house like this... (aka, 45% is close enough to 43% at 1440p) |

And there we have it: the PS5 Pro behaves exactly like the upgrade from an RX 6700 (non-XT) to an RX 6800* (non-XT). At least by default.

Sony, by disabling all of those potential gains by default, would manage to sidestep this problem - to nip it in the bud. Ignoring the benefits of RDNA3 while still including the architecture in the hardware would leave existing games untouched in terms of architectural upgrades (power and frequency improvements would still help increase performance) but leave the door open for new titles to optimise for the Pro in ways which would surpass that limitation - especially when it comes to raytracing performance.

*In terms of pure performance. Obviously, it's not a 1-to-1 comparison...there are still performance increases in the RDNA3 architecture which are not based on the dual-issue FP32 design.


| If we're honest graphics whores, we know that RT performance really needs to be improved! |

Conclusions...

The specification leaks for the PS5 Pro seem pretty airtight, now. We've knocked back the initial rumours of 60 CU to a more sensible configuration. A configuration which allows for hardware backwards compatibility with both the base PS4 and PS5.

The CPU frequency is making more logical sense, but the use of RDNA3 is surprising to me, both given the paucity of the claimed performance improvement and because I felt it was a waste of die area to include the extra RDNA3-specific architectural changes to the DCUs.

The claimed performance uplift makes sense in terms of adhering to what has come before: Sony are not looking to stomp on any developers' toes, forcing them to patch when they don't need to. They are being careful and I respect that!

However...

Honestly, a part of me is wondering why AMD/Sony didn't go with the same 36 CU configuration, but using RDNA 3 instead. They'd get the RT bonus performance and they could have clocked the GPU frequency higher to achieve a similar level of raster performance though at a cost to power use. The die would also be cheaper - and this is doubly important if there is some sort of CPU bottleneck in play - you've got a lot of wasted die area spent without capitalising on the potential performance.

One last jab from a logical perspective: If there is no mid-gen Xbox Series X refresh on the table and, instead, the next Xbox is releasing in 2 years - Sony has badly miscalculated the release of the Pro. It's not that big of a jump in performance over the base console, it will be more expensive (total cost of ownership) and the resources and effort to take advantage of its power from a development side, not to mention from the point of view of creating it in the first place, seem very wasteful.

The base console was not performing poorly compared to the XSX, and I can't imagine the Pro achieving more than the rumoured 20% share of sales that the PS4 Pro enjoyed against the base PS4's 80%.

Last time, I was left scratching my head and, to be honest, I still am...
