
These days, there's a lot of talk about how CPU- or GPU-limited various games are, but something I've always wondered is: where is the cut-off point for pairing a GPU with a particular CPU? This is especially important now that the Ryzen 5 5600 and i5-12400 have dropped to around €120 - these are really cheap and relatively performant options for the lower mid-range.

That's something that you don't tend to see explored too often at well-rated benchmarking outlets like Hardware Unboxed, eTeknix, or Gamers Nexus. So, while I don't have many configurations to test out, I wanted to explore where the point of diminishing returns might be for games, as gamers would actually play them...

The Premise...

As gamers, we often get told where and how to spend our money from various spheres of the PC gaming space:

- Spend as much on the GPU as possible.

- Buy a CPU with as much cache as possible.

- Get a CPU with the fastest single-core throughput possible.

- The fastest RAM with the lowest latency is the best.

- A fast SSD will result in the lowest loading times.

We also hear plenty of advice about where to spend our time:

- Reduce the latency on your RAM and you'll get higher FPS and better 1% lows.

- Overclock your CPU and you'll get another 10% performance.

- Overclock your GPU and VRAM to improve performance.

The problem with a lot of this advice is that it is often anecdotal, platform-specific, and, sometimes, even specific to a particular generation of hardware! Most people are looking at the high-end and extrapolating downward to lower performing hardware.

So far, I've looked at the effect of DDR4 RAM speed on mid-range systems and didn't find much of any improvement - on the order of around 5-10 fps (though only if you have the best RAM IC!) and that was fairly independent of latency, bandwidth, and RAM speed...

I've also noted the slight performance difference between the 12th gen cores and Zen 3 cores - with the 12th gen slightly out-performing the Zen 3 part in CPU-limited scenarios. This is despite the i5 having less cache than the R5 part, because different architectures work differently! Obviously, we know that the cache size is much more integral to Zen 3's performance profile as evidenced by the effect of adding the 3D cache to the desktop parts...

But, here's the thing: if, on a mid-range CPU, RAM speed and latency really aren't that important (especially when considering total cost of ownership for your PC), how much should you spend on a GPU?

People like to throw out the idiom of "you'll be CPU-bottlenecked if you buy such-and-such a GPU, aim lower", and I've personally encountered situations where my advice to someone playing an esport to upgrade the CPU instead of focussing on the GPU was roundly criticised on a certain forum.

This, then, is a stab at further reducing the number of choices that mid-range gamers should be making.

Remember - what's important here is not the absolute performance but the performance scaling of each GPU relative to the most powerful one I have on hand - the RTX 4070 Super. However, we may delve a little into how that absolute performance scales, as well...
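To make "relative performance scaling" concrete, here is a minimal sketch of the normalisation I have in mind: each GPU's average fps expressed as a percentage of the reference card's. The function name and the fps values are placeholders I've made up for illustration - they are not results from my spreadsheet.

```python
def relative_scaling(avg_fps, reference):
    """Express each GPU's average fps as a percentage of the reference GPU's."""
    ref = avg_fps[reference]
    return {gpu: round(100.0 * fps / ref, 1) for gpu, fps in avg_fps.items()}


# Placeholder numbers for illustration only - NOT measured results.
example_fps = {
    "RTX 4070 Super": 120.0,
    "RTX 4070": 102.0,
    "RX 7800 XT": 96.0,
}

scaling = relative_scaling(example_fps, "RTX 4070 Super")
for gpu, pct in scaling.items():
    print(f"{gpu}: {pct}% of the reference")
```

Normalising like this means a CPU-bound title shows up as every card bunching near 100%, whereas a GPU-bound title spreads the cards out.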

The Setup...

For this testing, I have my trusty Ryzen 5 5600X and Intel i5-12400. These aren't the totally up-to-date modern mid-range gaming CPUs that they were a year or two ago and have been superseded by the Ryzen 5 7600 - if not in price, then in performance. However, even these CPUs are still probably more powerful than those a large percentage of gamers are bringing to their gaming table - but you can probably say that about the GPUs I'm going to be speaking about, as well.

Each of these CPUs is paired with five GPUs: the RTX 3070, RTX 4070, RTX 4070 Super, RX 6800 and RX 7800 XT.

These represent around three tiers of performance of the recent modern mid-range (price notwithstanding) and should be able to show whether there is any sort of performance bottleneck to be encountered!

The CPUs are paired with whatever RAM I have in their motherboards - since I have already looked at the effect of DDR4 speed on the 5600X and 12400 (as mentioned above), I know there really isn't a lot of headroom in cranking up the speed and lowering the latency on these bad boys. So, these are the system setups:

Ryzen 5 5600X

- 2x 8GB Corsair 3200 MT/s CL16 DDR4 (1:1 ratio)

Intel i5-12400

- 2x 8GB Patriot 3800 MT/s CL18 DDR4 (1:1 ratio)

- 2x 16GB Corsair 6400 MT/s CL32 DDR5 (1:2 ratio)

The sub-timings of the DDR4 kits are listed in the spreadsheet linked above (in green in the case of the 3800 MT/s) but the DDR5 is stock with tRCD/tRP/tRAS/tRC/tRFC at 40/40/84/124/510. If you really want to see the potential uplift for the configuration I had vetted on the Ryzen CPU, then add 10 fps to the average result. This will give you the possible increase in performance but not necessarily the real one.

Drivers used are 551.52 on Nvidia's side and 24.2.1 on AMD's. (This testing was actually performed a while ago but I didn't have time to write it up.)

Resolution is 1080p in all tests, with settings listed in the associated spreadsheet (though they are typically ultra/highest) and I've selected a number of games which have varying bottlenecks, between CPU, GPU and combinations of both...

The Results...

[Chart: Even at 1080p a lot of games are GPU-bound...]

Let's start with the Ryzen 5 5600X.

We can see that there are a number of games which are primarily CPU-bound: Hogwarts Legacy, Spider-man, Starfield and Counterstrike. These titles are all underutilising the power that the GPUs have on offer when using the 5600X - there is still improvement for the majority of them but it's not clear whether there are other effects in play.

Then we have the primarily GPU-bound titles: Avatar, Ratchet and Clank, Returnal, and Alan Wake 2. Here, the games generally scale with compute and RT performance - with the notable exception of Ratchet, which murders the 8 GB VRAM on the RTX 3070...

In comparison, Metro Exodus is a curious case because, for whatever reason, there is a bug which sometimes occurs with certain hardware on certain Windows installations and I have been unable to find a way around it! Seriously! I haven't seen anyone else mention it and I have been unable to troubleshoot what, exactly, causes it. In this chart, you can see it at the high preset on the RX 6800 and RTX 3070 and, maybe, to a lesser extent, the RX 7800 XT - but I'm not 100% sure about that!

[Chart: The CPU can have a great effect on the minimum fps experienced in the games...]

Moving onto the minimum fps for each of these games, we see what might be more evidence of the 8 GB VRAM not being enough for Ratchet, while the rest of the GPU-bound games confirm that they are, in fact, GPU-bound. What we do see, though, is evidence of the CPU overhead of running a powerful GPU on a CPU which isn't quite up to the challenge of managing it.

This is most evident for Counterstrike 2 - we saw this on the average fps, where the 4070 was rising slightly above the 4070 Super. However, the minimum fps of all the other GPUs rises above that of the 4070 Super, indicating that when the going gets tough, the computational overhead of running that more powerful GPU weighs the performance down.

We see something similar for Ratchet, where both the RX 7800 XT and RTX 4070 are equal or slightly better in minimum fps than the 4070 Super. A more interesting case is Avatar at the low graphical preset - the more powerful GPUs are clearly running into a CPU bottleneck in the minimum fps but the RX 6800 and 3070 are sitting there in a category of their own in a way I cannot fully explain. For the 3070, we could posit that it is the 8 GB VRAM haunting it again, and that the RX 6800 is limited by its ray tracing prowess (or lack thereof)... but I do not know for certain.
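The pattern I'm describing - slower cards matching or beating the fastest card on minimum fps - can be checked mechanically. Below is a rough sketch of that check; the function name, the `tolerance` parameter and the fps values are all hypothetical and only there to show the idea, not taken from my data.

```python
def overhead_suspects(min_fps, flagship, tolerance=0.0):
    """List GPUs whose minimum fps is at least the flagship's (minus a tolerance in fps)."""
    top = min_fps[flagship]
    return sorted(
        gpu
        for gpu, fps in min_fps.items()
        if gpu != flagship and fps >= top - tolerance
    )


# Placeholder numbers for illustration only - NOT measured results.
example_min_fps = {
    "RTX 4070 Super": 140.0,
    "RTX 4070": 150.0,
    "RX 7800 XT": 145.0,
    "RTX 3070": 120.0,
}

suspects = overhead_suspects(example_min_fps, "RTX 4070 Super")
print(suspects)
```

A non-empty list for a given title is only a hint that the CPU is the limit on the lows. It doesn't rule out run-to-run variance, so a small tolerance and repeated runs are sensible.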


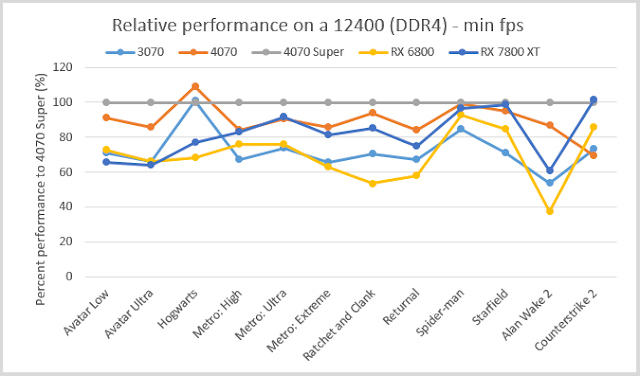

Moving onto the Intel 12400 (DDR4).

We see a very similar story - except, because the 12400 is a bit more powerful, we see better separation in performance in a couple of titles, namely Starfield, Metro Exodus, and Avatar.

Curiously, we observe a strange situation with Ratchet where the RTX 3070 is now performing better. Was the 8 GB VRAM really to blame for the poor result on the 5600X? Maybe not! However, the root cause is not clear to me. We're getting more performance from somewhere but it's a complicated situation because aside from a slightly faster processor, we're talking about maybe a ~50% increase in system memory bandwidth going from the 3200 MT/s RAM to the 3800 MT/s.

I should test that!

Looking more generally at the data, the 7800 XT is performing relatively* worse on the Intel platform than the 4070, whereas before it was matching or closer to that card. This is likely due to an actual CPU bottleneck!

*In some games it performs better in absolute terms but in others it's actually worse...

However, in Alan Wake, Ratchet and Avatar, the 7800 XT is losing performance while the Nvidia cards are gaining performance. At first I thought that this might be due to AMD's SAM giving an advantage on the 5600X or an Nvidia bias in the games but the RX 6800 gained performance just like the Nvidia cards did. So, could this be down to the RDNA 3 chiplet architecture?

It's a possibility, but we'll come back to this result in a moment...
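For anyone wanting to repeat this comparison with their own numbers, the "gaining versus losing" observation boils down to a per-GPU percentage change between two platforms. Here's a small sketch under that assumption - the function name is mine and the values are invented placeholders, not my results.

```python
def platform_delta(fps_a, fps_b):
    """Percent change in average fps per GPU when moving from platform A to platform B."""
    return {
        gpu: round(100.0 * (fps_b[gpu] - fps_a[gpu]) / fps_a[gpu], 1)
        for gpu in fps_a
        if gpu in fps_b
    }


# Placeholder numbers for illustration only - NOT measured results.
fps_on_platform_a = {"RX 7800 XT": 100.0, "RTX 4070": 100.0}
fps_on_platform_b = {"RX 7800 XT": 95.0, "RTX 4070": 104.0}

delta = platform_delta(fps_on_platform_a, fps_on_platform_b)
for gpu, pct in delta.items():
    print(f"{gpu}: {pct:+.1f}%")
```

Cards with opposite signs in the same title are the ones worth a closer look.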

[Chart: The 12400 removes the minimum fps CPU bottleneck we previously were experiencing for the 5600X...]

The situation with minimum fps is much improved - most titles now have the RTX 4070 Super firmly in the lead and games like Counterstrike and Ratchet are no longer displaying any weird behaviour that would indicate a CPU bottleneck.

On the other hand, Hogwarts is still displaying the signs of a CPU overhead from the RTX 4070 Super as both the 3070 and 4070 match the lows of this card. In contrast, the 6800 and 7800 XT are probably GPU-bound in this title with ray tracing enabled, so the CPU bottleneck isn't in effect here...


Shifting over to the 12400 (DDR5) results, the Metro Exodus bug once again rears its ugly head for the RX 6800. It's such a difficult issue to pin down!

Aside from that immediately obvious issue, the second story coming from the average fps data is how well the RX 7800 XT is performing! The AMD card has closed the gap with the RTX 4070 again, now sitting in a more logical position, relative to the 4070 Super. This specifically speaks to the point I noted above regarding the 7800 XT's performance on the 12400 (DDR4) platform and, perhaps, gives us a hint as to what is going on: data management.

This has to be the only explanation there is - the bandwidth of the DDR5 memory is ~1.5x that of the 3800 MT/s DDR4 memory, which is ~1.5x the 3200 MT/s DDR4 memory of the 5600X. That's 2.2x that of the 5600X platform... It seems to me that AMD's SAM compensates for issues with data management to the VRAM across the PCIe bus which the faster DDR4 on the Intel system doesn't manage to match but which the DDR5 alleviates. I believe that the 6800 isn't affected in the same manner because of its monolithic design.
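As a rough cross-check on those ratios, here is a minimal sketch of the theoretical peak bandwidth from the transfer rates alone. It assumes dual-channel operation with 64 bits (8 bytes) of data per channel per transfer; the function name is my own. Nominal figures like these ignore the memory controller, fabric and timings, so they need not line up with the approximate ~1.5x steps quoted above.

```python
def peak_bandwidth_gbs(mt_per_s, channels=2, bytes_per_transfer=8):
    """Theoretical peak bandwidth in GB/s (assumes 64-bit data per channel)."""
    return mt_per_s * 1e6 * bytes_per_transfer * channels / 1e9


ddr4_3200 = peak_bandwidth_gbs(3200)  # 5600X kit
ddr4_3800 = peak_bandwidth_gbs(3800)  # 12400 DDR4 kit
ddr5_6400 = peak_bandwidth_gbs(6400)  # 12400 DDR5 kit

print(f"DDR4-3200: {ddr4_3200:.1f} GB/s")
print(f"DDR4-3800: {ddr4_3800:.1f} GB/s ({ddr4_3800 / ddr4_3200:.2f}x DDR4-3200)")
print(f"DDR5-6400: {ddr5_6400:.1f} GB/s ({ddr5_6400 / ddr4_3200:.2f}x DDR4-3200)")
```

On paper this works out to 51.2, 60.8 and 102.4 GB/s, i.e. roughly 1.19x from the 3200 to the 3800 MT/s kit and 2.0x from the 3200 MT/s DDR4 to the DDR5. The DDR5 step is the big one either way.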

What's interesting here is that the absolute values for all GPUs mostly increase - indicating that on the DDR4 system, the 12400 is constrained and that extra bandwidth provided by the DDR5 gives the chip more room to stretch its legs.

[Chart: With DDR5, the minimum fps values of the 7800 XT return to nearer where we saw them on the 5600X...]

The minimum fps values tell a similar story: the 7800 XT is greatly bolstered by having a fat data pipe feeding it. However, that's not the only GPU which is greatly benefitting.

The RTX 3070 with its 8GB is really pushed upwards in Spider-man and Counterstrike, as is the RX 6800 in Ratchet and Clank. Though, one of the biggest gains is had by the 4070 in Counterstrike - a whopping 57 fps on the minimum!

The Conclusion...

In certain games, all of these cards will be capable of performing better than we have seen here, given a faster CPU to take advantage of. However, it's not wasted money to purchase up to an RTX 4070 Super on these two CPUs across these three platforms - it's still around 20% faster than both the 4070 non-Super and 7800 XT in the majority of titles, with the notable exceptions of the really CPU-constrained titles. That matches well with TechPowerUp's listing for the 4070 and is a 5-10% larger relative lead than that described by Tom's Hardware.

Now, both Tom's and TPU have the 7800 XT closer to the 4070 Super in performance which likely means that the effect of the CPU is quite high in the game titles tested. My testing appears to indicate that memory speed/bandwidth is important for some of these results and so the relative value that the mid-range consumer gets from these GPUs will vary with how much they've spent on the CPU, RAM and platform.

Lastly, the CPU overhead is still an issue for running more powerful GPUs. The RTX 4070 Super, although pretty much always top or joint-top in performance, suffered somewhat with these mid-range generation-old CPUs. Yes, there's still performance there in the tank - especially if you're thinking of upgrading to a 1440p monitor or running at higher graphical quality settings - but you won't get the most value for your money spent...

The upshot of all of this is if you have an AMD platform, you're well served by either the RX 7800 XT or the RTX 4070. However, if you're running an Intel platform, you're probably better off sticking to the RTX 4070 for best bang for your buck. The RTX 4070 Super, while not a waste, will mostly serve you well if you're hungering for more graphically intensive games at higher resolutions...
