
Much has been made about the performance, or lack thereof, in the RDNA 3 architecture. Before launch, rumours persisted of 3+ GHz core clocks, and I had tried to make sense of them at the time - to no avail, leading to predictions that were around 2x the performance of the RX 6900 XT.

Additionally, people (aka leakers) were misunderstanding the doubling of FP32 units in each workgroup processor, and so were counting more units than actually existed in terms of real throughput. I think it's safe to say that this decision from AMD was a disaster from a consumer standpoint.

I digress...

Now, this is the sort of post I tended to engage in back in 2019/2020, when looking semi-logically at all the information out there in order to make an uninformed opinion about how things might bear out in the market. This post is, as those were, an interesting and entertaining look at what reality presents to us - and may not reflect that reality because we have limited and often incomplete information.

With that noted, let's actually delve in...


| This looks familiar... |

The King is dead, long live the King!

Let's face it: RDNA 3 has been a let-down, and its story has been a strange one. Fitted with extra SIMD cores in each compute unit and laden with experimental chiplet designs, we've seen it all, so far... except for those elusive rumoured 3D stacked caches on each MCD.

What we have also seen, in comparisons between the RX 7600 and RX 6650 XT (whose monolithic die configurations, core clocks, and memory systems are essentially identical, aside from the doubled SIMD32 layout in each WGP), is that there is no performance increase per unit of architecture between RDNA 2 and RDNA 3. This would imply that the fault lies with the inability to capitalise on those extra cores in each compute unit.

All that extra silicon, wasted, for no benefit!

Well, that's probably a large portion of the lack of performance uplift - AMD have been unable to get those extra resources working in a proper fashion - for whatever reason - and the inability for RDNA3 to be pushed to higher core clocks than the prior generation has also been a major drain on the actual performance of the released products.

The other side of the story is a bit more complicated. You see, top-end RDNA 3 is not like bottom-end. The chiplet approach does something contrary to what the recent trends of silicon architecture have been moving towards: it moves data away from the compute resources.

Seriously, what is the major story of computing in the last five years, for gaming? Yes, that's right - keeping more data closer to the compute resources (CPU cores, GPU cores, etc.) improves a large proportion of game-related applications significantly! Hell, not only have the Ryzen 7 5800X3D, 7800X3D, and 7950X3D CPUs all been in high demand, along with the limited edition Ryzen 5 5600X3D, but we have also seen the evolution of the Zen ecosystem from split CCXs on a CCD to a combined cache hierarchy in the Zen 2 to Zen 3 transition.

These are increasingly performant parts for their energy consumption - thus we can conclude that latency and data locality matter!


| Latency, schmaetency! We'll increase the clock speed to reduce the increase but... oh... we didn't manage to do that! Our bad! |

Looking over at the GPU side of the equation has us eyeing the RX 6000 series, which also brought increased cache onto the die (compared to RDNA 1), reducing both the latency and the energy cost of accessing that data. RDNA 2 was an amazing architecture...

So, how did AMD plan out top-end RDNA 3? They undid all that work!

Depending on how they've arranged things, there are now two pools of memory that are required to be synchronised between themselves, with data within each pool striped across them. The latency to each pool is increased, and thus the amount of residency "checking" is likely increased as a result: the memory controllers for the second pool are physically separated from the scheduler on the main die, increasing energy cost and latency further due to 'communication' traffic. What's worse is that AMD was (as noted above) banking on RDNA3 clocking much faster than RDNA2 in order to counter the effect of latency - and I'm pretty sure this was only speaking about the latency of missed requests - not including the larger data management overhead...

At any rate, RDNA 3 doesn't clock faster than even base RDNA 2. 100 MHz does not a difference make! However, even RDNA 2 can almost reach 3 GHz with a bit of a push...

In addition to this, the L2 cache on-die is laughably small, meaning that data has to be pushed off onto the MCDs more often than I would think is ideal... 6 MB will hold sweet f-A (as we like to say where I'm from!) with today's more memory-intensive game titles, and that will cause issues with occupancy over the entire GCD.


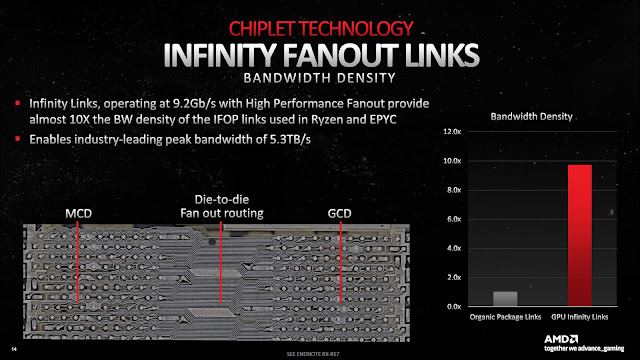

| Industry leading... but latency is king for gaming applications, not like the compute heavy cloud/AI industries... |

Sure, AMD extols the bandwidth of the interconnects but that extra layer of complexity is most probably hurting the potential performance of their top-end cards by allowing for more cases where the occupancy of compute resources may be low. Just look at the ratio of L2 and L3 cache between RDNA 2 and RDNA 3:


| Having a larger number of compute resources, RDNA 3 should have a higher ratio than RDNA 2, especially given the higher latency related to being off-die... |

Sure, Navi 21 had less L2 cache for its workloads compared with Navi 31, by a very small margin, but the leap to L3 is now much larger and the L3 capacity is also much smaller, meaning that the likelihood of a cache miss in both L2 and L3 is MUCH larger than in the prior generation. That most likely leads to precious cycles of compute time being wasted... This is because the number of times there will be a cache miss from L2 in Navi 31 will be higher than in Navi 21... and the number of times there will be a miss in L3 will be a lot higher because of the ratio of cache to compute resources (Navi 31 has 1.2x the resources of Navi 21*).

*Not counting the double-pumped SIMDs.
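To put rough numbers on that comparison, here's a quick sketch in Python. The cache sizes and compute unit counts are the publicly listed figures for the full Navi 21 and Navi 31 dies; I'm assuming those are the right ones to compare, and the helper name `cache_per_cu` is just something I made up for illustration.

```python
# Publicly listed figures for the full dies (an assumption on my part
# that these are the right numbers to compare).
GPUS = {
    "Navi 21 (RDNA 2)": {"cus": 80, "l2_mb": 4, "l3_mb": 128},
    "Navi 31 (RDNA 3)": {"cus": 96, "l2_mb": 6, "l3_mb": 96},
}


def cache_per_cu(spec):
    """Return (L2 MB per CU, L3 MB per CU) for one GPU spec."""
    return spec["l2_mb"] / spec["cus"], spec["l3_mb"] / spec["cus"]


for name, spec in GPUS.items():
    l2, l3 = cache_per_cu(spec)
    ratio = spec["l3_mb"] / spec["l2_mb"]
    print(f"{name}: L2 {l2:.4f} MB/CU, L3 {l3:.2f} MB/CU, L3:L2 = {ratio:.0f}:1")
```

On those figures, L2 per compute unit goes up slightly while L3 per compute unit drops, and that is before counting the doubled SIMDs.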

This could theoretically result in situations where the compute units cannot be utilised and the scheduler is unable to use the whole die effectively.
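The reasoning here is the textbook "average memory access time" idea: every miss at one level adds the cost of going to the next. Below is a toy model of that, and I want to stress that every hit rate and latency in it is a hypothetical placeholder picked to show the shape of the effect, not a measurement of any real GPU.

```python
def average_access_ns(l2_hit, l3_hit, l2_ns, l3_ns, dram_ns):
    """Average memory access time for a simple L2 -> L3 -> DRAM hierarchy.

    Every request pays the L2 latency; L2 misses also pay the L3 latency;
    L3 misses additionally pay the DRAM latency.
    """
    return l2_ns + (1 - l2_hit) * (l3_ns + (1 - l3_hit) * dram_ns)


# HYPOTHETICAL numbers: a closer, larger L3 versus a further, smaller one.
on_die = average_access_ns(l2_hit=0.70, l3_hit=0.60, l2_ns=100, l3_ns=150, dram_ns=300)
off_die = average_access_ns(l2_hit=0.70, l3_hit=0.50, l2_ns=100, l3_ns=200, dram_ns=300)

print(f"closer, larger L3:   {on_die:.0f} ns average")
print(f"further, smaller L3: {off_die:.0f} ns average")
```

Even with the same L2 hit rate, a slower L3 that misses more often drags the average up, and that is time in which a compute unit may be sitting idle waiting for data.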

And it is this fact, combined with the clock frequency issues of RDNA 3 that I believe is holding the architecture back in gaming terms. In other workloads, it's not such an issue... but then wasn't that what CDNA was for?


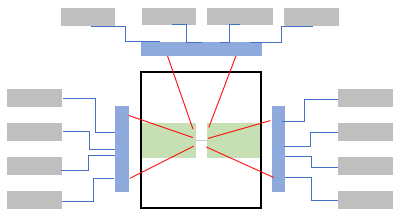

| A crude rendition of the Navi 31 layout as it currently stands... |

Getting back on Track...

As I said, this is all conjecture and inference - based on the limited information available to me. However, if these facets of the architecture are the reasons it's being held back, well, there are some remedies that I think AMD could apply to fix the situation.

First up is that we need to increase data residency and locality: bandwidth does not make up for exponentially increased latency - especially when there are specific situations that lead to edge case race conditions.

Secondly, we would need to reduce latency to data and the latency in data management.

Sounds simple, right?!


| My adjusted conception of RDNA 3 - or its successor... |

Now, I'm going to heavily caveat this speculation with the fact that I'm not an expert or even amateur in this field - I would rate myself as, at best, a poor layman but (and this is a big 'but') if I am understanding the concept and logic correctly, this is what I would expect AMD to do to fix RDNA3 or to move forward into the next architecture...

- To increase data locality and residency, there needs to be a larger L2 cache on the GCD. I would posit 32 MB.

- To reduce latency further, and cost to produce (in terms of interposer complexity and size), as well as improving data management, I would combine two MCDs into a single unit. So, 32 MB (combined) Infinity Cache, and 8x 16-bit GDDR6 memory controllers... per MCD.

That's it!

Sounds ludicrously simple and it probably is stupidly wrong. However, there would be reduced data transfers between all dies, reducing power consumption. There would be better data residency in the three-layered memory buffers, reduced latency between buffers and, as a bonus, an easy choice between 3x MCD for 24 GB, 2x MCD for 16 GB and 1x MCD for 8 GB configurations... which would also reduce packaging costs because there are fewer dies to be futzing around with on the interposer. The L2 cache could scale better with core size (Navi x1, x2, x3, etc.) while off-die L3 would also scale with both cache and memory configs down the entire stack.
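As a sanity check on those configurations, here's a small Python sketch of the arithmetic. The per-MCD cache and controller counts come from my proposal above; the memory chips (2 GB each, on a 32-bit interface) are an assumption, and `ProposedMCD` and `card_config` are made-up names for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProposedMCD:
    cache_mb: int = 32        # combined Infinity Cache per MCD (proposal)
    controllers: int = 8      # GDDR6 memory controllers per MCD (proposal)
    controller_bits: int = 16

    @property
    def bus_bits(self):
        return self.controllers * self.controller_bits


def card_config(mcd_count, mcd=ProposedMCD(), chip_bits=32, chip_gb=2):
    """Bus width, L3 and VRAM for a card with `mcd_count` MCDs.

    ASSUMPTION: one 2 GB GDDR6 chip per 32 bits of bus width.
    """
    bus = mcd_count * mcd.bus_bits
    return {
        "mcds": mcd_count,
        "bus_bits": bus,
        "l3_mb": mcd_count * mcd.cache_mb,
        "vram_gb": (bus // chip_bits) * chip_gb,
    }


for n in (3, 2, 1):
    print(card_config(n))
```

Under those assumptions, three MCDs give a 384-bit bus with 96 MB of L3 and 24 GB of VRAM, and each MCD removed drops the card by 128 bits, 32 MB and 8 GB.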

Conclusion...

A few years ago, I asked an AMD representative if it made sense to scale RDNA2 down the entire stack and they replied that it did. If I had the chance, I would ask the same person whether it still made sense for RDNA3 - given the performance indifference between the two architectures.

AMD have hard questions lying ahead of them and, quite frankly, I wouldn't want to be in their shoes because they keep giving the wrong answers, despite what appears to be their best efforts. RDNA2 is like the time I accidentally answered all questions in an exam where you were meant to only answer 3 or 4 (and aced it!) whereas RDNA3 is like the time when I went to an exam and, despite preparations, all knowledge left my head and I spent the entire time sweating and almost failed.

I hope that RDNA4 will be at least somewhere in between...

5 comments:

Yeah, RDNA3 has been a pretty big let-down. I believe the issues you've pointed out lead to WHY (despite the leaks to the contrary) we've seen no mid-tier (78xx) product. This far into RDNA3 you have to suspect AMD has produced >some< amount of Navi32; I'm willing to bet its performance has been crap versus Navi22 or even Navi21.

I really liked what they did. But now it seems like an unfinished experiment. It seems to me like they stopped in the middle of the road... the next logical step was to have not only MCDs but also GCDs to scale the GPU. Maybe they were stopped by even less effective communication than in this case, so they stopped it. But that was (for me) the main goal. To be able to scale GPU power. To have two, (three), four GCDs... To have something more Ryzen-like. I hoped they had finally solved throughput for Infinity Fabric.

Plus higher frequencies... Expectations were so high!

@fybyfyby: I am going to say something controversial - controversial because I've been met with resistance on this facet by actual technical people on Twitter who hold the recent Apple silicon in high regard...

Multiple GCDs is a BAD idea for any application that is latency sensitive. For AI, graphical applications, etc., it's not such a problem - in the same way that multiple GPUs allow decent scaling for the application in question, multi-GCD is identical, maybe even better!

For game applications with realtime rendering, multi-GCD is a nightmare - just as bad as SLI and CrossFire. Especially when the responsibility is forced onto the driver programmers. That's a terrible scenario.

There's a reason why Intel cancelled their multi-die designs for Alchemist.

I think that the recent leaks (potentially true) of RDNA 4 high end dual die designs being cancelled supports my view on this point - it's incredibly difficult to marry disparate compute resources within a tight frametime target that requires constant shifting of data and realtime manipulation of new, 'unexpected' data.

As much as AMD touted "chiplet" GPUs as the next great evolution when RDNA3 launched, how they spin the marketing for RDNA4 if there's no "chiplet" GPUs in the family should be interesting.
