|

I've never really been a fan of the Harry Potter series - I've never read the books, though I have seen most of the films and thought that they were okay. However, I was immediately interested in Hogwarts Legacy when I saw the game for the first time due to the graphical effects used (i.e. ray tracing) and also once I saw the recommended system requirements for the game. I wanted to test it to see why the requirements were so relatively high and to see how it runs.

This isn't a review of the game - I might do one of those at a later point. Instead, this is a look at how the game scales with certain system resources...

Test System and benchmark...

Hogwarts Legacy ("HL" from now on!) doesn't feature an in-game benchmark that can be used to make a comparison run between different hardware setups. However, many different reviewers have identified Hogsmeade village as one of the more strenuous sections in the game due to the number of NPCs and other graphical effects going on. I've chosen an arcing route from the southern bridge entrance, through the streets around to the left where the potion shop is located. It's approximately 32 seconds' worth of data each run, all in all, but it can give us a decent look at how the game performs.

The important thing to note about this testing is that both DLSS and FSR 2.0 are active - running at the Quality setting. The game settings are set to high, with all RT options enabled, with material and texture quality set to ultra for the RX 6800 and with only the material quality set to ultra for the RTX 3070 (due to its lower quantity of VRAM).

One last thing to note about the game settings is that V-sync is enabled - and both monitors are set to a 60 Hz refresh rate*.

*Since we're using all RT features, and FSR/DLSS, we are not guaranteed to hold this frame rate consistently, so it is a good test...

The test systems are as below:

- Intel i5-12400

- 2x 8 GB Patriot Steel series DDR4 4400 (Samsung b-die)

- GeForce RTX 3070

- AMD Ryzen 5 5600X

- 4x 8 GB Corsair Vengeance LPX DDR4 3200 (Samsung c-die)

- Radeon RX 6800

I will be using the RAM configurations that I identified last time in my analysis of the best settings in Spider-man (ray tracing). Both graphics cards are slightly overclocked, undervolted and power limited (both perform above stock in these configurations). The CPUs are running at stock settings.

What I hope to show with this testing is where the bottlenecks are for this game and perhaps guide other players in how to optimise (or what to change) in the cases where they feel like they aren't achieving the performance they would like. Additionally, I will show whether this hardware, using these settings, can maintain an approximate 60 fps experience for the user.

I feel like this is an under-represented type of analysis, with only a couple of outlets focussing on this aspect - most look at raw, unlimited performance of GPU hardware, without any eye towards stability of the presentation on more realistic configurations... or the graphical effects are analysed, without any real view towards performance.

|

| RT shadows, light sources and high texture and material qualities, all in combination with a well-chosen near-realistic art style make the game seriously good-looking... |

Visual Design...

HL is a heavy game - especially with ray tracing enabled - and was not without its share of bugs at launch. Lower quality settings for materials, textures, and models resulted in some pretty poor visual experiences but luckily these were fixed with patches released just after launch and it is clear, now, that the game can visually scale incredibly well.

The game features densely packed interiors and quite a large open world with a good draw distance. A primary feature of the game which I find particularly 'next generation' in terms of experience is the number and detail of NPCs in the various locations of the game world. Both the castle and the villages feel alive in a way that many last gen and older games did not. Sure, Spider-man has a LOT of generic NPCs walking around, fading into and out of existence, but these are copy-pasted entities of pretty low quality in terms of materials and textures, and you will easily spot repeating instances of NPCs. HL has a combination of randomly generated NPCs and hand-crafted NPCs, which you can run into repeatedly throughout the game.

Combined with the high level of geometric and texture detail to make those NPCs and environments look good, ray tracing options for shadows, reflections, and light bouncing put a real strain on system resources which have historically been neglected by both Nvidia and AMD on lower-end hardware. This is the essential crux of why the system requirements may be considered so high by many, but in my opinion, HL is one of the first truly next gen experiences and, while not perfect, the game is asking a lot from the PC hardware it is running on.

|

| Without ray tracing enabled, the game looks dull, flat, and washed-out... |

Quite honestly, I believe that the ray tracing (RT) implementation in HL is almost vital to the visual make-up in practically every scene. I've seen many games where the RT implementation results in very slight differences between that mode and the purely rasterised presentation, with Spider-man being a standout with respect to the RT reflections available in that game. However, while I find it unfortunate that the RT reflections in HL are muddier and lacking clarity in comparison with the implementation in Spider-man, overall, in combination with the dynamic weather and time of day systems it gives rise to visual experiences that are at the very top of the game in the industry today - assuming you have the hardware to run the experience at an acceptable level.

|

| Spider-man's reflections were impressive on a level I don't think I've seen before... |

Added to this, the RT ambient occlusion (RTAO) and shadows/reflections are so superior to the terribly distracting screen space alternatives that I am willing to suffer the increased amounts of stutter and judder (due to data management), and slight softness of the image due to upscaling, along with lower overall performance in frames per second from enabling all three RT techniques.

|

| The ambient occlusion is exceptionally better than the traditional methods... Just look at those trowels! |

I've casually mentioned elsewhere that I believe that this game, out of all the games that "core" gamers might want to play, will likely be the one that could encourage a large population of people to upgrade their PC hardware. Back in the day, that would have been a game like Quake or, later, Half Life/Crysis... but these days, we really need a knock-out property with huge mainstream appeal to move the needle in terms of more modern hardware adoption.

But what do users need to upgrade to? What is important for this game when aiming for the best experience*? I'm choosing to test at the high-end of the settings options as the bottlenecks become more obvious at that point.

*Good quality visuals, combined with a good framerate.

First up, let's take a look at the GPU scaling in Hogwarts Legacy, then move on to the RAM scaling.

|

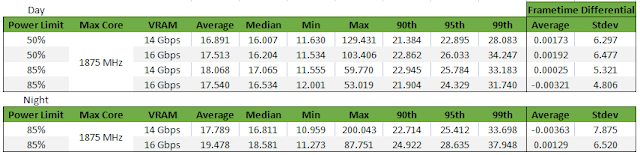

| A small comparison between day/night, as well as power/memory frequency scaling for the RTX 3070... |

GPU clout: Nvidia's Nightmare...

As I mentioned before, I'm doing a run in Hogsmeade. However, it's important to note that I'm performing this run in the daytime. With ray tracing enabled, I observed a difference in strain on the system equipped with the RTX 3070 between day and night.

It's possible that this is because in the day, we appear to mostly have a point light source in the form of the sun. Whereas, in the night, we're looking at multiple light-casting objects (in the form of candles, lamps, etc.) which may eat into the hardware budget of the RTX 3070's 8 GB of VRAM.

I've not seen another outlet mention this difference - nor at what time of day they are testing. Anyway, just a note - night appears to be the worst-case scenario for VRAM-limited graphics cards and I'm seeing a drop of approximately 6 fps on average on my RTX 3070, but the stutters the player experiences are much worse during play*: an effective timespan of 5 missed frames at an expected 16.666 ms frametime target (60 fps) is quite an evident stutter!

*Though, inconsistent - which I will get to in a little bit...
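To put a number on that stutter, here is a minimal arithmetic sketch. The function names are illustrative and not from any capture tool; the only inputs are the 60 Hz target and the 5 missed frames mentioned above.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame at a given refresh rate, in milliseconds."""
    return 1000.0 / refresh_hz


def stutter_duration_ms(missed_frames: int, refresh_hz: float = 60.0) -> float:
    """Length of a hitch that spans `missed_frames` refresh intervals."""
    return missed_frames * frame_budget_ms(refresh_hz)


if __name__ == "__main__":
    # 60 Hz target and 5 missed frames, as described in the text.
    print(f"Frame budget: {frame_budget_ms(60.0):.3f} ms")
    print(f"5 missed frames: {stutter_duration_ms(5):.1f} ms")
```

Five missed frames at a 60 Hz target works out to a hitch of roughly 83 ms, which is long enough to be clearly visible during play.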

Regarding the capability of the GPU - back when I described the power scaling of my RTX 3070, I showed that at 50% performance, we are approximately at the level of a stock RX 6700 XT/RTX 3060 Ti. At the 85% level, with the undervolt, I am at essentially the stock performance for the RTX 3070, only with less power drawn and less heat produced. The recommended specs ask for an RX 5700 XT at high quality settings to enable a 1080p/60fps experience - which is approximately 25% slower than an RTX 3060 Ti.

|

| This is the same data as in the table above... |

However, when we look at the individual values in the table above, we see that a "stock" 14 Gbps RTX 3060 Ti is performing better than a "stock" 3070 in every primary metric other than those damnable frametime spikes. That doesn't make sense!

Let's take a look at the effect of video memory speed on the values trended: we are sometimes seeing a very slight regression in the faster memory configurations but the standard deviation and frametime spikes are, once again, tighter/reduced - indicating an overall better performance because we're seeing fewer stutters. So, clearly the increased frequency is doing something beneficial - even though, if we looked only at the average and 1% or 0.1% lows* as many outlets do, we'd be saying the performance is worse...

*This may be presented in a slightly confusing manner here, because I'm labelling the 1% low (fps) as the 99th percentile (high frametime).
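The relationship between a "1% low" in fps and a 99th percentile frametime can be sketched as below. This is an illustration only: it assumes a nearest-rank percentile and a list of per-frame times in milliseconds, and the two sample runs are made-up numbers rather than captured data. Some tools instead define the 1% low as the average of the worst 1% of frames, which gives a different figure.

```python
import math
import statistics


def percentile(values, pct):
    """Nearest-rank percentile of a list of numbers (pct in 0..100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarise(frametimes_ms):
    """Common summary metrics for one capture of frametimes (ms)."""
    p99 = percentile(frametimes_ms, 99)
    return {
        "avg_fps": 1000.0 / statistics.fmean(frametimes_ms),
        "p99_frametime_ms": p99,
        # The 99th percentile frametime expressed as a frame rate.
        "one_percent_low_fps": 1000.0 / p99,
        "stdev_ms": statistics.pstdev(frametimes_ms),
        "max_frametime_ms": max(frametimes_ms),
    }


if __name__ == "__main__":
    # Hypothetical runs: one steady, one slightly faster but with two spikes.
    steady = [17.0] * 100
    spiky = [16.0] * 98 + [60.0] * 2
    for name, run in (("steady", steady), ("spiky", spiky)):
        print(name, summarise(run))
```

In this made-up example the spiky run has the higher average fps but a far worse 99th percentile frametime and standard deviation, which is why a single headline number can mislead.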

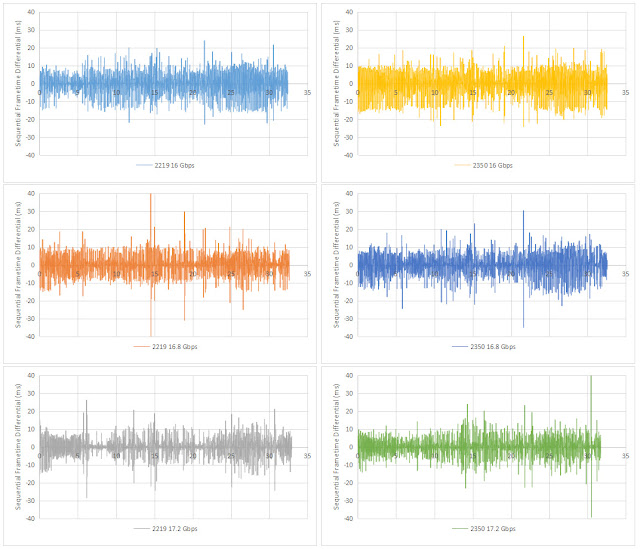

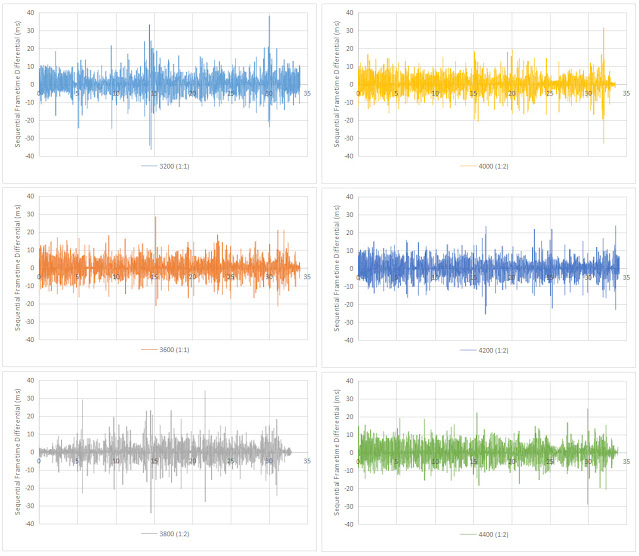

Sequential frame presentation is also improved, with fewer spikes observed with the faster GPU configuration and VRAM frequency. This can be observed in the graphs below and is exactly the reason why I started doing this sort of analysis in the first place - you just cannot get all the information from a static number like average, minimum or percentile figures. There is more going on than just those in the moment to moment experience...
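As a rough sketch of what a sequential frame comparison captures, the snippet below takes the difference between each frametime and the one before it. It assumes the differential plots are simple frame-to-frame differences, which is an interpretation rather than a documented method, and the sample traces are invented for illustration.

```python
def frametime_differentials(frametimes_ms):
    """Difference between each frame's time and the previous frame's time."""
    return [b - a for a, b in zip(frametimes_ms, frametimes_ms[1:])]


def mean_abs_differential(frametimes_ms):
    """Average size of the frame-to-frame change, in milliseconds."""
    diffs = frametime_differentials(frametimes_ms)
    return sum(abs(d) for d in diffs) / len(diffs)


if __name__ == "__main__":
    # Two hypothetical traces with the same average frametime (16.7 ms).
    smooth = [16.7] * 6
    uneven = [10.0, 23.4] * 3
    print("smooth:", mean_abs_differential(smooth))
    print("uneven:", mean_abs_differential(uneven))
```

Both traces would report the same average frame rate, yet the second alternates between fast and slow frames on every refresh, which is the sort of judder a static figure hides.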

Getting back to those inconsistencies in the numbers - what could be happening?

Well, what seems most likely to me is that, while raw GPU performance and VRAM speed are helping in terms of smoothness, the bottleneck is elsewhere in the system - causing instances where the GPU/VRAM are waiting for data to operate on and this is occurring, essentially, at random times. This means that, on one run, we might not see a spike, while on another we might see two (given the short duration of the testing period).

If we are seeing that the frametime spikes are reduced by increasing GPU performance and memory speed, and that the overall presentation is becoming a little more consistent, but that there is a slightly lower overall average performance, that would indicate that we need to provide things to the GPU in a faster/more consistent manner. In other words, the GPU is not the absolute bottleneck for this game.

|

| We see a similarly confusing story for the RX 6800 as we did with the RTX 3070... |

AMD's Saving Grace...

Moving over to the RX 6800, we observe similarly strange behaviour to what we saw with the GeForce card though, as expected for a stronger graphics card, results are improved somewhat.

Back in my power scaling article, I found I could push my card from stock to around 9% faster by increasing the frequency and memory speed a little. I didn't push the memory to the maximum in the slider, but I have also included some testing to see if the excess memory speed is actually helping in this particular title.

To add some value to the testing, the types of tests are slightly different between the two setups due to the different possibilities offered by the software controls available to the user... i.e. I'm not so much interested in testing a lower performing part, here. Instead, I wanted to look at frequency scaling.

|

| The data from the table above, plotted. As with the RTX 3070 results, it doesn't really make a lot of sense... |

I would like to be able to say that I was no longer observing any frametime spikes, but we have already deduced from multiple runs on the RTX 3070 that these are not originating from the GPU so, while the max frametimes listed in this table appear lower than on the Nvidia part, it was merely the luck of the draw, as you will see in just a moment.

In the interim, the frametime differential plots show us that we are getting more consistent sequential frame presentation with increasing memory frequency (and bandwidth) - even without the extra GPU performance granted by increasing the core clock frequency.

|

| Aside from being very lucky not to get many spikes in these tests, we can see the effect of increased video memory speed (and thus bandwidth) on narrowing down the inter-frame lag... |

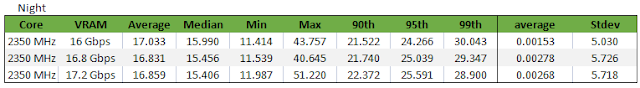

The question now is whether we see the same hiccups we saw at night when testing the RTX 3070. The answer is, "no". We see no issues with VRAM occupancy on the RX 6800 - the 16 GB video memory is standing us in good stead in this regard...

However, we are observing the opposite of what we saw during the daytime. We see a tighter sequential frame presentation with the slower memory configuration. Now, I'm not sure if this is down to errors being introduced as the memory is clocked faster, or whether there is some other aspect that I'm not understanding... but the essential take away is that we're not seeing a difference when faster memory is present on the RX 6800.

|

| There's nothing to say, here. They're essentially equivalent by these metrics... |

|

| Backwards results? I'm at a loss to explain the difference observed between night and day... |

To Cap It All Off...

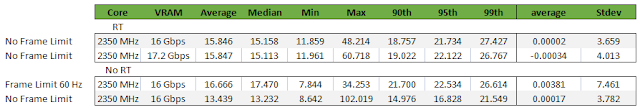

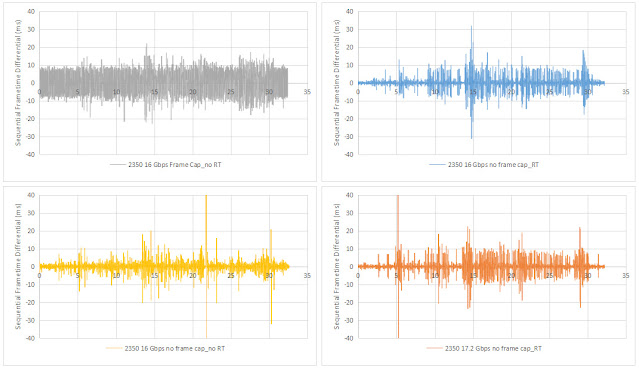

Going back to the point that the frametime spikes can strike any time and anywhere: note the 102 ms spike in the data below...

While searching for an answer to the thorny issue above, I came across something interesting. Enabling v-sync within the game appears to introduce quite a lot of frame scheduling overhead when ray tracing is enabled. When v-sync is disabled, sequential frametimes become much more consistent! The unfortunate side effect of this is that on a non-variable refresh rate display, the experience for the player is absolutely horrendous because of the screen tearing...

This is a shame because I'm seeing an improved average framerate performance here... meaning that I could be having a better experience!

|

| Without ray tracing enabled, the framerate cap |

|

| The framerate cap introduces a lot of overhead in terms of sequential frame presentation when ray tracing is enabled... |

As part of this testing, I also discovered that in non-ray tracing situations, the frametimes are consistent with the "RT on and v-sync disabled" scenario... and achieve a smooth 16.666 ms frametime, when v-sync is enabled. This means that the game can achieve much better scheduling, along with the better presentation of zero screen tearing!

While I might be wrong, I believe that this indicates a CPU bottleneck due to the extra demands driven by ray tracing when trying to schedule rendered frames to match the v-sync setting (60 Hz in this case)...
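One way to picture why a missed frame budget hurts so much on a fixed 60 Hz display is the simplified model below. It assumes that, with v-sync on, a finished frame can only be shown at the next refresh boundary, and it ignores frame queues, triple buffering and driver behaviour. It is not a description of how the game or the driver actually schedules frames, and the render times are hypothetical.

```python
import math


def displayed_interval_ms(render_ms: float, refresh_hz: float = 60.0) -> float:
    """Time a frame occupies the screen when presentation is locked to refresh.

    Simplified model: a frame that takes longer than one refresh interval
    is held until the next refresh boundary after it completes.
    """
    refresh_ms = 1000.0 / refresh_hz
    refreshes = max(1, math.ceil(render_ms / refresh_ms))
    return refreshes * refresh_ms


if __name__ == "__main__":
    # Hypothetical render times in milliseconds.
    for render_ms in (15.0, 18.0, 40.0):
        shown = displayed_interval_ms(render_ms)
        print(f"rendered in {render_ms:.1f} ms -> shown for {shown:.1f} ms")
```

Under this model a frame that overshoots the 16.666 ms budget by even a little is presented as if it took two refreshes, so small delays on the CPU side show up as visible steps in the frametime plot.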

|

| Frametimes compared between the various DDR4 frequencies tested.. |

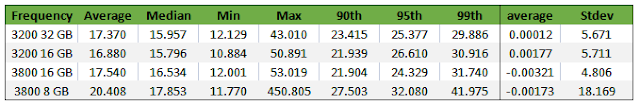

RAM Speed...

Like the open world of Spider-man, the game relies heavily on asset streaming to get all this graphical data into RAM/VRAM, and on RAM/PCIe bus speed and bandwidth to deal with the ray tracing calculations more effectively. Consequently, and given we've seen odd behaviour in our GPU performance scaling tests, I am going to explore the effect of both quantity of RAM and RAM bandwidth/speed in order to see their importance on stability of presentation for the end user.

This testing was performed on the RTX 3070 system with ray tracing enabled.

Unfortunately, because the frametime spikes are effectively random, it is difficult to draw a definitive conclusion from this testing - the absence of spikes in any given test does not mean that there are no spikes present during the gameplay of that configuration, just that we did not capture one.
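To illustrate how easily a short run can miss a random spike, here is a small sketch that treats spikes as a Poisson process. That is a modelling assumption, and the spike rate used below is a made-up figure chosen only for illustration; the 32 second run length is the one used in this testing.

```python
import math


def prob_no_spike(spikes_per_minute: float, run_seconds: float) -> float:
    """Chance that a run of the given length contains zero spikes,
    assuming spikes arrive independently at a constant average rate."""
    expected_spikes = spikes_per_minute * run_seconds / 60.0
    return math.exp(-expected_spikes)


def prob_all_runs_clean(spikes_per_minute: float, run_seconds: float, runs: int) -> float:
    """Chance that every one of `runs` independent runs is spike-free."""
    return prob_no_spike(spikes_per_minute, run_seconds) ** runs


if __name__ == "__main__":
    rate = 1.0  # hypothetical: one spike per minute on average
    print(f"One 32 s run with no spike: {prob_no_spike(rate, 32.0):.0%}")
    print(f"Three 32 s runs, all clean: {prob_all_runs_clean(rate, 32.0, 3):.0%}")
```

With an assumed rate of one spike per minute, more than half of single 32 second runs would show no spike at all, so a clean run says little on its own and repeated runs are needed before comparing configurations.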

What I can say, though, is that the spikes and firsthand gameplay experience were worst at DDR4 3200. In contrast, it appears that DDR4 3800 gave the best experience and frametime presentation. However, in terms of subjective experience, I could not tell the difference between any configuration above DDR4 3600.

For DDR4 3800: this particular tuned configuration has the lowest primary timings of all the setups I tested, despite them all being validated as "the best" during my Spider-man testing... but, from the results obtained here - it does not seem like Hogwarts Legacy is all that RAM sensitive.
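For context on what those speed grades mean on paper, the sketch below computes theoretical peak bandwidth and CAS latency from the data rate. These are textbook formulas, not measurements from the test systems, and the CAS value in the example is a placeholder rather than one of the tuned timings used here. Dual-channel operation with a 64-bit bus per channel is assumed.

```python
def peak_bandwidth_gbs(data_rate_mts: float, channels: int = 2, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s for a DDR configuration."""
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


def cas_latency_ns(cas_cycles: int, data_rate_mts: float) -> float:
    """CAS latency in nanoseconds; the memory clock is half the data rate."""
    return cas_cycles * 2000.0 / data_rate_mts


if __name__ == "__main__":
    for rate in (3200, 3600, 3800):
        print(f"DDR4 {rate}: {peak_bandwidth_gbs(rate):.1f} GB/s peak (dual channel)")
    # Placeholder timing for illustration only, not a tested configuration.
    print(f"CL16 at DDR4 3200: {cas_latency_ns(16, 3200):.1f} ns")
```

On paper, going from DDR4 3200 to DDR4 3800 raises peak dual-channel bandwidth from 51.2 GB/s to 60.8 GB/s, while real-world latency depends on the full set of timings rather than the data rate alone.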

|

| The tuned RAM configurations... |

|

| And the associated latencies and bandwidths... |

Lastly, let's take a quick look at the effect of system memory quantity in this game.

RAM Quantity...

Playing with v-sync and RT enabled, we are still seeing that frame presentation overhead, resulting in more messy sequential frame differential plots (the DDR4 3800 being a strange exception). However, the experience when playing with the 8 GB of RAM was not fun. Not only were the stutters really bad, they were constant. 16 GB of RAM is both the minimum and recommended system requirement for this game and my results confirm that - 32 GB adds a bit of stability when running at DDR4 3200 but going to 8 GB is a whole lot of pain...

|

| Oof! |

|

| Don't play this game with 8GB of RAM... just don't do it, please! |

CPU demands...

I haven't really ever delved into CPU overclocking, and I wasn't about to blindly do that for this particular analysis but just looking at the on-screen display during testing, I can see that the game isn't utilising the processor all that well. On one hand, it's really not demanding for non-RT play, and we can see that there is better utilisation in that scenario.

Turning on ray tracing effects drops overall utilisation, which I believe reflects a bottleneck caused by something being tied to a single processing thread. It also significantly increases system memory usage...
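A quick sketch shows why a low overall utilisation figure can still hide a single-thread bottleneck. The per-thread percentages below are invented for illustration and are not readings from the on-screen display; the only hardware fact used is that the i5-12400 exposes 12 logical threads.

```python
def overall_utilisation(per_thread_pct):
    """Average utilisation across all logical threads, as most overlays report."""
    return sum(per_thread_pct) / len(per_thread_pct)


def busiest_thread(per_thread_pct):
    """Utilisation of the most heavily loaded thread."""
    return max(per_thread_pct)


if __name__ == "__main__":
    # Hypothetical load: one thread pegged, the other eleven lightly used.
    threads = [100.0] + [20.0] * 11
    print(f"Overall: {overall_utilisation(threads):.1f}%")
    print(f"Busiest thread: {busiest_thread(threads):.1f}%")
```

In this invented case the overlay would report under 30% CPU usage even though one thread has no headroom left, which is why per-thread readings are more telling than the headline figure.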

It's also fun to note how much more energy RT causes to be used on both the CPU and GPU! That's got to be good for my energy bill.

|

| Performance monitoring on the i5-12400 + RTX 3070 system... |

Conclusions...

I apologise for the long-winded, sometimes meandering post. Given the data generated, the amount of testing performed and the number of hardware configurations checked (and double-checked) I feel like I've done an okay job trying to go through everything without going crazy. The amount of text here is not terrible, it's just that the graphics don't lend themselves to a short format.

However, I feel like there are some takeaways for people who want to play this game and get the most out of their current or soon-to-be-upgraded hardware:

- V-sync is essential if you don't have a VRR display but this setting incurs a performance and presentation penalty when ray tracing is enabled.

- The game isn't that graphically demanding. It really isn't! I had no issues managing to achieve approximately 60 fps for the majority of the time based on GPU hardware that scaled between the performance of an RTX 3060 Ti and almost an RX 6800 XT at 1080p. Yes, those are now mid-range cards in 2023 but I was also using high settings... turn those settings down to get more performance.

- Graphics cards with faster VRAM will help - if you can overclock your video memory a bit, do it!

- Graphics cards with 8 GB or less will struggle with certain parts of the game at certain times of day when ray tracing is enabled. However, even with RT disabled, at higher settings, you are pushing the limit on an 8 GB card... Either lower texture settings to medium or use a GPU with more than 8 GB if you want to use RT effects.

- The game is CPU-bound, not only due to the large numbers of NPCs in the most demanding scenes such as the castle and Hogsmeade, but also due to the demands of data management and ray tracing. Having a higher clocked CPU will likely alleviate some of the problems.

- You need 16 GB of RAM, no more, no less. Ideally, that RAM has as tight timings as possible and should be at least DDR4 3600 - but that's just a general idea rather than a specific observation. Try not to run 16 GB of DDR4 3200 - if you must run that slow a configuration of RAM, try and use 4x 8 GB sticks (32 GB total) or dual rank 2x16 GB sticks to improve bandwidth and stability a little.

Lastly, and though I didn't test this aspect here - use an NVMe SSD. Do NOT use HDDs or SATA SSDs for newly released modern games like Hogwarts Legacy. An NVMe drive will make the experience better by a rather large margin.

Anyway, it's time for me to go and veg out. I'm a little tired after all of this. I hope it was useful to some of you!
