For the last year, I've been looking at the predicted performance and efficiency of the RDNA architecture(s) as those were what I was most familiar with and most interested in due to their presence in the now current gen consoles. I determined early on that there was no IPC improvement between RDNA 1 and 2 and that it was merely* a clockspeed bump that was dictating any performance increase per compute unit (CU). However, in the back of my mind I was asking myself how Nvidia's architectures stack up against one another in a similar comparison but I knew that you can't normally directly compare between architectural designs except when the comparison is abstracted via different metrics.

*I say "merely" but I still think it's impressive how far AMD were able to push the working frequency of their chips.

Luckily for me, this is exactly what I did for the final RDNA analysis - I used real world game benchmarks and the actual in-use clock frequencies (both measured by TechPowerUp) to inform the relationship between performance and architectural aspects. While I wasn't able to pull out as much information due to the differences in architecture, I was still able to compare and contrast AMD's and Nvidia's respective approaches to graphics card design, and I'll try to take a look into the future to see what we might expect next, as I always do...

Comparison design...

As with the RDNA analysis, I need to have a reference point. In that case it was the RX 5700 XT, a fully utilised mid-range* die for which there are no parts disabled or cut-down. For this analysis, I chose the GTX 1060 - also a fully featured, mid-range part which had no parts disabled. This is so that we can more easily see performance changes when adding/removing resources. Other people like to start at the top or the bottom but it's purely a personal preference and shouldn't affect the overall conclusions, though I think it is important to have the reference be the "ideal" design as intended by the manufacturer (i.e. not cut-down).

*In this context, although the RX 5700 XT was not in the middle of the AMD lineup at the time of release, it was mid-range when considering the increased numbers of CU at the high end of the RX 6000 series cards...

I chose eight game titles that are benchmarked by TechPowerUp at a resolution of 1440p. I feel these represent a good variety of game engines and rendering challenges for the cards covered, requiring high performance (i.e. high numbers of high quality frames per second). The only type of engine/game I didn't include was strategy games, as I don't believe those engines really push the capabilities of graphics architectures all that much - especially at the tested resolution where CPU bottlenecking can still be the main limiting factor.

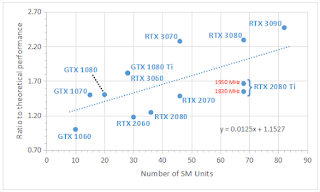

For the comparison, I took the ratio of each card's performance in each title to that of the GTX 1060. I then calculated a theoretical performance for each card, per FP32 unit or per SM, based on the performance per unit of the GTX 1060. Finally, I compared reality against theory (real / theoretical) to get a metric of performance efficiency per FP32 unit or per SM. From this I hoped to visualise how efficient, bottlenecked and/or resource starved the Pascal, Turing and Ampere gaming architectures are relative to each other when performing rasterisation workloads. I will not be comparing ray tracing performance in this analysis but that could be something I explore in the future if I feel it's interesting enough.
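As a rough illustration of the metric described above, here is a minimal Python sketch. The function names, the per-title averaging and all frame rates are assumptions made for the example (the fps values are placeholders, not TechPowerUp data), and the second card is hypothetical. Only the reference count of 1280 FP32 units corresponds to the GTX 1060 6GB.

```python
from statistics import mean

def relative_performance(card_fps, reference_fps):
    """Average, over all titles, of the card's fps divided by the reference fps."""
    return mean(card_fps[title] / reference_fps[title] for title in reference_fps)

def theoretical_performance(card_units, reference_units):
    """Performance expected if every unit behaved exactly like a reference unit."""
    return card_units / reference_units

def efficiency(card_fps, reference_fps, card_units, reference_units):
    """Real-world relative performance divided by the theoretical value."""
    real = relative_performance(card_fps, reference_fps)
    return real / theoretical_performance(card_units, reference_units)

# Placeholder frame rates for illustration only (not measured data).
reference_fps = {"game_a": 50.0, "game_b": 40.0}
card_fps = {"game_a": 90.0, "game_b": 72.0}

# 1280 FP32 units for the reference; 2560 for a hypothetical card.
ratio = efficiency(card_fps, reference_fps, card_units=2560, reference_units=1280)
print(f"real / theoretical per FP32 unit: {ratio:.2f}")
```

A value below 1 would mean the card gets less out of each unit than the reference does, and a value above 1 would mean it gets more. Swapping the FP32 counts for SM counts gives the per-SM version of the same metric.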

What is important to note is that this is specifically with respect to gaming performance (if that wasn't obvious). It can be argued that the direction Nvidia are taking with their architectural design is moving away from being gaming focussed and that is something I am not equipped to analyse right now.

Finally, when comparing clock frequencies, I took the median frequency from TechPowerUp's reviews of each card into account. This is a necessary simplification that will mostly reflect reality in a non-thermally constrained setup but be advised that there may be some variation not taken into account for certain types of game engine workload.
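To make the frequency simplification concrete, the sketch below takes the median of a set of logged clocks and folds it into the theoretical figure. It assumes performance scales linearly with both unit count and clock speed, which is my simplification for the example rather than a confirmed detail of the spreadsheet. The clock samples and the second card are placeholders.

```python
from statistics import median

def frequency_adjusted_theoretical(card_units, card_clock_mhz,
                                   reference_units, reference_clock_mhz):
    """Theoretical relative performance, assuming linear scaling with
    both the number of units and the clock frequency (an assumption)."""
    unit_ratio = card_units / reference_units
    clock_ratio = card_clock_mhz / reference_clock_mhz
    return unit_ratio * clock_ratio

# Placeholder clock logs in MHz (not values from any review).
reference_clock = median([1800, 1850, 1900])  # median of the reference card's samples
card_clock = median([1400, 1480, 1500])       # median of the hypothetical card's samples

theory = frequency_adjusted_theoretical(
    card_units=2560, card_clock_mhz=card_clock,
    reference_units=1280, reference_clock_mhz=reference_clock,
)
print(f"frequency-adjusted theoretical performance: {theory:.2f}x reference")
```

Using the median rather than the mean keeps short boost spikes or throttling dips from skewing the figure used for each card.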

Finally, finally, the one big omission in this comparison is that it is assumed that there are no glaring off-die bottlenecks for each SKU holding back performance due to cheaping out on design. Though I will address this a little in the conclusion.

The efficiency of Nvidia's architectures...

Figure: Real world data compared to that predicted by theory...

All cards used were reference versions with the exception of the MSI Lightning Z version of the RTX 2080 Ti. I included that card to explore the relationship between clock frequency and the number of resources on the same card in the 20 series, as I noticed that the 30 series doesn't appear to benefit much from increases in clock frequency over the stock setup, while the 10 series sees a more modest benefit from slight increases in clock speed (around 60% of that in the 20 series).
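One way to put a number on "benefit from increases in clock frequency" is to divide the fractional performance gain by the fractional clock gain for the same card at two clock speeds. The sketch below is an assumption about how such a figure could be derived, not necessarily how the 60% figure above was obtained, and the inputs are placeholders.

```python
def clock_scaling(base_fps, oc_fps, base_mhz, oc_mhz):
    """Fraction of a clock speed increase that shows up as extra performance.

    1.0 means performance rises in step with the clock; values near 0 mean
    the extra frequency is mostly wasted (e.g. a bottleneck elsewhere).
    """
    clock_gain = oc_mhz / base_mhz - 1.0
    if clock_gain == 0:
        raise ValueError("the two clock speeds must differ")
    perf_gain = oc_fps / base_fps - 1.0
    return perf_gain / clock_gain

# Placeholder numbers: a 5% clock increase yielding a 4% fps increase.
scaling = clock_scaling(base_fps=100.0, oc_fps=104.0, base_mhz=1800.0, oc_mhz=1890.0)
print(f"clock scaling: {scaling:.2f}")
```

Comparing this value between generations would give a relative figure along the lines of "the 10 series benefits around 60% as much as the 20 series".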

Ultimately, I'm limited on time for what I can and can't include but it was just for my curiosity.

Figure: Plot showing the ratio between real world perf / theoretical perf based on a Pascal GTX 1060 FP32 unit and the number of FP32 units within a given card...

Unlike with the RDNA comparison, where we observed a clear relationship between the two architectures (because there's very little compute difference between RDNA 1 & 2), the Nvidia architectures have a very non-linear relationship. This is not just a case of losing or gaining performance based on clock speed and/or individual units of resources (FP32/SM/ROPs etc).

At this point, I'm sure many would say, "Well, what did you expect?!". Thankfully, this isn't an exercise performed in vain as when we separate out the generations of cards we can see some interesting results.

From the data, I believe we can see the relative efficiency of each generation, in terms of numbers of FP32 units, in pumping out fps in the games listed above. The parallel lines of the 10 and 20 series show that the RTX 20 series cards really were quite a jump in performance from the prior generation, achieving a much higher efficiency per FP32 unit. In contrast, the RTX 30 series cards are quite inefficient, dropping well below the 20 series in terms of performance ratio (which takes into account frequency change per FP32 unit). Within its own generation, too, the shallow gradient is indicative of how much brute force is being applied in order to keep the performance uplifts coming.

Figure: The same data, highlighted by architecture...

Switching over to the same analysis but instead focussing on a much larger resource unit (the SM), we see quite a different trend with the performance increase per SM being very similar between the 20 and 30 series (though much less than for the 10 series). We can also observe that Turing was not providing a good performance uplift in rasterisation per SM implemented when compared to Pascal and Ampere.

So that leaves us with a strange dichotomy: on the one hand, Ampere is very inefficient per FP32 unit but, on the other, it is decently efficient per SM. I think the logical implication here is that Pascal lacked enough compute resources per SM to really get the most from the architecture, as-designed, and it explains Nvidia's push for much more being available in Turing and then continuing through to Ampere.

However, as I suspected at the launch of Ampere, there isn't quite enough supporting infrastructure around (and perhaps within) each SM to actually capitalise on all those extra compute resources in gaming workloads, leading to rather lacklustre performance increases with each FP32 resource added to a given chip (indicated through the gradient which is around 1/4 of that observed for Pascal).
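The "gradient" for each generation can be estimated with an ordinary least-squares fit of performance ratio against the number of FP32 units. The sketch below is a minimal, dependency-free version. The data points are placeholders chosen so that the second slope is a quarter of the first, and the generation labels are hypothetical rather than the actual Pascal and Ampere values.

```python
def least_squares_slope(xs, ys):
    """Slope of the best-fit straight line through the points (xs[i], ys[i])."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two points and equal-length inputs")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx

# Placeholder (FP32 units, performance ratio) points, not the plotted data.
slope_a = least_squares_slope([1000, 2000, 3000], [1.00, 1.20, 1.40])  # "generation A"
slope_b = least_squares_slope([4000, 6000, 8000], [0.80, 0.90, 1.00])  # "generation B"

print(f"gradient of B relative to A: {slope_b / slope_a:.2f}")
```

A smaller relative gradient means each extra FP32 unit adds less performance in that generation, which is the sense in which the Ampere line is described as shallow.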

To put it in a more concise manner: Each Turing FP32 unit is more effective than either Pascal or Ampere FP32 units but each Ampere SM contributes more to performance than the prior two architectures, despite each one being over-provisioned with FP32 resources.

The take-home message is that somewhere between Turing and Ampere is a beast of a combination of resources for gaming.

Figure: The data above, interpreted through SM unit quantities, instead of FP32...

Figure: ... and again, with the generational data separated.

One possible reason each Ampere SM is more effective at boosting performance than each Turing SM could be the fact that Nvidia moved the ROP units into the GPCs alongside the SMs, increasing their number and unhitching them from the L2 cache. Turing also improved floating point calculations by allowing double FP16 operations to be performed instead of a single FP32 operation. Another improvement was the increase of L1 cache per SM, though it's less clear to me how this affects overall SM performance.

Moving on, let's look at the relationship between the frequency adjusted ratio of FP32/SM units (the performance per unit) at a given frequency.

Figure: Ratio to the theoretical performance per FP32 unit...

Figure: Ratio to the theoretical performance per SM unit...

Unlike with RDNA, there's basically no relationship between frequency and performance across Nvidia's architectures, which is probably why Nvidia doesn't need to push their cards as hard as AMD do in order to eke out more performance. In the graph, you can see that the cards in each generation rise vertically in order of ascending performance and tier in Nvidia's sales structure, the only exception being the GTX 1080 which lies incredibly close to the GTX 1070 (more so than the 3070/3080). However, you can see that increasing the frequency of a given card does increase its performance...

This is in contrast to RDNA 1/2 where increasing numbers of compute units per card are coupled with a lower ratio to theoretical performance per CU and increasing frequency required to achieve that increase in gaming performance. That is, the RTX 2080 Ti goes up in performance ratio with a higher clock while the RX 6800 XT goes down in performance ratio with a higher clock.

Figure: A downward trend with increasing CU and frequency...

What is evident from the plots of Nvidia's cards is that their cards have a positive rate of improvement over the GTX 10 series per resource unit whereas RDNA is losing performance as it's scaling to larger sizes. Now, I haven't plotted the relationships between RDNA's performance and the number of shader engines but given that these are not changing in a semi-random manner as they are in Nvidia's architectures, I don't expect this to change the graph. This is one of the reasons that I have previously said that I believe RDNA 2 to be a dead-end architecture. It cannot scale outward forever and it cannot scale upward (with respect to frequency) forever, either.

The Future...

I suspect that the way forward for the coming architectures might be that hinted performance improvement I mentioned before - the halfway point between Turing and Ampere. Looking at the layout of the GA102 in comparison with the GA106 die and how Ampere has been improved over Turing, I wonder if more load/store units will provide improved data throughput per SM as is found on the A100.

The other thing to note is that I have not tried to take into account issues surrounding off-chip design bottlenecks and these could be a big step forward for performance. Nvidia's design of the RTX 3080/3090 utilising GDDR6X shows that memory bandwidth and speed are a big issue for Nvidia's current design. Could they do something similar to AMD and put in a large intermediate L3 cache to improve overall access latencies? I believe that this would push the current architectures to even greater power efficiencies and performance heights.

Other than that, pushing faster memory, in general, will help the next architecture gain an easy 10-20% performance if memory overclocking of the non-GDDR6X Ampere variants is any indication. Couple this with a move to a smaller Samsung process node and I believe that Nvidia's next generation will be a souped-up version of Ampere without much in the way of big architectural changes.

Beyond that sort of "Ampere-on-steroids refresh", chiplets are ultimately the way forward for Nvidia* as their die sizes are way too large right now to expand outward.

*The same for AMD...

Figure: There'll probably be some sort of extra hardware management of scheduling and queuing and less of the I/O but this is probably what a RX 7900 XT would look like... pretty big, huh?

Conclusion...

Nvidia are slowly leaving behind the focus on rasterisation that was the point of graphics cards over the recent past. AMD are still there, plugging away at getting better at that one thing. Unfortunately for AMD, Nvidia's attention to the world of AI and machine learning and their chasing of those markets is pushing their actual product development in those directions which means that the company is increasingly going to incorporate those hardware/software advances in their consumer graphics cards.

Yes, AMD can likely keep up with Nvidia's rasterisation performance from here on out but Nvidia sort of aren't really even trying any more in this particular arena. Nvidia's focus is on AI, ML and RT and it seems that rasterisation is sort of a side-benefit to that. Amazingly, Nvidia's still improving their raster performance despite this split focus but it does lead us to the question of where Nvidia's next architecture will go from here.

On the one hand, we know that AMD will likely smush two 80 CU RDNA 2 dies together for their RX 7900 XT equivalent, probably leading to an overall increase in CU down their entire product stack - probably without much improvement in frequency due to the limitations of process node and heat generation.

On the other hand, AMD is lacking in AI/ML features at this point in time and their ML (INT4/8) TOPS are lagging FAR behind Nvidia's, meaning that anything they do implement will likely be weaker than Nvidia's current solutions which are likely to only get stronger from here on out. Nvidia is heavily pushing their DLSS and ray tracing solutions and there is a risk that AMD are slowly but surely pushed out of the market as more games adopt these two technologies.

In the end, I think Nvidia have a few options to explore for their next generation of cards (not least of which is going to Samsung's 7 or 6 nm nodes) before heading towards multiple dies per substrate. AMD might be able to win on pure rasterisation... but is anyone really worried about that at this point in time? A GTX 1080 Ti is able to manage well at 1080p and decently at 1440p native resolutions, and all of the RTX cards can use DLSS, so "native" is a meaningless term in more and more game titles.

Nvidia don't have to beat AMD at raster, they have to beat them at features and they're already doing that.

6 comments:

Really nice analysis and this information is valuable to see the face of the future.

Hey, how did you do those plots?

Anyway thanks again.

Thanks, I know it's a dry, boring topic for many people. It's not a sexy thing to think about but I like to understand things, and visualisation and comparison can help.

The plots are coming directly from the table of numbers near the top of the post. I'm taking the average column in the end bracket of data that is generated by dividing the ratio of the performance to a GTX1060 of each card by the number of FP32/SM units in that part. Then I'm scaling that by how many FP32/SM units are in the next card I'm using in the comparison to generate a theoretical performance.

If the actual real world performance of a card is higher or lower than the theoretical, it gives you an indication for different things - depending on what you're looking at.

The graphs are made in an Excel clone - nothing special.

Hey, thanks for the explanation hehe. Yeah, I see why there are not many comments (or none at all lol) in this area. But it was interesting to see that on the NVIDIA side we will see improvements to its AI, ML and RT units (RT is what fascinates me most ;) ). I know it is speculative until NVIDIA lands its official new RTX cards, but hey, I've read something similar in another blog (speculative as well, in his words), and on your side it's nice to see it approached scientifically, with data.

Like the motto says: time will tell. And let's see where all of this lands.

Best wishes.

Hi! Thank you for another interesting article!

I have one comment. I think the difference in FP32/SM to performance ratio is absolutely logical due to the fact that in the Turing generation there was an int/fp32 config of compute units, but in Ampere there is an fp32/fp32 config. So practically it is as if Nvidia made hyperthreading in terms of fp32. Logically, that means less effectiveness per thread (analogous to one fp32 part of a compute unit) than per core (analogous to an SM unit). It's like comparing Ryzen 4700U vs 4800U performance per thread and per core. Comparing per thread will always put multithreaded architectures (like Ampere, with more fp32 parts per compute unit) at a disadvantage.

Good point!

I wonder if Nvidia provided *too much* Int perf, though? For gaming, could they potentially reduce the (multi threaded fp32/int) to a single track?

Of course, every workload is unique so I guess that's a difficult thing to gauge.

I don't know. What seems logical to me is that they realized fp32 workloads are more common now, so upgrading int to fp32 makes sense.
