
Is that centered? Well, guess what?! Inflation isn't balanced either! ;)

The rumour mill has been highly productive this last week*, especially with regard to the next-gen GPU output of both Nvidia and AMD, and as we near the release window for those products, we are likely to hear more and more about them. However, something is concerning me a little, and it might not be quite what you think:

I'm worried about performance inflation...

*Yes, I know all those links are to RedGamingTech... but I'm tired and don't feel like searching across multiple YouTube channels and websites for the same information...

Previously, I predicted that we could have a GPU market crash in 2022, based on where I saw the market heading with respect to the resources available in a given GPU... and also where I saw AMD's and Nvidia's architectures leading*. I thought that would lead to a price war and an improvement in performance per unit currency in the products on offer, but it appears that I may have read that backwards if these ongoing leaks are to be believed.

*I was incorrect about some details in my prognostications, but the overall theme appears to be on target... more compute resources for RDNA; more cache and front/back end for Ada.

Essentially, what I am seeing now is that there will be a big improvement at the very top, but that prices are likely to be hiked to the extreme in relation to that. Additionally, I'm thinking that prices for the lower-end cards will go up by a complete performance tier compared to the prior generation, and by a further 20-30% on top of that.

It's looking like you won't be getting RX 6900 XT performance for €370 (the RX 6600 XT's release price was around €400-450); instead, the 7600 XT will move into the 6700 XT's price tier, i.e. €500-550 (launch price)...

This, in theory, is actually fine.

Normally, the prior generation's top-performing card ends up around the level of the new 70-class card, so just changing the name and keeping a similar price point doesn't have a negative effect in that sense. However, it does hurt the low-end products because, as I pointed out last time, they get smaller performance increases and become more expensive each generation.

The one big caveat here is that this is not the only pressure on these products.


The purported names and specs of the RDNA3 lineup (remember that the current rumour is 128 shaders per CU, not 64 as in RDNA/RDNA2)... via RedGamingTech

There really aren't any games being played at 1440p or below where the performance of the RX 6700 XT / RX 6800 / RTX 3060 Ti / RTX 3070 isn't sufficient to drive them at 60+ fps without FSR or DLSS enabled (with ray tracing off, obviously!). So, why would any gamer pay more for better performance when games aren't demanding it and not many players are even gaming at 1440p, let alone 4K?!

This is where the performance inflation comes into play.

If neither AMD nor Nvidia can sell many ultra-expensive, margin-making GPUs at the high end, then they must, logically, try to make more money in the low-to-mid range to keep their accustomed profit margins. If gamers are able to get ahold of cheap ex-mining cards and fire-sale new cards for lowish prices and, thus, don't need to upgrade, how can the manufacturers continue to sell new products?

So, take those tier-up prices and add another 20-30% to them and you get exactly the situation where both AMD and Nvidia can sell fewer units through to the consumer but still retain their profits!

That 7600 XT, priced like a 6700 XT (~€500-550), becomes €600-650: a price we routinely saw during the mining craze over the last year, and not even at its height, when 6700 XTs were being sold for close to €1000!
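The pricing argument here is simple arithmetic: start from the tier-up price (the 6700 XT's ~€500-550 launch band) and add the further 20-30%. The sketch below just plugs in the rumour-based figures from this post; `markup_range` is a hypothetical helper name, not anything published by AMD or Nvidia.

```python
def markup_range(base_eur: float, low_pct: float, high_pct: float) -> tuple[float, float]:
    """Return the price after applying the low and high ends of a percentage markup."""
    return base_eur * (1 + low_pct / 100), base_eur * (1 + high_pct / 100)


# Both ends of the 6700 XT's launch band, each with a further 20-30% markup.
for base in (500, 550):
    low, high = markup_range(base, 20, 30)
    print(f"€{base} -> €{low:.0f}-{high:.0f}")
```

Applying 20-30% to the €500 end of the band gives the €600-650 quoted above; applying it to the €550 end would stretch the range to €660-715.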

All of a sudden, we're no longer getting the performance of the last generation's top-tier card for half the price; we're getting it for around 60% to two-thirds of the price... and that logic continues to scale down. A 7500 XT goes from the 6500 XT's €200 to €350 for approximately RX 5700 XT performance... maintaining the performance per unit currency for that tier, as we also observed with RX 6000 series pricing (though with less VRAM).
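The "half the price" versus "60% or two-thirds of the price" comparison is really a statement about performance per euro: if performance is held constant, the gain is just the reciprocal of the price fraction. A minimal sketch, using exact fractions so the ratios don't pick up floating-point noise; both function names are hypothetical and the prices are the ones quoted in this post.

```python
from fractions import Fraction


def perf_per_euro_gain(price_fraction: Fraction) -> Fraction:
    """Gain in performance per euro when the same performance now costs
    `price_fraction` of the old top-tier card's price."""
    return 1 / price_fraction


def perf_ratio_for_same_value(old_price_eur: int, new_price_eur: int) -> Fraction:
    """Performance multiple a new card needs to keep performance per euro
    unchanged when its price rises from old_price_eur to new_price_eur."""
    return Fraction(new_price_eur, old_price_eur)


for label, frac in [
    ("half the price", Fraction(1, 2)),
    ("60% of the price", Fraction(3, 5)),
    ("two-thirds of the price", Fraction(2, 3)),
]:
    print(f"{label}: {float(perf_per_euro_gain(frac)):.2f}x performance per euro")

# 6500 XT at €200 -> rumoured 7500 XT at €350 (prices as quoted in this post)
print(f"needed performance multiple: {float(perf_ratio_for_same_value(200, 350)):.2f}x")
```

By the same arithmetic, going from €200 to €350 only maintains performance per euro if the new card is about 1.75x as fast as the one it replaces.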

And VRAM is fast becoming an issue for gamers.

6 GB was already tight in 2018-2020. In 2022-2023, 6 GB isn't enough, even for 1080p high. Sure, the compute performance to drive that resolution is easy to get, even right now with the current generation of cards... but if you want to run current games at high to ultra settings, you already need 8 GB of VRAM. If anything, over the next 3-5 years, more VRAM will matter more to 1080p gamers than more compute performance.

In that scenario, it makes sense that AMD and Nvidia would gate VRAM behind increased prices rather than increases in performance...


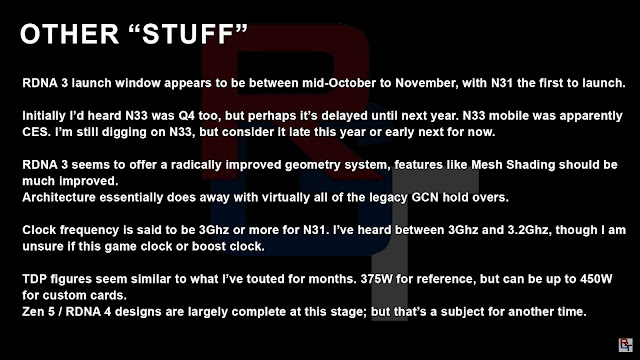

RedGamingTech, again, with the ancillary details regarding the next-gen RDNA cards...

Conclusion...

In a world of diminishing returns, where graphics card manufacturers need to keep selling new generations of cards but where the majority of consumers remain on relatively undemanding resolutions, and the availability of cards with enough compute resources to drive those resolutions continues to increase, something has to give.

Yes, AMD and Nvidia might push for their next-gen top-end cards to have 2x the performance of the current gen's top-end cards... but that's actually a stupid game. Why would you do that? I mean, seriously, bragging rights aside, these are companies that need to make money and appease their shareholders. What is coming that requires so much performance, and is that rate of performance increase even sustainable?

From a business perspective, would it make more sense to drip-feed performance increases over 2-3 generations instead of doubling performance in a single generation? I think it absolutely would.

So, here's my dilemma: if I chose to release such an increase in performance without the market already requiring it, what would I have left to advance to in the generation after that?

Silicon isn't going to get cheaper - or much smaller. Resources are likely to keep increasing in cost due to current global events and increasing scarcity. A global recession appears to be on the cards, reducing consumer spending and the free cash available for frivolous purchases...

In my mind, releasing a graphics card with double (forget the wilder speculations) the performance of the current generation doesn't actually make any sense!

It feels like we're on the verge of the biggest bait and switch in graphics history: I can fully see a scenario where N31 and Ada 102 are never released to the public and we only get N32 and Ada 103. That would still be a 60%-ish improvement in performance over what we have this generation... but it could come at the price of this generation's top end, too.

60% is approximately the average improvement we've received over the last three generations, for the 90-class cards and for each class down the stack. That would make sense to me from the perspective of AMD and Nvidia getting the most return on investment from their consumer products in a generation of potentially weak demand and relatively low requirements... better to save your best for the following generation.
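The drip-feed argument, and the ~60% average, both come down to compounding. This is a back-of-the-envelope sketch, not a model of either company's roadmap: it computes the per-generation uplift needed to reach 2x over one, two, or three generations, and what a steady 60% per generation compounds to. The function names are illustrative only.

```python
def per_generation_uplift(total_multiple: float, generations: int) -> float:
    """Per-generation multiplier that compounds to `total_multiple` over `generations`."""
    return total_multiple ** (1 / generations)


def compounded(per_gen_multiple: float, generations: int) -> float:
    """Total performance multiple after compounding a per-generation uplift."""
    return per_gen_multiple ** generations


# Spreading a 2x increase over 1, 2 or 3 generations.
for n in (1, 2, 3):
    step = per_generation_uplift(2.0, n)
    print(f"2x over {n} generation(s): +{(step - 1) * 100:.0f}% per generation")

# A steady +60% per generation, compounded.
for n in (1, 2, 3):
    print(f"+60% per generation for {n} generation(s): {compounded(1.6, n):.2f}x")
```

On these numbers, doubling over two generations needs only about 41% per generation, and over three about 26%, so a 60% step is already generous; compounded, it passes 2x in under two generations.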


While there are certainly exceptions, the vast majority of cards in each Nvidia class only reach 2x or more the performance of the card being replaced when leaving a gap of three cards... (data from the GTX 400 series onwards)
